Playing with fq_codel in 2.4

-

@thenarc I haven't seen anything like that, but I was aware that traffic shaping in earlier pfSense versions could play havoc with the connection if the connection changed for some other reason. I recently built a fresh v2.5.0 instance and configured it with FQ_CoDel with no issues.

-

@pentangle Thanks for the input. I'd feel better had I not seen the spontaneous reset after adjusting these parameters following a fresh install. That said, it was a fresh install plus a config restore, so perhaps I pulled in some invalid configuration along with it. I just didn't have the stamina at the time to reconfigure everything from scratch ;)

-

I have applied the same settings for my 150/10Mb connection, but my download speed won't go above 130Mb. Upload is fine. I also checked CPU usage during the speed test and it's fine: about 30% utilization.

This is my config, similar to @Ricardox 's:

pfSense VM with Intel NICs, 2 CPUs, 4GB RAM (about 60% utilized)

All network hardware offloading is off because of Suricata inline mode.

DownLimiter:

147Mb, Tail Drop - FQ_CODEL (5,100,300,10240,20480), Queue 10000, ECN off

DownQueue:

Tail Drop, ECN off

Any idea/tweak I could try?

-

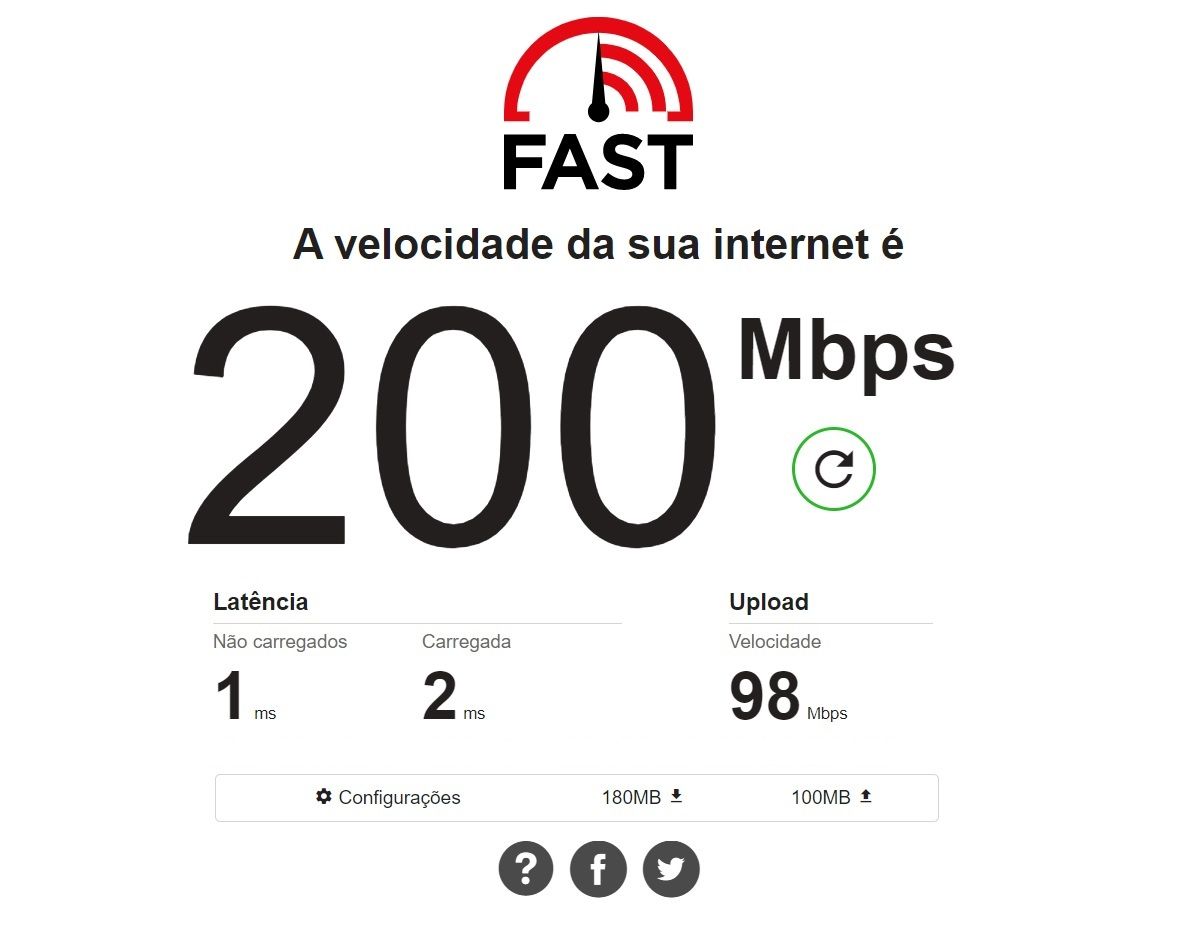

@mind12 Have you installed Open-VM-Tools? On my 200/100Mb connection I have no loss of speed. x86 PC!

Realtek gigabit network card

-

@ricardox

Sure, without the limiters I get maximum speed too.

-

I think I still have a bit of tuning to do... Any recommendations?

I have Comcast 400/25 service.

Getting ~380/23 with my limiter config and bufferbloat lag of 56ms/41ms respectively, but with max download bufferbloat lag spiking up to ~230ms.

[DSLReports SpeedTest (limiters on)]

WANDown limiter @ 400Mbit/s

Queue: CoDel, target:5 interval:100

Scheduler Config: FQ_CODEL, target:5, interval:100, quantum: 1514, limit: 5120, flows 1024, QueueLength: 1001, ECN: [checked]

WANUp limiter @ 25Mbit/s

Queue: CoDel, target:5 interval:100

Scheduler: FQ_CODEL, target:5, interval:100, quantum: 1514, limit: 10240, flows 1024, QueueLength: 1001, ECN: [checked]

EDIT: added detail with limiters disabled.

Perhaps I should just turn them off??? Am I really getting any benefit?

448/24 Mbit/s and 51/67 ms bufferbloat with limiters disabled

[DSLReports SpeedTest (no limiters)]

SW:

pfSense v. 2.4.5-RELEASE-p1

pfBlockerNG-devel (2.2.5_37), ntopng, bandwidthd, telegraf

OpenVPN server active, no connections at time of test.

HW:

Protectli Vault FW6C

Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz

Current: 2400 MHz, Max: 2601 MHz

AES-NI CPU Crypto: Yes (active)

CPU Utilization: ~5%

Memory Usage: ~17% of 8GB

Network HW Offloading: enabled ([edit] originally listed as disabled)

-

@fabrizior Hmm, if the latency won't go below 50ms with or without the limiters, I don't see any reason to use them. In my setup, despite the speed decrease with the limiters, the latency is around 10ms.

Sadly, I don't know and haven't found any info about those advanced scheduling parameters and how to tune them. Have you tried the values/config we posted?

-

-

@mind12 See the images of my configuration above, I am using fq_codel limiters.

-

@fabrizior Out of curiosity, have you tried setting your download limiter bandwidth higher than 400Mbps? I only ask because my ISP recently doubled my download speed from 100Mbps to 200Mbps, and I've observed some inaccuracy when I bumped my download limiter bandwidth accordingly. Specifically, when I set it to just 200, the observed actual limit, over multiple tests using flent, was more like 150Mbps. Through many iterations of testing and raising the limit, I found that I had to set my download limiter's bandwidth to 240Mbps in order to achieve an actual limit of ~200Mbps. I can't explain why, but my test results are consistent.

-

I'm still seeing maximum bufferbloat lag spikes of 400-800ms during testing, with averages in the 50ms range. What would cause this to keep happening?

Also, and separately, that RFC says:

5.2.4. Quantum

The "quantum" parameter is the number of bytes each queue gets to dequeue on each round of the scheduling algorithm. The default is set to 1514 bytes, which corresponds to the Ethernet MTU plus the hardware header length of 14 bytes.

In systems employing TCP Segmentation Offload (TSO), where a "packet" consists of an offloaded packet train, it can presently be as large as 64 kilobytes. In systems using Generic Receive Offload (GRO), they can be up to 17 times the TCP max segment size (or 25 kilobytes). These mega-packets severely impact FQ-CoDel's ability to schedule traffic, and they hurt latency needlessly. There is ongoing work in Linux to make smarter use of offload engines.

Is this still a current issue?
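To put the quoted numbers in perspective, here is a rough Python sketch of the arithmetic. It assumes a 1460-byte TCP MSS (a 1500-byte MTU minus 40 bytes of IPv4 and TCP headers; the thread doesn't state one) and a 25 Mbit/s link like the Comcast upload above. It only computes how long a single packet of each size occupies the link, which is roughly how long every other flow has to wait behind it.

```python
# Rough arithmetic behind the RFC's quantum / offload discussion.
# Assumptions (not from the thread): a 1460-byte TCP MSS and a 25 Mbit/s link.

ETH_MTU = 1500        # IP bytes per standard Ethernet frame
ETH_HEADER = 14       # destination MAC + source MAC + EtherType
TSO_MAX = 64 * 1024   # "as large as 64 kilobytes" (TSO packet train)
MSS = 1460            # assumed TCP maximum segment size
GRO_FACTOR = 17       # "up to 17 times the TCP max segment size" (GRO)
LINK_MBIT = 25        # assumed link rate, e.g. the 25 Mbit/s upload above


def serialization_ms(size_bytes: int, rate_mbit: float) -> float:
    """Milliseconds needed to transmit size_bytes at rate_mbit Mbit/s."""
    return size_bytes * 8 / (rate_mbit * 1_000_000) * 1000


default_quantum = ETH_MTU + ETH_HEADER  # 1514, the RFC's default quantum
gro_max = GRO_FACTOR * MSS              # 24820 bytes, the RFC's "25 kilobytes"

for label, size in (("normal frame", default_quantum),
                    ("GRO packet", gro_max),
                    ("TSO packet", TSO_MAX)):
    print(f"{label:13s}{size:6d} bytes -> "
          f"{serialization_ms(size, LINK_MBIT):6.2f} ms on the wire")
```

At 25 Mbit/s a single 64 KB offloaded packet ties up the link for about 21 ms, versus under half a millisecond for a normal frame, which is the kind of latency hit the RFC is describing.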

Will test disabling TSO when I can take the interfaces offline.

Should I also turn off LRO?

-

I get 460-480Mbps down without the limiters enabled.

I have been setting the limiter anywhere from 350 to 450 and am currently testing at 425Mbps, getting ~390-410Mbps per test.

Regardless of the bandwidth limit setting, I'm consistently getting an average bufferbloat lag of ~50-70ms with spikes up to 800+ms when the limiter is enabled. Lag is also in the ~50-70ms average range with the limiter disabled, but the max lag tops out around ~100ms (instead of ~800).

Upload speed is 25Mbps; with the limiter set to 24Mbps I get 21-22Mbps effective, with bufferbloat lag of ~35ms average and spikes up to ~100ms.

Very curious as to why the limiter isn't improving the avg. lag and the max lag gets worse.

-

@mind12 latency is unique to each individual connection, so quoting a specific figure (such as "10ms") isn't helpful. What you should see is latency that doesn't change massively when you start to saturate the connection.

-

@fabrizior "Very curious as to why the limiter isn't improving the avg. lag and the max lag gets worse."

QoS on your endpoint does nothing to change the underlying quality (or lack of it) of your connection. If you are sat on the end of a connection that's terminating in an exchange where they've oversold connectivity and the feed line out of the exchange to the internet is saturated then there's absolutely nothing you can do about that except choose a better ISP and hope their infrastructure is better.

If your max lag gets worse, it's likely your settings are awry. Please try the following:

- delete your limiters

- ensure you aren't running any other QoS within pfSense

- delete your limiter firewall rules

- Go to the limiters, and create the first one as follows:

- tick "enable limiter and its children"

- Give it a name of WANDown

- set your download bandwidth to ~90% of your lowest speedtest.net download score (which I assume is about 450Mbps)

- No mask

- Give it a description of "WAN Downloads"

- Choose CoDel as the queue management algorithm

- Choose FQ_CODEL as the scheduler

- Tick ECN

- Click Save

- Go back and change the CoDel target to 5 and interval to 100, and the FQ_CODEL target to 5, interval to 100, quantum to 300, limit to 20480 and flows to 4096

- Click Save again

- Click "Add New Queue" next to the save button

- Tick to enable this queue

- Give it a name of "WANDownQ"

- No Mask

- Description "WAN Download Queue"

- Queue Management Algorithm = CoDel

- Queue length left blank

- ECN ticked

- Click Save

- Ensure the Target and Interval are set to 5 and 100 respectively (should be the default)
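The limiter half of the guide can be summarised as data. This is a hypothetical Python sketch: the `FqCodelLimiter` class and its field names are made up for illustration (they are not pfSense's internal setting names or any pfSense API), and the defaults are simply the values given in the steps above. The helper implements the "~90% of your lowest speedtest.net result" rule, rounding down.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class FqCodelLimiter:
    """Hypothetical record of one limiter + queue pair from the guide.
    Field names are illustrative only; they are not pfSense settings keys."""
    name: str
    bandwidth_mbit: int
    codel_target_ms: int = 5      # CoDel queue: target
    codel_interval_ms: int = 100  # CoDel queue: interval
    fq_target_ms: int = 5         # FQ_CODEL scheduler: target
    fq_interval_ms: int = 100     # FQ_CODEL scheduler: interval
    quantum: int = 300            # bytes each flow may dequeue per round
    limit: int = 20480            # scheduler packet limit
    flows: int = 4096             # number of flow queues
    ecn: bool = True


def limiter_bandwidth(speedtest_mbit: list[float], fraction: float = 0.9) -> int:
    """~90% of the *lowest* speed-test result, rounded down to whole Mbit/s."""
    return math.floor(min(speedtest_mbit) * fraction)


# 450 Mbit/s is the guide's assumed download figure; 24 Mbit/s upload is hypothetical.
down = FqCodelLimiter("WANDown", limiter_bandwidth([450]))
up = FqCodelLimiter("WANUp", limiter_bandwidth([24]))
print(down.name, down.bandwidth_mbit, "Mbit/s")
print(up.name, up.bandwidth_mbit, "Mbit/s")
```

Using the minimum rather than the average keeps the limiter below the link's worst observed rate, so the queue forms in pfSense (where FQ_CoDel can manage it) instead of in the ISP's equipment.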

Now go back and create another limiter and queue with the same settings for the upload, entering 90% of the slowest upload speed you get from speedtest.net (with the same other parameters, and of course named for upload, e.g. WANUp and WANUpQ).

Then, go into the firewall rules and into floating rules, and you're looking to create 4 rules right at the top of the list, as follows:

Rule 1

- Action = Pass

- Quick = ticked

- Interface = WAN

- Direction = out

- Address family = IPv4

- Protocol = TCP/UDP

- Source = ANY

- Destination = ANY

- Give it a description :)

- Click "Advanced Options"

- Scroll down to "Gateway" and select the WAN gateway

- In the in/out pipe section, set the in pipe to WANUpQ and the out pipe to WANDownQ

- Click Save

Rule 2

- Action = Pass

- Quick = ticked

- Interface = WAN

- Direction = in

- Address family = IPv4

- Protocol = TCP/UDP

- Source = ANY

- Destination = ANY

- Give it a description :)

- Click "Advanced Options"

- Leave the Gateway as default this time

- In the in/out pipe section, this time set the in pipe to WANDownQ and the out pipe to WANUpQ

- Click Save

Rules 3 & 4 are mirrors of rules 1 and 2 except this time selecting IPv6 as the Address Family and using the relevant IPv6 gateway in rule 3 where you select the gateway.
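As a cross-check of the four floating rules, here is a hypothetical Python sketch that builds them from two gateway names. The `FloatingRule` class and the gateway names `WAN_DHCP`/`WAN_DHCP6` are illustrative assumptions. The pipe assignments simply follow the guide: on the outbound rule the In pipe carries traffic in the rule's own direction (upload) and the Out pipe carries the replies (download), and the mapping flips on the inbound rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FloatingRule:
    """Hypothetical summary of one WAN floating rule from the guide."""
    number: int
    family: str      # "IPv4" or "IPv6"
    direction: str   # "out" or "in", relative to the WAN interface
    gateway: str     # explicit WAN gateway on outbound rules, "default" otherwise
    in_pipe: str     # queue for traffic in the rule's own direction
    out_pipe: str    # queue for the reverse (reply) direction


def limiter_rules(v4_gateway: str, v6_gateway: str) -> list[FloatingRule]:
    rules = []
    for family, gateway in (("IPv4", v4_gateway), ("IPv6", v6_gateway)):
        # Outbound on WAN: the rule's direction is upload, replies are download.
        rules.append(FloatingRule(len(rules) + 1, family, "out", gateway,
                                  in_pipe="WANUpQ", out_pipe="WANDownQ"))
        # Inbound on WAN: the same two queues, swapped.
        rules.append(FloatingRule(len(rules) + 1, family, "in", "default",
                                  in_pipe="WANDownQ", out_pipe="WANUpQ"))
    return rules


for rule in limiter_rules("WAN_DHCP", "WAN_DHCP6"):  # hypothetical gateway names
    print(rule)
```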

This should give you a working set of limiters.

Now, once you have this, you need to test whether it's going to do you any favours at all (or whether your underlying ISP connection is sh1t). You do this by:

- Measuring your network with the dslreports speedtest with no other load on the network

- Measuring the same, but with a load on the network (e.g. get someone else to run a ton of Youtube/Netflix/etc at the same time)

Your bufferbloat shouldn't vary too much. If it varies a lot (e.g. 30ms or more), then you may have an underlying ISP connection that is oversubscribed or of poor quality, or your ISP may already be doing some QoS on it. The first thing to check is whether reducing the throughput values in the limiters improves things. If not, and you've brought them down a fair whack, then the issue is likely with the underlying pipe.
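The judgement in that test can be written down as a tiny Python helper. The 30 ms threshold is the post's rule of thumb; the function name and messages are hypothetical, and it compares a single latency figure per run rather than a full distribution. As an example input, the limited download latencies reported later in this thread were 53 ms idle and 42 ms under load.

```python
def assess_bloat(idle_ms: float, loaded_ms: float, threshold_ms: float = 30.0) -> str:
    """Compare DSLReports bufferbloat figures from an idle run and a loaded run.

    threshold_ms is the post's "30ms or more" rule of thumb (an assumption,
    not a hard limit)."""
    delta = abs(loaded_ms - idle_ms)
    if delta < threshold_ms:
        return f"stable ({delta:.0f} ms apart): shaping is holding latency steady"
    return (f"varies by {delta:.0f} ms: lower the limiter bandwidth and retest; "
            "if that doesn't help, suspect the underlying ISP connection")


print(assess_bloat(53, 42))
```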

Hope that helps. It's mainly just an amalgamation of all the info given in here over the years, but it's served me well, and it might do you good to blow away your existing QoS and start afresh.

-

@pentangle

This is a great guide except the firewall rules, where you should use Match action instead of Pass, because rule evaluation will stop with Quick checked and you will unintentionally allow any TCP/UDP traffic in and out of your WAN.
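To see why Pass + Quick is risky, here is a deliberately simplified Python model of pf's evaluation order (our own sketch, not pfSense code): a quick pass/block rule ends evaluation, a match rule only assigns a queue and lets evaluation continue, and if nothing passes the packet the default deny applies. It assumes every rule in the list matches the packet and ignores NAT and state tracking.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str                  # "pass", "block" or "match"
    quick: bool = False
    queue: Optional[str] = None  # limiter queue assigned when the rule matches


def evaluate(rules: list[Rule]) -> tuple[str, Optional[str]]:
    """Simplified pf-style evaluation; assumes every rule matches the packet.

    - a match rule assigns its queue and evaluation continues
    - a quick pass/block rule ends evaluation immediately
    - otherwise the last matching pass/block wins; if none, default deny
    """
    verdict, queue = None, None
    for rule in rules:
        if rule.queue is not None:
            queue = rule.queue
        if rule.action == "match":
            continue
        verdict = rule.action
        if rule.quick:
            break
    return (verdict or "block"), queue


# An unsolicited inbound packet on WAN that no WAN-tab rule allows:
print(evaluate([Rule("pass", quick=True, queue="WANDownQ")]))  # -> ('pass', 'WANDownQ')
print(evaluate([Rule("match", queue="WANDownQ")]))             # -> ('block', 'WANDownQ')
```

Both variants put the packet in the limiter queue, but only the match version leaves the pass/block decision to the normal interface rules.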

The pfSense docs also recommend the Match action for limiters: "The match action is unique to floating rules. A rule with the match action will not pass or block a packet, but only match it for purposes of assigning traffic to queues or limiters for traffic shaping. Match rules do not work with Quick enabled."

https://docs.netgate.com/pfsense/en/latest/firewall/floating-rules.html#match-action

-

@mind12 said in Playing with fq_codel in 2.4:

https://docs.netgate.com/pfsense/en/latest/firewall/floating-rules.html#match-action

For reals?

Why has this never come up in the many threads about how to configure the fq_codel limiter?

Also, after setting all this up, traceroute on the router works fine, but on LAN clients traceroute now unexpectedly shows only the final IP/FQDN for every hop.

What the hell did I break now?

-

@fabrizior

It has come up; I saw it in this thread too. I was also shocked that even Tom's video from Lawrence Systems used a pass rule, not a match rule.

Of course, if there is no NAT that could match a connection, it can't connect, but the possibility is there.

Traceroute needs another floating rule to work if you use a limiter:

https://forum.netgate.com/topic/112527/playing-with-fq_codel-in-2-4/814

-

@Pentangle

Thanks for the specific recommendations.

I've done as you suggest and dropped/rebuilt my limiters and firewall rules.

I did make two changes to the firewall rules, though (just had to...). I combined the IPv4 and IPv6 WAN-in rules into one by setting Address Family to IPv4+IPv6; since no gateway definition is needed, this seemed reasonable.

I also set Action = Match instead of Pass (with Quick unticked) on all floating rules for this config.

None of these changes (yours or my deviations herein) has made any difference in reducing my bufferbloat latency results.

I do see where this config provides benefit, keeping latencies consistent (such as they are), when under high load.

I tested under load by adding a 10GB download using 100 sockets set to consume 240Mbit/s. This ran for about 5m39s from another LAN client to a remote internet service while I ran the dslreports speedtest twice from my primary desktop (24-core, 64GB RAM, 2x1gE bonded NICs). The two tests I ran under load (w/ & w/o limiters) were both completed during the 10GB download period.

WAN Limiter bandwidth was set at 415Mbit/s (down) and 22 Mbit/s (up) for these tests.

| # | Conditions | Bandwidth, Down/Up (Mbit/s) | Latency, Down/Up (ms) |
|---|---|---|---|
| 1 | limiters: disabled, load: no | 452/22 | 89/65 |
| 2 | limiters: disabled, load: no | 460/24 | 64/66 |
| 3 | limiters: disabled, load: no | 472/24 | 68/66 |
| 4 | limiters: disabled, load: no | 426/25 | 62/67 |
| 5 | limiters: 415/22, load: no | 390/21 | 53/34 |
| 6 | limiters: 415/22, load: yes | 116/16 | 42/33 |
| 7 | limiters: disabled, load: yes | 191/21 | 96/62 |

It seems interesting that download latency was lower under load than without load while the limiters were enabled. If this were at work, I would probably run multiple tests per set of conditions and pull min/max/median/std. dev., but this data at least leads me to conclude that the limiters are functional and of benefit under load, even with the seemingly higher-than-desired latency floor.
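The min/max/median/std. dev. summary is easy to produce with Python's standard `statistics` module. This sketch only uses the four no-load, no-limiter download runs (tests 1-4); four samples is a very small sample, so treat the spread as indicative at best.

```python
from statistics import mean, median, stdev

# Tests 1-4 from the results above (limiters disabled, no load).
down_mbit = [452, 460, 472, 426]
down_lat_ms = [89, 64, 68, 62]

for label, values in (("download Mbit/s", down_mbit),
                      ("download latency ms", down_lat_ms)):
    print(f"{label}: min={min(values)} max={max(values)} "
          f"median={median(values)} mean={mean(values):.1f} "
          f"stdev={stdev(values):.1f}")
```

The median is less sensitive than the mean to a single outlier such as the 89 ms result in test 1, which is one reason to report it alongside the mean.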

Is this really bufferbloat with the limiters turned on, or simply the lag to whichever test servers DSLReports is picking? No-load latency dropped substantially with the limiters turned on, just not as low as desired.

With regards to the loss of maximum bandwidth (for the sake of stable latency) am I correct in my understanding that with this baseline, I can re-test with higher limiter bandwidth settings to figure out how high I can crank it up before my latency numbers become undesirably unstable?

-

@mind12 said in Playing with fq_codel in 2.4:

https://forum.netgate.com/topic/112527/playing-with-fq_codel-in-2-4/814

The floating rule (pass, quick) for IPv4 ICMP traceroute is set, as is the one for ICMP echoreq/echorep.

Traceroute from LAN clients is still not working properly.

What's the next step in triaging this?

@mind12 @pentangle @TheNarc Thanks a LOT for your time. I appreciate it.

-

@fabrizior

I have just tested it, it also failed on one of my windows clients.

I added the ICMP floating rule per the guide I sent you, and it's working now:

Add quick pass floating rule to handle ICMP echo-request and echo-reply. This rule matches ping packets so that they are not matched by the limiter rules. See bug 9024 for more info.

Action: Pass

Quick: Ticked (Apply the action immediately on match)

Interface: WAN

Direction: any

Address Family: IPv4

Protocol: ICMP

ICMP subtypes: Echo reply, Echo Request

Source: any

Destination: any

Description: limiter drop echo-reply under load workaround

Click Save