Why is pfSense slower than OpenWrt in my case?

-

Hey guys. I'm setting up my homelab and would like to replace my current firewall(OpenWRT) with pfSense. They are both great software btw. However in my case pfSense is 50% slower than OpenWRT from the result of

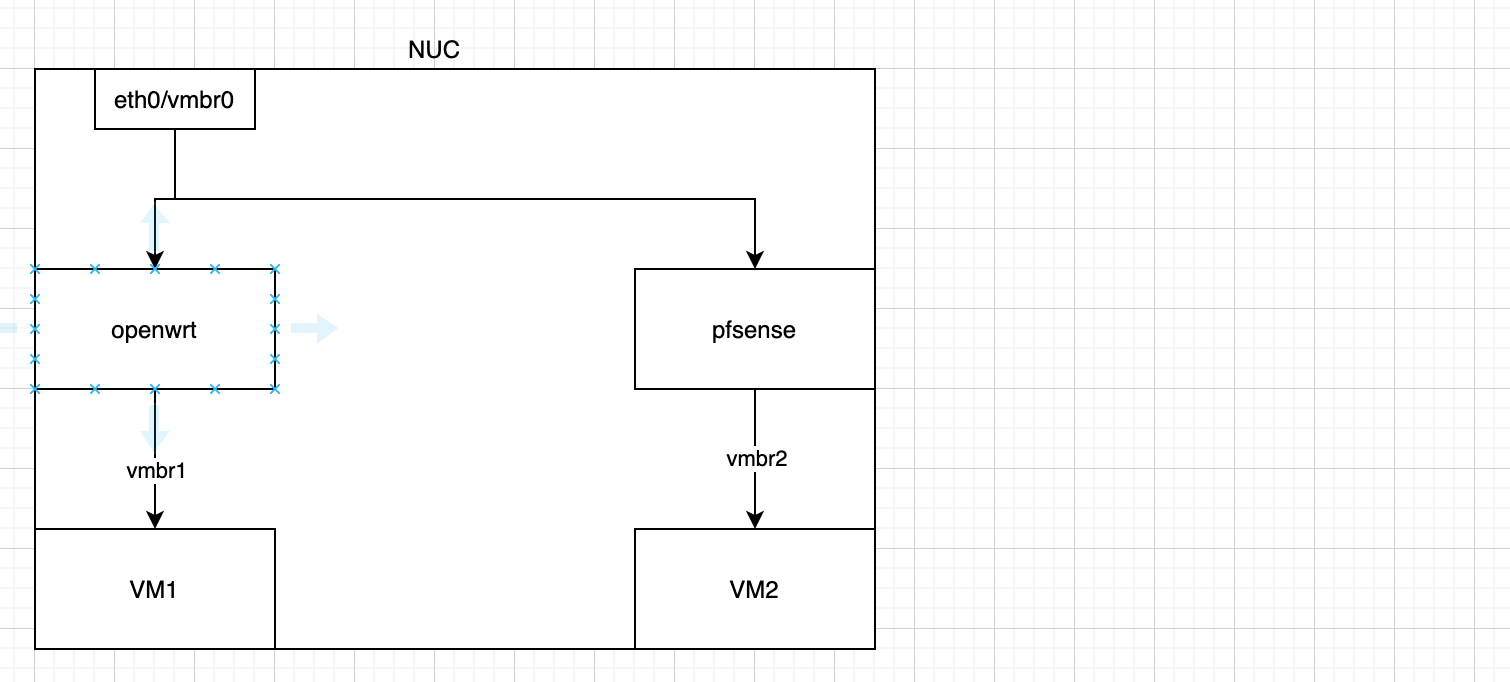

iperf3.Here's a simplified diagram of my experimental network setup. The host is running Promox VE. OpenWRT and pfSense are running as VMs. They both have their WAN NIC connected to vmbr0, which is the main virtual bridge on the hose. An

iperf3server is running on the host to test the speed.

To rule out other variables, I used the same VM configuration for OpenWRT and pfSense (same CPU, memory, and NIC model).

In the experiment, the iperf3 speed from VM1 (behind OpenWRT) to the host is around 2.5 Gbps, but only 1.2 Gbps from VM2 (behind pfSense). Can I get some suggestions on tuning the performance of pfSense?
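As a quick sanity check on the "50% slower" figure, the two iperf3 results above work out to roughly a 52% drop. A tiny Python sketch of that arithmetic (the function name is just illustrative):

```python
def percent_slower(baseline_gbps: float, measured_gbps: float) -> float:
    """Return how much lower `measured_gbps` is than `baseline_gbps`, in percent."""
    return (baseline_gbps - measured_gbps) / baseline_gbps * 100

openwrt = 2.5  # Gbps, VM1 -> host, routed through OpenWRT
pfsense = 1.2  # Gbps, VM2 -> host, routed through pfSense
print(f"{percent_slower(openwrt, pfsense):.0f}% slower")  # 52% slower
```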

Thanks in advance!

Just for comparison, the iperf3 speed from OpenWRT (which is a VM) directly to the host is around 9 Gbps, without the need for NAT. I guess I can never reach that with a virtual firewall/router.

-

My first thought is that you might be using a NIC type that is single-queue in pfSense (FreeBSD) but multiqueue in OpenWRT (Linux). If you have more than one CPU core on each VM hosting the firewalls, pfSense may not be spreading the load across the cores.
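To illustrate why the queue count matters, here is a simplified sketch of receive-side scaling: each flow is hashed to one queue, and each queue is normally serviced by one core. Real NICs typically use a Toeplitz hash over the packet headers; the `zlib.crc32` hash and the function names below are stand-ins chosen only to keep the example deterministic.

```python
import zlib
from collections import Counter

def queue_for_flow(src_ip, src_port, dst_ip, dst_port, num_queues):
    """Map a flow's 4-tuple to a queue index (simplified RSS, not a real NIC hash)."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % num_queues

def queue_histogram(flows, num_queues):
    """Count how many flows land on each queue."""
    return Counter(queue_for_flow(*flow, num_queues) for flow in flows)

# Eight hypothetical TCP flows to an iperf3 server on port 5201.
flows = [("192.168.1.10", 50000 + i, "10.0.0.1", 5201) for i in range(8)]
print(queue_histogram(flows, 1))  # Counter({0: 8})
print(queue_histogram(flows, 4))  # flows spread over up to 4 queues
```

With one queue, every flow ends up on the same queue (and so the same core). Note that a single iperf3 TCP stream is one flow, so it maps to one queue even with multiqueue enabled; iperf3's `-P` option runs several parallel streams, which gives the hash something to spread.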

Steve

-

@stephenw10 Thanks!

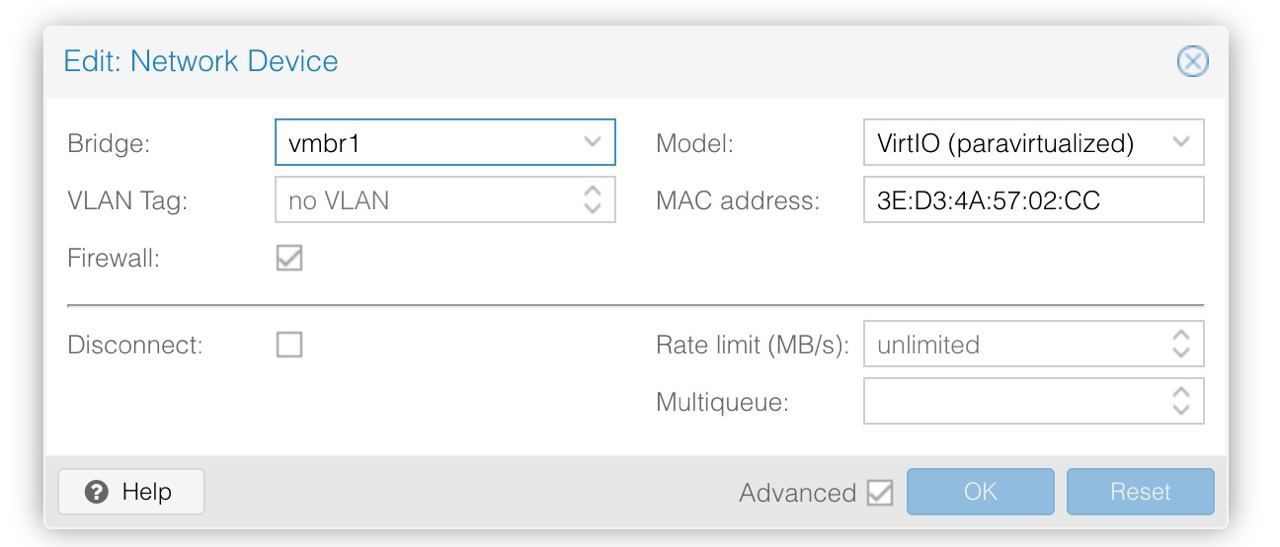

Is there a way to confirm this multiqueue setting? I don't see it on the Proxmox NIC settings page. I checked the setup, and it seems multiqueue wasn't set on any VM. So it seems multiqueue isn't the cause?

-

Indeed, you may need to set that, though I never have in Proxmox. I would expect it to apply equally to OpenWRT, though, assuming you have that VM set to the same NIC type.

Steve

-

@stephenw10 I enabled multiqueue on both NICs. However, that didn't improve the speed.

Or is there a way to verify that multiqueue is actually enabled in pfSense? In the output of `dmesg | grep rxq` I still see rxq and txq at 1, so I guess I'm running into the problem described in this post.

I noticed that during the

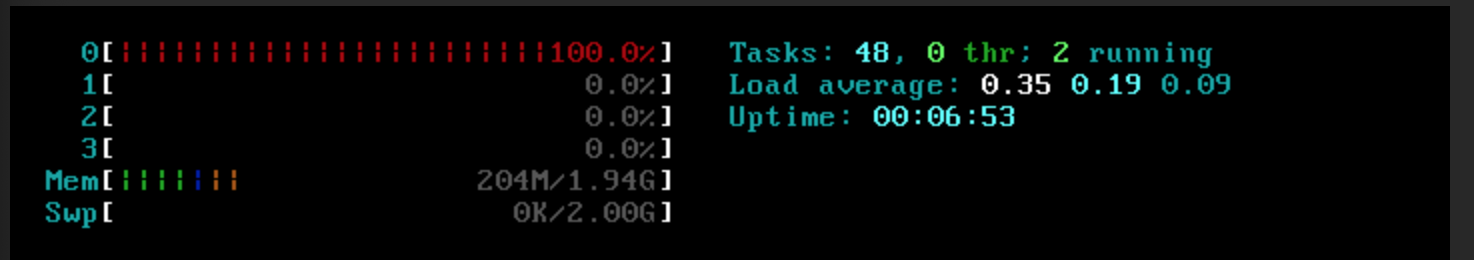

iperf3speedtest, the CPU load on pfsense server isn't quite high(this is the average load over 10 seconds) meaning that the bottleneck isn't on the CPU side. Also, only CPU 0 and 3 are doing heavy work during the test while the other two are idle.

If it's a problem that cannot be solved, I guess I'll move pfSense to a dedicated server, which means I'll need to request a budget from my wife...

-

Ah, yeah that looks likely.

You could try setting a different NIC type in Proxmox.

-


@left4apple I was about to reply that it's not possible to multiqueue NICs in Proxmox, but you went ahead and did it, so my reply is now a question: how?

As to the performance difference, are you able to monitor CPU usage, interrupts, etc. during the iperf run?

OK, to update my reply: I can now see the problem I wanted to mention. On my pfSense instances hosted on Proxmox, I observe that you can configure queues in the Proxmox UI, but the NICs still have just one queue. I suggest you check dmesg to confirm. Note also that the default ring size on the virtio networking driver is only 256 for TX and 128 for RX.

I found this; it seems the driver is forced to one queue on pfSense:

https://forum.netgate.com/topic/138174/pfsense-vtnet-lack-of-queues/5

ALTQ would probably be better distributed as a module, so it could still be supported while also allowing this driver to work in multiqueue mode for those who don't use ALTQ.

-

Or try using a different NIC type in Proxmox, as I suggested. The emulated NIC might not be as fast, but it would be able to use more queues/cores.

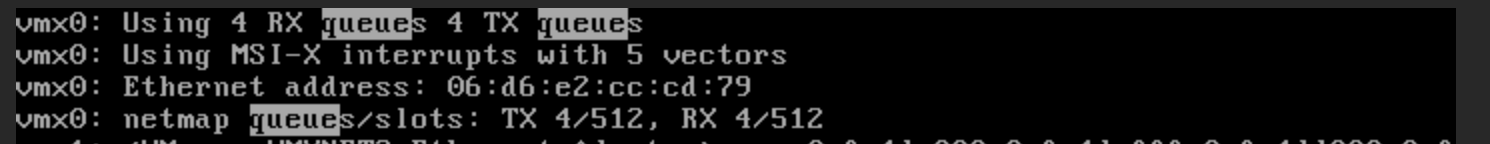

VMXnet will use more than one queue:

    Aug 2 00:29:15 kernel vmx0: <VMware VMXNET3 Ethernet Adapter> mem 0xfeb36000-0xfeb36fff,0xfeb37000-0xfeb37fff,0xfeb30000-0xfeb31fff irq 11 at device 20.0 on pci0
    Aug 2 00:29:15 kernel vmx0: Using 512 TX descriptors and 512 RX descriptors
    Aug 2 00:29:15 kernel vmx0: Using 2 RX queues 2 TX queues
    Aug 2 00:29:15 kernel vmx0: Using MSI-X interrupts with 3 vectors
    Aug 2 00:29:15 kernel vmx0: Ethernet address: 2a:66:dc:7d:78:b8
    Aug 2 00:29:15 kernel vmx0: netmap queues/slots: TX 2/512, RX 2/512

Steve
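If you want to check the queue count without eyeballing dmesg, a small parser can pull it out. This sketch assumes the "vmx0: Using 2 RX queues 2 TX queues" wording shown in the vmx0 log above; other drivers such as vtnet word their boot messages differently (hence the `dmesg | grep rxq` check earlier in the thread), so the pattern would need adjusting for them. `queue_counts` is a hypothetical helper name.

```python
import re

# Matches the vmx(4) queue summary line as it appears in the log quoted above.
# Assumption: other drivers (e.g. vtnet) use different wording and won't match.
QUEUE_LINE = re.compile(
    r"(?P<ifname>\w+): Using (?P<rx>\d+) RX queues (?P<tx>\d+) TX queues"
)

def queue_counts(dmesg_text: str) -> dict[str, tuple[int, int]]:
    """Return {interface: (rx_queues, tx_queues)} for each matching line."""
    counts = {}
    for match in QUEUE_LINE.finditer(dmesg_text):
        counts[match["ifname"]] = (int(match["rx"]), int(match["tx"]))
    return counts

sample = """\
Aug 2 00:29:15 kernel vmx0: Using 512 TX descriptors and 512 RX descriptors
Aug 2 00:29:15 kernel vmx0: Using 2 RX queues 2 TX queues
Aug 2 00:29:15 kernel vmx0: Using MSI-X interrupts with 3 vectors
"""
print(queue_counts(sample))  # {'vmx0': (2, 2)}
```

The descriptor line is deliberately not matched, since it reports ring sizes rather than queue counts.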

-

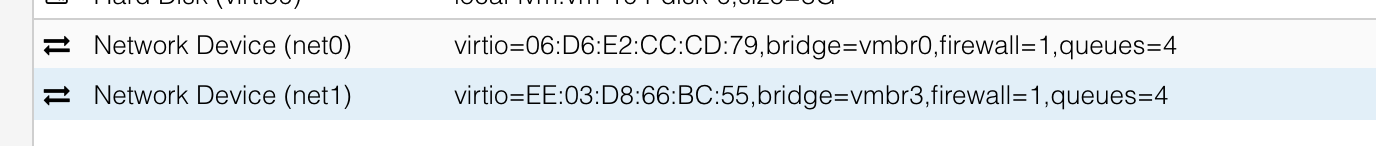

@chrcoluk I did check the queues inside pfSense, and there is still only one, even if I set it to 8 in the Proxmox NIC settings. I'll try VMXNET3 when I get home, though I vaguely remember that VMXNET3 isn't recommended unless you are migrating a VM from ESXi.

-

Yes, I expect vtnet to be fastest there, certainly on a per-core basis. Maybe not in absolute terms, though, if VMXnet can use multiple queues effectively.

Steve

-

@stephenw10 Awkwardly, with VMXNET3 I'm getting less than 200 Mbps...

I did see in the output of dmesg that the queues are recognized. 4 is the number of cores I gave to pfSense.

However...

During the test, only one core is busy.

-

To figure out where the bottleneck is, I installed iperf3 directly on pfSense. The experiment results are:

- OpenWRT (or any other VM except pfSense or OPNsense) → host, directly: 8.79–12 Gbps

- pfSense or OPNsense (fresh install) → host, directly: 1.8 Gbps

- VM on the pfSense LAN → pfSense: 1.3 Gbps

This is so frustrating, as they have exactly the same hardware setup.

I know that pfSense is based on FreeBSD, so I installed FreeBSD 13.0 as another VM. Ironically, FreeBSD can reach 10 Gbps without any problem.

-

-

You might need to add this sysctl to actually use those queues:

https://www.freebsd.org/cgi/man.cgi?query=vmx#MULTIPLE_QUEUES

Testing to/from pfSense directly will always show a lower result than testing through it, as it's not optimised as a TCP endpoint.

Steve

-

@gertjan Thanks for pointing that out. I tried FreeBSD 12.2 and also get around 10 Gbps with it on a fresh install.

@stephenw10 But I really don't think the problem is the NIC itself. Using VirtIO as the NIC with FreeBSD 12.2, I can get the maximum speed without multiqueue or any kernel parameter tuning. This also doesn't seem to be related to hardware checksum offloading, as FreeBSD doesn't even have that option.

May I ask if there's anything regarding the NIC that pfSense is setting differently from raw FreeBSD?

If someone from the pfSense team thinks this might be a bug, I'm happy to talk over chat and make my environment available for debugging.

-

Did you have pf enabled in FreeBSD?

If not, try enabling it there, or disabling it in pfSense. Ultimately, pf is what throttles throughput if nothing else does.

Steve