Playing with fq_codel in 2.4

-

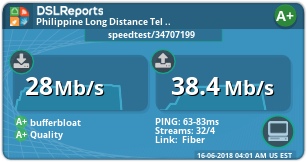

@xraisen According to the OpenWrt wiki (the only firewall on which I was able to get an A+ bufferbloat score with fq_codel), ECN should only be enabled on inbound packets.
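For context, this is roughly what the ECN flag changes. When CoDel decides a packet should carry a congestion signal, an ECN-enabled queue can set the Congestion Experienced (CE) codepoint on packets whose sender advertised ECN support instead of dropping them; packets without ECN support are still dropped. The minimal C sketch below assumes the RFC 3168 codepoints in the low two bits of the IPv4 TOS / IPv6 Traffic Class byte. The function and enum names are hypothetical (not dummynet's), and it skips the IPv4 header checksum update a real implementation needs.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* ECN field: low two bits of the IPv4 TOS / IPv6 Traffic Class byte (RFC 3168). */
#define ECN_MASK    0x03u
#define ECN_NOT_ECT 0x00u /* sender did not negotiate ECN */
#define ECN_CE      0x03u /* Congestion Experienced */
/* ECT(1) = 0x01 and ECT(0) = 0x02 both mean "ECN-capable transport". */

/* Hypothetical outcome of a congestion signal from the AQM. */
enum signal_action { ACTION_MARKED, ACTION_DROPPED };

/*
 * Called once CoDel has decided this packet should carry a congestion
 * signal. With ECN enabled, ECN-capable packets are marked CE and kept;
 * otherwise (ECN off, or a Not-ECT packet) the packet is dropped.
 * Sketch only: a real IPv4 path must also fix up the header checksum.
 */
static enum signal_action signal_congestion(uint8_t *tos, bool ecn_enabled)
{
    assert(tos != NULL);
    if (ecn_enabled && (*tos & ECN_MASK) != ECN_NOT_ECT) {
        *tos |= ECN_CE; /* ECT(0), ECT(1) and CE all end up as CE */
        return ACTION_MARKED;
    }
    return ACTION_DROPPED;
}
```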

-

@mattund I still get those errors with Tail Drop as the parent.

-

@strangegopher said in Playing with fq_codel in 2.4:

@xraisen According to openwrt wiki (only firewall where I was able to get A+ bufferbloat with fq_codel) ecn should only be enabled on inbound packets.

Wow! Thanks, man! That proves you are right about it. Even though my network is really busy right now (50 Mbps total), I still got an A+.

-

Also, isn't fq_codel doing head drop? Then why is the parent set to Tail Drop?

-

@strangegopher I believe something has to be set there, otherwise it is set "for you" (and in that case, I think it defaults to tail drop?). So, while you're absolutely right about the functionality of both, I think that at the higher level dummynet does need some sort of plan for packets that exceed the queue size. Whether or not that ever happens while FQ_CODEL is on, I can't say for sure. You'd think that FQ_CODEL would ultimately be the gatekeeper and never let tail drop see an excess... that's my guess.
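That guess can be pictured as two layers: the AQM removes packets from the head of the queue (transmitting them, or dropping them when CoDel decides to), and tail drop only acts when a new arrival finds the queue already at its hard cap. A minimal C sketch of that model, with hypothetical struct and function names (this is not dummynet's implementation):

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model of a queue with a hard length cap. */
struct bounded_queue {
    size_t len;       /* packets currently queued */
    size_t limit;     /* hard cap on queued packets */
    size_t taildrops; /* arrivals discarded because the queue was full */
};

/* Enqueue one arriving packet. Tail drop is the last-resort policy:
 * if the queue is already full, the *arriving* packet is discarded. */
static bool bq_enqueue(struct bounded_queue *q)
{
    assert(q != NULL && q->limit > 0);
    if (q->len >= q->limit) {
        q->taildrops++;
        return false;
    }
    q->len++;
    return true;
}

/* Remove one packet from the head, either to transmit it or because an
 * AQM such as CoDel decided to drop it there (a "head drop"). */
static bool bq_dequeue(struct bounded_queue *q)
{
    assert(q != NULL);
    if (q->len == 0)
        return false;
    q->len--;
    return true;
}
```

As long as the head side keeps `len` below `limit`, `taildrops` never increments, which is the gatekeeper behavior guessed at above.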

I'm actually really curious about the behavior now; I'll get back to you on what dummynet does without taildrop selected so we have some concrete evidence other than my assumption. Who knows, we might be able to add a "None" option?

EDIT: Got the answer, check this out:

```c
if (fs->flags & DN_IS_RED)	/* RED parameters */
    sprintf(red, "\n\t %cRED w_q %f min_th %d max_th %d max_p %f",
        (fs->flags & DN_IS_GENTLE_RED) ? 'G' : ' ',
        1.0 * fs->w_q / (double)(1 << SCALE_RED),
        fs->min_th,
        fs->max_th,
        1.0 * fs->max_p / (double)(1 << SCALE_RED));
else	/* neither RED nor GRED configured */
    sprintf(red, "droptail");
```

That is the dummynet code responsible for constructing the output of the `ipfw pipe show` (etc.) commands. It indicates that if RED/GRED is off, the output will just say "droptail" (tail drop). That tells me droptail is the fallback default behavior; FQ_CODEL must supersede it in some way when it's enabled. In other words, if you don't specify Tail Drop, it defaults to it anyway, which makes the "None" option I suggested earlier unnecessary. If you think about it, it makes sense: we constrain these queues to some ultimate size, so we must have a way to enforce that. I think Tail Drop is just dummynet's way of keeping that promise, even if FQ_CODEL is really doing all the legwork.

-

@mattund said in Playing with fq_codel in 2.4:

super-cede

That answers the question of why droptail keeps showing up alongside the "flowset busy" log messages.

I have no problem with droptail, but CoDel should be placed there as the AQM. I tried RED as well, but it reverts to droptail.

I am downloading like 3x more than my bandwidth limit, but IT JUST WORKS!

-

Does anyone know why this might have appeared in my logs?

```
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
fq_codel_enqueue maxidx = 342
fq_codel_enqueue over limit
```

In fact, it appeared ~450 times:

```
[2.4.3-RELEASE][admin@x.x.x]/root: dmesg | grep fq_codel_enqueue | wc -l
902
```

My config is simple:

```
[2.4.3-RELEASE][admin@x.x.x]/root: ipfw sched show
00001: 95.000 Mbit/s 0 ms burst 0
q65537 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
 sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
 FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
   Children flowsets: 1
BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp
  0 ip 0.0.0.0/0 0.0.0.0/0 68 53304 0 0 0
00002: 18.000 Mbit/s 0 ms burst 0
q65538 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
 sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
 FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
   Children flowsets: 2
  0 ip 0.0.0.0/0 0.0.0.0/0 61 5849 0 0 0
```

At the time the messages appeared, the box was receiving a large volume of UDP traffic (basically 100 Mb/s line rate, from a Bacula backup), and I was also doing some testing with iperf.

The reason I noticed was that a TCP stream I had running at the time was terrible: it dropped to dial-up speeds while the Bacula traffic was running. I have a 100/20 connection, and as you can see I have given fq_codel 95/18 of it to make sure things work well.
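For reference, the overflow rule in section 5.1 of the IETF fq_codel draft (linked later in the thread) says that when the total number of queued packets exceeds `limit`, fq_codel drops from the head of the flow queue with the largest current byte count, rather than simply rejecting the arriving packet. A minimal C sketch of that rule, assuming a tiny hypothetical four-flow table in which every packet of a given flow has the same size:

```c
#include <assert.h>
#include <stddef.h>

#define NFLOWS 4 /* tiny table for illustration; the ipfw output shows flows 1024 */

/* Hypothetical per-flow state; every packet in a flow has the same size. */
struct flow {
    size_t bytes;   /* bytes currently queued in this flow */
    size_t packets; /* packets currently queued in this flow */
    size_t pkt_len; /* size of each packet in this flow */
};

/* Index of the flow with the largest queued byte count (the "fat flow"). */
static size_t fattest_flow(const struct flow *flows, size_t n)
{
    size_t best = 0;
    for (size_t i = 1; i < n; i++)
        if (flows[i].bytes > flows[best].bytes)
            best = i;
    return best;
}

/*
 * Queue one packet on flow idx. If the total packet count then exceeds
 * limit, drop one packet from the head of the fattest flow. Returns the
 * index of the flow that lost a packet, or -1 if nothing was dropped.
 */
static int fq_enqueue(struct flow *flows, size_t n, size_t idx,
                      size_t *total, size_t limit)
{
    assert(idx < n && total != NULL);
    flows[idx].bytes += flows[idx].pkt_len;
    flows[idx].packets++;
    (*total)++;
    if (*total <= limit)
        return -1;

    size_t fat = fattest_flow(flows, n);
    assert(flows[fat].packets > 0);
    flows[fat].bytes -= flows[fat].pkt_len; /* head drop from the fat flow */
    flows[fat].packets--;
    (*total)--;
    return (int)fat;
}
```

Note that the flow that pays for the overflow is the fat one, not necessarily the one whose packet just arrived. In FreeBSD's implementation, `maxidx` may well be the index of the flow picked this way, but that reading is an assumption; if it holds, the repeated `maxidx = 342` would mean the same flow queue was being trimmed each time.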

Does fq_codel struggle with large streams of incoming UDP traffic?

And does anyone know why I got those messages in my dmesg?

Thanks! fq_codel has been otherwise amazing.

-

@muppet I have seen this too, although not with limits that high (the default of 10240). A number of people have reported this same issue, and in my experience it does also seem to be provoked by UDP traffic. The explanation in section 5.1 here:

https://tools.ietf.org/id/draft-ietf-aqm-fq-codel-02.html

implies, as expected based on the log message, that your queue limit has been reached, so packets are being dropped. It also suggests that in this case packets will be dropped "from the head of the queue with the largest current byte count." You would think that would be the queue handling all that UDP traffic, but if that were happening, it should mitigate the impact on other flows (which is not what you're seeing, since TCP suffers badly). Additionally, I have seen others report that their pfSense machine crashes if they get enough of these sustained log messages. I don't know that it was ever clear whether the "over limit" event itself or the accompanying flood of log messages was to blame for those crashes, though. Unfortunately, I don't have a solution. Section 4.1.3 of the same document suggests that a limit of 10240 should be fine for connection speeds of up to 10 Gbps, so something else must be going on here, but I don't know what.

-

@TheNarc Thanks for the detailed reply.

One thing I forgot to mention, but that ties in with what you said, is that after this happened I noticed my MBUF usage jumped up and has stayed up.

Usually I have very little MBUF usage, but at exactly the time this problem happened, my MBUF usage jumped up to 20%. I wonder if there's a memory leak going on when it happens too, and that's what explains the crashes people experience (when you run out of MBUFs, it's game over).

Thanks for the detailed reply!

-

Is there a way to prioritize game traffic and still have everything else handled by fq_codel? And if so, how would I go about it?

-

Does anyone have a guide to configuring fq_codel in a multi-WAN scenario? I tried applying floating rules on the WAN interface to assign the pipes/limiters, but somehow the upload is capped at 1 Mbps.

I have a symmetrical connection, so the download and upload limiters are exactly the same.

However, if I apply the floating rules on the LAN interfaces, the FQ_CODEL limiters work beautifully. That is not ideal for a multi-WAN scenario, because traffic using the bonded WAN links is not shaped correctly that way.

Anyone's help will be much appreciated :)

-

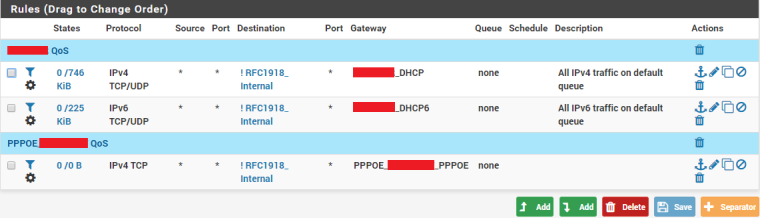

I have two WANs, several VLANs, and virtual gateways, and wrote this patch for the sole purpose of QoS'ing that traffic appropriately. I think I'm only using floating rules on the WAN interfaces. Can you share your floating rule configuration, so we can see how you assign queues, and your queues themselves, so we can see what they are assigning to?

You may also benefit from changing/setting the queue length. I had some trouble with that on fast links.

-

@mattund do you have any guidance on what the queue length should be set to for 10/100 and 1000 Mbit/s links?

-

@mattund I'll try to explain this briefly; apologies if it's confusing, as English is not my first language :)

Basically, I have two WAN connections, a primary 20/20 Mbps and an additional 10/10 Mbps, and multiple LANs/VLANs. Some VLANs can only use WAN1, some can only use WAN2, and some can use WAN1 and WAN2 concurrently (bonded).

I applied the patch, then configured the limiters in the Traffic Shaper Limiters tab: download ISP 1 at 20 Mbps, upload ISP 1 at 20 Mbps, download ISP 2 at 10 Mbps, and upload ISP 2 at 10 Mbps.

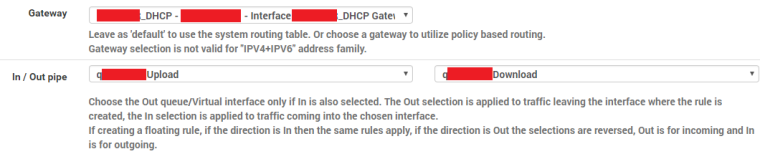

Then I made floating rules on each WAN interface: one for incoming traffic (download) from any source to the LAN IP addresses, assigning the in/out pipes, and one for outgoing traffic from the LAN IP addresses to any destination, also assigning the in/out pipes (so each WAN interface has 2 floating rules).

However, with the floating rules on the WAN interfaces, the download speed is shaped correctly but the upload is capped at 1 Mbps. If I apply the same rules on each LAN interface instead, both upload and download are correctly shaped at 20 Mbps (or whichever pipes I assigned).

So now I'm bit stuck figuring out what I did wrong.

-

@zwck said in Playing with fq_codel in 2.4:

@mattund do you have any guidance on what the queue length should be set for 10/100 and 1000Mbits?

I am actually no longer sure that Queue Length has an impact. I was going to write a really detailed explanation of what may be best, but I am not seeing a difference between a Queue Length of 1 and one of 1000 with FQ_CODEL on. I think FQ_CODEL is taking over at this point (note the limit parameter). So I might have been very wrong. For the last several months I've used a queue limit of 1000, if it helps. I wager I would've been fine with it set to 1, 50, etc.
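For a sense of scale, fq_codel's own `limit` (10240 in the `ipfw sched show` output earlier in the thread) is a packet count across all of its flow queues. Assuming every queued packet is a full 1514-byte frame (the `quantum` shown there), a completely full limit holds roughly 1.3 s of traffic at 95 Mbit/s and about 6.9 s at 18 Mbit/s, while the 5 ms `target` corresponds to only about 39 such packets at 95 Mbit/s. The arithmetic as two tiny C helpers (names hypothetical):

```c
#include <assert.h>

/*
 * Time in seconds to transmit `packets` packets of `pkt_bytes` bytes each
 * at `rate_bps` bits per second, i.e. the delay a full backlog represents.
 */
static double drain_seconds(double packets, double pkt_bytes, double rate_bps)
{
    assert(rate_bps > 0.0);
    return packets * pkt_bytes * 8.0 / rate_bps;
}

/* Number of `pkt_bytes`-sized packets that fit in `delay_s` seconds at `rate_bps`. */
static double packets_in_delay(double delay_s, double pkt_bytes, double rate_bps)
{
    assert(pkt_bytes > 0.0);
    return delay_s * rate_bps / (pkt_bytes * 8.0);
}
```

For example, `drain_seconds(10240, 1514, 95e6)` gives about 1.3055 and `packets_in_delay(0.005, 1514, 95e6)` about 39.2.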

@tesna-0 said in Playing with fq_codel in 2.4:

Then I made floating rules in each WAN interfaces, incoming traffic (download) from all to lan IP address then assigning in / out pipes, Then outgoing traffic from lan IP addresses to all then assigning in / out pipes (so each WAN interfaces has 2 floating rules)

This might be it; I had trouble when I tried that. What I learned is to think of it in terms of state creation: you want to "catch" the state at the moment it is created on the WAN and slap a pipe on it. So I use just one rule per WAN, or two if it's dual stack with IPv4 and IPv6.

- I make a separate floating rule for each of IPv4 and IPv6 on the WAN I want to shape

- Direction is set to out

- Assign an appropriate IPv4 or IPv6 gateway depending on the traffic class I picked in the first step.

- Set the Protocol to TCP/UDP, because ICMP gets really screwed up if you do a traceroute with Protocol set to any. And besides, who sends huge pipe-choking ICMP packets anyway? :) We're taking a risk here, but probably a safe one.

- And finally, I assign the pipes backwards: In/Out becomes qUpload/qDownload.

I make sure I have a queue nested underneath each limiter and am not assigning directly to a limiter; instead, I assign to a single queue underneath it.

- Limiter: Taildrop and FQ_CODEL (named lISPDownload or lISPUpload)

- Child Queue: CoDel (named qISPDownload or qISPUpload)
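One way to picture the "backwards" assignment, assuming (not verified against pf's source) that a rule's first pipe slot applies to packets travelling in the same direction as the packet that created the state, and the second slot to the replies: a WAN rule with direction out matches a LAN host's packets leaving the WAN, so the In slot carries upload traffic and the Out slot carries the downloads coming back. A minimal C sketch of that assumed mapping, using the queue names from the list above:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical representation of the two limiter slots on a firewall rule. */
struct pipe_assignment {
    const char *in_slot;  /* queue chosen in the rule's "In" field */
    const char *out_slot; /* queue chosen in the rule's "Out" field */
};

/*
 * Assumed semantics (not verified against pf): packets travelling in the
 * same direction as the packet that created the state use in_slot, and
 * reply packets use out_slot.
 */
static const char *queue_for_packet(const struct pipe_assignment *pa,
                                    bool same_direction_as_state)
{
    assert(pa != NULL);
    return same_direction_as_state ? pa->in_slot : pa->out_slot;
}
```

With `struct pipe_assignment wan_out = { "qISPUpload", "qISPDownload" };`, the outgoing packets that created the state land in `qISPUpload` and the replies in `qISPDownload`.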

-

I have an idea for ya. If you don't want to use a metric ton of floating rules for classification, want to be lazy like me, and you're using Windows, you can get really hacky and use the built-in Windows QoS system. But, not to QoS on your PC (ew)! Just to set DSCP values/tags, which pfSense can interpret :)

You can use whatever DSCP value you want; pfSense has a DSCP filter in the Advanced section. You could make new rules at the tail of your floating rules section (or wherever you keep them), match the DSCP value, and set In/Out to none/none. I think that would work. Full disclosure: I'm not using this to classify traffic into a specific limiter queue (or out of one); I'm actually using it to change the gateway WAN from my cable connection (the default) to my DSL connection. But the idea would be the same.
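Whatever value the Windows policy writes ends up in the DSCP field, which occupies the top six bits of the IPv4 TOS (IPv6 Traffic Class) byte; the bottom two bits carry ECN. A small C sketch of packing and reading that field (helper names are hypothetical; 46 (EF) and 8 (CS1) are standard codepoints used only as examples):

```c
#include <assert.h>
#include <stdint.h>

/* DSCP is the upper six bits of the TOS / Traffic Class byte (RFC 2474);
 * the lower two bits are the ECN field (RFC 3168). */
static uint8_t dscp_from_tos(uint8_t tos)
{
    return (uint8_t)(tos >> 2); /* shifting discards the ECN bits */
}

/* Build a TOS byte from a DSCP value (0-63) and ECN bits (0-3). */
static uint8_t tos_from_dscp(uint8_t dscp, uint8_t ecn)
{
    assert(dscp < 64 && ecn < 4);
    return (uint8_t)((dscp << 2) | ecn);
}
```

Because the ECN bits are shifted away, a CE mark added later by fq_codel does not change which DSCP rule a packet matches: `dscp_from_tos(0xB8)` and `dscp_from_tos(0xBB)` are both 46.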

Oh, and a footnote: generally, you don't need to have your games use a different queue. FQ_CODEL is a "just works" algorithm that should fairly share wire time with a game, which uses little bandwidth compared to something like a Steam download, high-def music, or video streaming. If you read back up the thread a bit, I think others were in a similar boat and were able to get low latency even with downloads running.
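To illustrate why a game flow does not need its own queue, here is a simplified C sketch comparing how many bytes go out ahead of a single small game packet that arrives behind a backlog of full-size bulk packets. With one shared FIFO it waits for the whole backlog; with per-flow deficit round robin (the scheduling fq_codel uses between its flow queues) it waits for roughly one quantum. The sketch assumes just two flows with the bulk flow served first, and it ignores fq_codel's extra priority for new/sparse flows, which would only let the game packet out sooner.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes sent ahead of the game packet with a single FIFO: the entire
 * bulk backlog already queued must go first. */
static size_t fifo_bytes_ahead(size_t bulk_packets, size_t bulk_len)
{
    return bulk_packets * bulk_len;
}

/* Bytes sent ahead of the game packet under deficit round robin with two
 * flow queues (bulk and game), bulk flow visited first in each round. */
static size_t drr_bytes_ahead(size_t bulk_packets, size_t bulk_len,
                              size_t game_len, size_t quantum)
{
    assert(quantum > 0);
    size_t sent = 0, deficit_bulk = 0, deficit_game = 0;
    for (;;) {
        /* Bulk flow's turn: earn a quantum, send while the deficit allows. */
        if (bulk_packets > 0) {
            deficit_bulk += quantum;
            while (bulk_packets > 0 && deficit_bulk >= bulk_len) {
                deficit_bulk -= bulk_len;
                bulk_packets--;
                sent += bulk_len;
            }
        }
        /* Game flow's turn: its packet goes out once the deficit covers it. */
        deficit_game += quantum;
        if (deficit_game >= game_len)
            return sent;
    }
}
```

With 100 queued 1514-byte bulk packets, a 100-byte game packet and a 1514-byte quantum, `fifo_bytes_ahead(100, 1514)` is 151400 bytes (about 12.7 ms at 95 Mbit/s), while `drr_bytes_ahead(100, 1514, 100, 1514)` is 1514 bytes (about 0.13 ms).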

-

@muppet said in Playing with fq_codel in 2.4:

Does anyone know why this might have appeared in my logs?


Does fq_codel struggle with large streams of incoming UDP traffic?

And does anyone know why I got those messages in my dmesg?

Thanks! fq_codel has been otherwise amazing.

What NICs does your system have, and what are their queue sizes set to? Also, are you running any IPS/IDS on your interfaces?

HTH

-

Hi @tman222 thanks for the reply.

I am running pfSense virtualised on a Proxmox host.

I am using vtnet as my interfaces; they report themselves as 10Gb interfaces. The only plugins I had active at the time were avahi and openvpn-exporter.

I'm unsure how to answer your question about queue sizes, I have included the output of a couple of commands in the hope they might capture the answer you're after?

```
hw.vtnet.rx_process_limit: 512
hw.vtnet.mq_max_pairs: 8
hw.vtnet.mq_disable: 0
hw.vtnet.lro_disable: 0
hw.vtnet.tso_disable: 0
hw.vtnet.csum_disable: 0
dev.vtnet.1.txq0.rescheduled: 0
dev.vtnet.1.txq0.tso: 0
dev.vtnet.1.txq0.csum: 0
dev.vtnet.1.txq0.omcasts: 2765830
dev.vtnet.1.txq0.obytes: 785586398380
dev.vtnet.1.txq0.opackets: 708668034
dev.vtnet.1.rxq0.rescheduled: 0
dev.vtnet.1.rxq0.csum_failed: 0
dev.vtnet.1.rxq0.csum: 198931285
dev.vtnet.1.rxq0.ierrors: 0
dev.vtnet.1.rxq0.iqdrops: 0
dev.vtnet.1.rxq0.ibytes: 116215930004
dev.vtnet.1.rxq0.ipackets: 482511639
dev.vtnet.1.tx_task_rescheduled: 0
dev.vtnet.1.tx_tso_offloaded: 0
dev.vtnet.1.tx_csum_offloaded: 0
dev.vtnet.1.tx_defrag_failed: 0
dev.vtnet.1.tx_defragged: 0
dev.vtnet.1.tx_tso_not_tcp: 0
dev.vtnet.1.tx_tso_bad_ethtype: 0
dev.vtnet.1.tx_csum_bad_ethtype: 0
dev.vtnet.1.rx_task_rescheduled: 0
dev.vtnet.1.rx_csum_offloaded: 0
dev.vtnet.1.rx_csum_failed: 0
dev.vtnet.1.rx_csum_bad_proto: 0
dev.vtnet.1.rx_csum_bad_offset: 0
dev.vtnet.1.rx_csum_bad_ipproto: 0
dev.vtnet.1.rx_csum_bad_ethtype: 0
dev.vtnet.1.rx_mergeable_failed: 0
dev.vtnet.1.rx_enq_replacement_failed: 0
dev.vtnet.1.rx_frame_too_large: 0
dev.vtnet.1.mbuf_alloc_failed: 0
dev.vtnet.1.act_vq_pairs: 1
dev.vtnet.1.requested_vq_pairs: 0
dev.vtnet.1.max_vq_pairs: 1
dev.vtnet.1.%parent: virtio_pci3
dev.vtnet.1.%pnpinfo:
dev.vtnet.1.%location:
dev.vtnet.1.%driver: vtnet
dev.vtnet.1.%desc: VirtIO Networking Adapter
[2.4.3-RELEASE][admin@x.x.x]/root: ifconfig vtnet1
vtnet1: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500
	options=6c00b8<VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,VLAN_HWTSO,LINKSTATE,RXCSUM_IPV6,TXCSUM_IPV6>
	ether 1a:b3:6c:0f:3c:61
	hwaddr 1a:b3:6c:0f:3c:61
	inet6 fe80::18b3:6cff:fe0f:3c61%vtnet1 prefixlen 64 scopeid 0x2
	inet 192.168.0.1 netmask 0xffffff00 broadcast 192.168.0.255
	nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
	media: Ethernet 10Gbase-T <full-duplex>
	status: active
```

-

https://github.com/pfsense/pfsense/pull/3941

The PR was just merged into HEAD; hopefully it will make it into the next release.

-

@mattund said in Playing with fq_codel in 2.4:

https://github.com/pfsense/pfsense/pull/3941

The PR was just merged into HEAD, it hopefully will make into the next release.

This is great news! Thanks so much for your hard work here @mattund !