Playing with fq_codel in 2.4

-

@zwck The easiest thing from my perspective would be for you to compile netperf with

./configure --enable-demo

for this platform, run "netserver -N" on the box (open up the relevant port 12865 on both ipv6 and ipv4 if available), then I can flent test from anywhere if you give me the ip.

That's all flent needs to target tests at a box from the outside world. If you are extra ambitious you can also install irtt (it's in go) and open up its udp port....

Flent has other good guidelines on flent.org if you wish to play with it yourself (the rrul test is the way to get the mostest fastest), but it involves installing a lot of python code somewhere.

Having ssh into the box also available would let me tcpdump and monitor other things (I'm not usually big on asking for access of that level, being able to target some tests at the box would be a start)

-

@pentangle You shouldn't have to fiddle with the quantum at all. You are saying that a quantum 300 works when a quantum 1514 does not? The quantum 300 thing is an optimization for very slow links, it shouldn't "cause connections to drop at the end of a test".

-

Hi @dtaht - the intuition behind my suggestion was to try to make the algorithm more "fair" since VoIP packets tend to be a bit smaller.

I was going off of what I read here:

https://www.bufferbloat.net/projects/codel/wiki/Best_practices_for_benchmarking_Codel_and_FQ_Codel/

"We have generally settled on a quantum of 300 for usage below 100mbit as this is a good compromise between SFQ and pure DRR behavior that gives smaller packets a boost over larger ones."

@Pentangle also mentioned that his connection speed was 80/20.

Is this not the right way to think about the quantum?

-

@dtaht said in Playing with fq_codel in 2.4:

@zwck The easiest thing from my perspective would be for you to compile netperf with

./configure --enable-demo

for this platform, run "netserver -N" on the box (open up the relevant port 12865 on both ipv6 and ipv4 if available), then I can flent test from anywhere if you give me the ip.

That's all flent needs to target tests at a box from the outside world. If you are extra ambitious you can also install irtt (it's in go) and open up its udp port....

Flent has other good guidelines on flent.org if you wish to play with it yourself (the rrul test is the way to get the mostest fastest), but it involves installing a lot of python code somewhere.

Having ssh into the box also available would let me tcpdump and monitor other things (I'm not usually big on asking for access of that level, being able to target some tests at the box would be a start)

I mean, I can set up a netperf server behind the pfSense box; running it on the box itself might not be possible for me.

-

@tman222 It is a right way to think about the problem. :) However, the OP was reporting "all my connections drop at the end of the test". There should be a "burp" at the beginning of the test as all the flows start, but I guess I don't know what he means by what he said.

-

@zwck If you can port forward or ipv6 for a box behind, that would work. Otherwise I tend to originate flent tests from the box(es) behind to one or more of our flent servers in the cloud.

-

@dtaht said in Playing with fq_codel in 2.4:

@zwck If you can port forward or ipv6 for a box behind, that would work. Otherwise I tend to originate flent tests from the box(es) behind to one or more of our flent servers in the cloud.

For that type of test, should I just turn off all traffic shaping, or should I keep it running as I have it configured?

-

If the Netgate folk could make netperf (and irtt) available, that would be very helpful overall. I really don't trust web-based tests - most of them peak out at ~400mbit in the browser...

-

@zwck well, we're trying to determine how well the shaper is working, so we'd want it on for a string of tests and off for another string.

-

@dtaht I'll set this up tomorrow morning with the simplest shaper (for my WAN interface) and send you the needed information.

-

I've been following this thread for a couple of weeks now but I'm still running into an issue.

If I have an in and out limiter set on the WAN interface, using the exact steps @jimp lays out in the August 2018 hangout, I get packet loss. If I stress the connection using a client visiting dslreports.com/speedtest to create load and I run a constant ping to my WAN_DHCP gateway, I have seconds of echo response loss while the test runs. DSLreports shows overall A, bufferbloat A, quality A. If I move the limiters to the LAN interface, making the needed interface and in/out queue adjustments to the floating rules, I do not see loss and DSLreports shows the same AAA result.

Can anyone else recreate this? My circuit is rated at 50Mbps down and 10Mbps up and I am limiting at 49000Kbps and 9800Kbps respectively after finding this to consistently work well in the past with ALTQ and CODEL on WAN. I am running 2.4.4 CE.
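For reference, the limiter values above work out to 98% of the rated speeds. A minimal shell sketch of that arithmetic follows; the function name `limiter_kbps` is hypothetical, and the 98% figure is only read off the numbers in this post, not a recommendation made anywhere in the thread.

```shell
#!/bin/sh
# limiter_kbps RATE_MBPS PERCENT
# Prints a limiter bandwidth in Kbps as PERCENT of the rated line speed.
# Hypothetical helper for illustration only.
limiter_kbps() {
    awk -v mbps="$1" -v pct="$2" 'BEGIN { printf "%d\n", mbps * 1000 * pct / 100 }'
}

limiter_kbps 50 98   # download: prints 49000
limiter_kbps 10 98   # upload:   prints 9800
```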

-

You should see some loss (that's the whole point), but ping should lose very few packets. Are you saying ping is dying for many seconds, or just dropping a couple here and there rather than in a bunch? It's helpful to look at the retransmits on your dslreports test as well as at ping itself dropping packets. What do you mean by a "constant ping"? A ping -f test (flooding) WILL drop a ton of ping packets, but a normal ping should drop, oh, maybe 3%?

Part of why I'd like to run flent is I can measure all that. :)

-

@dtaht thanks for the quick response. I am pinging every 500 ms during the dslreports test with a timeout of 400 ms for the response - typical latency between my WAN interface and gateway is less than 3 ms. Over the course of the entire test, download and upload, including the pauses, I see the following.

Packets: sent=86, rcvd=62, error=0, lost=24 (27.9% loss) in 42.513662 sec

RTTs in ms: min/avg/max/dev: 1.042 / 3.546 / 11.348 / 1.575

Bandwidth in kbytes/sec: sent=0.121, rcvd=0.087

I'll take a look at flent and see what I can gather.
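The loss figure in that summary can be checked by hand from the sent and received counts. A minimal sketch, assuming a hypothetical helper named `loss_pct` and using only the numbers from the summary above:

```shell
#!/bin/sh
# loss_pct SENT RCVD
# Prints the percentage of echo requests that never got a reply,
# rounded to one decimal place. Hypothetical helper for illustration.
loss_pct() {
    awk -v sent="$1" -v rcvd="$2" 'BEGIN { printf "%.1f\n", (sent - rcvd) * 100 / sent }'
}

loss_pct 86 62   # sent=86, rcvd=62 from the summary; prints 27.9
```

That is roughly nine times the "maybe 3%" a normal ping was expected to lose.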

-

@dtaht I'm happy to expand on it. I understand what you would expect from a "burp" at the start of the test, and I did sometimes see roughly 30-40 ms of latency at that time, but I never saw (or heard, in the case of my Sonos streaming radio) any drops at the start of the test or anywhere during the download test. The drops came only at the end of the upload test, exactly when the graph dove down from the "hump" it was drawing. I tried it 6-7 times and it was repeatable: the internet radio would pause for a good 5 seconds, and you'd be chucked out of any RDP session to an internet host at that specific time. I changed only the quantum, from 1514 down to 300, and after that no connections were dropped in another 7 or so tests today, with everyone in the office working and the Sonos playing. So despite you saying it shouldn't help, it appears that it did.

-

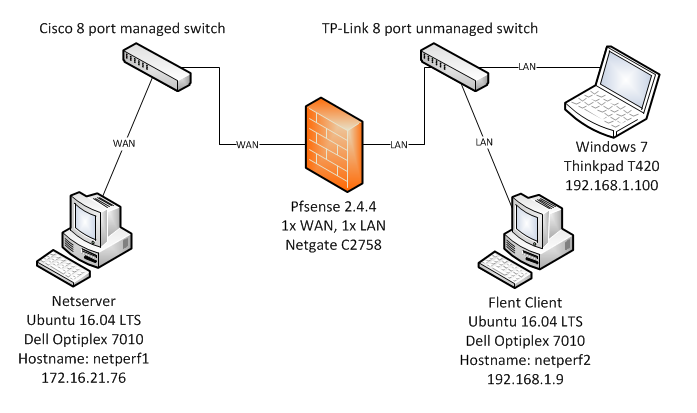

@dtaht Ok, here is what I have set up to test my issue using spare hardware. I've confirmed what I was seeing with other hardware using a different topology and using Flent to produce the traffic. The limiters are 49000Kbps and 9800Kbps.

The test lab is laid out as such - all network connections are copper GigE.

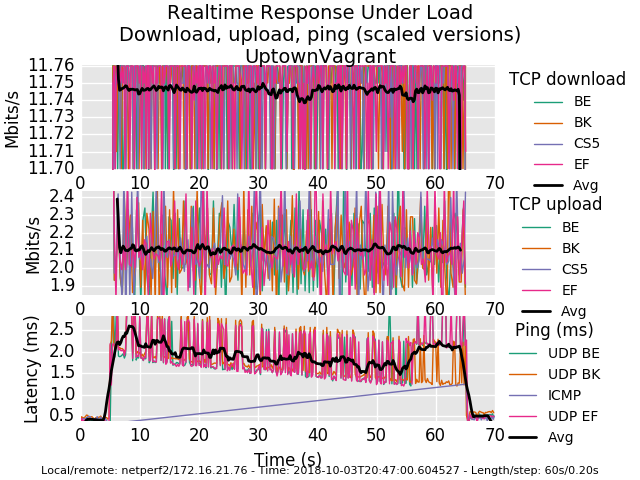

flentuser@netperf2:~/flent$ flent rrul -p all_scaled -l 60 -H 172.16.21.76 -t UptownVagrant -o RRUL_Test001.png

I have included the graph created by Flent as well as attached the pfsense configuration (very close to stock) and the gzip output from Flent. During the RRUL test I was pinging the WAN-DHCP gateway every 500 ms with 60 bytes and a reply timeout of 400 ms for each echo request from the Thinkpad using hrping. I'm seeing huge loss when simulating this tiny traffic while Flent RRUL is running.

Packets: sent=146, rcvd=26, error=0, lost=120 (82.1% loss) in 72.500948 sec

RTTs in ms: min/avg/max/dev: 0.538 / 1.004 / 1.865 / 0.340

Bandwidth in kbytes/sec: sent=0.120, rcvd=0.021

0_1538625522209_config-pfSense.localdomain-20181003200505.xml

0_1538625551026_rrul-2018-10-03T204700.604527.UptownVagrant.flent.gz

-

Beautiful work, thank you. You're dropping a ton of ping. That's it. Everything else is just groovy. It's not possible to drop that much ping normally on this workload. Are icmp packets included in your filter by default? (udp/tcp/icmp/arp - basically all protocols you just want "in there", no special cases). udp is getting through.

You can see a bit less detail with the "all" rather than the all_scaled plot.

Other than ping it's an utterly perfect fq_codel graph. Way to go!

-

While you are here, care to try 500mbit and 900mbit symmetric? :) What's the cpu in the pfsense box? Thx so much for the flent.gz files, you really lost a ton of ping for no obvious reason. Looking at the xml file, I don't see anything that handles anything but tcp... and we want all protocols to go through the limiter.

For example a common mistake in the linux world looks like this

tc filter add dev $DEV parent ffff: protocol ip prio 50 u32 match ip src 0.0.0.0/0 more stuff

which doesn't match arp or ipv6. The righter line is:

tc filter add dev ${DEV} parent ffff: protocol all u32 match u32 0 0
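To make the Linux analogy concrete, here is a dry-run sketch of a catch-all ingress redirect. It only prints the commands rather than running them. The interface names `eth0` and `ifb0`, the function name, and the `mirred` redirect to an IFB device are assumptions added for illustration; none of them come from this thread, and none of this applies to pfSense's dummynet limiters.

```shell
#!/bin/sh
# Dry-run sketch: prints the tc commands instead of executing them.
# show_ingress_redirect DEV IFB
#   DEV: interface receiving traffic, IFB: intermediate device that
#   would carry the shaper. Both names are placeholders.
show_ingress_redirect() {
    dev="$1"
    ifb="$2"
    # Attach the ingress qdisc (its handle is ffff:).
    echo "tc qdisc add dev $dev handle ffff: ingress"
    # 'protocol all' with a u32 match of value 0 / mask 0 matches every
    # packet, so arp and ipv6 are redirected along with ipv4.
    echo "tc filter add dev $dev parent ffff: protocol all u32 match u32 0 0 action mirred egress redirect dev $ifb"
}

show_ingress_redirect eth0 ifb0
```

Running the printed commands for real would need root and an existing IFB device.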

-

Also, you can run that test for 5 minutes: -l 300. If you are also blocking arp, you'll go boom in 2-3 minutes tops.

This is, btw, one of those things that make me nuts about web testers - we've optimized the internet to "speedtest", which runs for 20 seconds. Who cares if the network explodes at T+21? If my users could stand it, I'd double the length of the flent test to 2 minutes, precisely because of the arp blocking problem I've seen elsewhere.....

I run tests for 20 minutes... (ping can't run for longer than that on linux)... hours.... with irtt.... overnight....

-

@dtaht Thanks again for your quick responses - I really appreciate your guidance on this.

The floating match filter rules I have, one for WAN out and one for WAN in, are for IPv4 and all protocols so everything should be caught and processed by fq_codel limiter queues. The match rules that place the traffic in the queues start at line 178 of the xml file I attached previously.

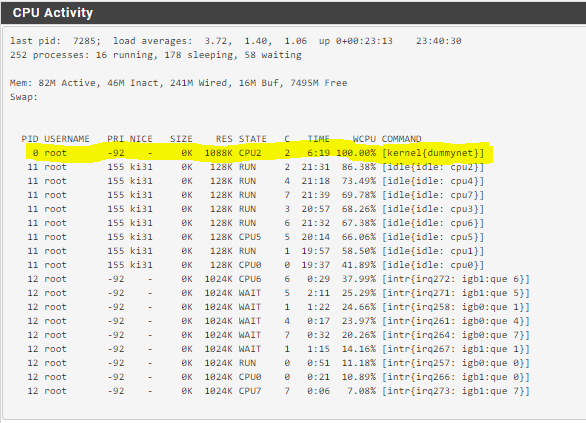

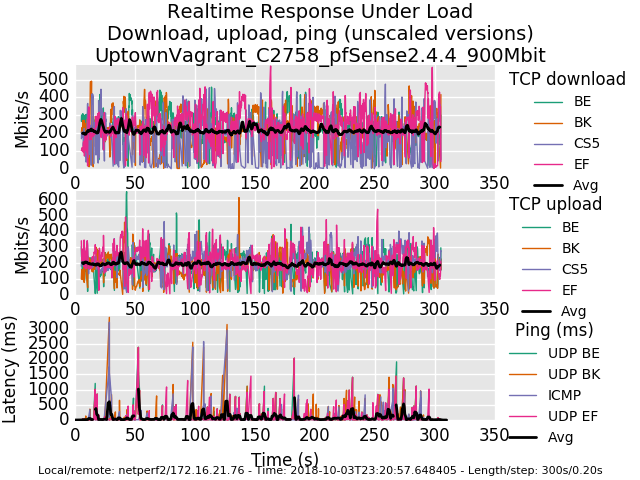

The CPU is an Intel Atom C2758 in the pfSense box. Below I've uploaded the graphs and flent.gz files for 500Mbit and 900Mbit - I ran each test for 5 minutes.

0_1538634767921_rrul-2018-10-03T231012.058529.UptownVagrant_C2758_pfSense2_4_4_500Mbit.flent.gz

0_1538634784575_rrul-2018-10-03T232057.648405.UptownVagrant_C2758_pfSense2_4_4_900Mbit.flent.gz

-

At 900Mbit, a core on this C2758 is saturated by the dummynet limiter.