Playing with fq_codel in 2.4

-

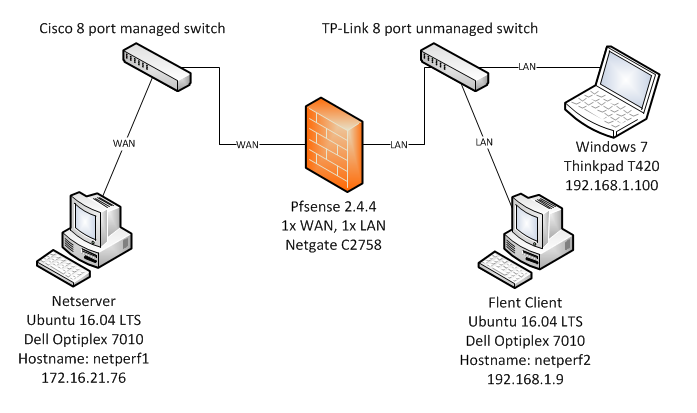

@dtaht Ok, here is what I have set up to test my issue using spare hardware. I've confirmed what I was seeing with other hardware using a different topology and using Flent to produce the traffic. The limiters are 49000Kbps and 9800Kbps.

The test lab is laid out as such - all network connections are copper GigE.

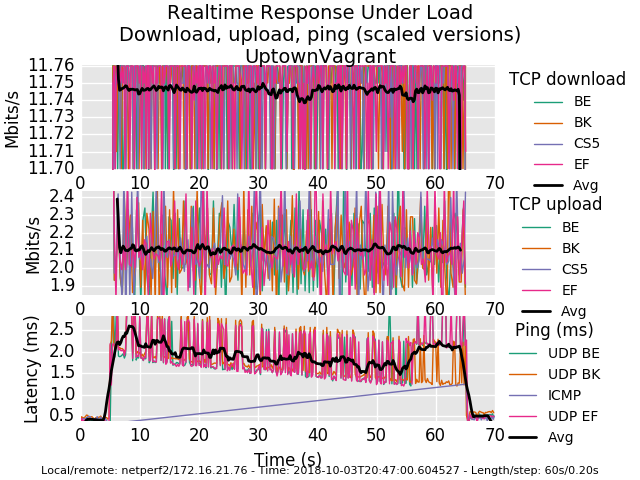

flentuser@netperf2:~/flent$ flent rrul -p all_scaled -l 60 -H 172.16.21.76 -t UptownVagrant -o RRUL_Test001.png

I have included the graph created by Flent and attached the pfSense configuration (very close to stock) and the gzipped output from Flent. During the RRUL test I was pinging the WAN-DHCP gateway from the Thinkpad with hrping: one 60-byte echo request every 500 ms, with a 400 ms reply timeout. I'm seeing huge loss on this tiny traffic while Flent RRUL is running.

Packets: sent=146, rcvd=26, error=0, lost=120 (82.1% loss) in 72.500948 sec
RTTs in ms: min/avg/max/dev: 0.538 / 1.004 / 1.865 / 0.340
Bandwidth in kbytes/sec: sent=0.120, rcvd=0.021

0_1538625522209_config-pfSense.localdomain-20181003200505.xml

0_1538625551026_rrul-2018-10-03T204700.604527.UptownVagrant.flent.gz

-

Beautiful work, thank you. You're dropping a ton of ping. That's it. Everything else is just groovy. It's not possible to drop that much ping normally on this workload. Are icmp packets included in your filter by default? (udp/tcp/icmp/arp: basically all protocols, you just want them "in there", no special cases.) udp is getting through.

You can see a bit less detail with the "all" rather than the all_scaled plot.

Other than ping it's an utterly perfect fq_codel graph. way to go!

-

while you are here, care to try 500mbit and 900mbit symmetric? :) What's the cpu in the pfsense box? Thx so much for the flent.gz files, you really lost a ton of ping for no obvious reason. Looking at the xml file I don't see anything that does anything but tcp... and we want all protocols to go through the limiter.

For example, a common mistake in the linux world looks like this:

tc filter add dev $DEV parent ffff: protocol ip prio 50 u32 match ip src 0.0.0.0/0 more stuff

which doesn't match arp or ipv6. The righter line is:

tc filter add dev ${DEV} parent ffff: protocol all u32 match u32 0 0
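For context, here is a rough sketch of where that catch-all filter usually sits in a Linux inbound shaper. The interface name `eth0`, the IFB device `ifb0`, the 49mbit rate and the `run` / `ingress_shaper` helper names are all assumptions for illustration, not something from this thread. The filter line spells out the `u32` classifier keyword before `match`, which tc expects. The commands are only printed unless `RUN=1` is set, since they need root.

```shell
#!/bin/sh
# Sketch of Linux inbound shaping via an IFB device.
# Assumed names and values (not from the thread): eth0, ifb0, 49mbit.
DEV="${DEV:-eth0}"
IFB="${IFB:-ifb0}"
RATE="${RATE:-49mbit}"

# Print each command by default; execute it only when RUN=1.
run() {
    if [ "${RUN:-0}" = "1" ]; then "$@"; else echo "$*"; fi
}

ingress_shaper() {
    run ip link add "$IFB" type ifb
    run ip link set dev "$IFB" up
    run tc qdisc add dev "$DEV" handle ffff: ingress
    # "protocol all" plus a u32 match on nothing catches IPv4, IPv6, ARP, etc.
    run tc filter add dev "$DEV" parent ffff: protocol all u32 match u32 0 0 action mirred egress redirect dev "$IFB"
    run tc qdisc add dev "$IFB" root handle 1: htb default 1
    run tc class add dev "$IFB" parent 1: classid 1:1 htb rate "$RATE"
    run tc qdisc add dev "$IFB" parent 1:1 fq_codel
}

ingress_shaper
```

With `protocol ip` in the filter line instead, only IPv4 would be redirected to the shaped device and everything else would bypass it.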

-

Also, you can run that test for 5 minutes: -l 300. If you are also blocking arp, you'll go boom in 2-3 minutes tops.

This is, btw, one of those things that make me nuts about web testers: we've optimized the internet to "speedtest", which runs for 20 seconds. Who cares if the network explodes at T+21? If my users could stand it, I'd double the length of the flent test to 2 minutes, precisely because of the arp blocking problem I've seen elsewhere.....

I run tests for 20 minutes... (ping can't run for longer than that on linux)... hours.... with irtt.... overnight....
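A small sketch of what such a ladder of longer runs might look like. The server address is the one from the first post; the titles, output file names and the `long_runs` helper are made up for illustration, and the irtt line assumes an irtt server is running on that host, which nobody here has said. It only prints the commands.

```shell
#!/bin/sh
# Print (not run) progressively longer test invocations.
# SERVER comes from the first post; titles and file names are hypothetical.
SERVER="172.16.21.76"

long_runs() {
    # 1 minute, 5 minutes, 20 minutes of RRUL
    for secs in 60 300 1200; do
        echo "flent rrul -p all_scaled -l $secs -H $SERVER -t soak_${secs}s -o rrul_${secs}s.png"
    done
    # For longer soaks: irtt client, -i is the send interval, -d the duration.
    echo "irtt client -i 500ms -d 8h $SERVER"
}

long_runs
```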

-

@dtaht Thanks again for your quick responses - I really appreciate your guidance on this.

The floating match filter rules I have, one for WAN out and one for WAN in, are for IPv4 and all protocols so everything should be caught and processed by fq_codel limiter queues. The match rules that place the traffic in the queues start at line 178 of the xml file I attached previously.

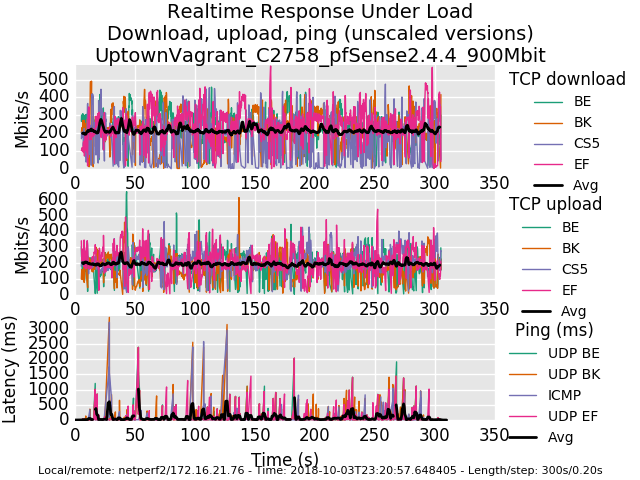

The CPU is an Intel Atom C2758 in the pfSense box. Below I've uploaded the graphs and flent.gz files for 500Mbit and 900Mbit - I ran each test for 5 minutes.

0_1538634767921_rrul-2018-10-03T231012.058529.UptownVagrant_C2758_pfSense2_4_4_500Mbit.flent.gz

0_1538634784575_rrul-2018-10-03T232057.648405.UptownVagrant_C2758_pfSense2_4_4_900Mbit.flent.gz

-

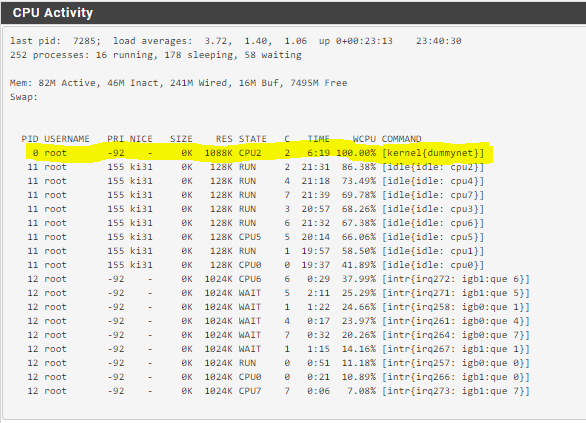

At 900Mbit a core is saturated on this C2758 by the dummynet limiter.

-


@strangegopher a floating match rule for your LAN interface should also do the trick - it works in my experience. That being said I'm also seeing strange behavior with ICMP and limiters applied to WAN when NAT is involved. I plan to test without NAT later today to see if the behavior changes. I believe I remember reading there were issues with limiters and NAT in the past but I thought they were remedied at this point.

-

@uptownVagrant yeah, that works too. For me, I have only 2 interface groups (guest vlans (3 of them) and lan vlans (2 of them)), so it's not hard to apply the rules.

-

I followed jimp's video from the Netgate monthly hangout. On a single WAN setup, fq_codel works. But in my case I have dual WAN, with a separate rule for each of my two WANs. If I enable the fq_codel firewall rule, my internet gets crappy.

```
00001:  24.000 Mbit/s    0 ms burst 0
q65537  50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
 sched 1 type FQ_CODEL flags 0x0 0 buckets 0 active
 FQ_CODEL target 5ms interval 100fs quantum 1514 limit 10240 flows 1024 ECN
   Children flowsets: 1
00002: 764.000 Kbit/s    0 ms burst 0
q65538  50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
 sched 2 type FQ_CODEL flags 0x0 0 buckets 0 active
 FQ_CODEL target 5ms interval 100fs quantum 1514 limit 10240 flows 1024 ECN
   Children flowsets: 2
00003:  12.228 Mbit/s    0 ms burst 0
q65539  50 sl. 0 flows (1 buckets) sched 3 weight 0 lmax 0 pri 0 droptail
 sched 3 type FQ_CODEL flags 0x0 0 buckets 0 active
 FQ_CODEL target 5ms interval 100fs quantum 1514 limit 10240 flows 1024 ECN
   Children flowsets: 3
00004: 764.000 Kbit/s    0 ms burst 0
q65540  50 sl. 0 flows (1 buckets) sched 4 weight 0 lmax 0 pri 0 droptail
 sched 4 type FQ_CODEL flags 0x0 0 buckets 0 active
 FQ_CODEL target 5ms interval 100fs quantum 1514 limit 10240 flows 1024 ECN
   Children flowsets: 4
```

-

@strangegopher said in Playing with fq_codel in 2.4:

- For those experiencing traceroute issues, the fix is to not have a floating rule. Instead add pipes to your lan interface rule to the internet.

So is it safe to say this wouldn't work properly for multiwan setups? If WAN1=1000Mbit and WAN2=50Mbit (e.g.) then piping them both thru the same limiter seems like it would never achieve the correct results.

-

@luckman212 you can create 2 identical rules out to the internet in lan, one for wan1 and other for wan2. But make sure to select the wan interface (gateway) in advanced settings to be wan1 for first rule and wan2 for second rule.

-

@uptownvagrant from the xml file (thx for the guidance) you are running with the default quantum of 1514. Can you retry with 300 to see if the ping problem still exists?

I note that quantum should generally match the mtu - 1518 if you are vlan tagging - and yes, below 40mbit or so, 300 has been a good idea.
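That rule of thumb, written out as a tiny helper. `pick_quantum` is a hypothetical name, and the 40000 Kbps cutoff is just the "40mbit or so" above turned into a number, not a hard limit.

```shell
#!/bin/sh
# pick_quantum RATE_KBPS [vlan]: suggest an fq_codel quantum in bytes.
# Hypothetical helper; the cutoff is a rule of thumb, not a spec value.
pick_quantum() {
    rate_kbps="$1"
    if [ "$rate_kbps" -lt 40000 ]; then
        echo 300     # slow link: smaller quantum
    elif [ "${2:-}" = "vlan" ]; then
        echo 1518    # full Ethernet frame plus 4-byte VLAN tag
    else
        echo 1514    # full untagged Ethernet frame (1500 MTU + 14 header)
    fi
}

pick_quantum 9800     # the 9800Kbps limiter from this thread
pick_quantum 49000    # the 49000Kbps limiter
```

By this rule the 9800Kbps limiter would get 300 and the 49000Kbps one would stay at 1514.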

The other thing I can think of is adding an explicit match rule for icmp as a test.

The other other thing I notice is that the slope changes a bit and the height of the graph is ~3ms on the 500Mbit test rather than about 2ms. This implies there's a great deal of buffering on the rx side of the routing box, and your interrupt rate could be set higher to service fewer packets more often.

Lastly, while you are here, if you enable ecn on the linux client and server also

sysctl -w net.ipv4.tcp_ecn=1

you'll generally see the tcp performance get "smoother". (but I haven't reviewed the patch and don't remember if ecn made it into the bsd code or not)
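To check what a Linux box is currently set to before changing it, something like this sketch works. `describe_ecn` is a hypothetical helper; the meanings of 0, 1 and 2 are the ones given in the Linux kernel's ip-sysctl documentation. It only reads the setting and does not change it.

```shell
#!/bin/sh
# describe_ecn VALUE: explain a net.ipv4.tcp_ecn setting (Linux).
describe_ecn() {
    case "$1" in
        0) echo "ECN disabled" ;;
        1) echo "ECN requested on outgoing and accepted on incoming connections" ;;
        2) echo "ECN accepted on incoming connections only" ;;
        *) echo "unknown tcp_ecn value: $1" ;;
    esac
}

# Report the current setting when run on a Linux host.
if [ -r /proc/sys/net/ipv4/tcp_ecn ]; then
    describe_ecn "$(cat /proc/sys/net/ipv4/tcp_ecn)"
fi
```

A value of 2 is the usual Linux default, which is why the client has to be switched to 1 before it will ask for ECN on the flows it opens.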

-

@luckman212 yes, if you have two different interfaces at two different speeds you should set the limiter separately.
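As a rough illustration of "one limiter per interface", here is a sketch that prints dummynet commands for two separately sized limiters, using the WAN1=1000Mbit / WAN2=50Mbit example from the question. pfSense builds its limiters from the GUI and the commands it actually generates may differ; `limiter_cmds` is a hypothetical helper, and the fq_codel parameters are the usual defaults rather than tuned values.

```shell
#!/bin/sh
# limiter_cmds ID BANDWIDTH: print ipfw/dummynet commands for one fq_codel
# limiter. Sketch only; it prints the commands and does not run them.
limiter_cmds() {
    id="$1"
    bw="$2"
    echo "ipfw pipe $id config bw $bw"
    echo "ipfw sched $id config pipe $id type fq_codel target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ecn"
    echo "ipfw queue $id config sched $id"
}

limiter_cmds 1 1000Mbit/s   # WAN1
limiter_cmds 2 50Mbit/s     # WAN2
```

In practice each WAN would get a pair of these, one per direction, which matches the four schedulers in the output posted earlier.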

-

@uptownvagrant yep, nat can be a major issue for any given protocol, and it would not surprise me if that was the source of the icmp lossage with load. I'm still puzzled about "the burp at the end of the test" thing (which also might be a nat issue, running out of some conntracking resource, etc)

-

@strangegopher cablemodems do ipv6 by default now, so I would stress that future shapers/limiters always match all protocols. Otherwise (see wondershaper must die).... as for your performance closer to the spec, does the limiter have support for docsis framing? The sch_cake shaper does, and we can nail the upstream rate exactly. It's like, 3 lines of code in sch_cake....

-

@dtaht please elaborate, I'm interested in cake.

-

@dtaht Thanks again! I actually tested quantum 300, limit 600, on just the 9800Kbps queue, and then on both, and saw roughly the same behavior with regard to ICMP. I also tried quantum 300, limit 600, on the 9800Kbps queue and quantum 1514, limit 1200, on the 49000Kbps queue without much change either. I also created an explicit ICMP match rule and tested, but there was no significant change. :(

I'll grab a packet capture during limiter saturation to see if ECN is being flagged on the FreeBSD/pfSense box and will enable ECN on the Linux boxes in the lab. I'll also investigate the buffering you mentioned and finally I'll test without NAT.

@strangegopher my $.02: the match rule has the advantage of matching all traffic without having to add the queues to all of your explicit pass rules on the LAN. So for those running a deny-all-except-explicitly-allowed configuration, it can be a little cleaner to set up, with fewer rules to futz with if there are queue changes.

-

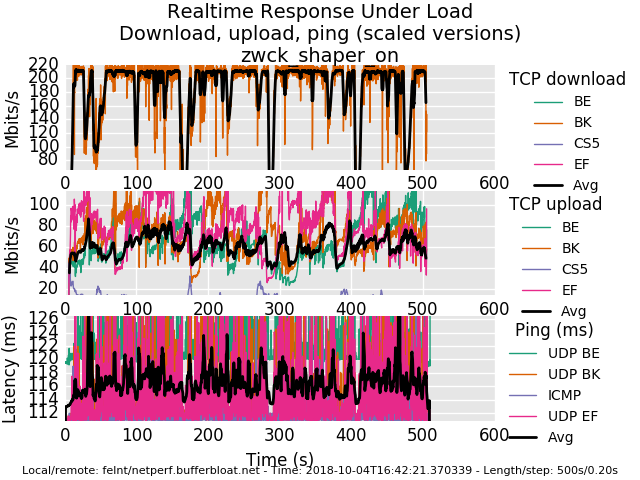

Hey, I played a little bit with flent after setting up a simple shaper.

1Gbit symmetrical, and I used netperf.bufferbloat.net for my tests.

Not sure how useful these results are.

shaper off

shaper 900mbit on

0_1538667306903_rrul-2018-10-04T164221.370339.zwck_shaper_on.flent.gz

0_1538667308924_rrul-2018-10-04T172307.046314.zwck_shaper_off.flent.gz

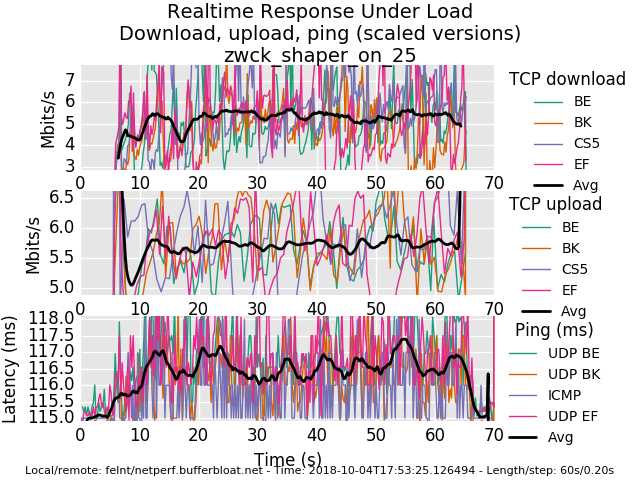

25mbit

0_1538668567021_rrul-2018-10-04T175325.126494.zwck_shaper_on_25.flent.gz

-

@zwck your first result shows you either out of cpu or that server out of bandwidth in both the shaped and unshaped cases at 1gbit. I have not gone to any great extent to get 1gbit+ to work in our cloud. I should probably start pursuing that....

peaking at 400mbit down 160 up unshaped. I imagine it can do either down or up at 1gbit?

It's interesting to see you doing mildly better shaped than unshaped. More bandwidth, lower latency. (still could be my server though). your enormous drops in throughput on the shaped download may be due to overrunning your rx ring, try boosting that and increasing the allowed interrupts/sec, and regardless, your users are experiencing issues at a gbit that you didn't know about, because flent tests up and down at the same time (like your users do), and web tests don't. Win for flent again, go get hw that can do 1gbit in both directions - even unshaped - at the same time!

Correction: it looks like only one download flow started? Is that repeatable? Try rrul_be also (it doesn't set the tos/diffserv bits). That could be another symptom of a nat problem....

your 25mbit result is reasonable (at this rtt), and shows ping working properly. However at this rtt there's not a lot of ping to be had... (irtt tool would be better) or a closer server.

I don't know where in the world you'd get a 115ms baseline rtt to that server??

If you are on the east coast of the us, try flent-newark.bufferbloat.net, west coast: flent-fremont... england: flent-london

-