Playing with fq_codel in 2.4

-

@sensemann FQ_CODEL doesn't give you knobs to tweak individual flows. If you want that, you want ALTQ.

The idea of FQ_CODEL is that it doesn't let any single flow starve out the others, so your live video stream and your VoIP should just keep working even if a user kicks off a large torrent. Given the terrible upload speed, maybe you have a CPU limitation. What hardware are you doing this on?
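To illustrate the "no single flow starves the others" idea, here is a minimal Python sketch of deficit round robin (DRR), the per-flow scheduling half of FQ-CoDel. It is a simplification, not dummynet's code: it leaves out CoDel itself and FQ-CoDel's new-flow/old-flow lists, and the flow names and packet sizes are made up. The 1514-byte quantum matches the "quantum 1514" value that ipfw sched show reports for FQ_CODEL.

```python
from collections import deque

QUANTUM = 1514  # bytes of credit per flow per round ("quantum 1514" in ipfw output)


def drr_schedule(flows, quantum=QUANTUM):
    """Deficit round robin over per-flow queues.

    flows: dict mapping flow name -> list of packet sizes in bytes.
    Returns the flow name of each packet in the order it is sent.
    """
    queues = {name: deque(sizes) for name, sizes in flows.items()}
    deficit = {name: 0 for name in queues}
    active = deque(name for name, q in queues.items() if q)
    sent = []
    while active:
        name = active.popleft()
        deficit[name] += quantum
        q = queues[name]
        # Send packets from this flow while it has enough credit.
        while q and q[0] <= deficit[name]:
            deficit[name] -= q.popleft()
            sent.append(name)
        if q:
            active.append(name)  # still backlogged: revisit next round
        else:
            deficit[name] = 0  # an emptied flow does not bank credit
    return sent


order = drr_schedule({"torrent": [1500] * 20, "voip": [200] * 5})
print(order[:6])
```

With a plain FIFO, the five small VoIP packets would wait behind all twenty torrent packets; with per-flow round robin they go out right after the first torrent packet.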

-

@muppet My hardware is a Netgate SG-2220. There are no other packages running, and the CPU (checked with top over SSH) seems to peak at 25% load at 100 Mbit.

I experimented some more and found that fq_codel on upload with no queue on download seems to give me the best results. As soon as I enable fq_codel for downloads, the bufferbloat reported by dslreports jumps from 100ms to 2000ms under load, and from 15ms to 750ms at idle. This happens even with the limiter set to 30 Mbit. I get similar results with PIE. I'm curious what could cause this. I tried different clients, with the same results.

-

@goodthings It's a good question. I'm unsure how much testing FQ_CODEL has had on the ARM chipsets; maybe it's just not compatible?

EDIT: This is bollocks; the SG-2220 is not ARM-based.

-

@muppet

What ARM chipset are you talking about?

-

@w0w Sorry, I totally farked up. I was conflating this with the SG-3100 post above, which is ARM-based, but @goodthings has an Intel Atom.

I think I will stop posting about stuff I don't fully understand. My apologies, all.

-

@goodthings

Do you have any other shaper enabled simultaneously with limiters?

What packages are you using on the firewall?

Please also post the output of the "ipfw sched show" and "pfctl -sr | grep dnqueue" commands, and the full dslreports test result from when you see those huge jumps.

-

I have a 120/20 connection and just tried the FQ_CODEL approach, but I get a strange result. When I set the upload limiter to 20 Mbit, I get 2 Mbit of effective upload on a speed test (with or without ECN); if I set it to 40 Mbit, I get 4 Mbit. I have found that if I simply use CODELQ, I get better bufferbloat scores on dslreports and actually get the full 20 Mbit upload.

-


I collected all the info, but the forum software (Akismet) flags my posts as spam when I try to attach a dslreports result. This all seems like more trouble than it's worth, so I'm giving up for now. Thank you for trying to help.

-

A few newbie questions. What is the best way to monitor what is going on with the fq_codel limiter: "ipfw sched show" from the command prompt, or Diagnostics > Limiter Info? Or are they the same? The latter seems to provide an additional line of info:

Queues:
q00001 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 AQM CoDel target 5ms interval 100ms ECN
q00002 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 AQM CoDel target 5ms interval 100ms ECN

I guess it is the same information, though.

Can anyone give some information (or point me to a resource elsewhere) on how to interpret the output of "ipfw sched show"? Here is a sample I posted above, but I'm confused about what each part means:

Shell Output - ipfw sched show
00001: 50.000 Mbit/s 0 ms burst 0
q65537 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 1
BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp
0 ip 0.0.0.0/0 0.0.0.0/0 2 2932 0 0 0
00002: 40.000 Mbit/s 0 ms burst 0
q65538 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 2
0 ip 0.0.0.0/0 0.0.0.0/0 2 112 0 0 0

Why "0 buckets 1 active"?

Why 0 flows?

Tot_pkt/bytes, Pkt/Byte, Drp? What does each of these refer to?

If you don't see any Drp (drops?), does that mean fq_codel was not having any effect?
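For the column questions, here is a small Python sketch that splits one BKT row into named fields. My reading of dummynet's flow listing is that Tot_pkt/bytes are cumulative packet and byte counters for the bucket, Pkt/Byte are the packets and bytes queued at that moment, and Drp counts dropped packets; treat that as an assumption to check against ipfw(8). The FlowRow class and parse_flow_row helper are hypothetical names for illustration. Also note that with ECN enabled (as in this output), CoDel marks ECN-capable packets instead of dropping them, so a Drp of 0 does not by itself show that the AQM did nothing.

```python
from dataclasses import dataclass


@dataclass
class FlowRow:
    bucket: int        # BKT
    proto: str         # Prot
    src: str           # Source IP/port (masked)
    dst: str           # Dest. IP/port (masked)
    tot_pkts: int      # Tot_pkt: packets seen by this bucket so far (assumed cumulative)
    tot_bytes: int     # Tot_bytes: bytes seen so far (assumed cumulative)
    queued_pkts: int   # Pkt: packets sitting in the queue right now (assumed)
    queued_bytes: int  # Byte: bytes sitting in the queue right now (assumed)
    drops: int         # Drp: packets dropped


def parse_flow_row(line: str) -> FlowRow:
    """Split one BKT row from 'ipfw sched show' output into named fields."""
    bkt, proto, src, dst, tp, tb, qp, qb, drp = line.split()
    return FlowRow(int(bkt), proto, src, dst, int(tp), int(tb), int(qp), int(qb), int(drp))


# Download row from the torrent sample in this post.
row = parse_flow_row("0 ip 0.0.0.0/0 0.0.0.0/0 164 236431 13 19396 0")
print(row.tot_pkts, row.queued_pkts, row.drops)
```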

What do "target 5ms" and "interval 100ms" refer to?

PS: It would be really cool if there were some kind of dashboard widget to show what's going on with the traffic shaper/limiter in real time.
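On "target" and "interval": in CoDel, target is the standing queue delay it tolerates (5 ms here), and interval is how long the delay must stay above target (100 ms here) before it starts dropping or ECN-marking; once dropping, it drops again after interval/sqrt(count), so drops come faster while the queue stays bloated. The Python sketch below is a heavily simplified model of that logic, assuming one queue-delay sample per millisecond; it omits parts of the real algorithm (count reuse between dropping episodes, the minimum-backlog check, per-packet dequeue) and is not dummynet's code.

```python
import math

TARGET_MS = 5.0      # "target 5ms": acceptable standing-queue delay
INTERVAL_MS = 100.0  # "interval 100ms": how long delay may stay above target


def codel_drop_times(sojourn_ms, step_ms=1.0, target=TARGET_MS, interval=INTERVAL_MS):
    """Return the times (ms) at which a simplified CoDel would drop (or
    ECN-mark) a packet, given one queue-delay sample every step_ms."""
    drops = []
    first_above = None  # deadline: delay must stay above target until then
    dropping = False
    count = 0
    drop_next = 0.0
    for i, delay in enumerate(sojourn_ms):
        now = i * step_ms
        if delay < target:
            # Queue delay is back under target: reset and stop dropping.
            first_above = None
            dropping = False
        elif not dropping:
            if first_above is None:
                first_above = now + interval
            elif now >= first_above:
                # Above target for a whole interval: start dropping.
                dropping = True
                count = 1
                drops.append(now)
                drop_next = now + interval / math.sqrt(count)
        elif now >= drop_next:
            # Still above target: drop again, sooner each time.
            count += 1
            drops.append(now)
            drop_next += interval / math.sqrt(count)
    return drops


# A queue stuck at 20 ms of delay for 400 ms.
print(codel_drop_times([20.0] * 400))
```

For the 400 ms of constant 20 ms delay, this prints drops at 100, 200, 271, 329, and 379 ms: nothing for the first interval, then gaps of 100, 71, 58, and 50 ms.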

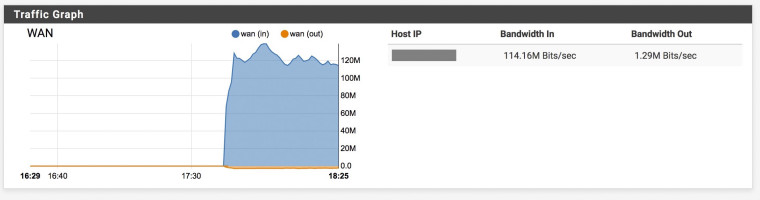

Edit: I also still don't understand the relationship between the bandwidth limit I set and the throughput I actually get. Here is a well-seeded torrent running with the limiters set to 50 Mbit download and 40 Mbit upload:

00001: 50.000 Mbit/s 0 ms burst 0
q65537 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 1
BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp
0 ip 0.0.0.0/0 0.0.0.0/0 164 236431 13 19396 0
00002: 40.000 Mbit/s 0 ms burst 0
q65538 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 2
0 ip 0.0.0.0/0 0.0.0.0/0 43 2132 0 0 0

...and yet the download bandwidth was able to far exceed the limit I set.

-

@goodthings said in Playing with fq_codel in 2.4:

I collected all the info, but the forum software (Akismet) flags my posts as spam when I try to attach a dslreports result. This all seems like more trouble than it's worth, so I'm giving up for now. Thank you for trying to help.

I'm not sure what you are talking about; I just wanted a link like this one: http://www.dslreports.com/speedtest/39412173

-

@bafonso

Can you post your configuration, screenshots, or whatever you like?

You are not the first person to report this misbehavior, but I'm not sure what causes it.

-

Is everybody applying their rules on the WAN side? I was a bit surprised that the hangout applies them on the WAN side. I normally apply them either on the LAN side or via a floating rule on multiple LAN-side interfaces.

-

Almost all of my rules are applied LAN-side, because almost all flows are initiated by hosts on the LAN. But if you run a WAN-facing server (e.g. SSH), where the traffic that initiates a flow comes in on the WAN interface, you'd want to assign it to a limiter queue there. At least, that's my understanding of how it works.

-

Would this also be handy if, for example, one wanted to assign different limiters to each segment? For example:

WAN - 480/480

LAN1 - 90/90

LAN2 - 200/200

etc.

What would happen if the sum of the LANs' bandwidths is more than the WAN's? I would assume that fq_codel should do its magic.

Another handy scenario I can think of:

WAN - 300/300

LAN1 - 10/10 per host/IP

LAN2 - 100/100 per host/IP

etc.

-

Well, in a typical single-WAN scenario, you'd usually only have two limiters: one for upload and one for download. You could divide bandwidth among different LAN segments with limiters, but the only way I'd know to do that would impose hard limits on them. For example, say you have 500Mbps downstream and you set a 200Mbps limiter on LAN1 download and a 300Mbps limiter on LAN2 download. Even if LAN2 is using no bandwidth at all, LAN1 is still only going to be allowed to use 200Mbps.

You could instead add child queues to your limiters with different weights. For example, you have your download limiter with 500Mbps, and you could then make two child queues - say Lan1DownQ and Lan2DownQ, with the weight of the former being 40 and the weight of the latter being 60 (the weights are just proportional and can be anything, but conceptually I find it easiest to have them sum to 100 so that you can interpret them as percentages). Then if you assign all LAN1 download traffic to Lan1DownQ and all LAN2 traffic to Lan2DownQ, if both LAN segments are "maxing out" their download, LAN1 should get about 200Mbps and LAN2 should get about 300Mbps. But if LAN2 is idle, LAN1 should get all 500Mbps.
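The difference between hard per-segment limiters and weighted child queues can be sketched as arithmetic. The Python below compares a hard cap per segment with an idealized work-conserving weighted share (weighted max-min "water-filling") using the 500 Mbps and 40/60 numbers from this post. It models the outcome described above, not dummynet's scheduler code; whether a particular dummynet scheduler (for example FQ_CODEL) actually honors child-queue weights this way is an assumption worth verifying.

```python
def hard_caps(caps, demands):
    """Separate limiters per segment: each gets min(demand, its own cap)."""
    return {q: float(min(demands[q], caps[q])) for q in caps}


def weighted_share(capacity, weights, demands):
    """Idealized work-conserving weighted fair share (water-filling).

    A queue never gets more than it asks for; capacity it leaves unused is
    redistributed to still-hungry queues in proportion to their weights.
    """
    alloc = {q: 0.0 for q in weights}
    hungry = {q for q in weights if demands[q] > 0}
    remaining = float(capacity)
    while hungry and remaining > 1e-9:
        total_w = sum(weights[q] for q in hungry)
        offers = {q: remaining * weights[q] / total_w for q in hungry}
        # Queues whose remaining demand fits inside their offer are satisfied.
        done = {q for q in hungry if demands[q] - alloc[q] <= offers[q]}
        if not done:
            # Everyone wants more than their share: split what is left by weight.
            for q in hungry:
                alloc[q] += offers[q]
            break
        for q in done:
            remaining -= demands[q] - alloc[q]
            alloc[q] = float(demands[q])
        hungry -= done
    return alloc


weights = {"Lan1DownQ": 40, "Lan2DownQ": 60}
busy = {"Lan1DownQ": 1000, "Lan2DownQ": 1000}  # both segments maxing out
lan2_idle = {"Lan1DownQ": 1000, "Lan2DownQ": 0}

print(weighted_share(500, weights, busy))        # about 200 / 300
print(weighted_share(500, weights, lan2_idle))   # LAN1 gets all 500
print(hard_caps({"Lan1DownQ": 200, "Lan2DownQ": 300}, lan2_idle))  # LAN1 stuck at 200
```

The weights only matter when both segments compete; when one is idle, the other can use the whole limiter, unlike the hard-cap setup.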

-

@w0w said in Playing with fq_codel in 2.4:

Can you post your configuration, screenshots, or whatever you like?

You are not the first person to report this misbehavior, but I'm not sure what causes it.

I'd happily revert to that configuration and post whatever you'd like. For FQ_CODEL, I set it up using the method described in the YouTube video, i.e., using limiters and then floating rules to catch all the WAN traffic. It's really bizarre behavior: ipfw sched show reported the right pipe throughputs, but somehow I was only getting 10% of that. I got the same results over a wired connection and over Wi-Fi.

-

It makes sense to me to apply the rules on the WAN side, because then my OpenVPN connections are also mixed into the pool of shared bandwidth. That way, someone at home downloading a torrent doesn't impact my ability to VPN in remotely and move files and data around.

-

@thenarc said in Playing with fq_codel in 2.4:

Well, in a typical single-WAN scenario, you'd usually only have two limiters: one for upload and one for download. You could divide bandwidth among different LAN segments with limiters, but the only way I'd know to do that would impose hard limits on them. For example, say you have 500Mbps downstream and you set a 200Mbps limiter on LAN1 download and a 300Mbps limiter on LAN2 download. Even if LAN2 is using no bandwidth at all, LAN1 is still only going to be allowed to use 200Mbps.

You could instead add child queues to your limiters with different weights. For example, you have your download limiter with 500Mbps, and you could then make two child queues - say Lan1DownQ and Lan2DownQ, with the weight of the former being 40 and the weight of the latter being 60 (the weights are just proportional and can be anything, but conceptually I find it easiest to have them sum to 100 so that you can interpret them as percentages). Then if you assign all LAN1 download traffic to Lan1DownQ and all LAN2 traffic to Lan2DownQ, if both LAN segments are "maxing out" their download, LAN1 should get about 200Mbps and LAN2 should get about 300Mbps. But if LAN2 is idle, LAN1 should get all 500Mbps.

Very interesting. What I normally do (since my clients don't get preferential treatment) is the following:

I have one limiter with one queue each for upload and download, and I set each limiter to 90% of the achievable bandwidth. For the queues and the limiters, I set a "MASK" that covers all my LAN-side networks (I think the term is "supernet"). Then I apply the limiters via a floating rule whose source is an alias containing all the LAN-side networks. Effectively, this leads to all the LANs sharing the total bandwidth configured for each limiter.
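If you need to work out which single prefix covers all your LAN-side networks, Python's standard ipaddress module can find the smallest covering supernet by widening the first network until it contains the rest. This is only a helper for the prefix arithmetic; the network list below is hypothetical, and how that prefix should be entered as a limiter mask in the GUI is up to your setup.

```python
import ipaddress


def covering_supernet(networks):
    """Smallest single prefix that contains every given network (same IP version)."""
    nets = [ipaddress.ip_network(n) for n in networks]
    candidate = nets[0]
    # Widen the prefix one bit at a time until every network fits inside it.
    while not all(candidate.supernet_of(n) for n in nets):
        candidate = candidate.supernet()
    return candidate


# Hypothetical LAN-side networks.
print(covering_supernet(["192.168.1.0/24", "192.168.2.0/24"]))  # 192.168.0.0/22
```

Note that the covering prefix can be wider than you expect: 192.168.1.0/24 and 192.168.2.0/24 straddle a /23 boundary, so the smallest prefix holding both is a /22.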

I'll try your way when I have some time. Maybe it's more efficient.

-

I can tell you the way I set up my network (a simple single-WAN, single-LAN setup), which may or may not be what you want. I have a 100/10 connection, so I have two limiters: DownloadLimiter and UploadLimiter, with bandwidth limits of 100Mbps and 10Mbps respectively. Neither has a mask set.

DownloadLimiter has two child queues: DownloadQueueDefault - with a weight of 30 - and DownloadQueueHighPriority - with a weight of 70. I have a 32-bit Destination mask set on each, which means that each host will get its own queue as opposed to all download traffic from all LAN hosts being dumped into a single queue. The theory behind that - although I must admit that my understanding at this level is limited - is that the available bandwidth will be shared more equitably among all hosts that way.

UploadLimiter also has two child queues: UploadQueueDefault - with a weight of 30 - and UploadQueueHighPriority - with a weight of 70. I have a 32-bit Source mask set on each, for the same reasons as described above.
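The effect of a 32-bit mask, as described above, can be sketched by grouping packets on their masked address: with a /32 mask every LAN host lands in its own dynamic queue, while with no mask (/0) everything collapses into one. This Python sketch models only the address part of a mask and uses hypothetical addresses; dummynet's real flow ID also covers ports and protocol.

```python
import ipaddress
from collections import defaultdict


def bucket_by_mask(packets, prefixlen, field="dst"):
    """Group packets into per-key queues using a masked IPv4 address.

    packets: list of dicts with 'src' and 'dst' IPv4 strings.
    field: 'dst' for download queues, 'src' for upload queues.
    prefixlen 32 -> one queue per host; prefixlen 0 -> a single shared queue.
    """
    mask = (0xFFFFFFFF << (32 - prefixlen)) & 0xFFFFFFFF
    queues = defaultdict(list)
    for pkt in packets:
        addr = int(ipaddress.IPv4Address(pkt[field]))
        key = str(ipaddress.IPv4Address(addr & mask))
        queues[key].append(pkt)
    return dict(queues)


# Hypothetical download packets for three LAN hosts.
packets = [
    {"src": "203.0.113.5", "dst": "192.168.1.10"},
    {"src": "203.0.113.5", "dst": "192.168.1.10"},
    {"src": "198.51.100.7", "dst": "192.168.1.11"},
    {"src": "198.51.100.7", "dst": "192.168.1.12"},
]
print(sorted(bucket_by_mask(packets, 32)))  # one queue per LAN host
print(sorted(bucket_by_mask(packets, 0)))   # ['0.0.0.0']: everything in one queue
```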

The theory here is perhaps best illustrated with an example. Suppose I have four hosts: A, B, C, and D. A and B are assigned to my "Default" upload and download queues and C and D are assigned to my "HighPriority" upload and download queues. For simplicity, let's consider only download, and assume that all four hosts are attempting to download as fast as they can. What should happen, to the best of my knowledge, is that four dynamic download queues are created (because of the mask setting), one per host. The two queues for A and B should get about 30% of my total download, which should be roughly shared between them (i.e. 15% each). Similarly, the two queues for C and D should get about 70% of my total download, which should also be roughly shared between them (i.e. 35% each).
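The arithmetic in that four-host example can be written out directly. This Python sketch follows the model stated above (all hosts busy, each queue's weighted share split evenly among the hosts assigned to it); it is a worked example of that stated theory, not a claim about exactly how dummynet distributes bandwidth among dynamic queues.

```python
def per_host_share(total, groups):
    """Split `total` among queue groups by weight, then evenly among hosts.

    groups: name -> (weight, [hosts]); every listed host is assumed busy.
    """
    total_w = sum(w for w, hosts in groups.values() if hosts)
    shares = {}
    for w, hosts in groups.values():
        if not hosts:
            continue  # an empty group takes no share
        group_share = total * w / total_w
        for h in hosts:
            shares[h] = group_share / len(hosts)
    return shares


# Percent of total download, using the example's weights and hosts.
print(per_host_share(100, {"Default": (30, ["A", "B"]), "HighPriority": (70, ["C", "D"])}))
# {'A': 15.0, 'B': 15.0, 'C': 35.0, 'D': 35.0}
```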