RAM Disk enabled, but still constant writes to disk…

-

There is no way that a modern SSD would die after such a short period of time with only several TB written. It was defective.

-

@KOM I mistakenly wrote "several"; it was a lot more than that. It was a lower-cost drive, and I suspect it died so fast because it lacked a DRAM cache, so it was doing thousands of small writes per day (if not more; my disk light was blinking all the time when the pfSense VM was running). Having to write a whole block for a pointlessly small write will kill a cell just as fast as a full cell write.

TLC memory won't take much of that when you write dozens of TB to a 128 GB disk over the course of a couple of months. Hell, my barely used Samsung 850 Pro 256 GB is already at 61 TB of total writes because of this damn thing. It was barely used before I put it in the server, and it literally only has ESXi with FreeNAS, pfSense, and a couple of other small VMs on it. The only one that did any writing to the disk was the pfSense one.
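To put those numbers in perspective, here is a rough Python sketch of the arithmetic. It assumes decimal units (as drive vendors use) and, for the small-write point, a worst-case model in which every host write is rounded up to a whole NAND program unit. The 16 KiB program-unit size is a hypothetical figure, and real controllers (especially ones with a DRAM or SLC cache) buffer and merge writes, so actual amplification is usually far lower.

```python
# Rough endurance arithmetic for the figures quoted in this post.
# Decimal units (10**9, 10**12), as drive vendors use.
GB = 10**9
TB = 10**12


def full_drive_writes(total_written_bytes: float, capacity_bytes: float) -> float:
    """Number of times the drive's whole capacity has been written."""
    return total_written_bytes / capacity_bytes


def worst_case_write_amplification(host_write_bytes: int, program_unit_bytes: int) -> float:
    """NAND bytes written per host byte if every host write is rounded up to
    whole program units. Worst-case model only: real controllers buffer,
    merge and remap writes, so actual amplification is usually much lower."""
    units = -(-host_write_bytes // program_unit_bytes)  # ceiling division
    return units * program_unit_bytes / host_write_bytes


if __name__ == "__main__":
    # 61 TB written to the 256 GB Samsung 850 Pro mentioned above.
    print(f"{full_drive_writes(61 * TB, 256 * GB):.0f} full-drive writes")
    # A 512-byte write against a HYPOTHETICAL 16 KiB program unit.
    print(f"{worst_case_write_amplification(512, 16 * 1024):.0f}x amplification")
```

Under this model a full-size write costs nothing extra, while a tiny write costs a whole program unit, which is the effect described above.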

-

Make sure your root is mounted 'noatime'. Check with mount -p. Edit the fstab and reboot to apply it if it's not. https://redmine.pfsense.org/issues/9483
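A minimal Python sketch of that check, assuming you paste in the text that mount -p prints (FreeBSD prints it in fstab(5) format: device, mount point, filesystem type, options, dump, pass). The helper names are hypothetical and the device name in the sample is made up. This fstab approach applies to UFS installs; on ZFS the equivalent setting is the dataset's atime property.

```python
def root_has_noatime(mount_p_output: str) -> bool:
    """True if the filesystem mounted on / lists 'noatime' among its options.

    Expects fstab(5)-style lines: device, mount point, type, options, dump, pass.
    """
    for line in mount_p_output.splitlines():
        fields = line.split()
        # Skip blank lines, comments, and anything that is not the root mount.
        if len(fields) >= 4 and not fields[0].startswith("#") and fields[1] == "/":
            return "noatime" in fields[3].split(",")
    raise ValueError("no entry for / found")


def add_noatime(fstab_line: str) -> str:
    """Return the fstab line with 'noatime' added to its options (idempotent)."""
    fields = fstab_line.split()
    options = fields[3].split(",")
    if "noatime" not in options:
        options.append("noatime")
    fields[3] = ",".join(options)
    return "\t".join(fields)


if __name__ == "__main__":
    # HYPOTHETICAL sample; paste in what `mount -p` prints on your own system.
    sample = "/dev/ada0p2\t/\tufs\trw\t1\t1\ndevfs\t/dev\tdevfs\trw\t0\t0\n"
    if not root_has_noatime(sample):
        root_line = sample.splitlines()[0]
        print("root lacks noatime; suggested fstab line:")
        print(add_noatime(root_line))
```

The second helper only rewrites one line in memory; you would still edit /etc/fstab yourself and reboot, as described above.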

Hard to imagine it writing that much though.

Steve

-

@stephenw10 It's not that this issue was writing a lot of data; it was the number of writes per minute of little chunks. I'm 99% sure the zero-swap install fixes the problem, and that swap was the root cause. The whole point of having the RAM disk enabled was to save flash-based storage, and for some reason it just wrote all the RAM disk info to swap as it came in (that's my suspicion; I didn't look too much into the data being written, other than that it was syncer doing the writing, all the time). It could be some interaction between ESXi and pfSense, since it doesn't seem to be a very widely reported issue.

While testing I tried reinstalls (default, with swap), killing all the logging I could find, removing all packages (even VM tools, just in case), changing RAM disk settings, etc. It just kept writing away, 24/7, until I did the no-swap install. :D The no-swap install used a restore of the exact same config I was using.

-

Hmm, interesting. I usually install without swap anyway on devices running from flash. However I would not on an SSD and I've never noticed drive writes anywhere near that. Did it actually show swap being used?

Steve

-

@stephenw10 I'm not actually sure. I was really in "just try things to get this damn thing to stop" mode, so I didn't look too much into what exactly it was doing other than calling syncer constantly. I have no idea why it would care about swap; it had 8 GB of memory (later 32 GB, just to test), and it was still writing. Note that I use ZFS; I believe I tried a UFS install a while back to test and it still did it.

I made a new VM and installed it. Disk writes. I restored my config and it was still writing to the disk. When I made a VM and installed without swap, the writes went away both before and after I restored my config. Like I said in another reply, I don't think this is very widespread, so I would like to hear from the original poster, since they seemed to have the exact same problem.

-

Hmm, interesting catch. Yeah 61TB in 2 months is waaaaay outside the range of anything I've seen. Even those systems that were not mounted noatime for a while.

Steve

-

Just providing some input to this thread because it very nicely captured a problem I am experiencing (or have experienced?).

I have a 2.4.4 pfSense system running on Proxmox Virtual Environment (6.1-3), and I was surprised to see that my SSD's Wear Leveling SMART value (a 128 GB LiteOn M.2 SATA SSD, consumer-grade stuff) dropped 4% in about 3 weeks. So I started to investigate optimizing Proxmox and pfSense to reduce writes to the drive.

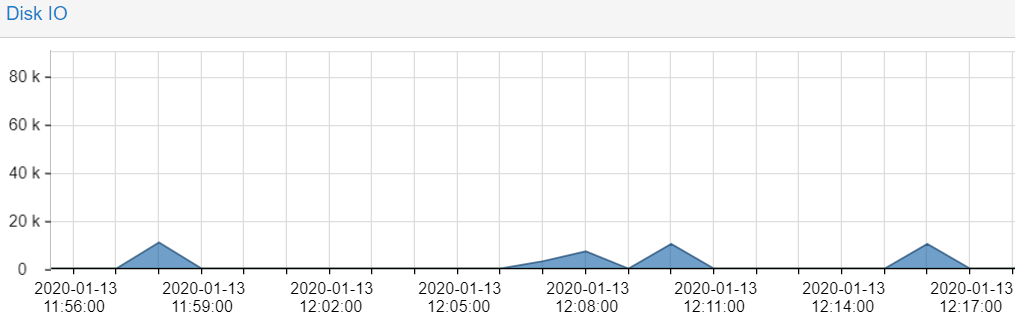

On the pfSense side, I have been observing regular writes on the hypervisor (via iotop -a --only), which show that the kvm process for the pfSense VM is writing to the disk rather constantly. Proxmox history shows it's around 10k on average:

On my 2.4.4 setup I have RAM disk enabled. I have a constant connection to an OpenVPN server and not much else.

Based on the observations in this thread, I reinstalled pfSense with a manually partitioned drive where I deleted the swap partition (and enabled TRIM on the virtio-based disk).

After reinstalling I still observed writes on the VM, so I enabled noatime as well on the root mount.

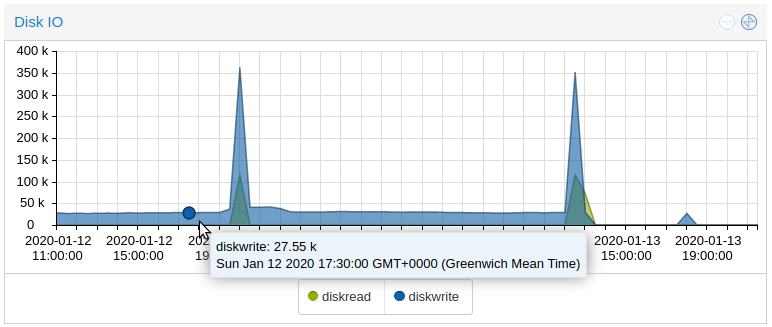

In this plot you can sort of see the effects of that (it shows up around 22:50-22:55 on the plot).

Now the writes are not so constant, but there are still a few periodically.

Based on the change observed with the noatime setting vs. reinstalling without swap, I'm not sure the swap was the problem. It would be interesting to know if PeterBrockie's successful setup included the noatime mount option.

I am definitely not in the range where the drive use is going to kill my SSD, but I think it's worth noting that such writes do exist when in theory we might expect no writing at all. I'm still trying to understand what the other writes are. I'll try not to log into the pfSense system for a while and see what the hypervisor detects.

Thanks to everyone who contributed to this post.

-

Mmm, interesting. I too have a Proxmox system here with at least two pfSense VMs running in it continually. I also see 20-30k drive writes in each. I've set noatime manually now; I'll let you know.

The smartctl status from it is interesting:

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)
Critical Warning: 0x00
Temperature: 32 Celsius
Available Spare: 100%
Available Spare Threshold: 10%
Percentage Used: 0%
Data Units Read: 696,237 [356 GB]
Data Units Written: 1,119,658 [573 GB]
Host Read Commands: 3,490,850
Host Write Commands: 11,895,271
Controller Busy Time: 66
Power Cycles: 10
Power On Hours: 341
Unsafe Shutdowns: 2
Media and Data Integrity Errors: 0
Error Information Log Entries: 0
Warning Comp. Temperature Time: 0
Critical Comp. Temperature Time: 0
Temperature Sensor 1: 32 Celsius
Temperature Sensor 2: 32 Celsius

Error Information (NVMe Log 0x01, max 64 entries)
No Errors Logged

I'm not totally sure about that, since the power-on hours seem low; I've had that running for significantly longer than 2 weeks.
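Per the NVMe specification, "Data Units Written" counts units of 1000 × 512 bytes (rounded up), which is how 1,119,658 units becomes the [573 GB] smartctl shows. A small Python sketch (helper names are hypothetical) turns those counters into an average write size and an average rate. The rate takes Power On Hours at face value, which is exactly the figure in doubt here, and it covers every write to that drive, not just the pfSense VMs.

```python
# NVMe "Data Units Written" is reported in thousands of 512-byte units.
NVME_DATA_UNIT_BYTES = 1000 * 512


def data_units_to_bytes(units: int) -> int:
    """Convert an NVMe data-unit counter to bytes."""
    return units * NVME_DATA_UNIT_BYTES


def summarize_writes(data_units_written: int, host_write_commands: int,
                     power_on_hours: float) -> dict:
    """Average write size and average write rate implied by the SMART counters."""
    written = data_units_to_bytes(data_units_written)
    return {
        "written_gb": written / 1e9,
        "avg_bytes_per_write_command": written / host_write_commands,
        "avg_kb_per_second": written / (power_on_hours * 3600) / 1e3,
    }


if __name__ == "__main__":
    # Counters copied from the smartctl output above.
    stats = summarize_writes(1_119_658, 11_895_271, 341)
    for name, value in stats.items():
        print(f"{name}: {value:,.1f}")
```

Taken at face value, that is roughly 48 kB per write command and about 467 kB/s averaged over the reported hours.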

Steve

-

@emobo I have a ZFS pool and haven't touched atime. As far as I can tell my specific disk write problem was solely an interaction between swap and the VM. Despite having plenty of free RAM and having all logs go to RAMdisks, it still wrote something to the swap constantly.

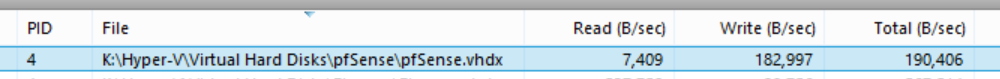

After removing swap:

-

@stephenw10

Ah, sounds familiar. Is that smartctl status output from your Proxmox Debian host or the pfSense FreeBSD VM? I don't know the right command-line arguments to get it from the pfSense VM, and I'm not sure it can be accessed there.

@PeterBrockie

Thanks, that is impressive, and it's over the last hour so the resolution is high. That is exactly what I would hope to achieve as well. I have no swap now, so I'm unsure what else could be causing it.

I have more history now in my host log and it still shows activity:

Focusing on the last hour it's still showing periodic writes.

There is an old thread here from back in 2012 https://forum.netgate.com/topic/130424/ram-disk-enabled-but-still-constant-writes-to-disk

-

Anything from 2012 is largely irrelevant at this point.

I only have 2.4.5 and 2.5 snapshots running but the results were broadly similar. Very basic installs.

Mounting root noatime produces ~50% decrease in drive writes. ~30kBps to ~18kBps.

Enabling RAM drives reduces it to 0 most of the time. There are obviously still some writes when the config updates etc.
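To relate a sustained rate to daily totals, here is a short Python sketch. It assumes "kBps" means kilobytes (10^3 bytes) per second and treats the "2 months" mentioned earlier in the thread as 60 days; the function names are illustrative only.

```python
SECONDS_PER_DAY = 86_400


def bytes_per_day(rate_bytes_per_second: float) -> float:
    """Total bytes written in one day at a constant rate."""
    return rate_bytes_per_second * SECONDS_PER_DAY


def sustained_rate(total_bytes: float, days: float) -> float:
    """Constant rate (bytes/second) needed to write total_bytes in the given days."""
    return total_bytes / (days * SECONDS_PER_DAY)


if __name__ == "__main__":
    for label, rate in [("default mount", 30e3), ("noatime", 18e3)]:
        per_day = bytes_per_day(rate)
        print(f"{label}: {per_day / 1e9:.2f} GB/day, {per_day * 365 / 1e12:.2f} TB/year")
    print(f"61 TB in 60 days: {sustained_rate(61e12, 60) / 1e6:.1f} MB/s")
```

At those rates, ~30 kBps is about 2.6 GB/day and ~18 kBps about 1.6 GB/day, while 61 TB in 60 days needs a sustained ~11.8 MB/s, several hundred times more.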

Steve

-

Thanks - yes the noatime has a noticeable effect.

I'm puzzled how PeterBrockie's configuration could be so quiet while the other setups still have regular activity.

As a test, I tried disabling local logging, but it seems to have little to no effect. This makes sense if the logs were being written to the RAM disk anyway.

-

Pardon the interruption, but is this a Proxmox, VM, SSD or swap specific issue?

-

@provels That's what we are trying to figure out. I am running VMware and it killed an SSD in no time. Disabling swap fixed it for me but not for others, so we are trying to figure out exactly what it is.

-

It's not VM specific; it's just far easier to see the disk IO in a VM. The actual cause of the OP's issue, where he had to remove swap, is a mystery. I could not replicate it.

-

@stephenw10 I personally didn't have the problem outside a VM. I was running pfSense for years and years on a small 32 GB SSD, which would have failed 10 times over at the rate it killed my larger drive. The little drive passed SMART tests etc. and is still going.

Same config file (although I did test a fresh install).

-

Without noatime set I have seen some high drive write numbers, much higher than I expected. I've yet to see anything kill a drive though. At least not with drive writes alone.

With RAM drives enabled I'm seeing effectively 0 drive writes until I save a change etc. I think that's the same as you are pretty much.

Steve

-

@PeterBrockie Well, FWIW, with noatime, ramdisks, and swap enabled I see no disk activity at all on my pfSense VM VHDX in Hyper-V (2012R2).

Without noatime, but otherwise the same, as below.

-

It would seem very strange if this was caused by the choice of hypervisor. I'm less familiar with the other hypervisors: does anyone know if Proxmox is the only one that uses the virtio block device for the hard disk? If the issue is VM-host related, perhaps that plays a part?

@stephenw10 The writes I'm curious about are, I believe, not initiated by me directly: I am purposely trying to avoid touching the pfSense system while they are occurring. I don't log in or make any changes to the environment; it should be just routing (and logging). I can accept that a few jobs will run on timers (e.g. the RAM disk is saved to disk periodically, but I have that set to 24 hours), but I am surprised that anything would be so frequent.

I do find this truly intriguing. To me, this is less about killing SSDs than it is about not really having a good handle on what the system is doing. These are security-focused platforms, so it would be ideal if an administrator could make sense of what's happening.

I wonder if an experiment like this would work: on a test pfSense install, can we remount the / partition read-only (ro) and see what gets upset? It might be time to start breaking out more VMs...