[SOLVED] Virtio NIC Performance - High CPU Usage

-

Solved - I disabled ntopng and throughput went back up to 1gbps as expected.

===========================

Hi All,

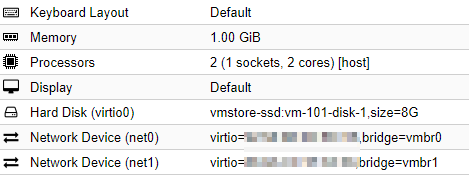

I've been trying to chase down an issue I am having with pfsense and virtio NICs. (Note: my instance of pfsense v2.4.4 is virtualized using proxmox 5.2-8.) Below is what I see when trying to transfer large files between subnets:

12 root -92 - 0K 432K CPU1 1 0:38 86.96% [intr{irq260: virtio_pci2}]

If I run top directly instead of looking at "System Activity" in the webUI, that thread is pegged at 100%. I'm only getting about 400 mbps instead of the expected gigabit. The above results occur with various types of usage scenarios (NFS, iperf, etc.).
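For anyone comparing their own numbers, here is a minimal Python sketch that pulls the interrupt thread's CPU share out of a top line like the one above. The column order is assumed from that single line (what I'd expect from `top -SH` on FreeBSD), and `parse_top_thread` is a made-up helper name, not part of any tool.

```python
import re

def parse_top_thread(line):
    """Split one thread line from top into named fields.

    Assumed column order (taken from the sample line in this thread):
    PID USER PRI NICE SIZE RES STATE C TIME WCPU COMMAND
    """
    fields = line.split(None, 10)  # COMMAND may contain spaces, so cap the split
    if len(fields) != 11:
        raise ValueError("unexpected top line layout: %r" % line)
    pid, user, pri, nice, size, res, state, cpu, time_, wcpu, command = fields
    info = {
        "pid": int(pid),
        "state": state,
        "cpu": int(cpu),
        "wcpu": float(wcpu.rstrip("%")),
        "command": command,
        "irq": None,
        "device": None,
    }
    # Interrupt threads look like "[intr{irq260: virtio_pci2}]"
    match = re.match(r"\[intr\{(irq\d+): (\S+)\}\]", command)
    if match:
        info["irq"], info["device"] = match.group(1), match.group(2)
    return info

sample = "12 root -92 - 0K 432K CPU1 1 0:38 86.96% [intr{irq260: virtio_pci2}]"
thread = parse_top_thread(sample)
print(thread["device"], thread["irq"], thread["wcpu"])
```

If the device name that comes back is the virtio NIC and the share sits near 100 on one core, the bottleneck is a single interrupt thread rather than overall CPU.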

Things I have tried:

- Disabling hardware checksum offload

- Disabling hardware checksum offload at the NIC level in pfsense VM via sysctl (hw.vtnet.csum_disable=1)

Things I have tried for comparison purposes:

- Same test on latest opnsense (I think they are on 11.1 of freebsd), same VM config - Transfer at wirespeed, much lower cpu usage

- Vanilla Freebsd 11.2 VM, same VM config, on the same subnet (no routing occurring) - Transfer at wirespeed

- iperf server running on pfsense (shouldn't require routing) - ~400 mbps

- pcie passthrough of NIC to pfsense - Slightly better results, but still only ~550-600mbps (using em driver for my NIC)

Does anyone have any suggestions on how I can further troubleshoot? Is anyone else experiencing this?

-

That interrupt load is where you see the load generated by pf itself. If you're also seeing that restriction on a connection to the firewall using iperf, try disabling pf to make sure it's not something to do with the hypervisor. You will lose NAT doing that, and obviously you never want to run with pf disabled except as a test.

`pfctl -d` at the command line to disable it. `pfctl -e` or make any change in the GUI to re-enable it.

Steve
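Since forgetting to turn pf back on is the real risk with this test, here is a rough Python sketch of the disable/test/re-enable sequence. It assumes Python 3 is available on the firewall (a stock install may not have it) and that it runs as root; `with_pf_disabled` and the `run` parameter are hypothetical names for illustration. Only `pfctl -d` and `pfctl -e` come from the post above.

```python
import subprocess

def with_pf_disabled(test, run=subprocess.run):
    """Disable pf, call test(), and re-enable pf afterwards no matter what.

    `test` is any callable that performs the throughput measurement.
    `run` is injectable so the sequence can be dry-run without touching pf.
    """
    run(["pfctl", "-d"], check=True)  # pf off: filtering and NAT both stop
    try:
        return test()
    finally:
        # Runs even if test() raises, so the firewall is not left open
        run(["pfctl", "-e"], check=True)

if __name__ == "__main__":
    # Dry run: print the commands instead of executing them
    def show(cmd, check=True):
        print(" ".join(cmd))

    with_pf_disabled(lambda: print("(run iperf from a client here)"), run=show)
```

The try/finally is the point of the sketch: the re-enable step still happens when the test itself fails or is interrupted.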

-

Hi @stephenw10 ,

Thanks for the reply. After disconnecting my WAN temporarily, I tried to disable pf and run the test again. Routing between two subnets, performance was slightly better (~500mbps), but again that saturated a single core. (I made sure to re-enable pf.)

I'm not sure what else I should try either at the NIC level or the hypervisor level. As mentioned, a vanilla FreeBSD 11.2 VM with the same NIC config in proxmox sent 1gbps easily (which was bottlenecked by the switch my physical client was connected to).

-

What are you hosting this on? What resources have you given pfSense?

Steve

-

It is an older used desktop.

(The opnsense test and vanilla freebsd test were performed on the same machine.)

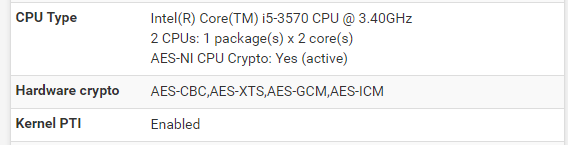

Intel(R) Core(TM) i5-3570 CPU (3.40 GHz / 3.8 turbo)

8gb Memory

HP NC364T PCI Express Quad Port Gigabit Server Adapter (Two Intel 82571EB chipsets)

TL-SG108E switch

Proxmox 5.2-8

Pfsense 2.4.4-RELEASE (amd64)

built on Thu Sep 20 09:03:12 EDT 2018

FreeBSD 11.2-RELEASE-p3

-

Could you please share the solution that solved your issue?

I'm having the same problem right now with a server that was running ESXi before.

EDIT: sorry, I missed the first line :) No ntopng on my pfsense so it must be something else...