General system failure (still)

-

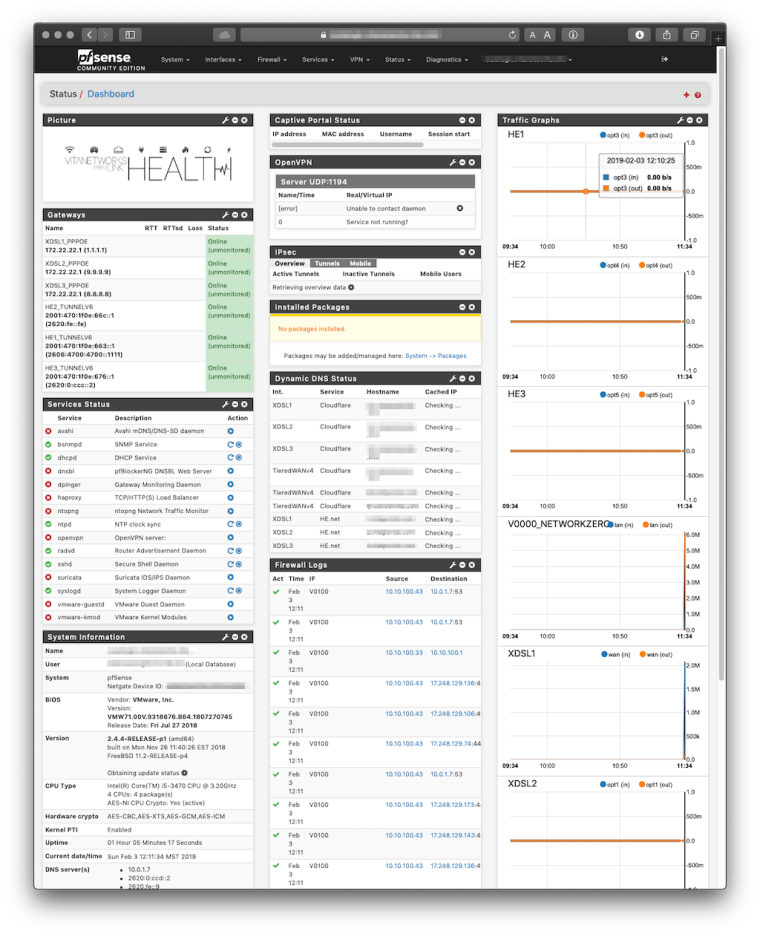

Since 2.4.4 I've been dealing with this problem where pfSense becomes drastically slower when changes are made, and drops massive amounts of connections when it reaches this point. Over the last three days I felt it taking a little more time between config changes, but as of yesterday it needs a reinstallation. I actually need to reinstall from ISO; restoring the defaults isn't enough.

I've installed bare-metal on several systems, and even took one and started swapping out components to see if I had better luck, but not a chance. I've also run the system on three different hypervisors and eventually it gets to this point.

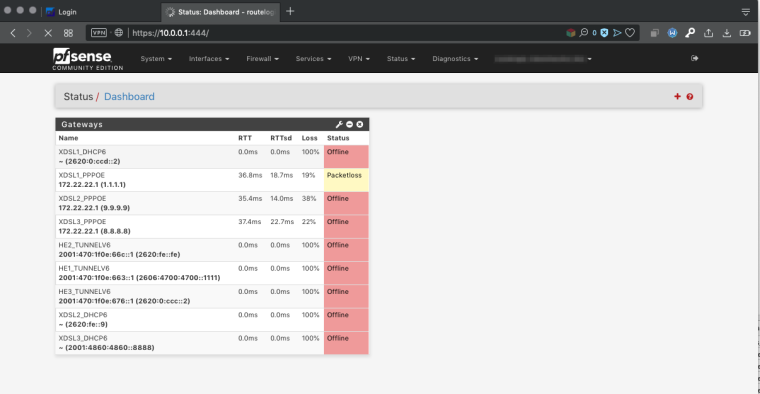

At first I thought it was related to OpenVPN tunnels since it was a sure way to trigger this: just add some OpenVPN tunnels, let it run, and in a short time the system would be unusable. I was then using external appliances to handle tunnels. Now what I've noticed is that an offline gateway seems a possible culprit, since I don't know what else to analyze now that I've already ruled out hardware.

Other than a rather high php-fpm, there's no other indicator I'm aware of that's out of place. php-fpm does take high CPU when changes are made but doesn't saturate all cores in the system, memory is less than a quarter occupied from the total assigned, MBUF also near nothing utilized, disks, [/] 5% and [/var/run] 5%. State tables, near empty too.

When I run netstat on console I get several long pauses on the lines that read TIME_WAIT under the state column. To be honest I don't have the slightest clue what this command is for, I'm just looking for metrics. When I make changes, in the state the system is in now, it hangs for a while, then the browser returns the page saying the server did not respond; after a few retries it finally responds, but shortly after it stops responding for about 3 minutes. Active Internet connections break, even bursty ones like YouTube. Inter-VLAN routing is dropping every few seconds now, e.g. an RDP session will reconnect over and over between hosts on different subnets, even with low network traffic.
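For anyone wanting to turn that netstat output into an actual metric, here is a minimal Python sketch that counts TCP connections per state from saved `netstat -an` text. The function name `count_tcp_states` and the sample rows are made up for illustration, and it assumes the usual six-column TCP layout with the state in the last column. Note that the `-n` flag skips name resolution, which is one common reason netstat pauses while printing; whether that is what happened here is only a guess.

```python
from collections import Counter


def count_tcp_states(netstat_output: str) -> Counter:
    """Count TCP connections per state from `netstat -an` style text."""
    states = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        # TCP rows: proto, recv-q, send-q, local address, foreign address, state
        if len(fields) >= 6 and fields[0].startswith("tcp"):
            states[fields[5]] += 1
    return states


# Hypothetical sample rows, not taken from the affected firewall.
sample = """\
Proto Recv-Q Send-Q Local Address          Foreign Address        (state)
tcp4       0      0 192.168.1.1.443        192.168.1.10.51234     TIME_WAIT
tcp4       0      0 192.168.1.1.22         192.168.1.10.51000     ESTABLISHED
"""

for state, count in count_tcp_states(sample).most_common():
    print(f"{state:<12} {count}")
```

Saving the output a few minutes apart and comparing the TIME_WAIT counts would show whether connections are piling up or the pauses are just display delays.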

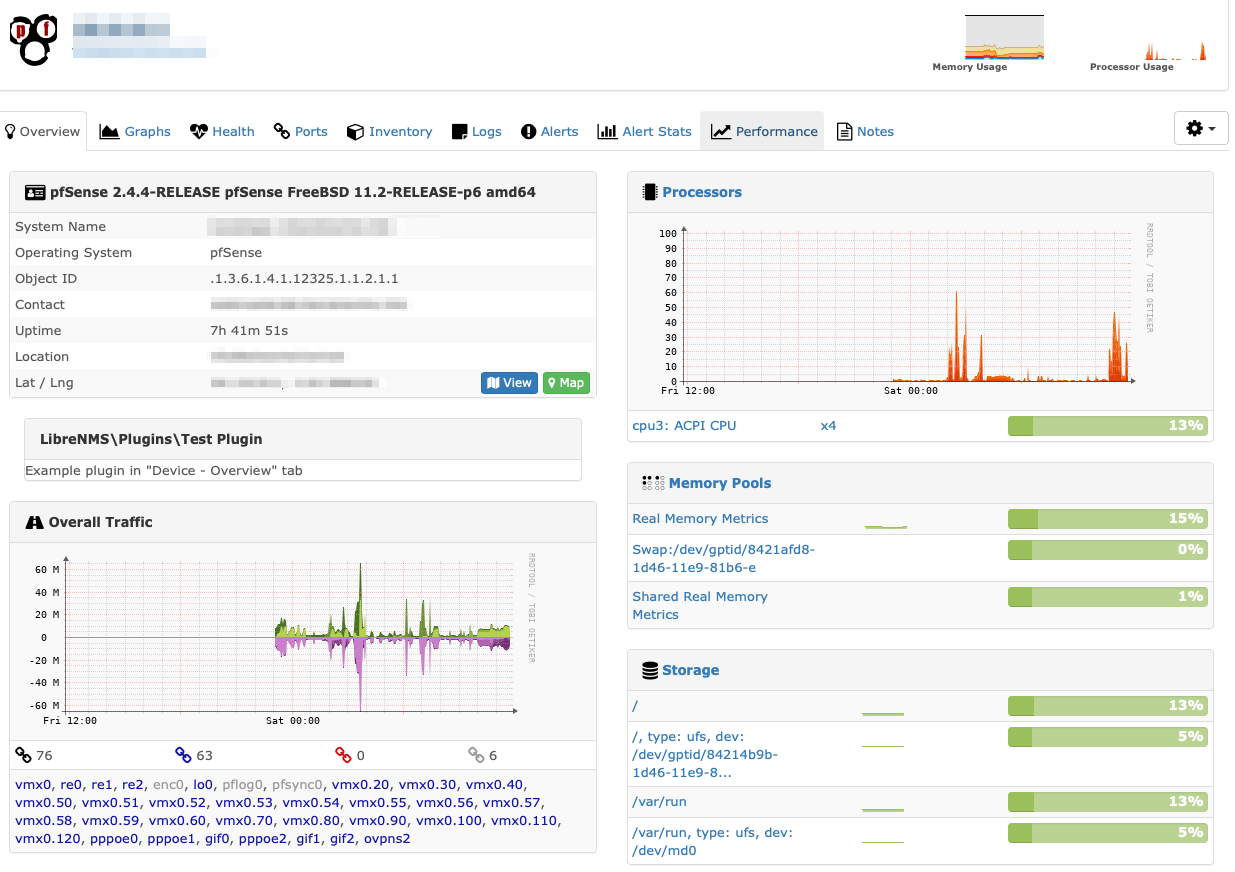

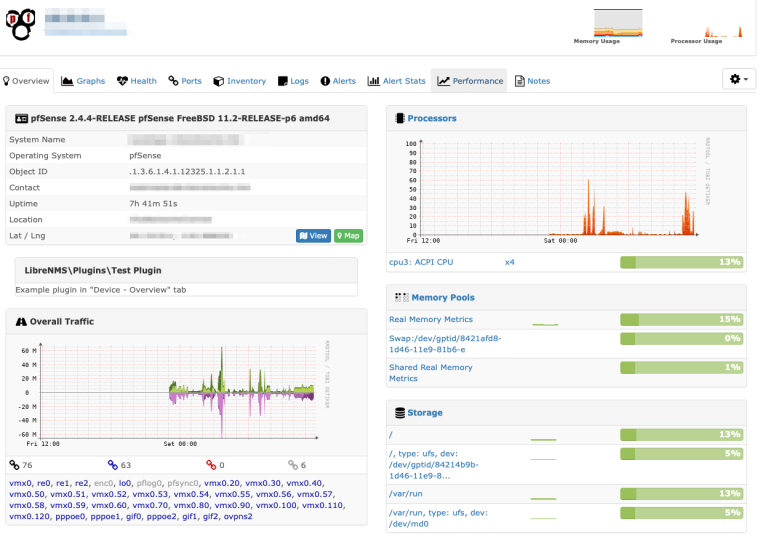

Here are some stats from SNMP:

I have also noticed that, just like a downed gateway, something that maxes out one of the WAN uplinks going out to the Internet, like an iCloud sync, basically does the same and exacerbates the bad conditions. This happens even if the other connections are idle or, in the case of gateways, even if they are not assigned to any interface.

Where else can I check what's going on? I think I really have run out of ideas for good. I found OPNsense a few weeks back and immediately felt at home because it resembles pfSense so much, even in some descriptions of things, but it was quirky load balancing WANs so I went back to pfSense. Plus I have copied my complete DHCP reservation tables 7-to-9 times by hand, and I cannot do it again. If I can't fix it I'll have no option but getting something like Fortinet or Forti-something, I'm exhausted. :(

Anyway, thanks guys!

-

For testing remove all the widgets from your dashboard.

-Rico

-

Are you on 2.4.4 or 2.4.4-p2?

-

I'm on 2.4.4-RELEASE-p2. I have done that (removing the widgets) and it doesn't make any difference. That's what I don't get, I can't find anything abnormally high except for the response times. The closest I've gotten was when I saw netstat hang while printing out the results of the command. Some of the lines involved, as I remember, were related to a port forward. I tested the server, but it is responding fine within the subnet, from another subnet and from the Internet.

I got the FreeBSD Handbook last night, I just dropped it onto an iPad to take it with me while I walk my dog. Not that I'll be able to concentrate much with the puppy tugging on the leash, but it might do me well. :)

-

Forgot to mention, these last few times, a reboot restores some of the firewall's responsiveness. It doesn't get back to normal all the way and it's more or less observable when the sluggishness creeps in (it starts very very slowly then escalates super quick) but at least it's something. My very uninformed opinion is that it is a memory leak...if memory leaks aren't accounted for by the system, 'cause otherwise it appears as free.

I'll keep poking around before reinstalling. It just occurred to me that if I'm right that failure to reach gateways is somehow affecting the firewall, I can just disable gateway monitoring and see what happens. It would probably screw up VoIP and latency-selected DDNS, but I'll try this tunnel service from Cloudflare with which I can go around the firewall altogether. It's metered but for a home user the base quota seems fine.

-

If you navigate to Status - Monitoring, is there anything of note?

I asked which version of pfSense you're on earlier because there was a memory leak with unbound on 2.4.4, which has since been resolved.

I have not heard of other people having these issues, and I don't see similar posts in the forum. To me this means the issue is isolated to your install/network/configuration.

On this device I'm seeing Realtek NICs; it's entirely possible this is the problem.

-

I FOUND IT !

I cannot begin to tell you how happy I am. I've been suffering for months over this. What clued me in was this: since my personal computer is on one of the subnets for users, and inter-VLAN routing is unusable, I couldn't SSH into the router; I'd just get the "broken pipe" thingy.

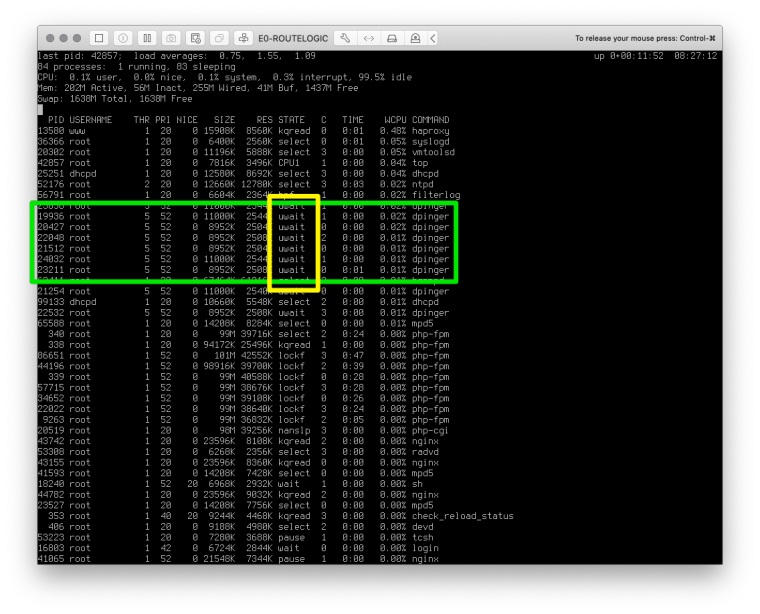

So I added the VLAN and connected to console instead, where I noticed this:

...from what I learned by installing OPNsense recently, apinger and dpinger stuck with me, specifically because neither of them worked for me in OPNsense; they show online no matter what. The second thing I noticed was the wait; from netstat I also got a wait, and I started putting two and two together.

Trying to relieve it of a few duties and to make the virtual machine more homogeneous by removing its assigned PCI cards on the hypervisor (enabling it to vMotion while powered on and to use HA without the CARP complexity), I used an old load balancer I forgot I had, and sent it the PPPoE lines with the link already up, so essentially 1:1 NAT. On the reboot it couldn't ping any of the gateways, which 1, preempted my panic state and 2, confirmed what I thought was effing up the whole setup.

I SSHed into the router and dug for a config backup, and I guess today's my lucky day because I found one. I removed the snapshots and disk from the VM and reinstalled, skipped setup, and fortunately it could contact more gateways this time; apparently the more gateways offline, the worse the situation gets.

The crazy thing is that it's got Internet connectivity, there's just some hiccup with the gateway thing. I am using reliable--well, maybe not Google's--public DNS servers, it's odd.

Anyway, I trimmed the fat (meaning I removed gateways after the interface change) and turned off the monitor service. It didn't take on the first try, as usual, a little disheartened I insisted and done--page loaded. I went to Dashboard and added all the widgets available, and navigated away to the interface assigning pages, changed stuff, it paused a little but very normal and there it was the page loaded again blazingly fast.

I ran out of widgets and can't make it slow down now.

I still have to install packages because it's not detecting a connection (duh..) for itself, so didn't pull them but usually updating from console fixes it.

Thank you all for your answers, just by getting any reply at all helps me a lot to calm the F down when on edge and keep trying.

-

Hmm, so multiple dpinger targets that were all down at that time caused the GUI to become unusably slow and eventually drop traffic through the firewall?

Were all those gateways actually down, or was the firewall just unable to send traffic across them?

Even with all the gateways shown as down, as long as you have a default gateway set and a default route present, the firewall should be able to connect out itself.

Steve

-

Sorry, I took some much needed sleep. Yeah, default gateway was set. On both stacks. Right now I'm trying to set up IPv6, but even though I have native IPv6 I just can't ping out. Normally the gateways all send echoes just fine--well, the IPv4 ones--but I imagine now that I moved some services like DHCP back into the same box as the firewall, the network must've been going crazy with the server down or something. I actually logged in today to search a little for answers.

While I just confirmed that a missing response from a gateway triggers this, I've been having this problem since September, with the 2.4.4 update. I first thought it was the OpenVPN tunnels I made, so I stopped adding tunnels to the firewall and set up another appliance: the firewall redirects each WAN link (after NAT) into another firewall, which brings up the tunnels and loops the load-balanced tunnels back to the NATting firewall. Then I observed craziness again while setting up HAProxy. It was the same story: it would start getting exponentially slow after each page load until unusable. I moved the proxy off the main firewall as well, so now I had 6 pfSense boxes: main, FreeRADIUS, DHCP, OpenVPN, HAProxy and DNS/TLS. From the first time it took a couple of extra seconds I knew I had to snapshot back immediately, 'cause it would be painful from then on. That's if I had a snapshot ready, 'cause I tend to take one after everything is stable, but because this is all first steps, I must have started over several hundred times in the last few months.

At one time, since I already had so many instances deployed, I decided to structure it a little better and just have fun with it, so I separated NAT/PPPoE from the main box, totaling 7 boxes. All the complexity has a few perks; for instance, losing the main firewall will not leave me offline, DHCP will always work, and so will VLAN assignments for userless clients thanks to FreeRADIUS, even with all domain controllers down.

I've two "remote ISPs"; one of them has issues sometimes and its tunnels would fall, leading to an unresponsive gateway from the main firewall's point of view. This is when I first isolated this, mid-November, but I thought it was silly because the firewall is supposed to withstand all of that so the latency-sensitive gateway tiering/load-balancing kicks in. I never mentioned it because it made sense. So...that happened...

Yesterday I figured it out. I kept testing, and since I'm adding and removing interfaces, they automatically get a dynamic gateway, and it is hell again. Except now that I know what it is, I patiently wait a few forevers for the pages to load until I reach the page with the checkbox to disable monitoring on that gateway, and it's zippy again. With the detector deactivated I can even change the parent of an interface, such as PPPoE, and it's seriously fast. I have gotten a few "Network Protocol Failed" errors, but these usually come after reloading rules or doing anything that would've altered the data paths anyway. I feel confident that once I get IPv6 in order, I'll restart, snapshot and it should be all good...on the other hand, pfSense might be lulling me into a false sense of security.

IDK anymore, but I'm well-slept and I'll bite.

-

I've been testing for a few days now; every function that was not working anymore is working again. The system is plenty fast. It is currently running 3x always-on OpenVPN tunnels (AES256CBC+SHA1+BSDcryptosomething...) as well as managing 3x PPPoE VDSL2 lines, about a dozen internal interfaces, HAProxy, DHCP, 3x GIFs, 2x Suricata interfaces, and tomorrow I guess I'll train another 2.

I tried my VPN provider, the quirky one; their connection profile is completely overkill (more so because they're based in Canada, a member of the nosy governments). It's AES256[CBC|GCM] + a TLS key + super high SHA512 for the digest, and this last one I think is one of the settings that has a huge impact on the system. It starts failing to load pages, and changes/commits stop sticking (when the page errors out, to be more precise), or they do stick but the same page is either returned by the system or cached and returned by the browser. Opening a second browser and finding a way to navigate close to the page, but not directly to it (to avoid sending more commits), is how I was getting around this. Because the page errors out only after a really long time, you avoid waiting another time at all costs; the breadcrumb bar is immensely helpful in these cases.

And that's about it: SHA512 and a missing gateway are what I've found so far to cripple the system. If those aren't present it can handle a million tasks again--no biggie. With all the tasks I've thrown at it, it's only using 600MB+ of memory. I thought I should write this somewhere; hopefully it helps someone. :)

BTW, the processor I'm using is an Intel Core i5 at 3.2GHz I believe, with the crypto thingies. It has also happened on Xeons. When it's acting up though, there's no load on any of the CPUs; I've never seen them go beyond idle when this happens.
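To sanity-check whether the digest alone could be that expensive on a given CPU, a rough sketch like the following compares HMAC throughput for a few digests using Python's standard `hashlib` and `hmac` modules. The function name `hmac_throughput`, the 1400-byte packet size and the packet count are assumptions picked for illustration. It measures Python's hashing, not OpenVPN's actual data path, so treat the numbers as a ballpark only; given that the CPUs looked idle during the slowdowns, the digest cost may well not be the real explanation.

```python
import hashlib
import hmac
import os
import time


def hmac_throughput(digest_name: str, packet_size: int = 1400, packets: int = 20000) -> float:
    """Return approximate HMAC throughput in MB/s for one digest on this CPU."""
    if digest_name not in hashlib.algorithms_available:
        raise ValueError(f"digest not available: {digest_name}")
    key = os.urandom(64)
    packet = os.urandom(packet_size)  # assumed packet size, roughly one MTU
    start = time.perf_counter()
    for _ in range(packets):
        hmac.new(key, packet, digest_name).digest()
    elapsed = time.perf_counter() - start
    return (packet_size * packets) / elapsed / 1e6


for name in ("sha1", "sha256", "sha512"):
    print(f"HMAC-{name}: {hmac_throughput(name):.0f} MB/s")
```

If the three figures come out in the same range, the slowdown with the SHA512 profile is more likely tied to something else in that provider's setup than to the hashing itself.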

-

Hmm, odd. Doesn't seem like anything I've seen but I'll be watching for it now. Thanks for the write up.

Steve