Slow IPSEC Performance

-

I have a site-to-site tunnel between two Cisco routers. The Cisco routers are limited to 50Mbps due to licensing, and I would like to take advantage of the full 150Mbps for file replication over SMB.

Using the same circuits as the Cisco routers, just with different public IPs on the subnets, I set up two pfSense VMs. One is running on VMware and the other on KVM. Both are running the same pfSense 2.4.4-p2. Both hosts have Intel Xeon CPUs with AES-NI.

The problem I am running into appears to be related to latency. When I search for all jpgs on the remote file system, it takes about 5 minutes over the Cisco routers but about 15 minutes between the pfSense VMs. Sequential file transfers are still faster on the pfSense tunnel.

I played around with ipcomp and multithreaded encryption, but this didn't make any difference. I expected as much since the CPU is not being hit very hard with just the two systems talking over the tunnel. Nothing else uses this tunnel.

Any thoughts on where else I should start looking?

dd if=/dev/zero of=/mnt/pfsense/testfile bs=100M count=1

1+0 records in

1+0 records out

104857600 bytes (105 MB) copied, 5.83647 s, 18.0 MB/s

dd if=/dev/zero of=/mnt/cisco/testfile bs=100M count=1

1+0 records in

1+0 records out

104857600 bytes (105 MB) copied, 15.5118 s, 6.8 MB/s

time find /mnt/cisco -iname '*jpg*'

...

real 5m54.175s

user 0m0.121s

sys 0m0.457s

time find /mnt/pfsense -iname '*jpg*'

...

real 14m7.064s

user 0m0.109s

sys 0m0.496s

vmware CPU

Intel(R) Xeon(R) CPU E5-2643 v3 @ 3.40GHz

2 CPUs: 2 package(s)

AES-NI CPU Crypto: Yes (active)

kvm CPU

Intel Xeon E3-12xx v2 (Ivy Bridge, IBRS)

2 CPUs: 2 package(s)

AES-NI CPU Crypto: Yes (active)

-

@luxadmin

Hey

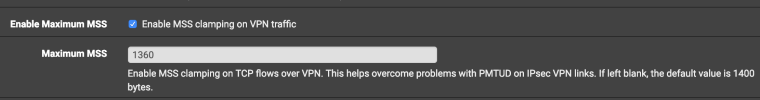

Try decreasing the MSS value on both sides of the tunnel:

VPN/IPsec/Advanced Settings/Maximum MSS

For example, see my settings in the screenshot.

-

SMB is a very chatty protocol designed for low latency local links. When running SMB over slow, higher latency links you will get slow performance out of the VPN. A better bet is to use a different protocol for file transfers over the VPN link.

-

@chrismacmahon said in Slow IPSEC Performance:

SMB is a very chatty protocol designed for low latency local links. When running SMB over slow, higher latency links you will get slow performance out of the VPN. A better bet is to use a different protocol for file transfers over the VPN link.

Though technically correct, this does not account for the discrepancy when using the same protocol over the Cisco VPN vs. the pfSense VPN.

-

Do you know if the Ciscos have a VPN accelerator built in? See: https://www.cisco.com/c/en/us/td/docs/ios/12_2/12_2y/12_2ye9/feature/guide/12ye_vam.html

As @Konstanti stated, you can try seeing if MSS clamping has some impact on the link.
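One rough way to pick a starting value for the clamp is to subtract the tunnel overhead from the path MTU. The sketch below assumes IPv4, a 1500-byte path MTU, and about 73 bytes of worst-case ESP tunnel-mode overhead (AES-CBC with HMAC-SHA1, no NAT-T). Those numbers and the function name `ipsec_mss` are illustrative assumptions, not values taken from either tunnel in this thread.

```shell
# Rough MSS estimate for TCP carried inside an IPsec tunnel (IPv4).
# usage: ipsec_mss <path_mtu> <esp_overhead_bytes>
ipsec_mss() {
    mtu=$1
    overhead=$2
    # Subtract the tunnel overhead, then 20 bytes of inner IPv4 header
    # and 20 bytes of TCP header (no TCP options).
    echo $((mtu - overhead - 40))
}

# Assumed values: 1500-byte MTU, 73 bytes of ESP overhead.
ipsec_mss 1500 73   # prints 1387
```

A different cipher, NAT-T, or a PPPoE link changes the overhead, so treat the result as a starting point and test a few values around it.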

-

@chrismacmahon I will need to check if they have this installed; the Cisco team won't let us look at their equipment.

Just killed all rsync jobs and about to enable the MSS clamping.

-

Tried messing with the clamping and no improvement. Used both a small MSS and the max my connection supports.

The load average is 0.17, 0.19, 0.15 for dual cores which is rather low, so I doubt it's a CPU bottleneck.

Found a number of suggestions online, but they are geared towards throughput and not this "chatty" type of traffic.

-

Have you tested with iperf through the tunnel?

-

@chrismacmahon What would I look for that we do not already know? (not being snarky, I am really asking)

I tried messing with the TCP offloading on the KVM side, as this has been known to cause issues. I now remember that last year I installed pfSense as a VM in oVirt. It performed terribly, with OpenVPN being nearly unusable. I switched to a standard CentOS installation running OpenVPN and it was flawless.

I may end up trying VyOS and see what I can get from that.

-

Have you removed hardware offloading?

WARNING: because the hardware checksum offload is not yet disabled, accessing pfSense WebGUI might be sluggish. This is NORMAL and is fixed in the following step.

To disable hardware checksum offload, navigate to System > Advanced and select the Networking tab. Under the Network Interfaces section, check Disable hardware checksum offload and click Save. A reboot will be required after this step.

The iperf tests through the tunnel will tell you if there is an issue in the tunnel or elsewhere. If you are getting the full 150Mbps between the two points on an iperf test, we know it's the SMB protocol that is the issue.
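A minimal sketch of such a test, assuming `iperf3` is installed on a host at each end and that `REMOTE` is a placeholder for an address on the far side of the tunnel. Since the symptom looks latency-related, it may also be worth comparing the average ping RTT through each tunnel. The helper `avg_rtt` is a hypothetical name and only parses the summary line that `ping` prints.

```shell
# On the far side:   iperf3 -s
# On the near side:  iperf3 -c "$REMOTE" -t 30         (single stream, 30 s)
#                    ping -c 20 "$REMOTE" | avg_rtt     (average RTT in ms)

# Print the average RTT from a ping summary line such as
#   rtt min/avg/max/mdev = 10.100/12.300/15.200/1.100 ms
avg_rtt() {
    awk -F'=' '/min\/avg\/max/ { split($2, a, "/"); print a[2] }'
}
```

Running the ping once via the Cisco path and once via the pfSense path gives two numbers that can be compared directly.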

-

Yes I tried disabling TCP offloading, and it reduced my throughput by 80%. I re-enabled it.

I am getting the full 150Mbps over SMB on the PFSense tunnel, that is not the issue. I am sorry, it can be difficult to explain these issues using only text and I may not be explaining this correctly.
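As a quick sanity check, the dd rates posted at the top of the thread can be converted to megabits per second (GNU dd reports MB as 10^6 bytes). The helper name `mbps` is only for illustration.

```shell
# Convert a dd rate in MB/s (10^6 bytes per second) to Mbit/s.
mbps() {
    awk -v m="$1" 'BEGIN { printf "%.1f\n", m * 8 }'
}

mbps 18.0   # pfSense tunnel dd result, prints 144.0
mbps 6.8    # Cisco tunnel dd result, prints 54.4
```

Both figures are close to the 150Mbps and 50Mbps limits mentioned earlier, which fits with bulk throughput not being the problem.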

Throughput is good!

Random filesystem access is bad.

Example:

I search the SMB share for all jpg files. Using the 50Mbps Cisco tunnel, it takes 5 minutes. Using the 150Mbps pfSense tunnel, it takes 15 minutes.

It is an odd issue to have, and one I have not seen before.
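To put a number on this, the two `find` timings can be turned into an average cost per directory entry. This is only a sketch: the elapsed times are the "real" values posted above, but the entry count is a made-up placeholder that would need to be replaced with the actual count (for example from `find /mnt/cisco | wc -l`), and `per_entry_ms` is a hypothetical helper name.

```shell
# usage: per_entry_ms <elapsed_seconds> <entry_count>
# Prints the average time per entry in milliseconds.
per_entry_ms() {
    awk -v t="$1" -v n="$2" 'BEGIN { printf "%.2f\n", t * 1000 / n }'
}

ENTRIES=100000                     # placeholder, not a measured value
per_entry_ms 354.175 "$ENTRIES"    # Cisco tunnel   (5m54.175s)
per_entry_ms 847.064 "$ENTRIES"    # pfSense tunnel (14m7.064s)
```

If the per-entry difference turns out to be close to the difference in ping RTT between the two tunnels, that would point at added latency per round trip rather than bandwidth.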