10GB lan speeds

-

MTU?

Was it the same? It should be 9000 for 10GbE on all interfaces.

It was at 1500 on everything; then I set every LAN interface and my Windows 10 PC to 9000. But now my PC-to-Unraid transfer speed has gone from 52MB/s to 18MB/s.
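One way to check whether a 9000-byte MTU actually works end to end is to ping with the Don't Fragment bit set and a payload that fills the whole packet. For IPv4 without options, that payload is the MTU minus 20 bytes of IP header and 8 bytes of ICMP header. A minimal POSIX sh sketch of the arithmetic (the function name max_ping_payload is hypothetical):

```shell
#!/bin/sh
# Largest ICMP echo payload that fits in one IPv4 packet for a given MTU,
# assuming a 20-byte IPv4 header (no options) and an 8-byte ICMP header.
max_ping_payload() {
    mtu="$1"
    echo $(( mtu - 20 - 8 ))
}

max_ping_payload 9000   # prints 8972
max_ping_payload 1500   # prints 1472
```

The resulting size is then used with the platform's own ping: on Windows, ping -f -l 8972 <host>; on pfSense/FreeBSD, ping -D -s 8972 <host>; on Linux (Unraid), ping -M do -s 8972 <host>. If a 1472-byte ping gets through but an 8972-byte one does not, something in the path (a NIC, a bridge, the VM's virtual NIC, or the switch) is probably still at 1500, which is one plausible reason for a slowdown after enabling jumbo frames.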

-

@12Sulljo Details like your pfSense version, hardware, RAM, NIC, and installed packages would help diagnose a throughput decline... It seems that you are making progress, are you?

-

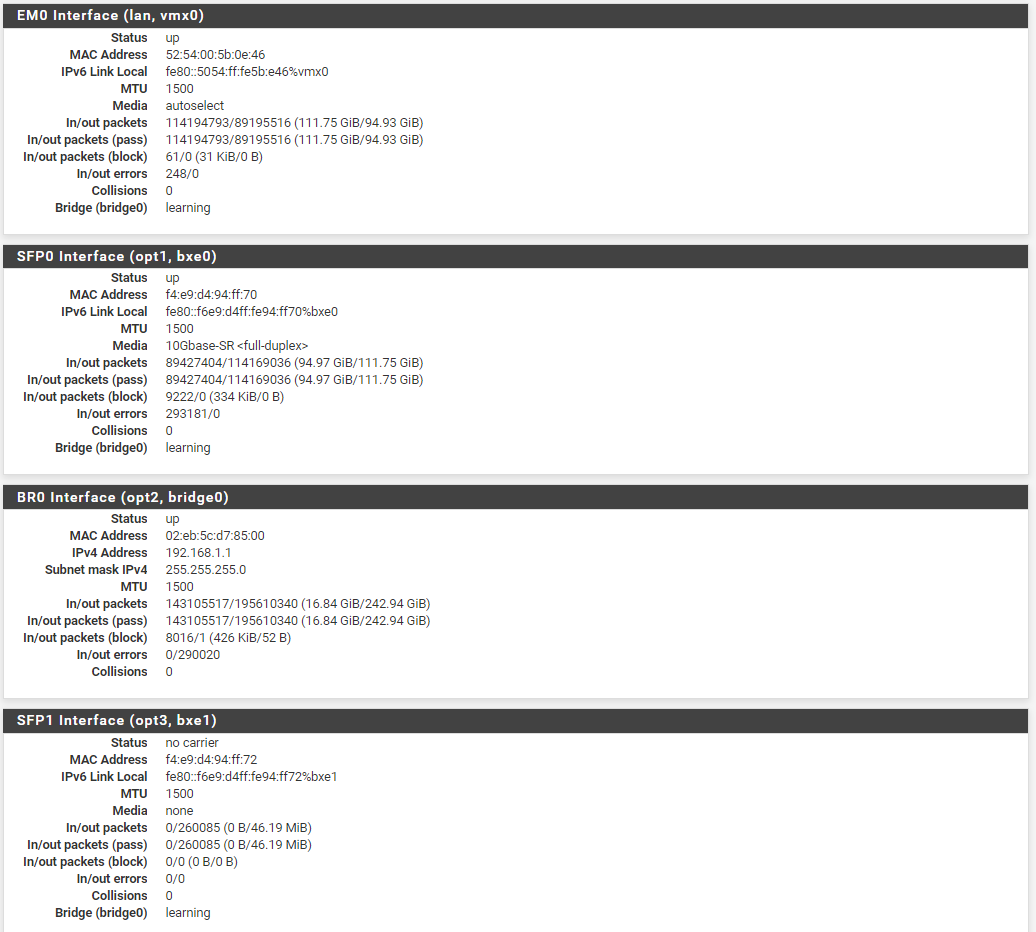

Yeah, so your load is not spreading across the cores well at all, particularly on the bxe NIC.

How are those NICs assigned? bxe0 and vmx0 seem to be doing all the work there.

If you set up multiple connections, do you see more queues loaded in the top output?

You probably need this:

MULTIPLE QUEUES
The vmx driver supports multiple transmit and receive queues. Multiple queues are only supported by certain VMware products, such as ESXi. The number of queues allocated depends on the presence of MSI-X, the number of configured CPUs, and the tunables listed below. FreeBSD does not enable MSI-X support on VMware by default. The hw.pci.honor_msi_blacklist tunable must be disabled to enable MSI-X support.

You definitely would in VMware.

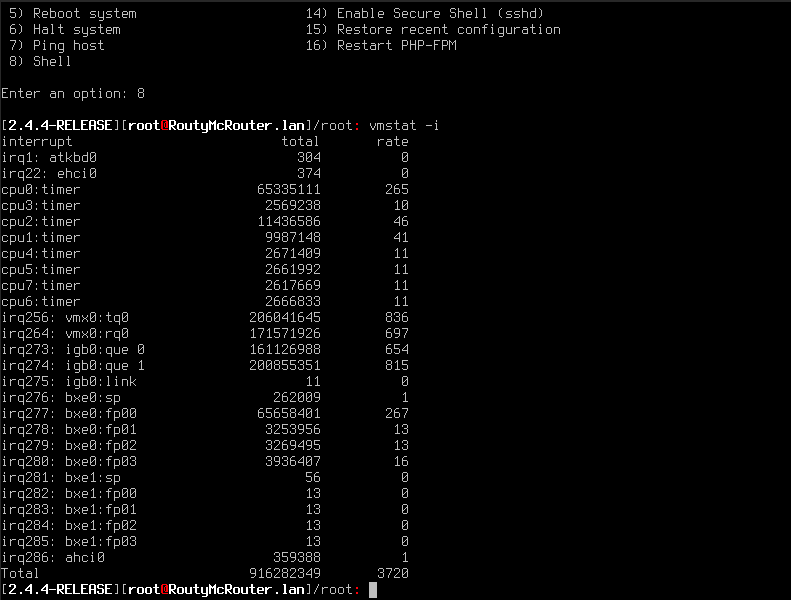

Try running

vmstat -i

to see how many queues are created. The bxe driver looks to be creating the expected 4 default queues but using only one of them. That could simply be because of how you're testing.
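The per-interface interrupt lines in the vmstat -i output give a rough count of the queues. A minimal POSIX sh sketch that counts them, assuming interrupt descriptions of the form "irqNNN: vmx0:..." (the exact queue suffixes vary by driver and FreeBSD version, and some drivers add a separate non-queue interrupt, so treat the number as approximate). The function name count_irqs is hypothetical:

```shell
#!/bin/sh
# Count interrupt lines in `vmstat -i` output (read from stdin) that belong to
# the given interface, e.g. "irq256: vmx0:rxq0  12345  67" (format assumed).
# Roughly one line per queue; some drivers also add a non-queue interrupt.
count_irqs() {
    iface="$1"
    # Match the interface name as a whole word followed by ':', whitespace,
    # or end of line. grep -c still prints 0 on no match; '|| true' keeps
    # the exit status at 0 in that case.
    grep -cE "(^|[[:space:]])${iface}(:|[[:space:]]|\$)" || true
}

# Usage on the firewall itself:
#   vmstat -i | count_irqs vmx0
#   vmstat -i | count_irqs bxe0
```

A count of 1 for vmx0 would match the single-queue situation described here; after enabling MSI-X, the count should rise if the driver creates more queues.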

Steve

-

@NollipfSense said in 10GB lan speeds:

@12Sulljo Details like your pfSense version, hardware, RAM, NIC, and installed packages would help diagnose a throughput decline... It seems that you are making progress, are you?

pfSense version: 2.4.4-RELEASE-p3

hardware: Unraid VM with 8 threads of a Ryzen 2700, passed through using vmxnet3. The card then goes into a Dell Force10 S55 switch with all ports on a single VLAN. The back of the switch has 4 SFP+ ports; one of those connects to my workstation, which uses a 2700X and a Supermicro AOC-STGN-i2S rev 2.0 dual SFP+ NIC (Intel 82599 10GbE controller).

The other ethernet ports on the switch connect to my wifi and other devices.

RAM: 8GB

pfSense NIC: Dell Y40PH Broadcom 10Gb dual-port PCIe SFP+ NIC

packages installed: none yet

-

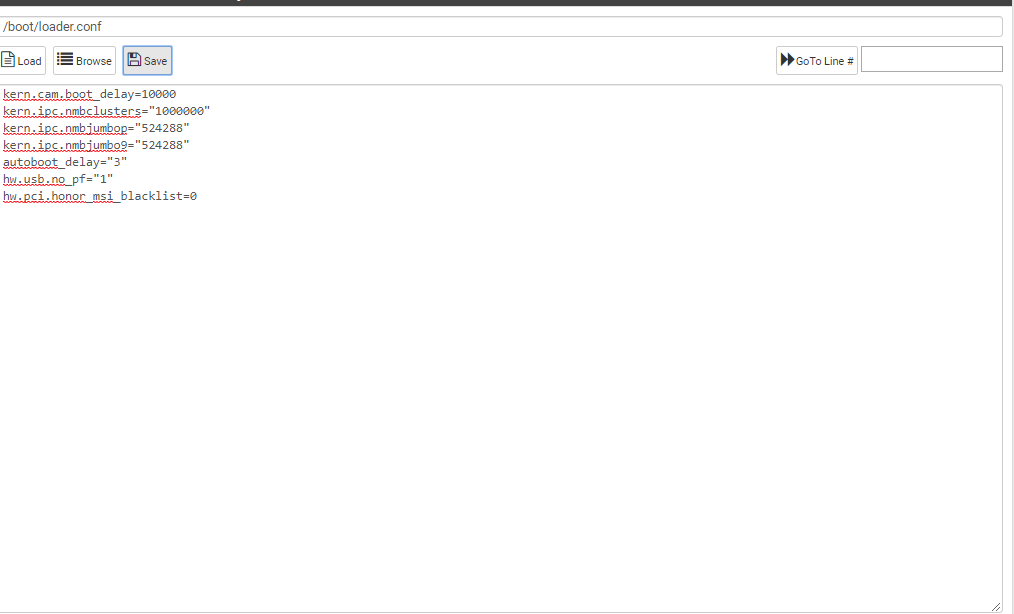

OK, so you only have one queue for the vmx NIC, as I suspected. Add the loader tunable I posted to /boot/loader.conf.local and reboot.

What's in bridge0? More to the point, why do you have a bridge at all? That's probably killing your throughput.

What's on igb0? The interrupt rate is quite high there.

Steve

-

igb0 is the WAN.

I set up the bridge because it was recommended to me on an Unraid forum as a way to make the Docker containers and everything else on my server visible to the rest of my physical LAN.

Before setting that up, my server was unable to communicate with the rest of the network; only pfSense and the rest of the physical network were able to communicate.

I'm still new at this stuff. I'm not sure what you mean by adding the loader tunable; all I see in this post is something called multiple queues, and I'm not sure how I would turn that on.

Thank you all for this help by the way, I'm eager to understand where my mistakes are so I can learn from them.

-

@12Sulljo said in 10GB lan speeds:

I set up the bridge because it was recommended to me on an Unraid forum as a way to make the Docker containers and everything else on my server visible to the rest of my physical LAN.

That would probably be a bridge on Unraid, not on your firewall.

-

The options for vmx are detailed here: https://www.freebsd.org/cgi/man.cgi?query=vmx

But you want to create the file /boot/loader.conf.local (if it doesn't exist) and add the line:

hw.pci.honor_msi_blacklist=0

You can do that from the webGUI in Diagnostics > Edit File.

Then, when you reboot, the vmx driver will enable MSI-X and you will get multiple queues on the NIC. That should allow you to use multiple cores and get far better throughput for multiple connections, though you may still be restricted somewhere else.

Steve

-

@stephenw10 OK, so I added it, but before I do anything, should I remove the USB one?

-

Also, how can my vmx and physical LAN be on the same subnet if they aren't in a bridge?

-

Use a switch?

-

I have a switch. How does a virtual NIC that communicates between Unraid and a pfSense VM use a switch?

-

Like I said before, you do not need a pfSense bridge to communicate across your virtual environment. You need a bridge in the virtual environment.

-

OK, but then how does Unraid communicate with the physical environment without assigning an IP on a different subnet?

-

You connect your hypervisor's bridge to the physical network by bridging in one of the hypervisor's physical NICs.
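On a Linux-based hypervisor such as Unraid, bridging in a physical NIC means making it a member of the same bridge the VMs' virtual NICs attach to. Unraid typically handles this through its network settings (enabling bridging creates br0), so the sketch below only illustrates the underlying iproute2 steps. The interface names br0 and eth0 and the run/DRY_RUN helper are assumptions; by default it only prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: attach a physical NIC to a Linux bridge with iproute2.
# Defaults to a dry run that only prints the commands; set DRY_RUN=0 to
# execute them (as root). Interface names are assumptions.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

bridge_physical_nic() {
    bridge="$1"   # e.g. br0, the bridge the VMs' virtual NICs attach to
    phys="$2"     # e.g. eth0, the hypervisor's physical NIC
    run ip link add name "$bridge" type bridge
    run ip link set dev "$phys" master "$bridge"
    run ip link set dev "$phys" up
    run ip link set dev "$bridge" up
}

bridge_physical_nic br0 eth0
```

Once the physical NIC is a bridge member, the host's own LAN address belongs on br0 rather than on eth0, so the server, its containers and VMs, and the physical LAN can all share one subnet without a bridge on pfSense.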

-

That line should go in /boot/loader.conf.local so it isn't overwritten at upgrade. You will probably need to create that file.

Don't remove the default values from loader.conf.
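For anyone who prefers a shell (Diagnostics > Command Prompt or SSH) to the Edit File page, here is a minimal POSIX sh sketch that adds the tunable to the .local file without duplicating it on repeated runs and leaves /boot/loader.conf untouched. The function name add_loader_tunable is hypothetical:

```shell
#!/bin/sh
# Append a loader tunable to a file only if that exact line is not already
# present. Creates the file if it does not exist.
add_loader_tunable() {
    file="$1"
    line="$2"
    touch "$file" || return 1
    # -q: quiet, -x: match the whole line, -F: treat the line as a fixed string
    if ! grep -qxF -- "$line" "$file"; then
        # Start on a new line if the file is non-empty and lacks a trailing newline.
        if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
            printf '\n' >> "$file"
        fi
        printf '%s\n' "$line" >> "$file"
    fi
}

# Usage on pfSense (as root), then reboot:
#   add_loader_tunable /boot/loader.conf.local 'hw.pci.honor_msi_blacklist=0'
```

Appending only when the exact line is missing keeps the file clean if the command is run more than once.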

Steve

-

@stephenw10 The line increased my speed to 54MB/s, and only two of my threads are running at 100%.
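For scale, it helps to convert the copy rate into link-rate units. Treating MB as 10^6 bytes (Windows may actually be reporting MiB), 54 MB/s is about 432 Mbit/s, roughly 4% of a 10 Gbit/s link, so the transfer is still far from line rate. A small awk-based sketch of that conversion (the function name rate_summary is hypothetical):

```shell
#!/bin/sh
# Convert a transfer rate in MB/s (10^6 bytes/s) to Mbit/s and to a
# percentage of a link speed given in Mbit/s.
rate_summary() {
    mbytes="$1"
    link_mbits="$2"
    awk -v mb="$mbytes" -v link="$link_mbits" 'BEGIN {
        mbits = mb * 8
        printf "%.0f Mbit/s (%.1f%% of %d Mbit/s)\n", mbits, mbits / link * 100, link
    }'
}

rate_summary 54 10000   # 432 Mbit/s (4.3% of 10000 Mbit/s)
rate_summary 18 10000   # 144 Mbit/s (1.4% of 10000 Mbit/s)
```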