DNS Resolver not caching correct?

-

Yes, it's clearer to me now, thanks.

Here's the traceroute:

1 37.49.100.1 6.696 ms 6.047 ms 6.332 ms
2 172.30.22.97 6.166 ms 6.018 ms 6.383 ms
3 84.116.191.221 8.367 ms 9.098 ms 8.967 ms
4 84.116.130.102 7.959 ms 7.905 ms 7.490 ms
5 129.250.9.29 8.914 ms 8.023 ms 8.070 ms
6 129.250.4.16 8.048 ms 8.483 ms 8.894 ms
7 129.250.4.96 94.929 ms 94.882 ms 96.235 ms
8 129.250.3.189 160.419 ms 160.473 ms 164.702 ms
9 129.250.3.238 160.700 ms 160.501 ms 160.755 ms
10 129.250.2.16 161.365 ms 160.543 ms 160.335 ms
11 129.250.198.182 158.326 ms 158.105 ms 158.365 ms
12 162.144.240.163 182.527 ms 182.299 ms 182.470 ms
13 162.144.240.127 182.288 ms 182.736 ms 183.292 ms
14 198.57.151.250 158.162 ms 159.572 ms 158.137 ms

Some names resolve in 0 ms this morning, but others I used yesterday do not. Why? Does unbound cache frequently used names for longer?

Why did unbound stop last night? Does it have something to do with pfBlockerNG-devel?

Aug 31 00:00:16 unbound 5433:0 info: start of service (unbound 1.9.1).
Aug 31 00:00:16 unbound 5433:0 notice: init module 1: iterator
Aug 31 00:00:16 unbound 5433:0 notice: init module 0: validator
Aug 31 00:00:15 unbound 5433:0 notice: Restart of unbound 1.9.1.
Aug 31 00:00:15 unbound 5433:0 info: 0.524288 1.000000 4
Aug 31 00:00:15 unbound 5433:0 info: 0.262144 0.524288 7
Aug 31 00:00:15 unbound 5433:0 info: 0.131072 0.262144 54
Aug 31 00:00:15 unbound 5433:0 info: 0.065536 0.131072 98
Aug 31 00:00:15 unbound 5433:0 info: 0.032768 0.065536 68
Aug 31 00:00:15 unbound 5433:0 info: 0.016384 0.032768 43
Aug 31 00:00:15 unbound 5433:0 info: 0.008192 0.016384 28
Aug 31 00:00:15 unbound 5433:0 info: 0.004096 0.008192 2
Aug 31 00:00:15 unbound 5433:0 info: 0.000000 0.000001 42
Aug 31 00:00:15 unbound 5433:0 info: lower(secs) upper(secs) recursions
Aug 31 00:00:15 unbound 5433:0 info: [25%]=0.0219088 median[50%]=0.0607172 [75%]=0.116694
Aug 31 00:00:15 unbound 5433:0 info: histogram of recursion processing times
Aug 31 00:00:15 unbound 5433:0 info: average recursion processing time 0.082558 sec
Aug 31 00:00:15 unbound 5433:0 info: server stats for thread 3: requestlist max 19 avg 0.542125 exceeded 0 jostled 0
Aug 31 00:00:15 unbound 5433:0 info: server stats for thread 3: 1239 queries, 893 answers from cache, 346 recursions, 473 prefetch, 0 rejected by ip ratelimiting
Aug 31 00:00:15 unbound 5433:0 info: 2.000000 4.000000 2
Aug 31 00:00:15 unbound 5433:0 info: 1.000000 2.000000 4
Aug 31 00:00:15 unbound 5433:0 info: 0.524288 1.000000 9
Aug 31 00:00:15 unbound 5433:0 info: 0.262144 0.524288 45
Aug 31 00:00:15 unbound 5433:0 info: 0.131072 0.262144 236
Aug 31 00:00:15 unbound 5433:0 info: 0.065536 0.131072 366
Aug 31 00:00:15 unbound 5433:0 info: 0.032768 0.065536 363
Aug 31 00:00:15 unbound 5433:0 info: 0.016384 0.032768 248
Aug 31 00:00:15 unbound 5433:0 info: 0.008192 0.016384 100
Aug 31 00:00:15 unbound 5433:0 info: 0.004096 0.008192 10
Aug 31 00:00:15 unbound 5433:0 info: 0.002048 0.004096 3
Aug 31 00:00:15 unbound 5433:0 info: 0.001024 0.002048 3
Aug 31 00:00:15 unbound 5433:0 info: 0.000512 0.001024 2
Aug 31 00:00:15 unbound 5433:0 info: 0.000256 0.000512 2
Aug 31 00:00:15 unbound 5433:0 info: 0.000000 0.000001 152
Aug 31 00:00:15 unbound 5433:0 info: lower(secs) upper(secs) recursions
Aug 31 00:00:15 unbound 5433:0 info: [25%]=0.0239319 median[50%]=0.0555612 [75%]=0.114912
Aug 31 00:00:15 unbound 5433:0 info: histogram of recursion processing times
Aug 31 00:00:15 unbound 5433:0 info: average recursion processing time 0.086276 sec
Aug 31 00:00:15 unbound 5433:0 info: server stats for thread 2: requestlist max 28 avg 1.21664 exceeded 0 jostled 0
Aug 31 00:00:15 unbound 5433:0 info: server stats for thread 2: 4486 queries, 2941 answers from cache, 1545 recursions, 1580 prefetch, 0 rejected by ip ratelimiting
Aug 31 00:00:15 unbound 5433:0 info: 1.000000 2.000000 1
Aug 31 00:00:15 unbound 5433:0 info: 0.524288 1.000000 12
Aug 31 00:00:15 unbound 5433:0 info: 0.262144 0.524288 27
Aug 31 00:00:15 unbound 5433:0 info: 0.131072 0.262144 75
Aug 31 00:00:15 unbound 5433:0 info: 0.065536 0.131072 213
Aug 31 00:00:15 unbound 5433:0 info: 0.032768 0.065536 180
Aug 31 00:00:15 unbound 5433:0 info: 0.016384 0.032768 124
Aug 31 00:00:15 unbound 5433:0 info: 0.008192 0.016384 60
Aug 31 00:00:15 unbound 5433:0 info: 0.004096 0.008192 3
Aug 31 00:00:15 unbound 5433:0 info: 0.002048 0.004096 1
Aug 31 00:00:15 unbound 5433:0 info: 0.000512 0.001024 1
Aug 31 00:00:15 unbound 5433:0 info: 0.000000 0.000001 71
Aug 31 00:00:15 unbound 5433:0 info: lower(secs) upper(secs) recursions
Aug 31 00:00:15 unbound 5433:0 info: [25%]=0.0237832 median[50%]=0.0553415 [75%]=0.107381
Aug 31 00:00:15 unbound 5433:0 info: histogram of recursion processing times
Aug 31 00:00:15 unbound 5433:0 info: average recursion processing time 0.082412 sec
Aug 31 00:00:15 unbound 5433:0 info: server stats for thread 1: requestlist max 23 avg 0.68534 exceeded 0 jostled 0
Aug 31 00:00:15 unbound 5433:0 info: server stats for thread 1: 2418 queries, 1650 answers from cache, 768 recursions, 910 prefetch, 0 rejected by ip ratelimiting
Aug 31 00:00:15 unbound 5433:0 info: 2.000000 4.000000 2
Aug 31 00:00:15 unbound 5433:0 info: 1.000000 2.000000 5
Aug 31 00:00:15 unbound 5433:0 info: 0.524288 1.000000 11
Aug 31 00:00:15 unbound 5433:0 info: 0.262144 0.524288 37
Aug 31 00:00:15 unbound 5433:0 info: 0.131072 0.262144 288
Aug 31 00:00:15 unbound 5433:0 info: 0.065536 0.131072 409
Aug 31 00:00:15 unbound 5433:0 info: 0.032768 0.065536 398
Aug 31 00:00:15 unbound 5433:0 info: 0.016384 0.032768 258
Aug 31 00:00:15 unbound 5433:0 info: 0.008192 0.016384 133
Aug 31 00:00:15 unbound 5433:0 info: 0.004096 0.008192 9
Aug 31 00:00:15 unbound 5433:0 info: 0.002048 0.004096 7
Aug 31 00:00:15 unbound 5433:0 info: 0.001024 0.002048 2
Aug 31 00:00:15 unbound 5433:0 info: 0.000512 0.001024 3
Aug 31 00:00:15 unbound 5433:0 info: 0.000256 0.000512 3
Aug 31 00:00:15 unbound 5433:0 info: 0.000128 0.000256 1
Aug 31 00:00:15 unbound 5433:0 info: 0.000000 0.000001 199
Aug 31 00:00:15 unbound 5433:0 info: lower(secs) upper(secs) recursions
Aug 31 00:00:15 unbound 5433:0 info: [25%]=0.0217342 median[50%]=0.0547917 [75%]=0.115329
Aug 31 00:00:15 unbound 5433:0 info: histogram of recursion processing times
Aug 31 00:00:15 unbound 5433:0 info: average recursion processing time 0.084765 sec
Aug 31 00:00:15 unbound 5433:0 info: server stats for thread 0: requestlist max 23 avg 1.41447 exceeded 0 jostled 0
Aug 31 00:00:15 unbound 5433:0 info: server stats for thread 0: 5246 queries, 3481 answers from cache, 1765 recursions, 1842 prefetch, 0 rejected by ip ratelimiting
Aug 31 00:00:15 unbound 5433:0 info: service stopped (unbound 1.9.1).

-

Not a traceroute, a dig +trace:

$ dig www.whatever.com +trace

; <<>> DiG 9.14.4 <<>> www.whatever.com +trace
;; global options: +cmd
. 27190 IN NS h.root-servers.net.
. 27190 IN NS k.root-servers.net.
. 27190 IN NS d.root-servers.net.
. 27190 IN NS l.root-servers.net.
. 27190 IN NS c.root-servers.net.
. 27190 IN NS a.root-servers.net.
. 27190 IN NS m.root-servers.net.
. 27190 IN NS f.root-servers.net.
. 27190 IN NS e.root-servers.net.
. 27190 IN NS j.root-servers.net.
. 27190 IN NS i.root-servers.net.
. 27190 IN NS g.root-servers.net.
. 27190 IN NS b.root-servers.net.
. 27190 IN RRSIG NS 8 0 518400 20190912170000 20190830160000 59944 . DtQjgY6hTjQoBx95E2qR9YHr/VIiwFqkjYjvBuX21XlBEYjlH3Rq0+sF 0XkyzUwp6xq2SXW3ZPgK0SHf2/hv+3fx0sricuQ5mAhvlw9yVVIwQTq5 dr2B0hfs6tfZNiX+CDNMK6DzjEAlX34gnVZmtSuv5KG87PG9ztBoygPd AxobqaiBksHS8DsCNpVwRunZCZ0Wd59LlWl72etkTft779F8YxvIa9B4 MOf497UcW+Wk38utZ4LRtJL0nTk5BeP0jf6oPi95Sp80SgkOGlOAkwvM c10ZiG5NrH0CtBJYQtOpAG4SamwxhxzK1TElq2SZY7lLOTtrFCQYNK53 0Y5yVA==
;; Received 525 bytes from 192.168.3.10#53(192.168.3.10) in 3 ms

com. 172800 IN NS m.gtld-servers.net.
com. 172800 IN NS c.gtld-servers.net.
com. 172800 IN NS e.gtld-servers.net.
com. 172800 IN NS a.gtld-servers.net.
com. 172800 IN NS d.gtld-servers.net.
com. 172800 IN NS b.gtld-servers.net.
com. 172800 IN NS g.gtld-servers.net.
com. 172800 IN NS f.gtld-servers.net.
com. 172800 IN NS k.gtld-servers.net.
com. 172800 IN NS j.gtld-servers.net.
com. 172800 IN NS l.gtld-servers.net.
com. 172800 IN NS i.gtld-servers.net.
com. 172800 IN NS h.gtld-servers.net.
com. 86400 IN DS 30909 8 2 E2D3C916F6DEEAC73294E8268FB5885044A833FC5459588F4A9184CF C41A5766
com. 86400 IN RRSIG DS 8 1 86400 20190913050000 20190831040000 59944 . ZmaE6S3yTVnYVXNywBnPO1hD4iHQ/DaBiMDi2+mRC88NXTH1Qrsnflnm fIInk6AnQAtl9uS3LM+qXinwCUMrpVGupSi9FQ3QneZgnilRzhyuloxM xJi/22+WulaBE7UzDZJrpA572P3dWBHl296vw3oCoF8OENW/D2Z16gWw xOBJD57Jocnhghm9ONXoE60WPWSOQD9xytzc5vl1oZIRYpmcYsNe1wsq NYm+WUSuM1+AaG0tyjdbwxR23nkRowRxTJyARkc4wcaIEQaNXyEm7Iad ToAyiKVxpCGs2B7JKHuVL9sXsNYo/+awj5yGXuWz1tLBk3teXKgMI0Yu qjSSig==
;; Received 1204 bytes from 192.33.4.12#53(c.root-servers.net) in 12 ms

whatever.com. 172800 IN NS ns6217.hostgator.com.
whatever.com. 172800 IN NS ns6218.hostgator.com.
CK0POJMG874LJREF7EFN8430QVIT8BSM.com. 86400 IN NSEC3 1 1 0 - CK0Q1GIN43N1ARRC9OSM6QPQR81H5M9A NS SOA RRSIG DNSKEY NSEC3PARAM
CK0POJMG874LJREF7EFN8430QVIT8BSM.com. 86400 IN RRSIG NSEC3 8 2 86400 20190904044529 20190828033529 17708 com. vtK0SnwKj0v250DLs1saXgDLxjCfNIdwgX/HHiCRQtvwxI3gMdZbEkM2 iOCv2Sdzo0dnz4RxN6BqXXbB8ZwWqG632PgCwFZluYzSi+stiZY2RX31 FlFzE2VgSf9xB/cElOJp94o2sYEW/n4Gqp73bPbE/HFcVeklYm0MI0bA JvU=
RNHABBI0G00MKABON3HNN10VBLL72I2F.com. 86400 IN NSEC3 1 1 0 - RNHCNLOM2HJP5G1RNIDHEF5664U20CFO NS DS RRSIG
RNHABBI0G00MKABON3HNN10VBLL72I2F.com. 86400 IN RRSIG NSEC3 8 2 86400 20190905043336 20190829032336 17708 com. pHdhtwlMfX9QPxdOk6xuO4D+naVZOSfIqGqYB1B/QWlCzxRQa97pfUrn sffyo2mChJWntL6XHutDZHB+YGlvBLg4VqvdwUmoeoaZpVqwlSMtAB4x B1cyW+jf0byLvNjJELetC8JFhmH1LpJIRyuvsFhps3f+Nd6RoVUNLWpz FHM=
;; Received 614 bytes from 192.5.6.30#53(a.gtld-servers.net) in 39 ms

www.whatever.com. 14400 IN CNAME whatever.com.
whatever.com. 14400 IN A 198.57.151.250
whatever.com. 86400 IN NS ns6218.hostgator.com.
whatever.com. 86400 IN NS ns6217.hostgator.com.
;; Received 159 bytes from 50.87.144.144#53(ns6217.hostgator.com) in 44 ms

That is what happens when you have to resolve from roots.

So sure, if the authoritative NS or any of the others in the path are on the other side of the planet, it might take a second... Doesn't matter in the big picture - it's only once, and after that it goes direct to the authoritative NS for the domain vs going all the way down from the root.

-

; <<>> DiG 9.12.2-P1 <<>> www.whatever.com +trace
;; global options: +cmd
. 6497 IN NS i.root-servers.net.
. 6497 IN NS h.root-servers.net.
. 6497 IN NS d.root-servers.net.
. 6497 IN NS j.root-servers.net.
. 6497 IN NS g.root-servers.net.
. 6497 IN NS b.root-servers.net.
. 6497 IN NS k.root-servers.net.
. 6497 IN NS m.root-servers.net.
. 6497 IN NS a.root-servers.net.
. 6497 IN NS e.root-servers.net.
. 6497 IN NS f.root-servers.net.
. 6497 IN NS c.root-servers.net.
. 6497 IN NS l.root-servers.net.
. 6497 IN RRSIG NS 8 0 518400 20190912050000 20190830040000 59944 . a18HBLRxbDklfb/5azG80cAJFAwNd4luRiFgFM6QUhVNkCcYfHEPN86t H2TiEwxxwQE+gfKdMFc6F+2GT5MqMgJocYS4hxyai54iMtzN9/HzUxFQ IVeOWU2g2piycqavfFqMp4pfmbESjGj3zBs3BemvD8nS9JVc7PtDnYEN HJ6iYLCSZlLp3HPTOGqd2Kh9uBmujnsVqbUoVWT7H5vT3yblT2J3MdhV XcUYAwl8CneBJGql1VT1ZS5lvGriOnrRuX9evjgHlGZuRk5tiR8oc4aH ndEc28HdihJH4fmj6P0Zq2DnP3KOMV/voHCsF29hEyT3YhpCDng5U99E 994KgA==
;; Received 525 bytes from 127.0.0.1#53(127.0.0.1) in 0 ms

com. 172800 IN NS f.gtld-servers.net.
com. 172800 IN NS e.gtld-servers.net.
com. 172800 IN NS g.gtld-servers.net.
com. 172800 IN NS c.gtld-servers.net.
com. 172800 IN NS d.gtld-servers.net.
com. 172800 IN NS m.gtld-servers.net.
com. 172800 IN NS h.gtld-servers.net.
com. 172800 IN NS i.gtld-servers.net.
com. 172800 IN NS k.gtld-servers.net.
com. 172800 IN NS l.gtld-servers.net.
com. 172800 IN NS b.gtld-servers.net.
com. 172800 IN NS j.gtld-servers.net.
com. 172800 IN NS a.gtld-servers.net.
com. 86400 IN DS 30909 8 2 E2D3C916F6DEEAC73294E8268FB5885044A833FC5459588F4A9184CF C41A5766
com. 86400 IN RRSIG DS 8 1 86400 20190913050000 20190831040000 59944 . ZmaE6S3yTVnYVXNywBnPO1hD4iHQ/DaBiMDi2+mRC88NXTH1Qrsnflnm fIInk6AnQAtl9uS3LM+qXinwCUMrpVGupSi9FQ3QneZgnilRzhyuloxM xJi/22+WulaBE7UzDZJrpA572P3dWBHl296vw3oCoF8OENW/D2Z16gWw xOBJD57Jocnhghm9ONXoE60WPWSOQD9xytzc5vl1oZIRYpmcYsNe1wsq NYm+WUSuM1+AaG0tyjdbwxR23nkRowRxTJyARkc4wcaIEQaNXyEm7Iad ToAyiKVxpCGs2B7JKHuVL9sXsNYo/+awj5yGXuWz1tLBk3teXKgMI0Yu qjSSig==
;; Received 1176 bytes from 2001:7fe::53#53(i.root-servers.net) in 18 ms

whatever.com. 172800 IN NS ns6217.hostgator.com.
whatever.com. 172800 IN NS ns6218.hostgator.com.
CK0POJMG874LJREF7EFN8430QVIT8BSM.com. 86400 IN NSEC3 1 1 0 - CK0Q1GIN43N1ARRC9OSM6QPQR81H5M9A NS SOA RRSIG DNSKEY NSEC3PARAM
CK0POJMG874LJREF7EFN8430QVIT8BSM.com. 86400 IN RRSIG NSEC3 8 2 86400 20190904044529 20190828033529 17708 com. vtK0SnwKj0v250DLs1saXgDLxjCfNIdwgX/HHiCRQtvwxI3gMdZbEkM2 iOCv2Sdzo0dnz4RxN6BqXXbB8ZwWqG632PgCwFZluYzSi+stiZY2RX31 FlFzE2VgSf9xB/cElOJp94o2sYEW/n4Gqp73bPbE/HFcVeklYm0MI0bA JvU=
RNHABBI0G00MKABON3HNN10VBLL72I2F.com. 86400 IN NSEC3 1 1 0 - RNHCNLOM2HJP5G1RNIDHEF5664U20CFO NS DS RRSIG
RNHABBI0G00MKABON3HNN10VBLL72I2F.com. 86400 IN RRSIG NSEC3 8 2 86400 20190905043336 20190829032336 17708 com. pHdhtwlMfX9QPxdOk6xuO4D+naVZOSfIqGqYB1B/QWlCzxRQa97pfUrn sffyo2mChJWntL6XHutDZHB+YGlvBLg4VqvdwUmoeoaZpVqwlSMtAB4x B1cyW+jf0byLvNjJELetC8JFhmH1LpJIRyuvsFhps3f+Nd6RoVUNLWpz FHM=
;; Received 614 bytes from 192.41.162.30#53(l.gtld-servers.net) in 15 ms

www.whatever.com. 14400 IN CNAME whatever.com.
whatever.com. 14400 IN A 198.57.151.250
whatever.com. 86400 IN NS ns6217.hostgator.com.
whatever.com. 86400 IN NS ns6218.hostgator.com.
;; Received 159 bytes from 198.57.151.238#53(ns6218.hostgator.com) in 184 ms

-

@mrsunfire said in DNS Resolver not caching correct?:

(ns6218.hostgator.com) in 184 ms

So you see the authoritative NS are that far away from you - a lot farther than 7 ms ;)

At any given time you might have talked to something in the path that took longer than normal; maybe something in the path was congested, maybe the NS took longer to answer, etc.

But that is how something is resolved when none of it is cached. Once the NS for .com are cached, there is no reason to ask the roots for those; once the NS for whatever.com are cached, there is no reason to talk to the gtld-servers.net NS, so you can just go ask the authoritative NS directly. But since it's a lot farther than 7 ms away from you, there can be delays... In the big picture it doesn't matter, since it only happens once and then it's cached for the length of the TTL. And if you have prefetch and/or serve-expired (zero-TTL answers) set up, you will get an answer from unbound instantly and it will go refresh its stuff in the background.

So what does it matter if it took 1000 ms - that is not even going to be really noticed, since I doubt a site a couple hundred ms away from you is going to load instantly anyway.. Worst case you added a whole second to the page load time (ONCE)

There is little need to concern yourself with whether a site resolves in 30 ms, 300 ms, or even 1000 ms.. Once it's cached, none of that matters any more. And in the big picture, a fraction of a second added to the first load time of the page is meaningless.
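
To put rough numbers on the "only once" argument, here is a minimal Python sketch that adds up the round trips from the second dig +trace in this thread. It is a deliberately simplified model: it assumes one query per level and ignores retries, DNSSEC lookups, and anything unbound does in parallel. `HOPS` and `lookup_cost_ms` are names made up for this illustration.

```python
# Round-trip times (ms) taken from the second dig +trace in this thread.
HOPS = [
    ("root", 18),             # i.root-servers.net hands out the .com NS set
    ("gtld", 15),             # l.gtld-servers.net hands out the whatever.com NS set
    ("authoritative", 184),   # ns6218.hostgator.com gives the final answer
]


def lookup_cost_ms(hops, steps_already_cached):
    """Sum the round trips for every step whose result is not cached yet."""
    return sum(rtt for name, rtt in hops if name not in steps_already_cached)


# Nothing cached: walk down from the roots.
cold = lookup_cost_ms(HOPS, set())
# Delegations cached: go straight to the authoritative NS.
warm = lookup_cost_ms(HOPS, {"root", "gtld"})
# Answer itself cached: no upstream traffic at all.
hit = lookup_cost_ms(HOPS, {"root", "gtld", "authoritative"})

print(f"cold: {cold} ms, delegations cached: {warm} ms, answer cached: {hit} ms")
```

In this model nearly all of the cold lookup is the trip to the far-away authoritative NS, and that trip is only paid when the answer itself is not in the cache.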

-

Hi johnpoz,

Could you post your DNS Resolver General Settings and Advanced Settings, please?

It would be very handy for us all!

Thanks, Perlen

-

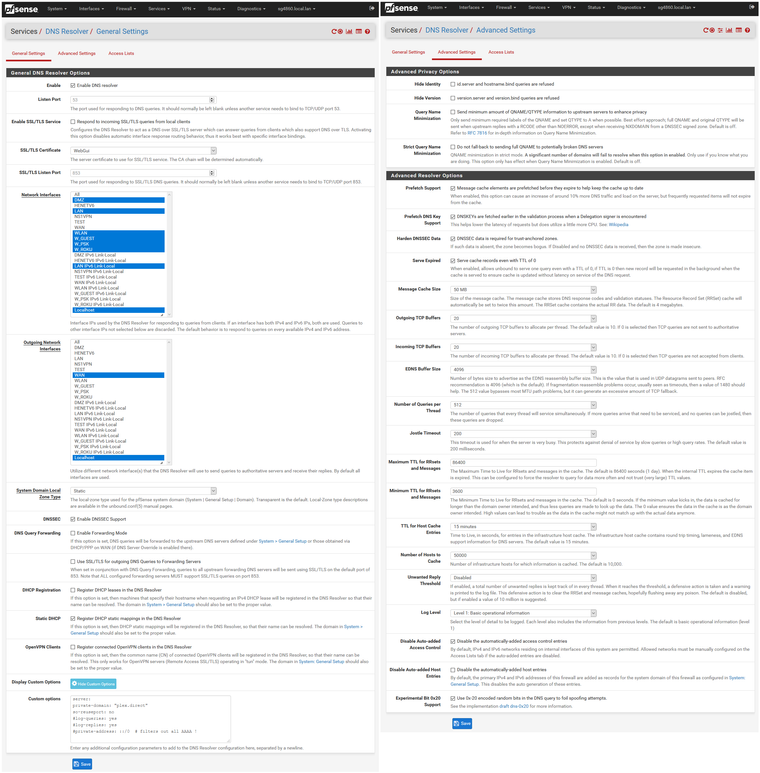

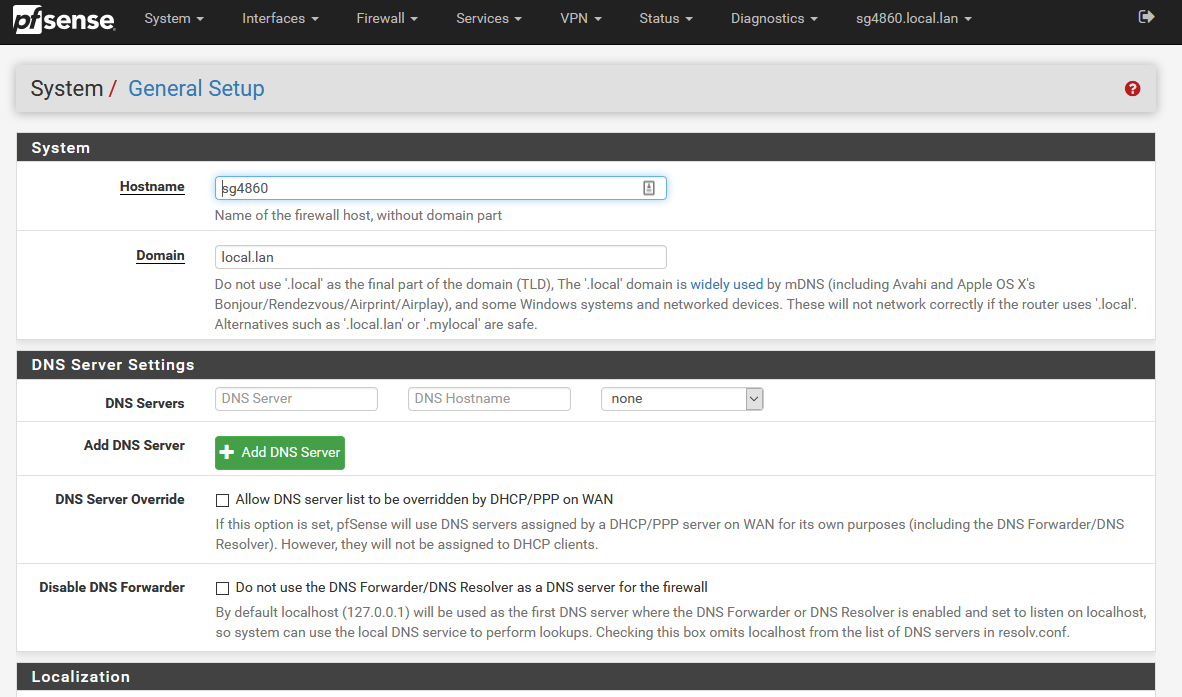

Sure here you go

Notice that I have disabled automatic ACLs so you will have to create your own to allow queries.

I have also changed from transparent to static for my zone.. Make sure you actually understand what settings do before changing them.. Any questions on what anything specifically does, just ask. Don't think this is some sort of guide to how you should set yours up.. These are my settings for my network and use case.. Most of them are just default.. Only a couple of changes really from out of the box settings. Which may or may not be good for your actual needs.

Generally speaking - out of the box should be fine for pretty much everyone.

As to the general settings - there are no dns set other than local... Here

-

Very interesting, much appreciated. Thanks!

-

For info: this is the cache output after two days, and I'm happy with it:

total.num.queries=21177
total.num.queries_ip_ratelimited=0
total.num.cachehits=15554
total.num.cachemiss=5623
total.num.prefetch=8627
total.num.zero_ttl=9232
total.num.recursivereplies=5623

Thanks a lot @johnpoz!
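
As a quick sanity check, the counters in this post can be turned into a cache hit ratio. The Python sketch below parses the `key=value` lines exactly as posted; `parse_stats` and `hit_ratio` are helper names invented for this example, not part of unbound.

```python
# Counters copied from the post above.
STATS = """\
total.num.queries=21177
total.num.queries_ip_ratelimited=0
total.num.cachehits=15554
total.num.cachemiss=5623
total.num.prefetch=8627
total.num.zero_ttl=9232
total.num.recursivereplies=5623
"""


def parse_stats(text):
    """Turn key=value lines into a dict of floats, skipping other lines."""
    stats = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:
            stats[key.strip()] = float(value)
    return stats


def hit_ratio(stats):
    """Fraction of all queries that were answered from the cache."""
    queries = stats["total.num.queries"]
    return stats["total.num.cachehits"] / queries if queries else 0.0


stats = parse_stats(STATS)
print(f"cache hit ratio: {hit_ratio(stats):.1%}")
```

For these numbers that works out to roughly 73% of queries answered from the cache.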

-

@mrsunfire said in DNS Resolver not caching correct?:

total.num.zero_ttl=9232

That seems like a lot of low TTLs - you might want to play with upping the min vs letting them set 60-second and 5-minute TTLs.

-

So what should I start with? And what does this change? Isn't this dangerous?

-

In the advanced tab change the min ttl to say 1800 or 3600 vs letting them set such low ttls.. Could it cause issues - maybe. But I doubt it really..

Let's say you had something that was using a 60 second TTL - do the IPs even change? I had an issue with some software where it checked an FQDN all the time, and the TTL was 60 seconds.. But upon checking it multiple times, the IP wasn't even changing - so what is the F'ing point of having a DNS query for it every 60 seconds.

Then you have them doing load balancing nonsense via dns

example

;scribe.logs.roku.com. IN A

;; ANSWER SECTION:

scribe.logs.roku.com. 60 IN A 52.21.150.70

scribe.logs.roku.com. 60 IN A 35.172.120.217

scribe.logs.roku.com. 60 IN A 34.233.159.203

scribe.logs.roku.com. 60 IN A 35.170.206.212

scribe.logs.roku.com. 60 IN A 52.200.248.59

scribe.logs.roku.com. 60 IN A 52.1.249.132

scribe.logs.roku.com. 60 IN A 34.226.55.236

scribe.logs.roku.com. 60 IN A 52.21.44.213

So while you might use 52.21.150.70 one time, next time it is 35.172.120.217, etc. The only issue you could have is that 52.21.150.70 no longer works.

Have really never seen it be an issue.. But I wouldn't suggest you set the min to like 24 hours or anything.. If you find you're having an issue getting somewhere, you can always just flush that specific entry from your cache.

This should lower the number of both local queries and external queries.. Since now, vs your local client asking for something every minute because of such a low TTL, it would only need to query once an hour, for example.. Same goes for external queries. If your local device is not using a local cache, it doesn't really matter what the TTL is for local queries - but it would keep your DNS from having to resolve the name every freaking 60 seconds because some dumb IoT device with no local cache keeps asking for it every 30 freaking seconds.

But hey, if you have some local devices asking for whatever.something.tld every minute - you just got a 60x reduction in the number of external queries you have to do ;) if you change the min TTL to 1 hour. Multiply that by a few devices and a few different FQDNs being queried, and in the course of 24 hours you could be doing tens of thousands fewer queries.. Again, probably not all that big of a deal in the big picture.. But why do them if you don't really need to?
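
The 60x figure can be checked with a rough back-of-the-envelope model. The Python sketch below is a simplification: it assumes a single name, clients asking at a fixed interval, and no prefetch, so the resolver goes upstream at most once per effective TTL. The function and parameter names are made up for the example; in unbound.conf terms the minimum TTL corresponds to the `cache-min-ttl` option.

```python
SECONDS_PER_DAY = 86400


def upstream_lookups_per_day(record_ttl_s, client_interval_s, min_ttl_s=0):
    """Estimate how often the resolver must go upstream for one name per day.

    The cached answer lives for max(record TTL, minimum TTL), and the resolver
    never refreshes more often than clients actually ask.
    """
    effective_ttl = max(record_ttl_s, min_ttl_s)
    refresh_interval = max(effective_ttl, client_interval_s, 1)  # avoid dividing by zero
    return SECONDS_PER_DAY // refresh_interval


# A device asking every minute for a name with a 60 second TTL.
before = upstream_lookups_per_day(record_ttl_s=60, client_interval_s=60)
# The same device once the minimum TTL is raised to one hour.
after = upstream_lookups_per_day(record_ttl_s=60, client_interval_s=60, min_ttl_s=3600)

print(f"{before} lookups/day before, {after} after, {before // after}x fewer")
```

Under these assumptions one name drops from 1440 upstream lookups a day to 24, and the saving scales with every additional low-TTL name being polled.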

-

I don't know if I should play around with that, because everything runs fast and well for now. But I see that unbound restarts if my WAN goes down. Any chance to disable this behaviour and keep it running?

-

@mrsunfire said in DNS Resolver not caching correct?:

But I see if my WAN goes down unbound restarts

It's possible that the restart of unbound wasn't needed. If the WAN IP stays the same, then maybe yes, it might not be needed.

I guess you should investigate why the WAN NIC goes down? Missing a UPS? Then add one.

There are many cases where a WAN interface change has more effect, and then unbound should restart.

Load balancing, VPN usage (the tunnel is rebuilt, and unbound should use the tunnel), etc.

However: there is no GUI setting that lets you choose what to restart, or not.

Just stop ripping out the cable, or having people play with the power plug, and you'll be fine.

-

The IP stays the same. I use cable and the inhouse coax is getting renewed. The old one sometimes has ingress and the connection goes down shortly. I have to say that pfSense then switches to my failover WAN2.

Any chance to disable unbound restart?

-

Do you mean your upstream WAN Ethernet link really goes down? The upstream device resets?

It's a cable modem - you mentioned 'coax'?

@mrsunfire said in DNS Resolver not caching correct?:

Any chance to disable unbound restart?

Not without you actually changing the code.

Btw : the monitoring process will also reset the interface if the upstream gateway becomes unreachable. You could change these settings.

-

Yes the cable modem loses connection to the upstream gateway of my ISP. Then a gateway alarm appears and it switches to my WAN2.

-

@mrsunfire said in DNS Resolver not caching correct?:

Then a gateway alarm appears and it switches to my WAN2.

Ok. Pretty good reason to inform unbound about that event ^^

-

Sure but why is it clearing the cache? An option to prevent that would be nice.

-

@mrsunfire said in DNS Resolver not caching correct?:

Sure but why is it clearing the cache? An option to prevent that would be nice.

Ah, now we are getting to the bottom of the subject.

It's unbound that needs to restart when the state of one of its interfaces changes.

I guess (my words) that unbound can't dynamically bind and unbind to IPs and/or ports.

This means: shutting down and starting again, thus re-reading the config (which has probably been rebuilt because some interface came up and is available, or is missing now).

Side effect: the cache is flushed / reset.

As far as I know, the cache isn't written to some cache file when unbound stops and read back in when it starts.

Don't know if that is even possible - and I'm pretty sure this isn't done by pfSense.

Other side effect: people who use packages like pfBlockerNG with huge DNSBL lists will see something else: it will take a long time (several tens of seconds) for unbound to start up, because it has to read through all these lists and load them.

If unbound restarts often - because, for example, it restarts when a DHCP lease comes in - they will experience DNS outages.

-

You could load the cache back

You have to dump it first

unbound-control dump_cache > unbound.dump

Then reload it

cat unbound.dump | unbound-control load_cache

Here is where you could run into a problem with that - pretty sure the TTLs would be frozen at that moment in time. I have not done much playing with doing something like that - just don't really see the point.. So your stuff resolves again... So the first query would be a couple extra ms.. But if your TTLs are frozen at the moment of the dump, and the counters restart on reload, then you could be serving up expired records - and depending on how long it was down, etc., they could be expired by a very long time.. and still have lots of time left on them, so you might be getting bad info for whatever time was left on the TTL?

But sure - guess it could be coded to dump the cache every X minutes or something, and then reload it on restart. It would need an easy way for users to flush other than a restart.
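
A minimal Python sketch of that idea, with a guard against the stale-TTL worry: it only reloads a dump that is younger than a chosen age. This is an illustration, not something pfSense does. The dump path and the age limit are hypothetical, the code assumes `unbound-control` is on the PATH and allowed to talk to the running resolver (on pfSense it may additionally need the config path passed with `-c`), and the only subcommands used are the `dump_cache` and `load_cache` ones shown above.

```python
import os
import subprocess
import time

DUMP_PATH = "/tmp/unbound.dump"  # hypothetical location for the dump file
MAX_AGE_S = 300                  # hypothetical limit: ignore dumps older than 5 minutes


def dump_is_fresh(path, max_age_s, now=None):
    """Return True if the dump file exists and is at most max_age_s seconds old."""
    now = time.time() if now is None else now
    try:
        age = now - os.path.getmtime(path)
    except OSError:
        return False  # no dump file, nothing to load
    return 0 <= age <= max_age_s


def dump_cache(path=DUMP_PATH):
    """Write the current cache to path (run this periodically, e.g. from cron)."""
    with open(path, "wb") as out:
        subprocess.run(["unbound-control", "dump_cache"], stdout=out, check=True)


def load_cache(path=DUMP_PATH, max_age_s=MAX_AGE_S):
    """Feed the dump back into unbound after a restart, unless it is too old."""
    if not dump_is_fresh(path, max_age_s):
        return False
    with open(path, "rb") as src:
        subprocess.run(["unbound-control", "load_cache"], stdin=src, check=True)
    return True
```

The age limit is the design choice that matters here: the shorter it is, the less any frozen TTLs can drift from reality, at the price of starting with an empty cache after a longer outage.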