Is my hardware sufficient?

-

I have a D-1521 CPU (4 cores, 8 threads with HT), 8 GB RAM, and am using the built-in Ethernet Connection X552/X557-AT 10GBASE-T NIC (ix driver).

I have enabled Suricata on all 8 LAN interfaces (not WAN), enabled the Snort and Suricata rules, logging to JSON, packet logging in Suricata, and the Security IPS policy in inline mode. I disabled hardware offloading, as Suricata recommends for IPS mode. I have about 8 VLANs and one WAN interface. All 8 VLANs share the same physical Ethernet adapter.

The networking equipment is all Ubiquiti switches.

My problem: when I run an iperf3 test between two LAN interfaces (routed through the firewall, since they are on different VLANs), I get this with Suricata disabled on the interface:

```
Connecting to host X, port 5201
[  5] local Y port 50773 connected to X port 5201
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-1.00   sec   109 MBytes   910 Mbits/sec
[  5]   1.00-2.00   sec   108 MBytes   910 Mbits/sec
[  5]   2.00-3.00   sec   108 MBytes   908 Mbits/sec
[  5]   3.00-4.00   sec   109 MBytes   911 Mbits/sec
[  5]   4.00-5.00   sec   108 MBytes   908 Mbits/sec
[  5]   5.00-6.00   sec   109 MBytes   910 Mbits/sec
[  5]   6.00-7.00   sec   108 MBytes   909 Mbits/sec
[  5]   7.00-8.00   sec   108 MBytes   909 Mbits/sec
[  5]   8.00-9.00   sec   108 MBytes   909 Mbits/sec
[  5]   9.00-10.00  sec   108 MBytes   909 Mbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-10.00  sec  1.06 GBytes   909 Mbits/sec                  sender
[  5]   0.00-10.00  sec  1.06 GBytes   909 Mbits/sec                  receiver
```

And this with Suricata enabled:

```
Connecting to host X, port 5201
[  5] local Y port 50443 connected to X port 5201
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-1.00   sec  73.9 MBytes   620 Mbits/sec
[  5]   1.00-2.00   sec  76.7 MBytes   644 Mbits/sec
[  5]   2.00-3.00   sec  75.2 MBytes   630 Mbits/sec
[  5]   3.00-4.00   sec  74.6 MBytes   626 Mbits/sec
[  5]   4.00-5.00   sec  74.6 MBytes   626 Mbits/sec
[  5]   5.00-6.00   sec  74.0 MBytes   621 Mbits/sec
[  5]   6.00-7.00   sec  75.0 MBytes   629 Mbits/sec
[  5]   7.00-8.00   sec  75.4 MBytes   632 Mbits/sec
[  5]   8.00-9.00   sec  74.7 MBytes   627 Mbits/sec
[  5]   9.00-10.00  sec  73.2 MBytes   614 Mbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-10.00  sec   747 MBytes   627 Mbits/sec                  sender
[  5]   0.00-10.00  sec   746 MBytes   626 Mbits/sec                  receiver
```

I thought this CPU would be sufficient? It only reaches a maximum of 100-150% CPU while iperf3 is running. With 8 logical cores it could go up to 800%, and even taking into account that HT cores do not scale like physical cores, it still seems like I have plenty of headroom.
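To put a number on the gap, the Python sketch below pulls the bitrate out of the two sender summary lines from the runs above and works out the drop. It is a minimal sketch that assumes iperf3's plain-text summary format exactly as pasted here; the regex and the helper names are illustrative, not part of iperf3.

```python
import re

# Sender summary lines copied from the two iperf3 runs above.
WITHOUT_SURICATA = "[ 5] 0.00-10.00 sec 1.06 GBytes 909 Mbits/sec sender"
WITH_SURICATA = "[ 5] 0.00-10.00 sec 747 MBytes 627 Mbits/sec sender"

# Matches the bitrate column, e.g. "909 Mbits/sec" or "23.2 Kbits/sec".
BITRATE_RE = re.compile(r"([\d.]+)\s+([KMG]?)bits/sec")
# Factors that convert each unit prefix to Mbit/s.
SCALE = {"": 1e-6, "K": 1e-3, "M": 1.0, "G": 1e3}


def bitrate_mbps(line: str) -> float:
    """Return the bitrate of an iperf3 summary line in Mbit/s."""
    match = BITRATE_RE.search(line)
    if match is None:
        raise ValueError(f"no bitrate found in: {line!r}")
    value, prefix = match.groups()
    return float(value) * SCALE[prefix]


def throughput_drop(baseline: float, measured: float) -> tuple[float, float]:
    """Return (absolute drop in Mbit/s, relative drop in percent)."""
    drop = baseline - measured
    return drop, 100.0 * drop / baseline


if __name__ == "__main__":
    base = bitrate_mbps(WITHOUT_SURICATA)
    ips = bitrate_mbps(WITH_SURICATA)
    drop, pct = throughput_drop(base, ips)
    print(f"{base:.0f} -> {ips:.0f} Mbit/s: drop of {drop:.0f} Mbit/s ({pct:.1f}%)")
```

With the figures from this thread, the drop works out to roughly 280 Mbit/s, or about 31% of the baseline.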

What can I tune to make this work at 1Gbps?

-

Hi,

With hardware offloading disabled, Ethernet packets really do travel in through one NIC, get loaded into memory by the CPU, and are then moved out to another NIC, again by the CPU.

Normally, this wouldn't impact your system.

The 'get' from one NIC and the 'put' to the other are probably just one machine instruction each, with little overhead.

Btw: hardware offloading should be disabled because you (that is, Snort and Suricata) need access to the packets for inspection.

Now, I'll answer with a question: does Snort support multiple cores? Same question for Suricata? Google these two and be ready for a surprise.

On top of that, each of these programs executes millions of instructions for each received packet; that is what DPI is all about.

Bonus: if by any chance these two (Snort and Suricata) run on the same core, your 630 Mbits/sec is actually quite good.

Edit: a question, for my own understanding: what is this DPI really achieving, given that most traffic is SSL-encrypted? Or are you doing MITM? In that case, add another huge boatload of CPU cycles to handle the SSL decryption and re-encryption of your traffic.

-

As for hardware offloading: sure, I get that it has an impact, but both of my tests had hardware offloading disabled, yet one is about 280 Mbit/s slower than the other (909 vs. 627 Mbit/s), and the only difference is Suricata being turned on.

Second, I am only running Suricata, not Snort. I am using Snort rules in Suricata, but only Suricata is running. The rule set I use does make a difference: for instance, switching from the Balanced policy to Security drops about 80 Mbit/s.

Suricata is fully multi-threaded; I can see that in the usage patterns and know it from the architecture of the code. Snort 2 is not, but Snort is not relevant here. As for the load: I get that Suricata adds load and takes time to process packets, but my CPU is far from saturated during this test (most of the time the total CPU, out of 800%, is below 100%), so why is it still too slow to keep up with a full 1 Gbps?
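For the headroom question, it may help to spell out the arithmetic. On top's aggregate scale, 100% is one logical CPU's worth of work, so a total of 100-150% out of 800% averages only 12.5-18.75% per CPU, yet it is also exactly what one to one-and-a-half fully busy threads would produce. This small Python sketch just does that conversion; the 8-CPU figure comes from the post, and the helper names are hypothetical.

```python
# Arithmetic on the aggregate CPU figures quoted in the thread.
# Assumption: 8 logical CPUs (4 cores with Hyper-Threading), so the
# aggregate scale runs from 0% to 800%, where 100% = one logical CPU.
LOGICAL_CPUS = 8


def average_utilization(total_pct: float, cpus: int = LOGICAL_CPUS) -> float:
    """Aggregate percentage spread evenly over all logical CPUs."""
    return total_pct / cpus


def busy_cpu_equivalents(total_pct: float) -> float:
    """How many logical CPUs would be 100% busy if the load were packed onto them."""
    return total_pct / 100.0


if __name__ == "__main__":
    for total in (100.0, 150.0):
        print(f"{total:.0f}% of {LOGICAL_CPUS * 100}%: "
              f"avg {average_utilization(total):.2f}% per CPU, "
              f"i.e. {busy_cpu_equivalents(total):.1f} fully busy CPU(s)")
```

In other words, a low average and one saturated thread are not mutually exclusive, which is why a per-thread view is needed to tell them apart.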

DPI is not just about inspecting traffic itself, it is for detecting and blocking bad hosts (dshield), detecting non SSL rogue traffic over SMTP, IMAP etc.

I am not doing MITM on SSL.

-

Try running

```
top -aSH
```

at the command line while testing, to see how that load is spread across the cores. I suspect you will see one at 100%.
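The check suggested here can be expressed as: ignore the total and look for any single thread pinned near 100% of one CPU. A minimal Python sketch, assuming the per-thread (name, %CPU) pairs have already been read off `top -aSH` by hand; the 95% threshold, the thread names, and the sample readings are all hypothetical.

```python
# Minimal sketch: flag threads that are pinned near 100% of one CPU.
# Assumption: the (name, %CPU) pairs were read off a per-thread view such
# as `top -aSH`; the sample values below are hypothetical.
from collections.abc import Iterable

SATURATION_PCT = 95.0  # hypothetical threshold for "effectively maxed out"


def saturated_threads(samples: Iterable[tuple[str, float]],
                      threshold: float = SATURATION_PCT) -> list[str]:
    """Return names of threads at or above the threshold, busiest first."""
    hot = [(name, pct) for name, pct in samples if pct >= threshold]
    hot.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in hot]


if __name__ == "__main__":
    # Hypothetical readings: the total is far below 800%, yet one thread is pinned.
    readings = [("thread-a", 99.0), ("thread-b", 20.0), ("thread-c", 15.0)]
    total = sum(pct for _, pct in readings)
    print(f"total {total:.0f}% of 800%, saturated: {saturated_threads(readings)}")
```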

Steve

-

Oh, that is what I meant when I said "As for the load: I get that Suricata adds load and takes time to process packets, but my CPU is far from saturated during this test (most of the time the total CPU, out of 800%, is below 100%), so why is it still too slow to keep up with a full 1 Gbps?"

I used htop to check the cores. None are saturated.

PS: It seems top and htop disagree on CPU load: top shows 99%, while htop shows 70-80%.

-

What does it actually show?

-

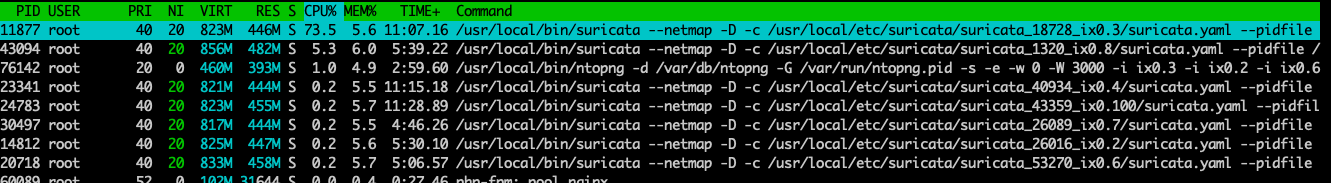

htop shows:

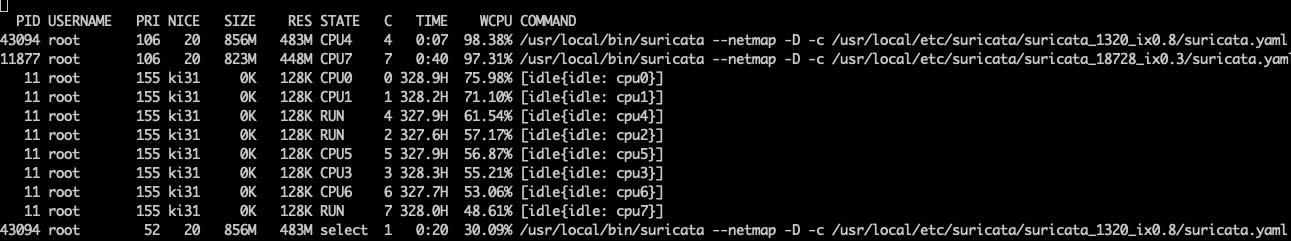

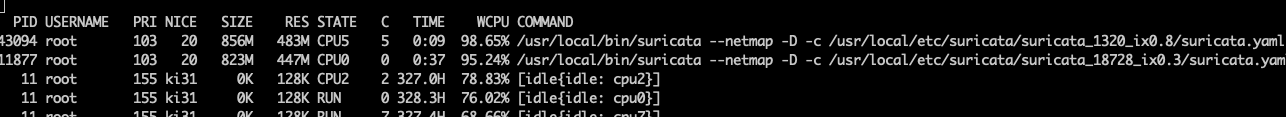

top shows:

Also, running htop causes the network stack to time out, and I get this:

```
Connecting to host X, port 5201
[  5] local Y port 60549 connected to X port 5201
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-1.00   sec  12.5 MBytes   105 Mbits/sec
[  5]   1.00-2.00   sec  15.1 MBytes   127 Mbits/sec
[  5]   2.00-3.00   sec  2.83 KBytes  23.2 Kbits/sec
[  5]   3.00-4.00   sec  0.00 Bytes   0.00 bits/sec
[  5]   4.00-5.00   sec  17.8 MBytes   149 Mbits/sec
[  5]   5.00-6.00   sec  0.00 Bytes   0.00 bits/sec
[  5]   6.00-7.00   sec  23.0 KBytes   189 Kbits/sec
[  5]   7.00-8.00   sec  2.83 KBytes  23.1 Kbits/sec
[  5]   8.00-9.00   sec  2.83 KBytes  23.2 Kbits/sec
iperf3: error - control socket has closed unexpectedly
```

top does not.

-

Really, I want to see the idle processes there, one per CPU, at above 0%, showing that no core is maxed out.

But it looks like you're hitting close to 100% on those Suricata processes.
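The per-CPU version of the same test is the one described here: if every CPU's idle figure stays above 0%, no core is maxed out. A minimal Python sketch, assuming the idle percentages have been copied from the per-CPU idle entries in `top -aSH`; the 1% floor and the sample values are hypothetical.

```python
# Minimal sketch of the per-CPU idle check: a CPU whose idle figure has
# dropped to (nearly) 0% is maxed out. The floor and the sample values are
# hypothetical; real values would be read off the idle entries in `top -aSH`.
IDLE_FLOOR_PCT = 1.0  # hypothetical: treat <= 1% idle as saturated


def maxed_out_cpus(idle_by_cpu: dict[int, float],
                   floor: float = IDLE_FLOOR_PCT) -> list[int]:
    """Return the CPU numbers whose idle percentage is at or below the floor."""
    return sorted(cpu for cpu, idle in idle_by_cpu.items() if idle <= floor)


if __name__ == "__main__":
    idle = {0: 62.0, 1: 0.4, 2: 75.5, 3: 80.1, 4: 71.0, 5: 68.3, 6: 77.9, 7: 79.2}
    busy = maxed_out_cpus(idle)
    print("maxed-out CPUs:", busy if busy else "none")
```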

Steve

-