2.4.5.a.20200110.1421 and earlier: High CPU usage from pfctl

-

I continue to encounter this problem with Hyper-V VM test installs running the latest RC version of 2.4.5, even with no other packages installed and nothing else configured, on both UFS and ZFS filesystems.

I caught a glimpse of Diagnostics->System Activity during one of these instances:

16355 root 101 0 8828K 4960K CPU1 1 0:27 96.00% /sbin/pfctl -o basic -f /tmp/rules.debug

0 root -92 - 0K 4928K - 0 2:08 92.97% [kernel{hvevent0}]

0 root -92 - 0K 4928K - 2 1:11 89.99% [kernel{hvevent2}]

The rest were around zero.

Should I limit my "production" pfSense VM (2.4.4-p3, used only for a home network; the problem does not occur on it) to one virtual CPU until 2.4.5 is released? (If I give the VM four virtual CPUs, pfSense treats them as four cores, not as separate CPUs.)

-

I am not very familiar with Hyper-V, but I know it can have NUMA or virtual NUMA enabled by default, and I believe FreeBSD in general, and gateways/routers in particular, do not handle it well. I would try disabling NUMA for that VM and ensuring a 1:1 CPU ratio, then see if that makes a difference. That assumes it is enabled at all, of course; you seem to have a pretty good handle on your Hyper-V setup.

-

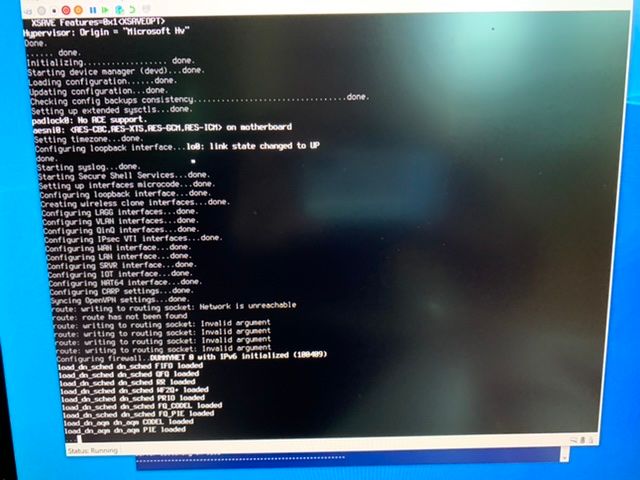

@jimp I noticed heavy CPU usage during boot with Hyper-V.

The packages I have installed are:

ACME certificates

Avahi

OpenVPN client

I do have FQ_CoDel limiters set up.

It seems to spend a lot of time at 100% CPU just after:

load_dn_aqm dn_aqm PIE loaded

-

The update below seemed to fix the boot time delay:

Current Base System: 2.4.5.r.20200211.0854

Latest Base System: 2.4.5.r.20200212.1633

This seems to have fixed the boot speed; boot time went from about 2 minutes to 1 minute.

-

Same trouble after upgrading from 2.4.4 to 2.4.5 under Windows Server 2019 Hyper-V: a very long boot, pfctl process CPU usage sometimes at almost 100%, and the system becomes very slow and unresponsive.

-

Same issue.

-

@aydinj

Do you have the pfBlockerNG package installed? If so, it may be related:

https://forum.netgate.com/topic/151690/increased-memory-and-cpu-spikes-causing-latency-outage-with-2-4-5/19

https://forum.netgate.com/topic/151726/pfblockerng-2-1-4_21-totally-lag-system-after-pfsense-upgrade-from-2-4-4-to-2-4-5

-

No. It has a serious problem, even at boot. Even on a clean install, it is extremely slow and gets stuck for minutes on "Firewall configuration". I think this new version has a problem with Hyper-V.

-

Same issue on Hyper-V. The only workaround is dropping to 1 CPU.

-

I can also confirm this on Hyper-V Server 2019 Core (Dell T20, Xeon). After upgrading my 2.4.4 VM to 2.4.5, I now see extremely high CPU usage at boot when all 4 of my cores are assigned to the pfSense VM. This also seems to be caused by the pfctl process, and it drags out the boot process (up to 10 minutes to get past configuring interfaces WAN > LAN, etc.).

After reducing the VM to 2 cores, it still uses high CPU at boot (2 minutes to boot), but I can at least use the internet and the web GUI (CPU under 20%, which is still high for my setup; it is usually 1-3%). I also notice that with the new OpenVPN version, when utilising my WAN (only 4 mb/sec down), the openvpn process shows 23% CPU in top, which is unusually high.

It seems the OS is not optimised for Hyper-V, so I am reverting to 2.4.4 and hoping the Netgate devs will update this forum thread.

-

@Magma82

I have now been running pfSense 2.4.5 with 2 CPU cores under Hyper-V Server 2019 for almost 2 days, with no high CPU usage at all after disabling the pfBlockerNG GeoIP lists.

-

@Gektor Check out this thread. Worked for me.

https://www.reddit.com/r/pfBlockerNG/comments/fqjdc5/pfblockerngdevel_downloading_lists_but_not_able/flqzkgp/

-

I am seeing this too. Running Hyper-V on a Dell R720; the VM had 4 CPUs assigned and no packages installed. CPU on the VM would spike to 100% for a few minutes at a time, drop to normal briefly (no more than 30 seconds or so), then go back to 100%.

Following recommendations earlier in the thread, I dropped the VM down to 1 CPU, and as far as I can tell everything operates normally again. Since it's not a busy firewall, having 1 CPU is no real issue for me, so I have no urgent need to roll back to 2.4.4. I'll stay where I am until a patch is issued.

It sounds like the problem is specific to multiple CPUs on Hyper-V.

-

I am seeing this too. We are running QEMU 4.1.1, kernel 5.3 (KVM), with Skylake-Client CPU emulation.

The problem started with 2.4.4, and upgrading to 2.4.5 did not solve it.

The workaround is to drop to one (1) core.

-

Netgate devs, CPU performance under Hyper-V is definitely broken in 2.4.5. Are there perhaps Hyper-V integration tools or libraries missing from the OS build?

Boot-up CPU with 4 cores assigned: a constant 100% at an early stage of the boot process, and the system is barely accessible once booted.

OpenVPN: CPU usage is also considerably higher under load (as if the CPU isn't optimised for the VM).

I have reverted to 2.4.4, and it's rock solid, uses under 3% CPU, and just feels a lot more optimised.

Hardware

stable 2.4.4-RELEASE-p3 (amd64)

Intel(R) Xeon(R) CPU E3-1225 v3 @ 3.20GHz

4 CPUs: 1 package(s) x 4 core(s)

AES-NI CPU Crypto: Yes (active)

Hardware crypto: AES-CBC, AES-XTS, AES-GCM, AES-ICM

-

Same issue after upgrading, on Server 2019 with Hyper-V, custom hardware (Ryzen 2700), no packages installed. It pegs the CPU on boot and is basically unusable.

I set the VM to 1 virtual processor to get it working, but that is sub-optimal for OpenVPN clients. I also experimented with assigning just 2 virtual processors; it runs sluggishly.

I will look to revert to the 2.4.4-p3 snapshot in the near future.

Edit: Since I had nothing to lose and this is a test lab, I moved up to the 2.5.0 development snapshot (2.5.0.a.20200403.1017). 2.5.0 does not seem to have the Hyper-V CPU issue.

-

It's the same in a VM on vSphere. I run 32 cores on a test system, and they all go to almost 100% shortly after boot.

I noticed the server spinning its fans a lot harder, looked in the hypervisor, and sure enough: almost 100% and not handling traffic at all.

I was running 2.4.4-p3 with no issues until Suricata wouldn't start. Then I had to upgrade, and it died.

-

I made a clean install on my ESXi host with 4 CPUs, and upgraded from 2.4.4-p3 to 2.4.5 on another server running QEMU/KVM with 4 Westmere CPUs.

Both have Suricata installed, and I have never had such a problem. I'm unable to reproduce it in my test lab; it must be some setting.

-

Same problem here too: Hyper-V 2016, pfSense 2.4.5.

5GB RAM

4 CPUs

pfBlockerNG

It sits for ages on 'firewall' and also on DHCPv6 before booting; really sluggish, with dropped packets galore.

I dropped back to a single CPU, and all is OK on 2.4.5.

-

Same problem: pfSense 2.4.4 installed on VMware ESXi, with Suricata, pfBlockerNG, Squid, SquidGuard and Lightsquid installed. After upgrading to 2.4.5, the latency went haywire. However, I've managed to resolve my problem: I reduced the VM from 8 vCPUs to 1 vCPU, then did the upgrade to 2.4.5. Everything worked fine except that Suricata wouldn't start, so I did a forced pkg reinstall. Everything worked fine after that; I then added 3 more vCPUs, and it has been working fine ever since.