2.4.5 High latency and packet loss, not in a vm

-

@jwj said in 2.4.5 High latency and packet loss, not in a vm:

@jdeloach You can do that in a Virtual Machine, bare metal hardware not so much.

Yeah, that is what I thought. Need some more coffee this morning. I haven't run programs in a virtual machine before. I'll have to give that a try someday. Thanks.

-

@Cool_Corona said in 2.4.5 High latency and packet loss, not in a vm:

Downgraded to 1 core and everything came up quickly and is working as expected.

This is a great observation! Testing now.

-

2 cores worked great for me on my test box, but when I went up to 8 cores like the production box, everything went downhill. Luckily I changed the core count on the test box before trying it on the production box.

-

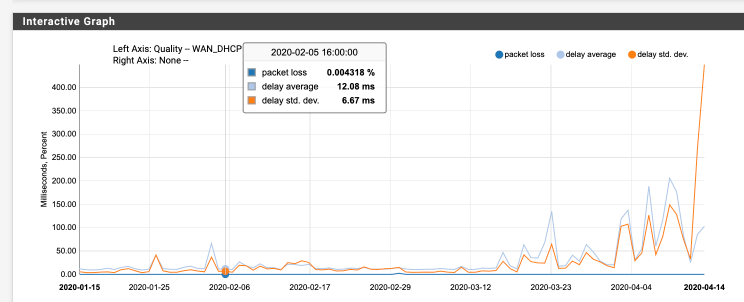

Here's my 3-month WAN latency graph showing the clear increase in average latency since the 2.4.5 upgrade. I just added it to my support ticket. This does not look like a typical short-term ISP latency increase.

-

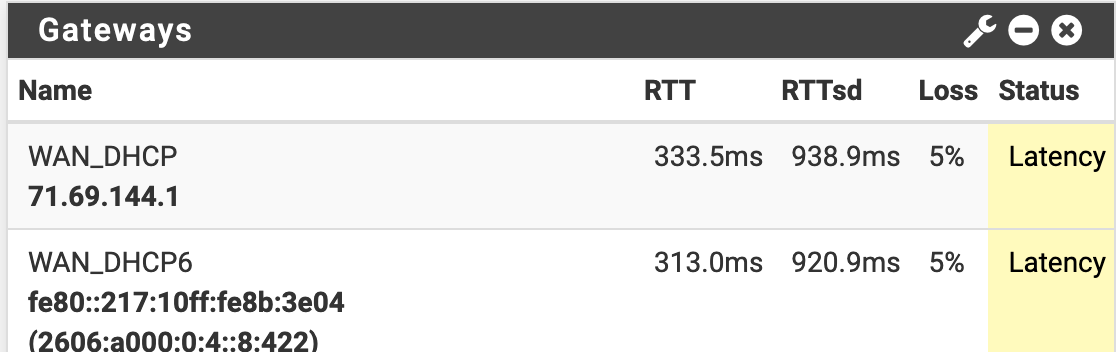

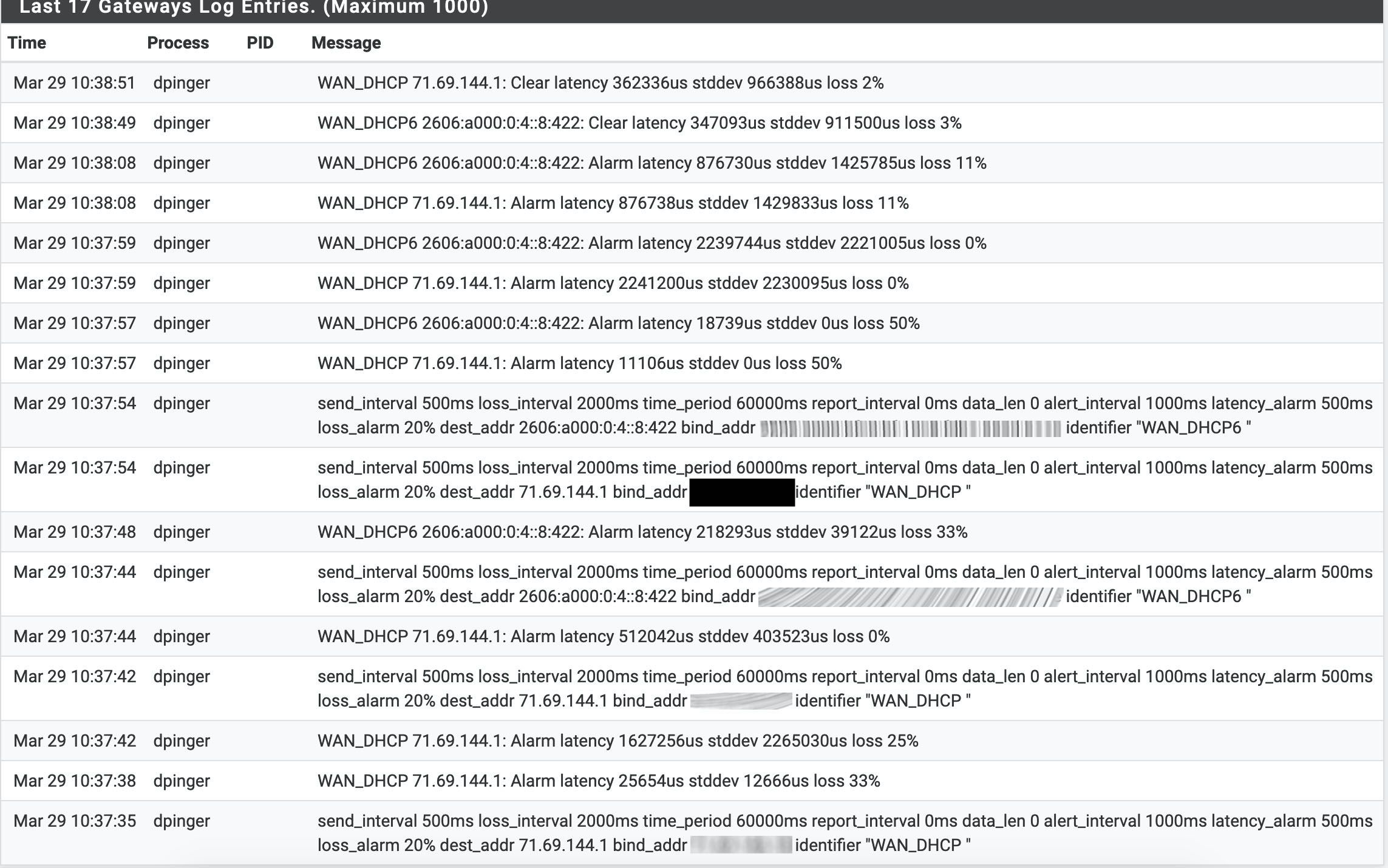

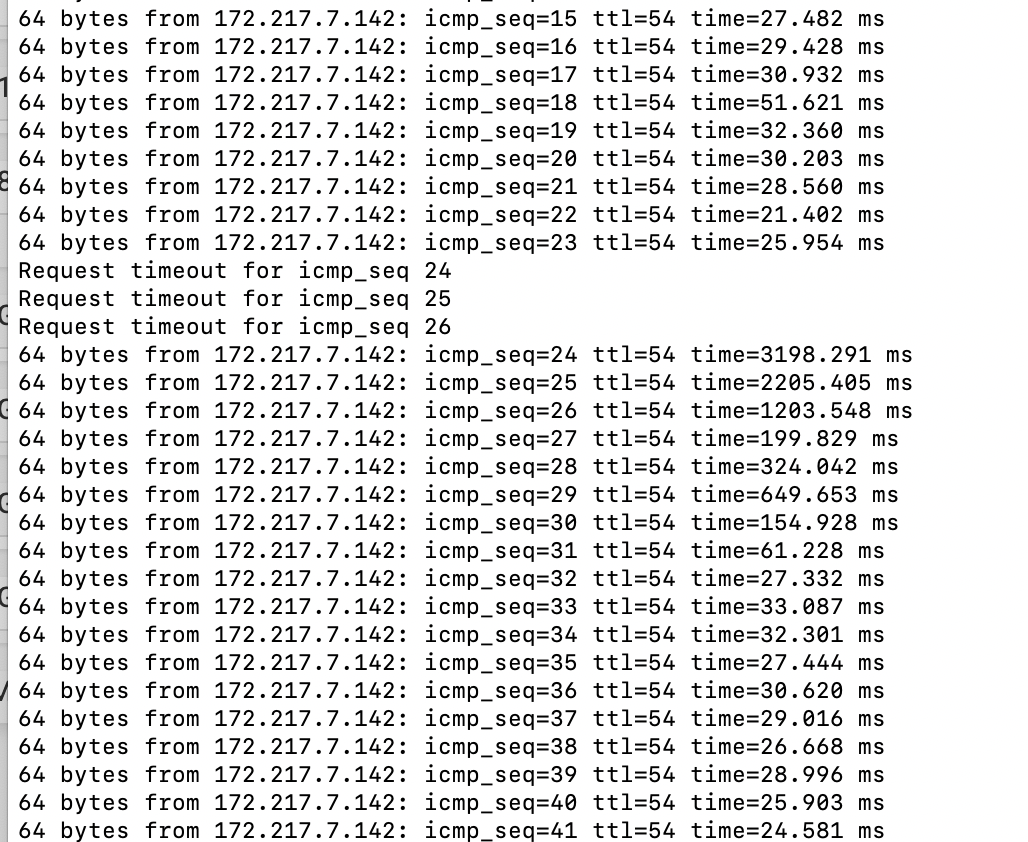

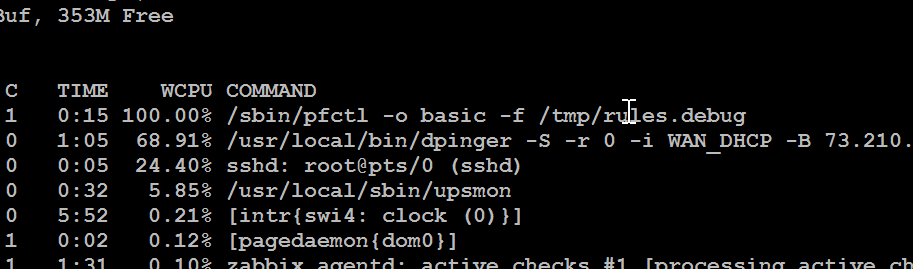

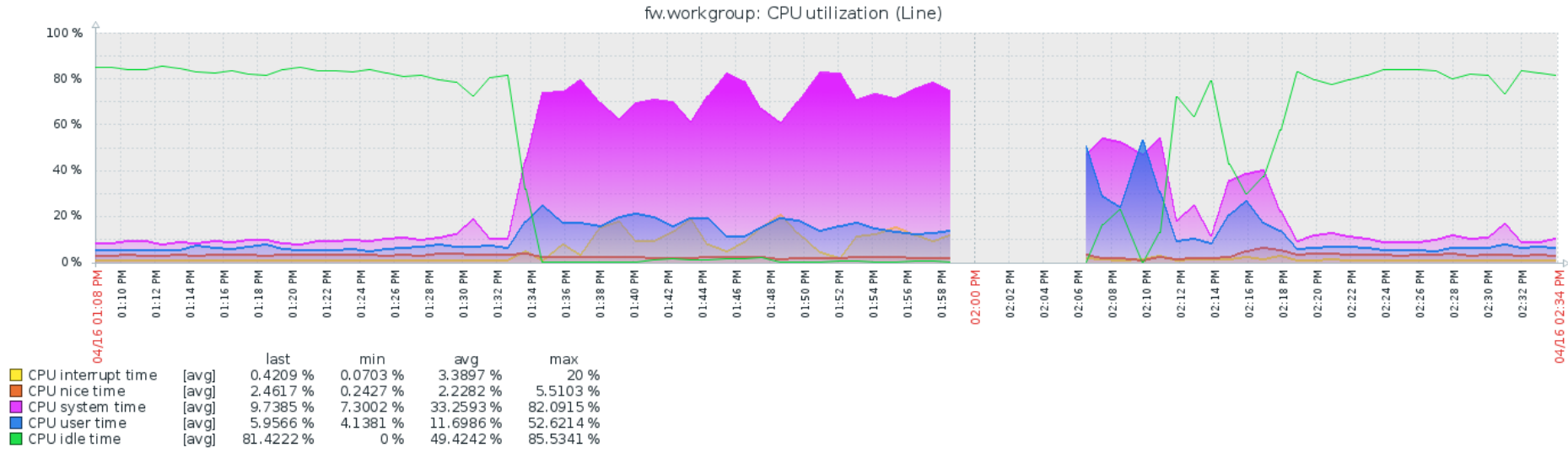

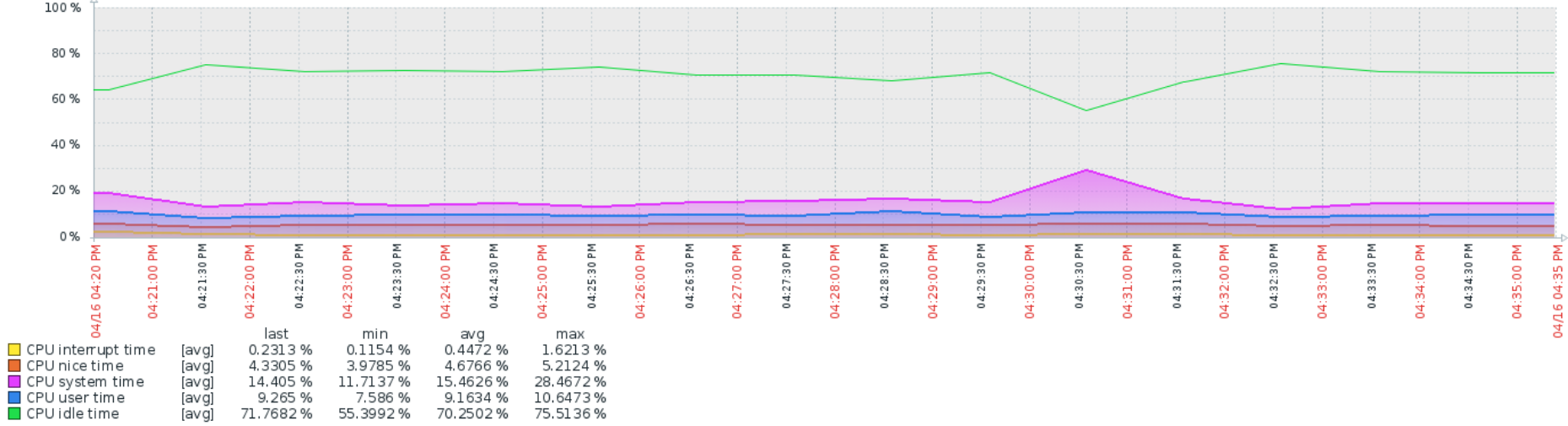

No idea if this is related, but I tried adding 2 monitor IPs to my v4 and v6 gateways and immediately got latency and drops. Even after removing them, I could barely get control of the web GUI and had to reboot. The screenshots above are from top -aSH and the processor load graph.

-

@provels That is exactly the bug, yup! The fix for now appears to be dropping to one vCPU, if you're able.

-

@muppet Thanks. Think I'd rather keep the procs! My Hyper-V host is pretty lame!

-

@provels Then I suggest rolling back to your 2.4.4-p3 snapshot.

-

@muppet It's just li'l ole me here. I have 2.4.4-p3, 2.4.5 UFS and 2.4.5 ZFS available in the lab.

-

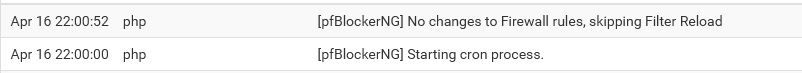

I get timeouts when the filter is reloading.

Still running the 8-core setup with only minor issues. It's not production-ready yet, since it times out when this happens:

Apr 16 22:06:33 xinetd 13368 Reconfigured: new=0 old=4 dropped=0 (services)

Apr 16 22:06:33 xinetd 13368 readjusting service 19001-udp

Apr 16 22:06:33 xinetd 13368 readjusting service 19001-tcp

Apr 16 22:06:33 xinetd 13368 readjusting service 19000-udp

Apr 16 22:06:33 xinetd 13368 readjusting service 19000-tcp

Apr 16 22:06:33 xinetd 13368 Swapping defaults

Apr 16 22:06:33 xinetd 13368 Starting reconfiguration

Apr 16 22:06:32 php-fpm 68319 /rc.openvpn: Gateway, none 'available' for inet6, use the first one configured. ''

Apr 16 22:06:32 php-fpm 68319 /rc.openvpn: Gateway, none 'available' for inet, use the first one configured. 'WANGW'

Apr 16 22:06:31 check_reload_status Reloading filter

Apr 16 22:06:31 check_reload_status Restarting OpenVPN tunnels/interfaces

Apr 16 22:06:31 check_reload_status Restarting ipsec tunnels

Apr 16 22:06:31 check_reload_status updating dyndns WANGW

Apr 16 22:06:31 rc.gateway_alarm 66212 >>> Gateway alarm: WANGW (Addr:81.19.224.67 Alarm:0 RTT:1.945ms RTTsd:.093ms Loss:14%)

Apr 16 22:04:44 xinetd 13368 Reconfigured: new=0 old=4 dropped=0 (services)

Apr 16 22:04:44 xinetd 13368 readjusting service 19001-udp

Apr 16 22:04:44 xinetd 13368 readjusting service 19001-tcp

Apr 16 22:04:44 xinetd 13368 readjusting service 19000-udp

Apr 16 22:04:44 xinetd 13368 readjusting service 19000-tcp

Apr 16 22:04:44 xinetd 13368 Swapping defaults

Apr 16 22:04:44 xinetd 13368 Starting reconfiguration

Apr 16 22:04:44 php-fpm 61919 /rc.openvpn: Gateway, none 'available' for inet6, use the first one configured. ''

Apr 16 22:04:44 php-fpm 61919 /rc.openvpn: Gateway, none 'available' for inet, use the first one configured. 'WANGW'

Apr 16 22:04:43 check_reload_status Reloading filter

Apr 16 22:04:43 check_reload_status Restarting OpenVPN tunnels/interfaces

Apr 16 22:04:43 check_reload_status Restarting ipsec tunnels

Apr 16 22:04:43 check_reload_status updating dyndns WANGW

Apr 16 22:04:43 rc.gateway_alarm 2496 >>> Gateway alarm: WANGW (Addr:81.19.224.67 Alarm:1 RTT:928.897ms RTTsd:5161.512ms Loss:18%)

Apr 16 22:04:00 xinetd 13368 Reconfigured: new=0 old=4 dropped=0 (services)

Apr 16 22:04:00 xinetd 13368 readjusting service 19001-udp

Apr 16 22:04:00 xinetd 13368 readjusting service 19001-tcp

Apr 16 22:04:00 xinetd 13368 readjusting service 19000-udp

Apr 16 22:04:00 xinetd 13368 readjusting service 19000-tcp

Apr 16 22:04:00 xinetd 13368 Swapping defaults

Apr 16 22:04:00 xinetd 13368 Starting reconfiguration

Apr 16 22:03:27 php-fpm 32951 /rc.openvpn: Gateway, none 'available' for inet6, use the first one configured. ''

Apr 16 22:03:27 php-fpm 32951 /rc.openvpn: Gateway, none 'available' for inet, use the first one configured. 'WANGW'

Apr 16 22:03:26 check_reload_status Reloading filter

Apr 16 22:03:26 check_reload_status Restarting OpenVPN tunnels/interfaces

Apr 16 22:03:26 check_reload_status Restarting ipsec tunnels

Apr 16 22:03:26 check_reload_status updating dyndns WANGW

Apr 16 22:03:26 rc.gateway_alarm 62528 >>> Gateway alarm: WANGW (Addr:81.19.224.67 Alarm:0 RTT:4.679ms RTTsd:26.791ms Loss:20%)

Apr 16 22:03:16 xinetd 13368 Reconfigured: new=0 old=4 dropped=0 (services)

Apr 16 22:03:16 xinetd 13368 readjusting service 19001-udp

Apr 16 22:03:16 xinetd 13368 readjusting service 19001-tcp

Apr 16 22:03:16 xinetd 13368 readjusting service 19000-udp

Apr 16 22:03:16 xinetd 13368 readjusting service 19000-tcp

Apr 16 22:03:16 xinetd 13368 Swapping defaults

Apr 16 22:03:16 xinetd 13368 Starting reconfiguration

Apr 16 22:03:16 php-fpm 19187 /rc.openvpn: Gateway, none 'available' for inet6, use the first one configured. ''

Apr 16 22:03:16 php-fpm 19187 /rc.openvpn: Gateway, none 'available' for inet, use the first one configured. 'WANGW'

Apr 16 22:03:15 check_reload_status Reloading filter

Apr 16 22:03:15 check_reload_status Restarting OpenVPN tunnels/interfaces

Apr 16 22:03:15 check_reload_status Restarting ipsec tunnels

Apr 16 22:03:15 check_reload_status updating dyndns WANGW

Apr 16 22:03:15 rc.gateway_alarm 38824 >>> Gateway alarm: WANGW (Addr:81.19.224.67 Alarm:1 RTT:1.923ms RTTsd:.079ms Loss:21%)

Apr 16 22:00:52 php [pfBlockerNG] No changes to Firewall rules, skipping Filter Reload

Apr 16 22:00:00 php [pfBlockerNG] Starting cron process.

That's 6 minutes where the network is unreachable.
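To compare the four gateway alarms in the log above side by side, here is a minimal Python sketch that pulls the gateway name, monitor address, alarm flag, RTT, RTT standard deviation and loss out of each `rc.gateway_alarm` line. It assumes the exact field layout shown in these log lines; the regex and the names `parse_alarm`, `GatewayAlarm` and `SAMPLE_LINES` are hypothetical and not part of pfSense.

```python
import re
from typing import NamedTuple, Optional

# Matches the rc.gateway_alarm format seen in the log above, e.g.
#   Gateway alarm: WANGW (Addr:81.19.224.67 Alarm:1 RTT:928.897ms RTTsd:5161.512ms Loss:18%)
# Assumption: this exact field order; other pfSense versions may differ.
ALARM_RE = re.compile(
    r"Gateway alarm: (?P<gw>\S+) \(Addr:(?P<addr>\S+) Alarm:(?P<alarm>\d+) "
    r"RTT:(?P<rtt>[\d.]+)ms RTTsd:(?P<rttsd>[\d.]+)ms Loss:(?P<loss>\d+)%\)"
)


class GatewayAlarm(NamedTuple):  # hypothetical container, not a pfSense type
    gateway: str
    addr: str
    alarm: int
    rtt_ms: float
    rttsd_ms: float
    loss_pct: int


def parse_alarm(line: str) -> Optional[GatewayAlarm]:
    """Return the parsed fields, or None if the line is not a gateway alarm."""
    m = ALARM_RE.search(line)
    if m is None:
        return None
    return GatewayAlarm(
        gateway=m["gw"],
        addr=m["addr"],
        alarm=int(m["alarm"]),
        rtt_ms=float(m["rtt"]),  # float(".093") is valid Python
        rttsd_ms=float(m["rttsd"]),
        loss_pct=int(m["loss"]),
    )


# The four alarm lines from the log above, oldest first.
SAMPLE_LINES = [
    "Apr 16 22:03:15 rc.gateway_alarm 38824 >>> Gateway alarm: WANGW "
    "(Addr:81.19.224.67 Alarm:1 RTT:1.923ms RTTsd:.079ms Loss:21%)",
    "Apr 16 22:03:26 rc.gateway_alarm 62528 >>> Gateway alarm: WANGW "
    "(Addr:81.19.224.67 Alarm:0 RTT:4.679ms RTTsd:26.791ms Loss:20%)",
    "Apr 16 22:04:43 rc.gateway_alarm 2496 >>> Gateway alarm: WANGW "
    "(Addr:81.19.224.67 Alarm:1 RTT:928.897ms RTTsd:5161.512ms Loss:18%)",
    "Apr 16 22:06:31 rc.gateway_alarm 66212 >>> Gateway alarm: WANGW "
    "(Addr:81.19.224.67 Alarm:0 RTT:1.945ms RTTsd:.093ms Loss:14%)",
]

if __name__ == "__main__":
    for line in SAMPLE_LINES:
        a = parse_alarm(line)
        if a is not None:
            print(f"{a.gateway} alarm={a.alarm} rtt={a.rtt_ms}ms "
                  f"sd={a.rttsd_ms}ms loss={a.loss_pct}%")
```

Returning None for non-matching lines means you can run the whole system log through it and the xinetd and check_reload_status entries are simply skipped.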

-

This is all the same thing. We are past the point of 'is this a problem'. It is. Netgate is working on it. They have solicited configuration data and some users have provided that.

The impact of changing gateway configuration (monitor IP, polling frequency, averaging time, etc.) was reported at least once back on April 4 (look back in this thread) and acknowledged by Netgate.

The simplest way to manage this:

- If you can manage on 2.4.5 without disruption, stay put.

- If not, downgrade to 2.4.4-p3. Adjust the settings in System->Upgrade as needed.

Now we wait to see what happens. At some point 2.4.5-p1 will come out. When? I don't think anyone knows at the moment.

Not in a passive mood? Open a ticket at Netgate Support and ask what you can provide that they would find helpful.

Just a note: When you post system log data with gateway alarms you might want to obscure your public IP, especially if it's a static IP. Just saying...
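If you want to mask addresses before pasting log lines, a minimal Python sketch is below. It assumes only dotted-quad IPv4 addresses need hiding (IPv6 is left untouched), and the name `redact_ipv4` and the `x.x.x.x` mask are hypothetical choices. Note that it masks every IPv4 address, including a gateway's monitor IP, so check the result by eye before posting.

```python
import re

# Matches dotted-quad IPv4 addresses such as 81.19.224.67.
# Assumption: only IPv4 needs masking; IPv6 addresses are not touched.
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact_ipv4(line: str, mask: str = "x.x.x.x") -> str:
    """Replace every IPv4-looking token in a log line with the mask."""
    return IPV4_RE.sub(mask, line)


if __name__ == "__main__":
    sample = ("rc.gateway_alarm 66212 >>> Gateway alarm: WANGW "
              "(Addr:81.19.224.67 Alarm:0 RTT:1.945ms RTTsd:.093ms Loss:14%)")
    print(redact_ipv4(sample))
```

RTT values like 1.945ms have only one dot, so they do not match the four-part pattern and are left readable.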

-

@jwj said in 2.4.5 High latency and packet loss, not in a vm:

Just a note: When you post system log data with gateway alarms you might want to obscure your public IP, especially if it's a static IP. Just saying...

It's the monitor IP, not one of our own systems :)

-

- Hyper-V VM
- 2,000,000 table entries
- bogons enabled
- pfBNG enabled
- monitor IPs enabled
- single core

No problems. Enabling monitor IPs was the only thing that affected my system with 2 processors; everything else was the same.

-

Yes, that's replicable everywhere I've tried it. The devs working on this are aware.

Steve

-

I have been told that this issue has been replicated in the Netgate lab and they are working on a fix.

I would also remind everyone who is impatient for a fix that the support and development staff at Netgate have been challenged in ways no one could have predicted a few months ago: a massive surge in demand for remote access across their installed customer base, and all that goes with it. They have offered support to non-paying pfSense users as well as to their customers on contract to meet these changing needs.

So, they are on it.

https://www.netgate.com/blog/usns-mercy-steps-up-its-network-for-covid-19-support.html

-

https://forum.netgate.com/post/908806

-
