pfSense 2.5.2 crash when enable Synchronize states

-

I upgraded pfSense from 2.5.1 to 2.5.2 today and "Synchronize states" is disabled now.

When I try to enable it, pfSense hangs, crashes, and then restarts. After the restart, pfSense can't determine which version it is running.

In package manager it shows: There are no packages currently installed.

After the next reboot it works again, but I still can't enable HA Synchronize states.

I have crash dump files, but I don't see anything in those files. I'm attaching them anyway.

info.0

textdump.tar

-

HA sync for XMLRPC and states is working fine here for me on 2.5.2.

I don't recognize the specific backtrace you're getting, though.

Not much changed at the OS level between 2.5.1 and 2.5.2.

    Sleeping thread (tid 100169, pid 67656) owns a non-sleepable lock
    KDB: stack backtrace of thread 100169:
    sched_switch() at sched_switch+0x630/frame 0xfffffe00027e6070
    mi_switch() at mi_switch+0xd4/frame 0xfffffe00027e60a0
    sleepq_timedwait() at sleepq_timedwait+0x2f/frame 0xfffffe00027e60e0
    _sleep() at _sleep+0x1c8/frame 0xfffffe00027e6160
    pause_sbt() at pause_sbt+0xf1/frame 0xfffffe00027e6190
    e1000_write_phy_reg_mdic() at e1000_write_phy_reg_mdic+0xed/frame 0xfffffe00027e61d0
    e1000_access_phy_wakeup_reg_bm() at e1000_access_phy_wakeup_reg_bm+0x6c/frame 0xfffffe00027e6210
    __e1000_write_phy_reg_hv() at __e1000_write_phy_reg_hv+0x8f/frame 0xfffffe00027e6260
    e1000_update_mc_addr_list_pch2lan() at e1000_update_mc_addr_list_pch2lan+0xae/frame 0xfffffe00027e62a0
    em_if_multi_set() at em_if_multi_set+0x1d1/frame 0xfffffe00027e62f0
    iflib_if_ioctl() at iflib_if_ioctl+0x119/frame 0xfffffe00027e6360
    lagg_ioctl() at lagg_ioctl+0x155/frame 0xfffffe00027e6470
    if_addmulti() at if_addmulti+0x442/frame 0xfffffe00027e6500
    vlan_setmulti() at vlan_setmulti+0x15d/frame 0xfffffe00027e6550
    vlan_ioctl() at vlan_ioctl+0x99/frame 0xfffffe00027e65c0
    if_addmulti() at if_addmulti+0x442/frame 0xfffffe00027e6650
    in_joingroup_locked() at in_joingroup_locked+0x267/frame 0xfffffe00027e6710
    in_joingroup() at in_joingroup+0x52/frame 0xfffffe00027e6750
    pfsyncioctl() at pfsyncioctl+0x7d4/frame 0xfffffe00027e67d0
    ifioctl() at ifioctl+0x499/frame 0xfffffe00027e6890
    kern_ioctl() at kern_ioctl+0x2b7/frame 0xfffffe00027e68f0
    sys_ioctl() at sys_ioctl+0x101/frame 0xfffffe00027e69c0
    amd64_syscall() at amd64_syscall+0x387/frame 0xfffffe00027e6af0
    fast_syscall_common() at fast_syscall_common+0xf8/frame 0xfffffe00027e6af0
    --- syscall (54, FreeBSD ELF64, sys_ioctl), rip = 0x8004544fa, rsp = 0x7fffffffe368, rbp = 0x7fffffffe3b0 ---
    panic: sleeping thread
    cpuid = 1
    time = 1625770766
    KDB: enter: panic
    db:0:kdb.enter.default> show pcpu
    cpuid = 1
    dynamic pcpu = 0xfffffe007f091380
    curthread = 0xfffff8000c85a740: pid 85290 tid 100272 "haproxy"
    curpcb = 0xfffff8000c85ace0
    fpcurthread = 0xfffff8000c85a740: pid 85290 "haproxy"
    idlethread = 0xfffff8000435d740: tid 100004 "idle: cpu1"
    curpmap = 0xfffff801c6a2e138
    tssp = 0xffffffff83717688
    commontssp = 0xffffffff83717688
    rsp0 = 0xfffffe004d2bdbc0
    kcr3 = 0x80000001c6e5510d
    ucr3 = 0x80000001c6e5690d
    scr3 = 0x1c6c4094f
    gs32p = 0xffffffff8371dea0
    ldt = 0xffffffff8371dee0
    tss = 0xffffffff8371ded0
    tlb gen = 20053
    curvnet = 0xfffff800040b5bc0
    db:0:kdb.enter.default> bt
    Tracing pid 85290 tid 100272 td 0xfffff8000c85a740
    kdb_enter() at kdb_enter+0x37/frame 0xfffffe004d2bcbc0
    vpanic() at vpanic+0x197/frame 0xfffffe004d2bcc10
    panic() at panic+0x43/frame 0xfffffe004d2bcc70
    propagate_priority() at propagate_priority+0x282/frame 0xfffffe004d2bcca0
    turnstile_wait() at turnstile_wait+0x30c/frame 0xfffffe004d2bccf0
    __mtx_lock_sleep() at __mtx_lock_sleep+0x199/frame 0xfffffe004d2bcd80
    pfsync_defer() at pfsync_defer+0x234/frame 0xfffffe004d2bcde0
    pf_test_rule() at pf_test_rule+0x1be8/frame 0xfffffe004d2bd280
    pf_test() at pf_test+0x2448/frame 0xfffffe004d2bd4e0
    pf_check_out() at pf_check_out+0x1d/frame 0xfffffe004d2bd500
    pfil_run_hooks() at pfil_run_hooks+0xa1/frame 0xfffffe004d2bd5a0
    ip_output() at ip_output+0xb4f/frame 0xfffffe004d2bd6e0
    tcp_output() at tcp_output+0x1b1b/frame 0xfffffe004d2bd890
    tcp_usr_connect() at tcp_usr_connect+0x195/frame 0xfffffe004d2bd8e0
    soconnect() at soconnect+0xae/frame 0xfffffe004d2bd920
    kern_connectat() at kern_connectat+0xe2/frame 0xfffffe004d2bd980
    sys_connect() at sys_connect+0x75/frame 0xfffffe004d2bd9c0
    amd64_syscall() at amd64_syscall+0x387/frame 0xfffffe004d2bdaf0
    fast_syscall_common() at fast_syscall_common+0xf8/frame 0xfffffe004d2bdaf0
    --- syscall (98, FreeBSD ELF64, sys_connect), rip = 0x800a3067a, rsp = 0x7fffffffe5f8, rbp = 0x7fffffffe630 ---

It does appear to be in `pfsync`, though.

Are both nodes on 2.5.2? Do both crash?

I'd try resetting the state table on both units before enabling pfsync again.

-

@jimp thanks for response!

Only a single node is resetting itself on 2.5.2; it is the master node, if that matters.

It was working on 2.5.1.

One weird thing, though it started in 2.5.1 (I think it was fine in 2.5.0): the NRPE service syncs its settings between the firewalls (the bind IP), so it's not working properly.

I will reset the states this afternoon and check whether it still crashes.

-

Config sync and state sync are unrelated, so problems in one area are unlikely to be related to the other (and vice versa).

Though if something isn't syncing correctly, that's usually due to some other inconsistency in the HA configuration, such as interfaces not being assigned in the same order on both nodes, or the internal interface names somehow not matching up.
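For anyone who wants to check the assignment order without clicking through both GUIs, here is a minimal sketch. It assumes each node's exported `config.xml` keeps its assignments under an `<interfaces>` element whose children (`wan`, `lan`, `opt1`, ...) each carry `<if>` and `<descr>` tags. The two sample configs and the helper names are hypothetical and heavily trimmed, not taken from this thread.

```python
import xml.etree.ElementTree as ET


def assigned_interfaces(config_xml):
    """Return (internal name, description) pairs in assignment order."""
    root = ET.fromstring(config_xml)
    interfaces = root.find("interfaces")
    if interfaces is None:
        return []
    return [(node.tag, (node.findtext("descr") or "").strip()) for node in interfaces]


def assignment_differences(primary, secondary):
    """List the slots where the two nodes disagree on name or description."""
    differences = []
    for index in range(max(len(primary), len(secondary))):
        left = primary[index] if index < len(primary) else None
        right = secondary[index] if index < len(secondary) else None
        if left != right:
            differences.append((index, left, right))
    return differences


# Hypothetical sample data: LAN and SYNC are swapped on the secondary.
PRIMARY = """<pfsense><interfaces>
  <wan><if>lagg0.10</if><descr>WAN</descr></wan>
  <lan><if>lagg0.11</if><descr>LAN</descr></lan>
  <opt1><if>lagg0.13</if><descr>SYNC</descr></opt1>
</interfaces></pfsense>"""

SECONDARY = """<pfsense><interfaces>
  <wan><if>vtnet0.10</if><descr>WAN</descr></wan>
  <lan><if>vtnet0.13</if><descr>SYNC</descr></lan>
  <opt1><if>vtnet0.11</if><descr>LAN</descr></opt1>
</interfaces></pfsense>"""

diffs = assignment_differences(
    assigned_interfaces(PRIMARY), assigned_interfaces(SECONDARY)
)
for index, left, right in diffs:
    print(f"slot {index}: primary={left} secondary={right}")
```

An empty result only says the internal names and descriptions line up; it says nothing about the underlying device names, which matter for state sync rather than config sync.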

-

@jimp I reset the states and it hangs anyway.

The interfaces are in identical order; I rechecked. Is there any other way to troubleshoot this besides reinstalling from scratch?

-

Is the hardware setup identical? Meaning are the two nodes either the exact same make/model or at least have the same network interface names?

I'm not aware of anything that would cause trouble like what you are seeing, so it's not quite clear what the next step might be to narrow it down.

What are your pfsync settings in the GUI on each node?

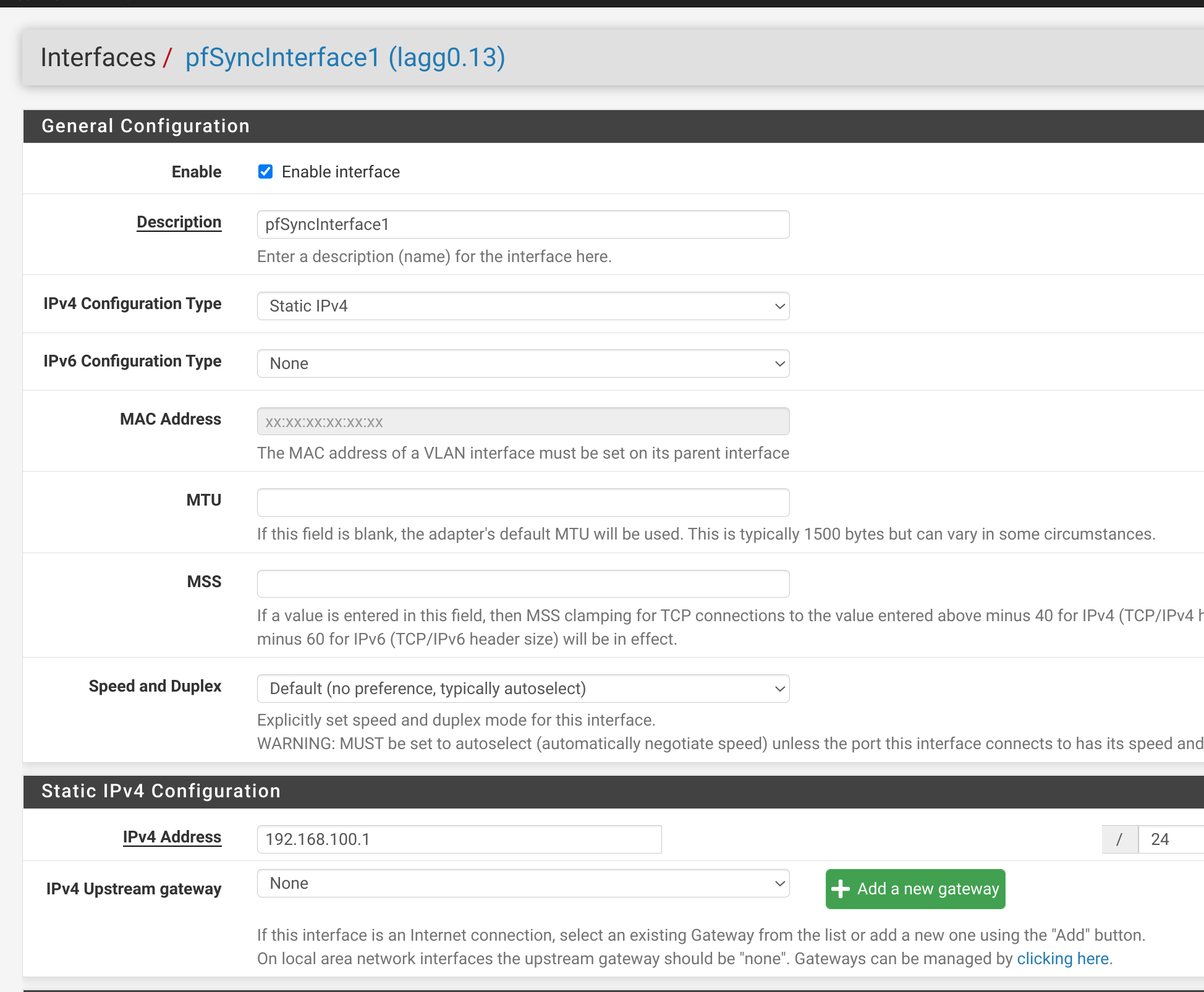

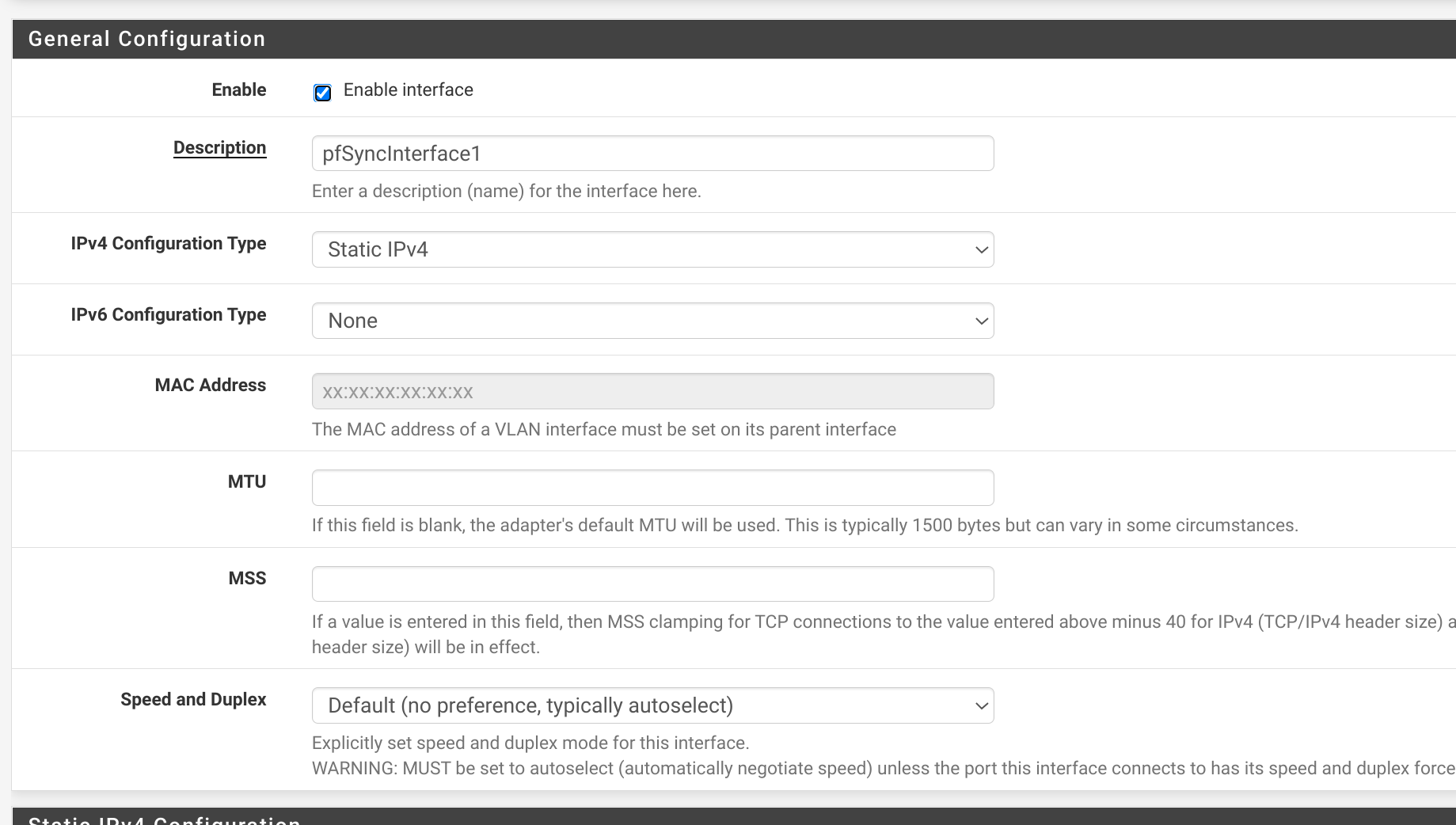

What does the interface config on both look like for the interface where the sync happens (e.g. `ifconfig igbX`)?

What does the status of the `pfsync0` interface look like (`ifconfig pfsync0`)?

-

@jimp said in pfSense 2.5.2 crash when enable Synchronize states:

Is the hardware setup identical? Meaning are the two nodes either the exact same make/model or at least have the same network interface names?

The hardware is different: the backup router is a VM. The interface names are identical, but the interface IDs are different. The first one is based on lagg0 and the second one on vtnet0.

I'm not aware of anything that would cause trouble like what you are seeing, so it's not quite clear what the next step might be to narrow it down.

What are your pfsync settings in the GUI on each node?

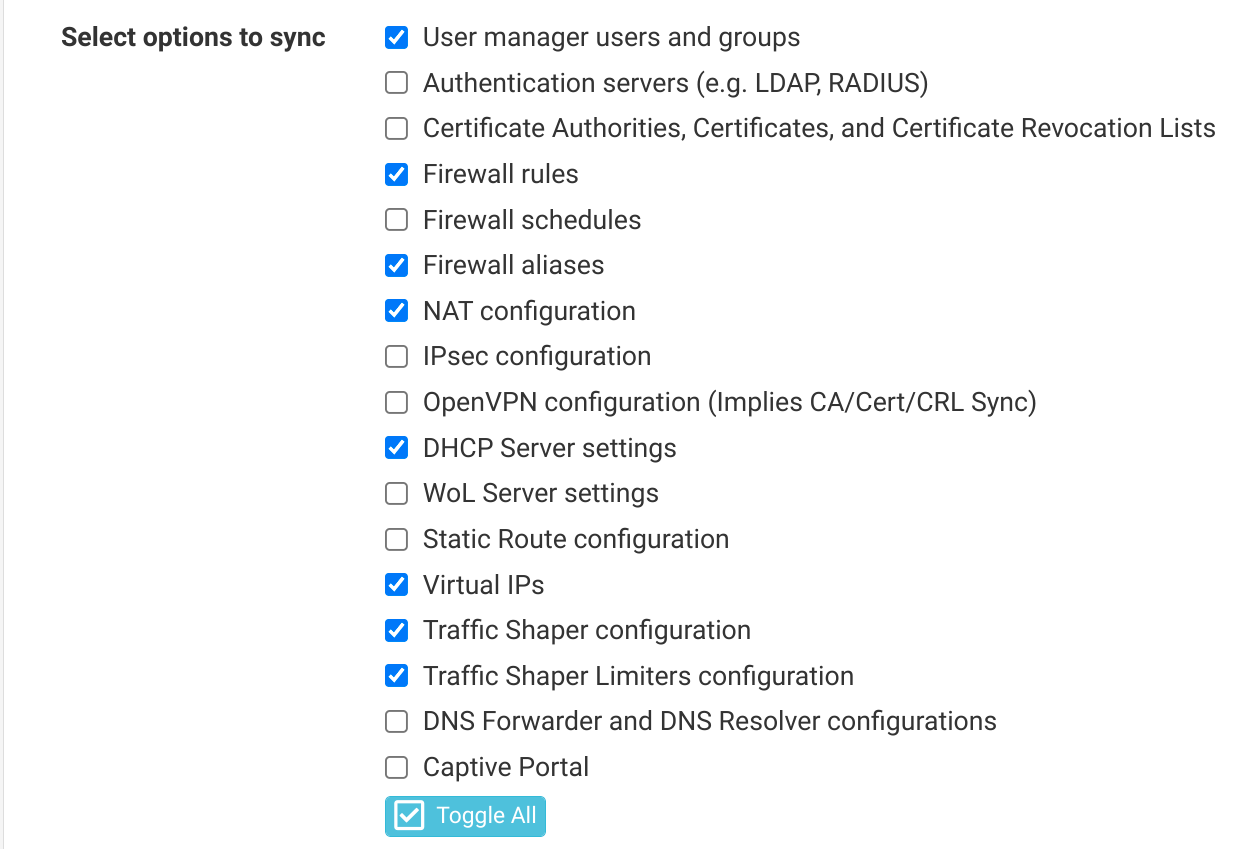

pfSync on 1st node:

pfSync on 2nd node:

What does the interface config on both look like for the interface where the sync happens (e.g. `ifconfig igbX`)?

What does the status of the `pfsync0` interface look like (`ifconfig pfsync0`)?

For the first node, as HA is disabled right now, it looks like:

    pfsync0: flags=0<> metric 0 mtu 1500
        groups: pfsync

For the second one:

    pfsync0: flags=41<UP,RUNNING> metric 0 mtu 1500
        pfsync: syncdev: vtnet0.13 syncpeer: 192.168.100.1 maxupd: 128 defer: off
        syncok: 1
        groups: pfsync

I have pfSense in the second branch of our office with a similar setup (hardware router1 and virtual router2) on the "old" pfSense 2.5.0, and it still works there without problems.
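To compare the `ifconfig pfsync0` output of the two nodes side by side, a small parser can help. This is only a sketch: the field names are the ones visible in the output quoted in this post, the helper names are made up, and other versions of `ifconfig` may print the fields differently.

```python
import re

# Fields that appear in the pfsync0 status quoted in this thread.
FIELD_PATTERN = re.compile(r"\b(syncdev|syncpeer|maxupd|defer|syncok): (\S+)")
FLAGS_PATTERN = re.compile(r"flags=\w+<([^>]*)>")


def parse_pfsync_status(text):
    """Return the pfsync fields plus the list of interface flags."""
    fields = dict(FIELD_PATTERN.findall(text))
    flags = FLAGS_PATTERN.search(text)
    fields["flags"] = [f for f in flags.group(1).split(",") if f] if flags else []
    return fields


def sync_is_configured(fields):
    """True when the interface is UP and has a sync device set."""
    return "UP" in fields["flags"] and "syncdev" in fields


# The two outputs posted above, first node then second node.
NODE1 = "pfsync0: flags=0<> metric 0 mtu 1500 groups: pfsync"
NODE2 = (
    "pfsync0: flags=41<UP,RUNNING> metric 0 mtu 1500 "
    "pfsync: syncdev: vtnet0.13 syncpeer: 192.168.100.1 "
    "maxupd: 128 defer: off syncok: 1 groups: pfsync"
)

node1 = parse_pfsync_status(NODE1)
node2 = parse_pfsync_status(NODE2)
print(sync_is_configured(node1), sync_is_configured(node2))
print(node2["syncdev"], node2["syncpeer"])
```

With the outputs above, only the second node reports a sync device, which is consistent with state sync being switched off on the first node.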

-

OK, if the interface names are different then pfsync isn't doing anything for you at the moment.

States are bound to specific interfaces and if those names are not identical on both (e.g. both `lagg0` for the same interface like WAN/LAN or whatever) then the states would never match on the secondary node and vice versa.

That will change on future versions but is still true for now.

That may be the source of your problem, though I don't recall seeing it crash like that before.

For now I'd recommend keeping state synchronization off since it doesn't give you any benefits. That, or find a way to make the interface names line up, like changing the VM to use laggX interfaces even if the laggs only have a single member.

-

@jimp I have it off for now, but I'm curious what's wrong.

You're saying that the interface names should be the same, but would a mismatch cause pfSense to crash?

If so, then if I turn off the second node and enable pfsync over multicast, in theory it should enable without crashing?

-

I don't expect it to crash, but if the interfaces don't match then state sync does nothing for you. The states are there but they cannot match traffic.

Using an example here, say the LAN on the primary is `ix0` and on the secondary it is `igb0`. The state will have something like this (very much simplified):

    ix0 from a.a.a.a:xxxx -> b.b.b.b:yyy

So even if the addresses match on the secondary, because the interface does not match, the state is ignored and the traffic would be dropped.
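The same idea as a toy model, for illustration only: real pf states carry many more fields and this is not how pf stores or looks them up. The `State` type, the `find_state` helper, and the addresses (taken from the documentation ranges) are all made up for the example.

```python
from typing import NamedTuple


class State(NamedTuple):
    """Very simplified state entry: interface plus the two endpoints."""
    interface: str
    src: str
    dst: str


def find_state(table, interface, src, dst):
    """Return the matching state, or None when no entry fits the packet."""
    wanted = State(interface, src, dst)
    return wanted if wanted in table else None


# State created on the primary, where LAN is ix0, then synced as-is.
synced = {State("ix0", "192.0.2.10:51000", "198.51.100.5:443")}

# After failover the same flow arrives on the secondary's LAN, igb0.
on_secondary = find_state(synced, "igb0", "192.0.2.10:51000", "198.51.100.5:443")

# If both nodes called the LAN ix0, the synced state would match.
with_matching_names = find_state(synced, "ix0", "192.0.2.10:51000", "198.51.100.5:443")

print(on_secondary)  # None
print(with_matching_names)
```

Because the interface name is part of what gets compared, identical addresses and ports are not enough for the synced entry to be found.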

If you failover from one node to the other, all the connections have to be rebuilt by clients. In practice, you won't notice it a ton since most connections are short lived or things like UDP which are technically stateless. You'll see it most with persistent / long-term connections.

If you just do something like ping you may not notice since it's stateless as well.

What I'm saying is you have two choices:

- Make the interface names match one way or another and keep pfsync enabled. I don't know if your crash is related to this, so it could still happen in this case, but it would narrow down the cause at least.

- Disable pfsync and live with just config sync and let states be held individually on the HA nodes, which is essentially what you're doing now and didn't know.

-

@jimp said in pfSense 2.5.2 crash when enable Synchronize states:

That will change on future versions

Yay :) will be nice when replacing hardware.

@pszafer State sync does work with a one-NIC LAGG but that doesn't work with traffic shaping.

-

If you can tag a VLAN on that interface, traffic shaping does work with LAGG+VLANs since at that point traffic shaping is only on the VLAN, not the LAGG directly.

We had hoped the updated pf code that lets this work would be in 2.5.2, but it still needed some work and had to be backed out.

It's in 2.6.0 snapshots already but still needs work; it may be a couple of weeks before it's in a state where this would be testable in a viable way.