Slow DNS Resolver Infrastructure Cache Speed

-

I am having some issues with DNS.

I am running unbound with Forwarding Mode enabled and have noticed that, from time to time, DNS grinds to a complete halt.

I've noticed that this coincides with the pings shown on Status / DNS Resolver being very high.

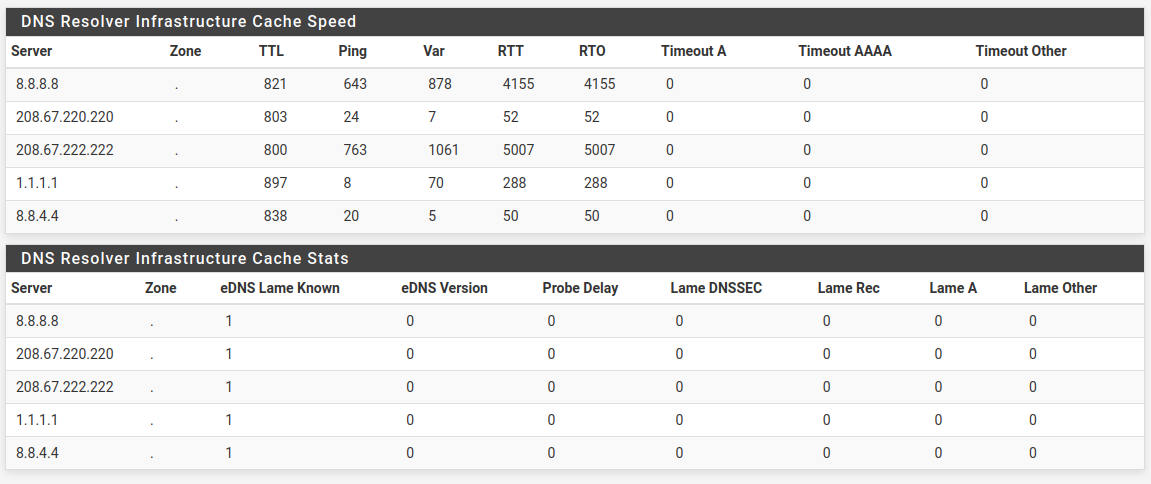

My current pings are:

When I ping each of the servers I get 8 ms to each IP, but once none of the servers shows a ping under ~1000 ms on that page, DNS stops working, even though ping to each remains at ~8 ms.
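A minimal sketch of the check being done by eye here, assuming a snapshot of the per-server ping values from Status / DNS Resolver has been typed into a dict. The addresses and numbers below are hypothetical, and `usable_servers` is an illustrative helper, not part of pfSense or Unbound. The 1000 ms limit is simply the point at which lookups were seen to stop in this post, not a documented Unbound constant.

```python
# Hypothetical helper: which forwarders are still under the ping limit at which
# lookups were observed to stop in this thread.
def usable_servers(rtts_ms, limit_ms=1000):
    """Return servers whose reported ping (ms) is below limit_ms, fastest first."""
    under = [ip for ip, rtt in rtts_ms.items() if rtt < limit_ms]
    return sorted(under, key=lambda ip: rtts_ms[ip])


if __name__ == "__main__":
    # Made-up snapshot of the per-server values shown on Status / DNS Resolver.
    snapshot = {"208.67.222.222": 1400, "208.67.220.220": 990, "8.8.8.8": 1250}
    ok = usable_servers(snapshot)
    if ok:
        print("still under the limit:", ", ".join(ok))
    else:
        print("no forwarder under the limit: expect lookups to stall")
```

Run against successive snapshots, the moment the list comes back empty is the moment DNS was seen to stop. The script cannot explain why the reported values climb while ICMP ping stays at ~8 ms.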

When I run `unbound-control -c /var/unbound/unbound.conf stats_noreset | grep rate`:

thread0.num.queries_ip_ratelimited=0

thread1.num.queries_ip_ratelimited=0

thread2.num.queries_ip_ratelimited=0

thread3.num.queries_ip_ratelimited=0

total.num.queries_ip_ratelimited=0

num.query.ratelimited=0

When I run `unbound-control -c /var/unbound/unbound.conf stats_noreset | grep total.num`:

total.num.queries=56264

total.num.queries_ip_ratelimited=0

total.num.cachehits=25097

total.num.cachemiss=31167

total.num.prefetch=1508

total.num.expired=0

total.num.recursivereplies=31167

The average recursion times don't reflect the high ping values:

`unbound-control -c /var/unbound/unbound.conf stats_noreset | grep recursion`

thread0.recursion.time.avg=0.267957

thread0.recursion.time.median=0.0242643

thread1.recursion.time.avg=0.224297

thread1.recursion.time.median=0.0244268

thread2.recursion.time.avg=0.206835

thread2.recursion.time.median=0.0242813

thread3.recursion.time.avg=0.129227

thread3.recursion.time.median=0.0234074

total.recursion.time.avg=0.191963

total.recursion.time.median=0.024095

I don't believe this is ISP-related, because I have a few pfSense systems on multiple ISPs in different regions (same DNS servers specified), and each shows high levels of latency.

Has anyone encountered this?
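The counters above can be checked with a short script. This is a sketch, assuming the `name=value` format shown in the output; `parse_stats`, `nonzero_ratelimits` and `cache_hit_ratio` are hypothetical helpers. Fed the numbers posted above, it confirms nothing was rate limited and that about 45% of queries (25097 of 56264) were answered from cache, with the remaining 31167 misses matching total.num.recursivereplies.

```python
def parse_stats(text):
    """Parse `name=value` lines, as printed by `unbound-control stats_noreset`, into a dict."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.strip().partition("=")
        if not sep:
            continue  # skip blank or non-counter lines
        try:
            stats[name] = float(value)
        except ValueError:
            continue  # ignore anything that is not a number
    return stats


def nonzero_ratelimits(stats):
    """Names of rate-limit counters that are not zero."""
    return sorted(k for k, v in stats.items() if "ratelimited" in k and v != 0)


def cache_hit_ratio(stats):
    """Fraction of queries answered from cache (cachehits / queries)."""
    queries = stats.get("total.num.queries", 0)
    return stats.get("total.num.cachehits", 0) / queries if queries else 0.0


# Counters copied from the output posted above.
SAMPLE = """
total.num.queries=56264
total.num.queries_ip_ratelimited=0
total.num.cachehits=25097
total.num.cachemiss=31167
num.query.ratelimited=0
"""

if __name__ == "__main__":
    stats = parse_stats(SAMPLE)
    print("non-zero rate-limit counters:", nonzero_ratelimits(stats) or "none")
    print(f"cache hit ratio: {cache_hit_ratio(stats):.1%}")
```

On the firewall itself you could paste the real `stats_noreset` output into `SAMPLE` instead; the parser skips any line without an `=`.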

-

Did you try the default Resolver mode?

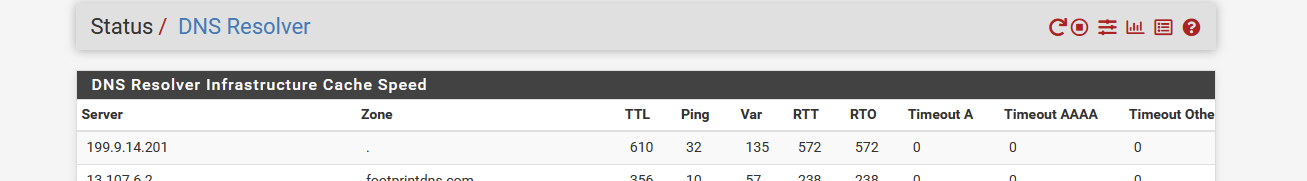

Forwarding tends to be a couple of ms faster, but with "+700" on the scale I wouldn't bother. This is what I consider 'not fast', even on my 23 Mbit/s down, 2 Mbit/s up ADSL-type connection:

Note: 199.9.14.201 is the B-root server (b.root-servers.net), one of the 13 real Internet root DNS servers, not a commercial one.

Btw: it's always the ISP! Either your uplink is saturated or, further upstream, their peering to 8.8.8.8 etc. is plain bad.

Do a bufferbloat test.

Your "Infrastructure Cache Stats" is empty?! Did you just restart unbound?

Mine restarted a week or so ago. Keep in mind that a restart empties the cache, so all the speed benefits are gone. My cache contains several thousand entries.

-

@eria211 it's a bad idea to forward to NS that do different stuff. You have OpenDNS in there "filtering", and then you have non-filtering NS as well.

So which is it: do you want stuff filtered, or do you want stuff not filtered? Because you have no idea which one is going to get asked.
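To illustrate the point, here is a toy simulation, assuming (as a simplification) that each query goes to a forwarder picked uniformly at random. Unbound's real selection also takes measured response times into account, so the actual split will differ, but either way the client does not get to choose. The pool is hypothetical: the two OpenDNS addresses from this thread, treated as filtering, plus 8.8.8.8, treated as non-filtering.

```python
import random
from collections import Counter

# Hypothetical forwarder pool mixing filtering and non-filtering upstreams.
POOL = {
    "208.67.222.222": "filtering",
    "208.67.220.220": "filtering",
    "8.8.8.8": "non-filtering",
}


def simulate(n_queries, seed=0):
    """Count which kind of upstream answers each query under uniform random choice.

    Uniform choice is a simplification of Unbound's real server selection.
    """
    rng = random.Random(seed)
    servers = list(POOL)
    return Counter(POOL[rng.choice(servers)] for _ in range(n_queries))


if __name__ == "__main__":
    print(simulate(1000))
```

Whatever the exact proportions, some share of lookups lands on each kind of server, so the same name can be filtered on one query and resolved on the next.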

-

@gertjan Thanks for your reply.

I will switch a few over to the default resolver mode and report back.

I have not restarted unbound since yesterday. I did think it was odd that there was nothing in the infrastructure cache, but when I run

`unbound-control -c /var/unbound/unbound.conf dump_cache`

I do get output that is seemingly thousands of lines long.

Could this be a GUI bug in the cache display, or do I have a cache problem? I am getting identical behavior on multiple pfSense units.

@johnpoz I wasn't aware that 208.67.222.222 or 208.67.220.220 had filtering in place; I thought these OpenDNS servers were the unfiltered ones.

-

@eria211 said in Slow DNS Resolver Infrastructure Cache Speed:

I thought these opendns servers were the unfiltered ones

They don't have those, unless you went into your control panel and set filtering to none for the IP doing the queries. And even if you do that, I believe they still filter some stuff.

-

@johnpoz OK, thank you for that. I will disable forwarding mode later, when the devices aren't in use.

Should I have entries in the DNS Resolver Infrastructure Cache Stats, or am I encountering a GUI bug? I do get results when I dump the cache in the terminal, but not when viewing it in the GUI.

-

@eria211 what do you think should be in your cache stats? It would be the NS you have talked to. The probe delay and lame values should normally be zeros.

When you forward, the only NS listed there would be the ones you're forwarding to. I have hundreds of entries because I resolve, not forward.
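As a rough way to see this on your own box, the sketch below counts distinct server addresses in `dump_infra` output. It assumes each line starts with the server IP followed by the zone name; the sample lines are made up and abbreviated, and `distinct_servers` is a hypothetical helper. On a forwarding setup the count should stay close to the number of configured forwarders, while a resolving setup would list many more.

```python
def distinct_servers(dump_text):
    """Return the set of server addresses in `dump_infra`-style output.

    Assumes each non-empty line starts with the server IP, then the zone name.
    """
    return {line.split()[0] for line in dump_text.splitlines() if line.strip()}


# Made-up, abbreviated lines for a box forwarding to three servers.
SAMPLE = """\
208.67.222.222 . ttl 700 ping 9 var 2 rtt 50 rto 50
208.67.220.220 . ttl 650 ping 8 var 3 rtt 50 rto 50
8.8.8.8 . ttl 690 ping 10 var 4 rtt 50 rto 50
"""

if __name__ == "__main__":
    print(len(distinct_servers(SAMPLE)), "servers in the infrastructure cache")
```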

If you want to forward, have at it. I personally do not understand why someone would even want to, unless they had a problematic internet connection or super-high latency, like a satellite connection or something.

Look at your recursion times. Now look at mine, and I resolve.

thread0.recursion.time.avg=0.077631

thread0.recursion.time.median=0.0387047

thread1.recursion.time.avg=0.101424

thread1.recursion.time.median=0.0554224

thread2.recursion.time.avg=0.080589

thread2.recursion.time.median=0.0506946

thread3.recursion.time.avg=0.075362

thread3.recursion.time.median=0.0423724

total.recursion.time.avg=0.079575

total.recursion.time.median=0.0467985

Why would anyone send all their queries to some service? (Guess you trust them very much.) Now, if what you wanted was their filtering, OK, sure. You're trading off trust for the service they provide, etc.
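A quick way to compare the two sets of totals posted in this thread is the ratio of average to median. This is a back-of-the-envelope sketch, not a diagnosis, and `avg_to_median` is a hypothetical helper: an average far above the median suggests most lookups are fast while a tail of slow ones drags the mean up, which would be consistent with the intermittent stalls described in the first post. Note that on these numbers the forwarding setup has the lower median and the resolving setup the lower average.

```python
def avg_to_median(avg, median):
    """Ratio of mean to median; values well above 1 suggest a long tail of slow queries."""
    return avg / median


# total.recursion.time.* values (seconds) as posted in this thread.
POSTED = {
    "eria211, forwarding": (0.191963, 0.024095),
    "johnpoz, resolving": (0.079575, 0.0467985),
}

if __name__ == "__main__":
    for who, (avg, median) in POSTED.items():
        print(f"{who}: avg {avg * 1000:.0f} ms, median {median * 1000:.0f} ms, "
              f"avg/median {avg_to_median(avg, median):.1f}")
```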

-

@eria211 said in Slow DNS Resolver Infrastructure Cache Speed:

or am I encountering a GUI bug?

The GUI runs something like "unbound-control -c /var/unbound/unbound.conf dump_cache" for you and then shows what was dumped.

This might answer a future question. Run this:

`grep 'start' /var/log/resolver.log`

and you know how often unbound restarts == how often the cache gets wiped == how often your local DNS stops functioning while it is restarting.
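A minimal Python equivalent of that `grep`, mainly to show what it counts: it is a plain substring match, so a line containing "restart" (or any other word with "start" in it) is counted too, and the result is an upper bound rather than an exact restart count. The sample lines are made up for illustration; they are not real resolver.log entries, and `count_matches` is a hypothetical helper.

```python
def count_matches(lines, needle="start"):
    """Count lines containing `needle`: a plain substring test, like `grep -c 'start'`."""
    return sum(1 for line in lines if needle in line)


# Made-up lines, not real log output.
SAMPLE_LOG = [
    "made-up line 1: start",
    "made-up line 2: stop",
    "made-up line 3: start",
    "made-up line 4: restart",
]

if __name__ == "__main__":
    print(count_matches(SAMPLE_LOG), "matching lines")
```

Against the real file you would pass an open file handle instead of the list, e.g. inside `with open("/var/log/resolver.log") as fh:`.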

-

@gertjan said in Slow DNS Resolver Infrastructure Cache Speed:

The GUI runs something like "unbound-control -c /var/unbound/unbound.conf dump_cache"

No, the GUI is showing you the INFRASTRUCTURE cache, not the actual cache, which is not the same thing. The infrastructure cache is the info it has about talking to NS: how fast they respond, which ones serve which domains, etc.

Not the cache of, say, www.whatever.tld.

The GUI is showing you this:

`dump_infra`    show ping and edns entries
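For reference, a sketch of how one `dump_infra` line could be split into its fields. The layout assumed here (server IP, zone, then `key value` pairs, with a bare `lame` word introducing the dnssec/rec/A/other lameness flags, and expired entries printed as `expired rto <n>`) is from memory of Unbound's output and may differ between versions; the sample line and the `parse_infra_line` helper are hypothetical. The `delay` and lameness fields are the ones that should normally be zero.

```python
def parse_infra_line(line):
    """Split one assumed `dump_infra` line into (server, zone, fields)."""
    tokens = line.split()
    server, zone, rest = tokens[0], tokens[1], tokens[2:]
    fields = {}
    if rest and rest[0] == "expired":
        fields["expired"] = 1  # expired entries carry only an rto value
        rest = rest[1:]
    i = 0
    while i + 1 < len(rest):
        if rest[i] == "lame":  # bare label with no value of its own
            i += 1
            continue
        fields[rest[i]] = int(rest[i + 1])
        i += 2
    return server, zone, fields


# Hypothetical line; the numbers are made up.
SAMPLE = ("208.67.222.222 . ttl 712 ping 8 var 3 rtt 50 rto 50 tA 0 tAAAA 0 "
          "tother 0 ednsknown 1 edns 0 delay 0 lame dnssec 0 rec 0 A 0 other 0")

if __name__ == "__main__":
    server, zone, f = parse_infra_line(SAMPLE)
    flags = [f.get(k, 0) for k in ("delay", "dnssec", "rec", "A", "other")]
    print(server, zone, "ping", f["ping"], "rtt", f["rtt"],
          "clean" if not any(flags) else "check delay/lame")
```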

-

@johnpoz

Oops, you're right. 'dump_cache' isn't 'dump_infra'.