[solved] pfSense (2.6.0 & 22.01) is very slow on Hyper-V

-

Nearly!

If you're capturing on LAN, you probably want to filter by the IP of the client you are running the test on. And set the capture to, say, 5000 packets.

Steve

-

Okay, thanks for the acknowledgment. Here is the new capture. I had to put it on my webserver for download since it's 5 MB:

https://zebrita.publicvm.com/files/packetcapture(2).cap

-

@dominixise

Here is another one filtered to just my host IP: https://zebrita.publicvm.com/files/packetcapture(3).cap

-

After a bit of digging, it looks like two issues to me.

One in Hyper-V, which I have now resolved (for me, anyhow); fix below.

One in pfSense, which is misreporting throughput (I can live with that until a fix comes).

For Hyper-V, I found this article on RSC (Receive Segment Coalescing): https://www.doitfixit.com/blog/2020/01/15/slow-network-speed-with-hyper-v-virtual-machines-on-windows-server-server-2019/

Once I disabled RSC on all virtual switches, my speed was back to normal. No restart is needed: just go onto the Hyper-V host, open PowerShell, and run the commands to disable RSC on each virtual switch. These are the commands I used:

`Get-VMSwitch -Name LAN | Select-Object *RSC*`

This checks the status. If it reports True, run the next command (LAN is my vSwitch name):

`Set-VMSwitch -Name LAN -EnableSoftwareRsc $false`

This disables RSC; re-run the first command to confirm it is disabled.

If your vSwitch has a space in its name, put double quotes around the name:

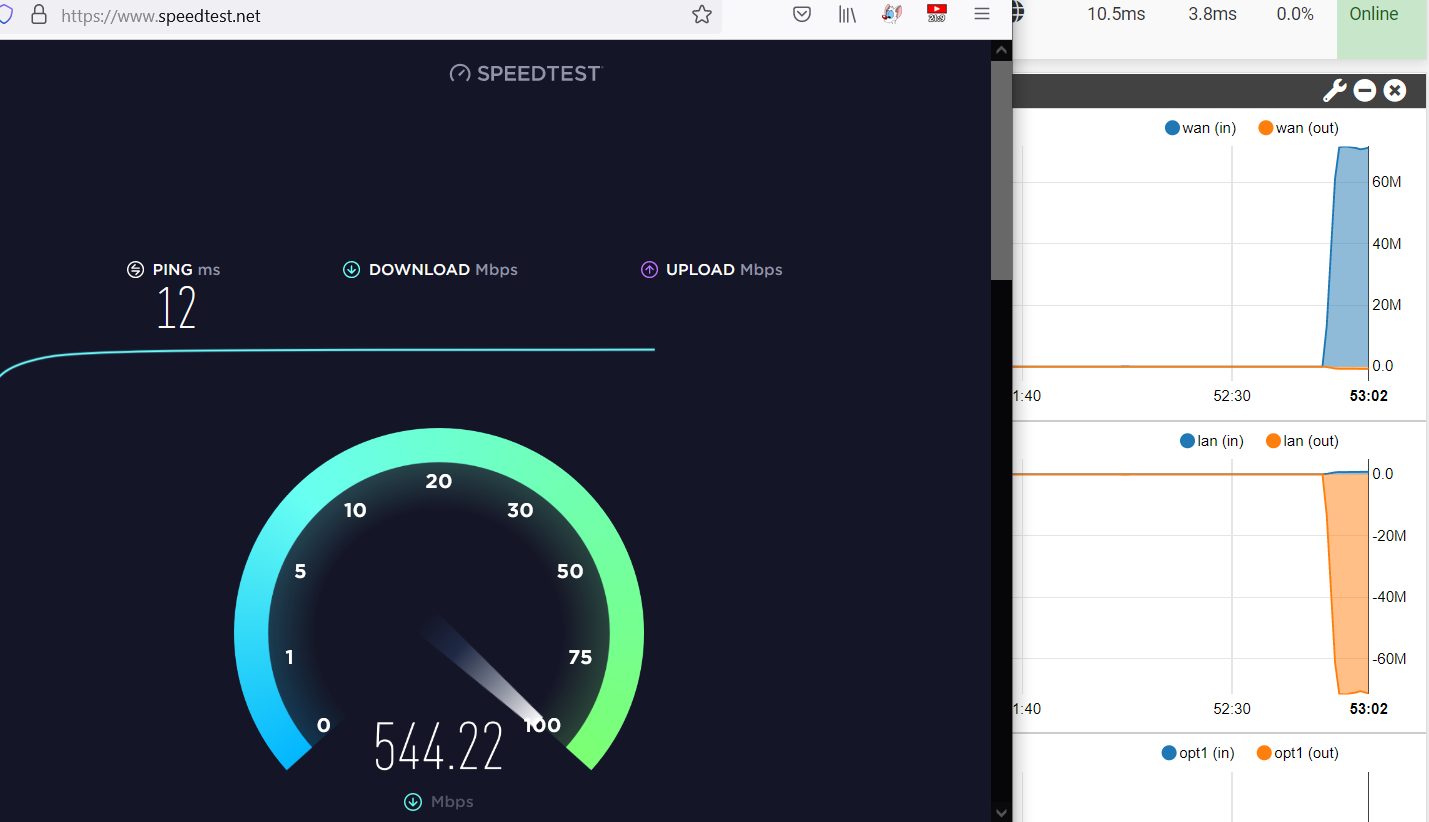

`Get-VMSwitch -Name "WAN #1" | Select-Object *RSC*`

After applying this, speed is back to normal, but pfSense seems to top out at showing 60 Mbps of throughput, even though I was getting over 500 Mbps.

Anyhow, I hope it helps others on Hyper-V (this is a 2019 instance of Hyper-V).
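If a host has several virtual switches, the per-switch commands above can be rolled into one pass. This is a minimal sketch, assuming an elevated PowerShell session on a Windows Server 2019 (or later) Hyper-V host with the Hyper-V module available. It also assumes the switch objects expose a `SoftwareRscEnabled` property, which should be what the RSC status check reports on such a host; check the first command's output on your own host before running the rest.

```powershell
# Sketch: disable software RSC on every Hyper-V virtual switch on this host.
# Assumes: elevated session, Hyper-V PowerShell module, Windows Server 2019+
# (where Set-VMSwitch has the -EnableSoftwareRsc parameter).

# 1. Show the current software RSC state of every virtual switch.
Get-VMSwitch | Select-Object Name, SoftwareRscEnabled

# 2. Disable software RSC on each switch that still has it enabled.
#    Passing $_.Name avoids hand-quoting names with spaces, e.g. "WAN #1".
Get-VMSwitch |
    Where-Object { $_.SoftwareRscEnabled } |
    ForEach-Object {
        Set-VMSwitch -Name $_.Name -EnableSoftwareRsc $false
        Write-Host "Disabled software RSC on '$($_.Name)'"
    }

# 3. Re-check: every switch should now report False.
Get-VMSwitch | Select-Object Name, SoftwareRscEnabled
```

To undo it for a given switch (e.g. once a fix lands), the same `Set-VMSwitch` call with `-EnableSoftwareRsc $true` should turn RSC back on.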

-

@rmh-0 fantastic find!

I can confirm this has resolved it for me too. I'll leave it as is until a fix comes out.

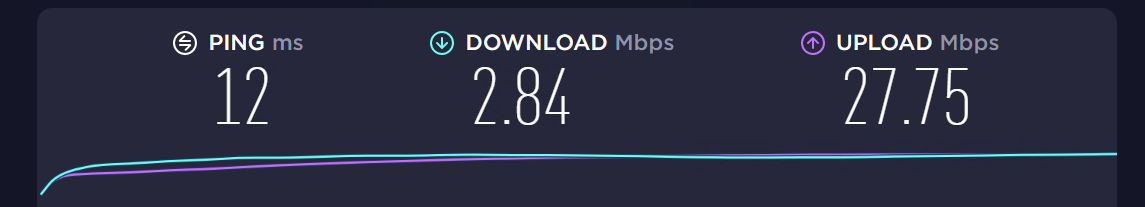

Speed with RSC enabled:

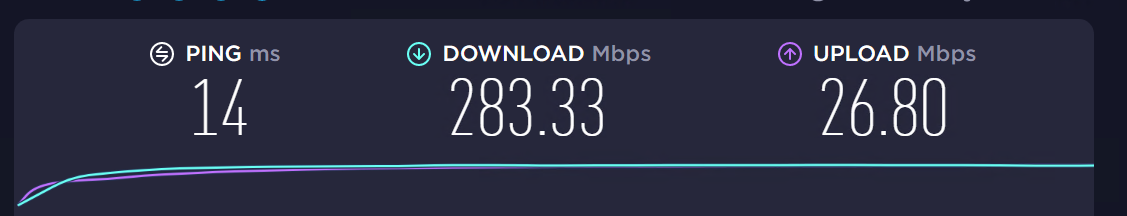

Speed with RSC disabled:

-

Disabling RSC did nothing for me, at least. The problem with the super-slow SMB share over VLAN persists.

-

Mmm, RSC is TCP-only, so I guess that explains why you saw much better throughput with a UDP VPN.

But I'm unsure how the pfSense update would trigger that...

-

@stephenw10 Maybe the new FreeBSD kernel release activated some network-card-level functions that are incompatible with Windows Server? E.g., thanks to @RMH-0, I disabled RSC and speed returned to a normal level.

I know that about 7 years ago I needed to turn off VMQ in a large environment due to a bug in it that made all the guest VMs incredibly slow...

-

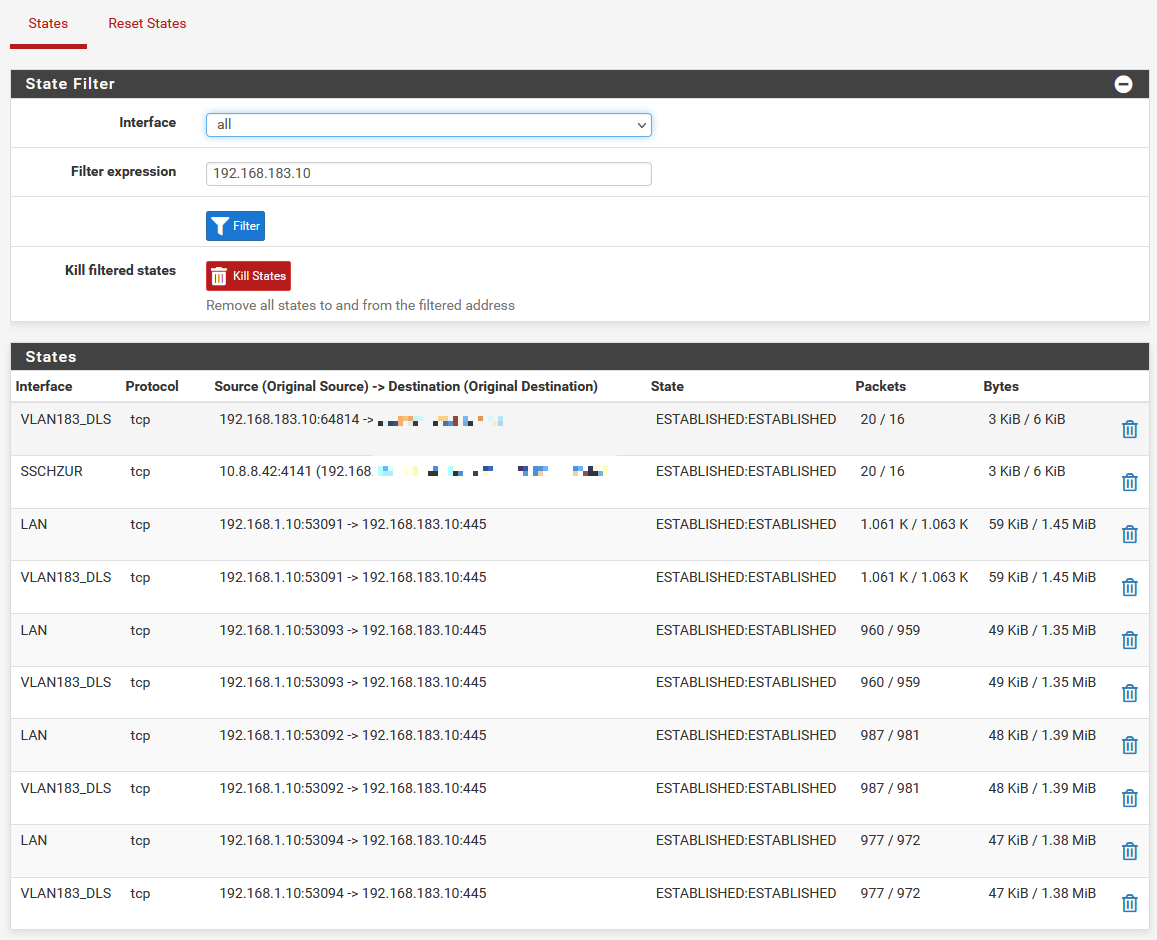

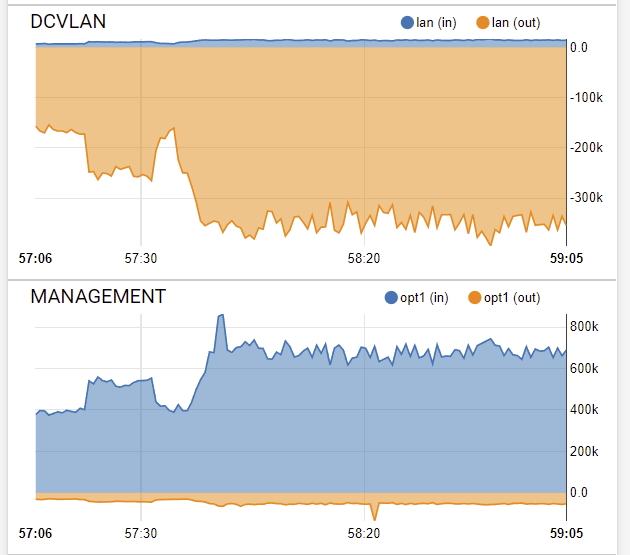

@donzalmrol If you do not mind a quick query as yours is OK now. Do you get a similar representation of throughput in pfsense in comparison to the spee test.

I get the below which is way different. Trying to see if I have another issue or if others have the same.

-

Disabling RSC did nothing for my environment. Inter-VLAN rates are still a fraction of what they were. Between machines on the same VLAN, a file copy takes 3 seconds; between VLANs via the pfSense, this jumps to 45 to 90 minutes.

-

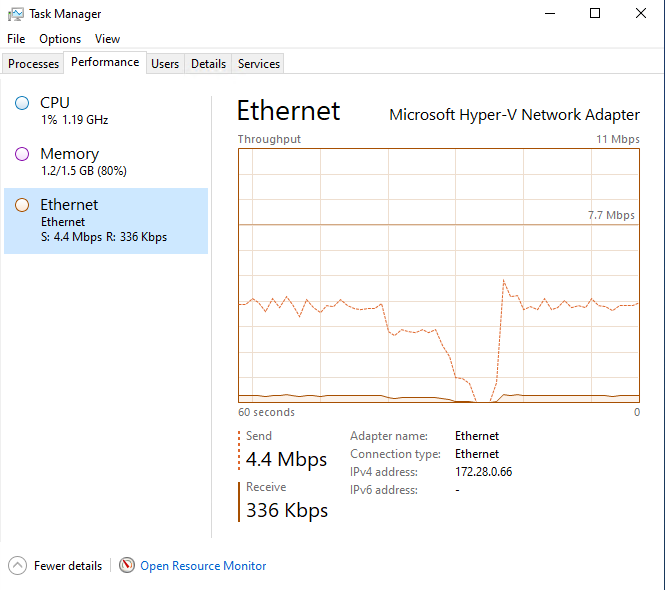

@paulprior This is a file copy in action between VLANs. These are 10 Gb/s virtual adapters!

-

@paulprior From Windows:

-

Maybe they are different problems; I myself had no problem with my WAN speed from the beginning.

-

So, disabling RSC has restored the network speed between the VMs behind the pfSense and the internet (HTTPS download speeds), but the inter-VLAN SMB file copy speeds are awful. Not quite dial-up modem speeds, but almost.

-

Neither of you is using hardware pass-through?

You both have VLANs on hn NICs directly?

I could definitely believe it was some hardware VLAN offload issue. What do you see in:

`sysctl hw.hn`

Steve

-

@stephenw10 said in After Upgrade inter (V)LAN communication is very slow on Hyper-V, for others WAN Speed is affected:

sysctl hw.hn

hw.hn.vf_xpnt_attwait: 2

hw.hn.vf_xpnt_accbpf: 0

hw.hn.vf_transparent: 1

hw.hn.vfmap:

hw.hn.vflist:

hw.hn.tx_agg_pkts: -1

hw.hn.tx_agg_size: -1

hw.hn.lro_mbufq_depth: 0

hw.hn.tx_swq_depth: 0

hw.hn.tx_ring_cnt: 0

hw.hn.chan_cnt: 0

hw.hn.use_if_start: 0

hw.hn.use_txdesc_bufring: 1

hw.hn.tx_taskq_mode: 0

hw.hn.tx_taskq_cnt: 1

hw.hn.lro_entry_count: 128

hw.hn.direct_tx_size: 128

hw.hn.tx_chimney_size: 0

hw.hn.tso_maxlen: 65535

hw.hn.udpcs_fixup_mtu: 1420

hw.hn.udpcs_fixup: 0

hw.hn.enable_udp6cs: 1

hw.hn.enable_udp4cs: 1

hw.hn.trust_hostip: 1

hw.hn.trust_hostudp: 1

hw.hn.trust_hosttcp: 1

It looks the same on both "machines".

-

@stephenw10 I moved the Windows machine to a new vNIC and vSwitch, this time without VLAN. The problem persists, so it seems not to be VLAN-related.

-

There are two loader variables we set in Azure that you don't have:

`hw.hn.vf_transparent="0"`

`hw.hn.use_if_start="1"`

I have no particular insight into what those do, though. And that didn't change in 2.6.

How does your traffic between internal interfaces differ from traffic via your WAN in the new setup?

Steve

-

@stephenw10 There is no difference at all.

For the last two hours I have been testing with iperf between the hosts, with both the old and the new pfSense, and I couldn't measure any difference... so it might be SMB-specific?

I only see one other person having the same problem.

It wouldn't be the first time I've had to install pfSense fresh from the get-go after a new version. Whatever my use case is, it might be special...

So I guess "This is the Way".

-

I finally had to revert to v2.5.2; the performance on 2.6.0 is just too poor to cope with. I'll have another shot at testing 2.6.0 at the weekend.

Lesson learned on my part: always take a checkpoint before upgrading the firmware.

On the plus side, 2.5.2 is blisteringly fast!