[solved] pfSense (2.6.0 & 22.01) is very slow on Hyper-V

-

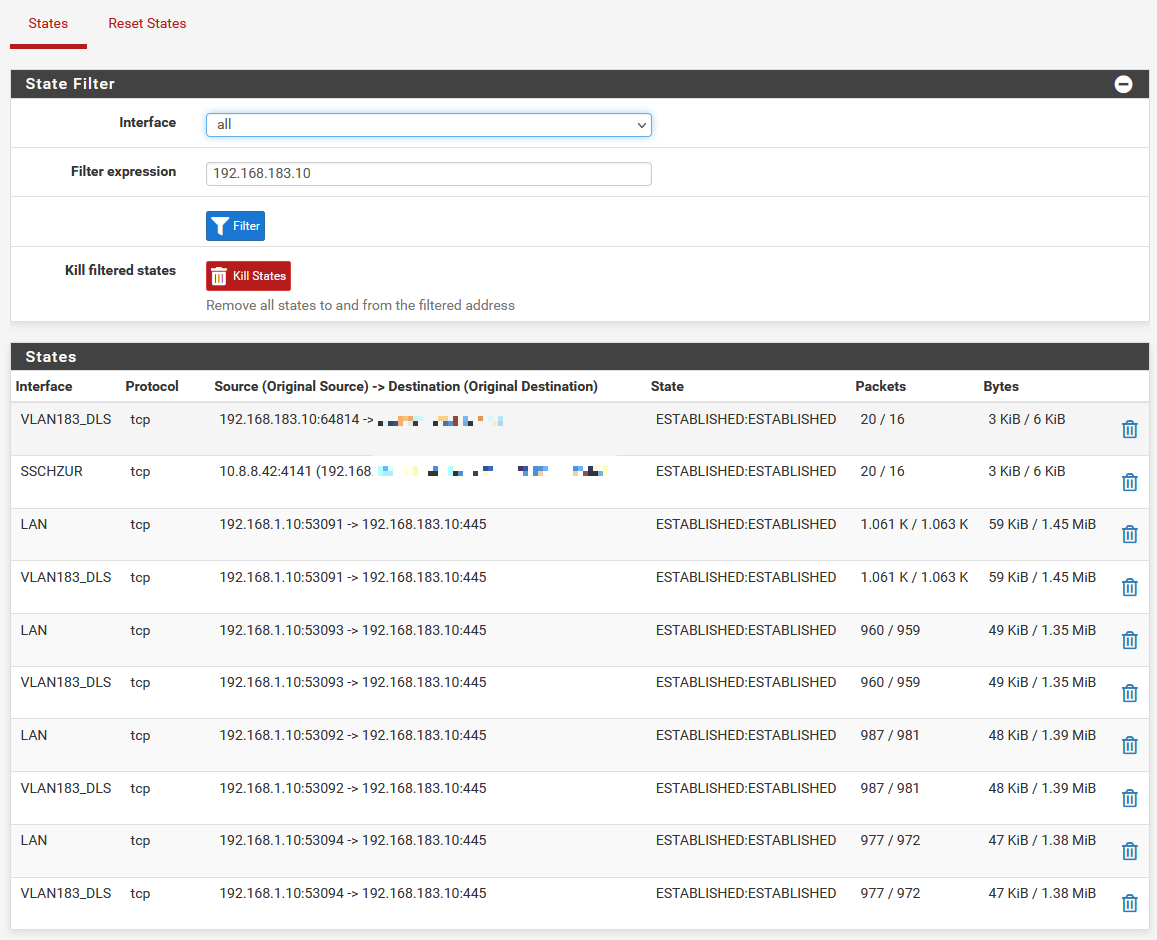

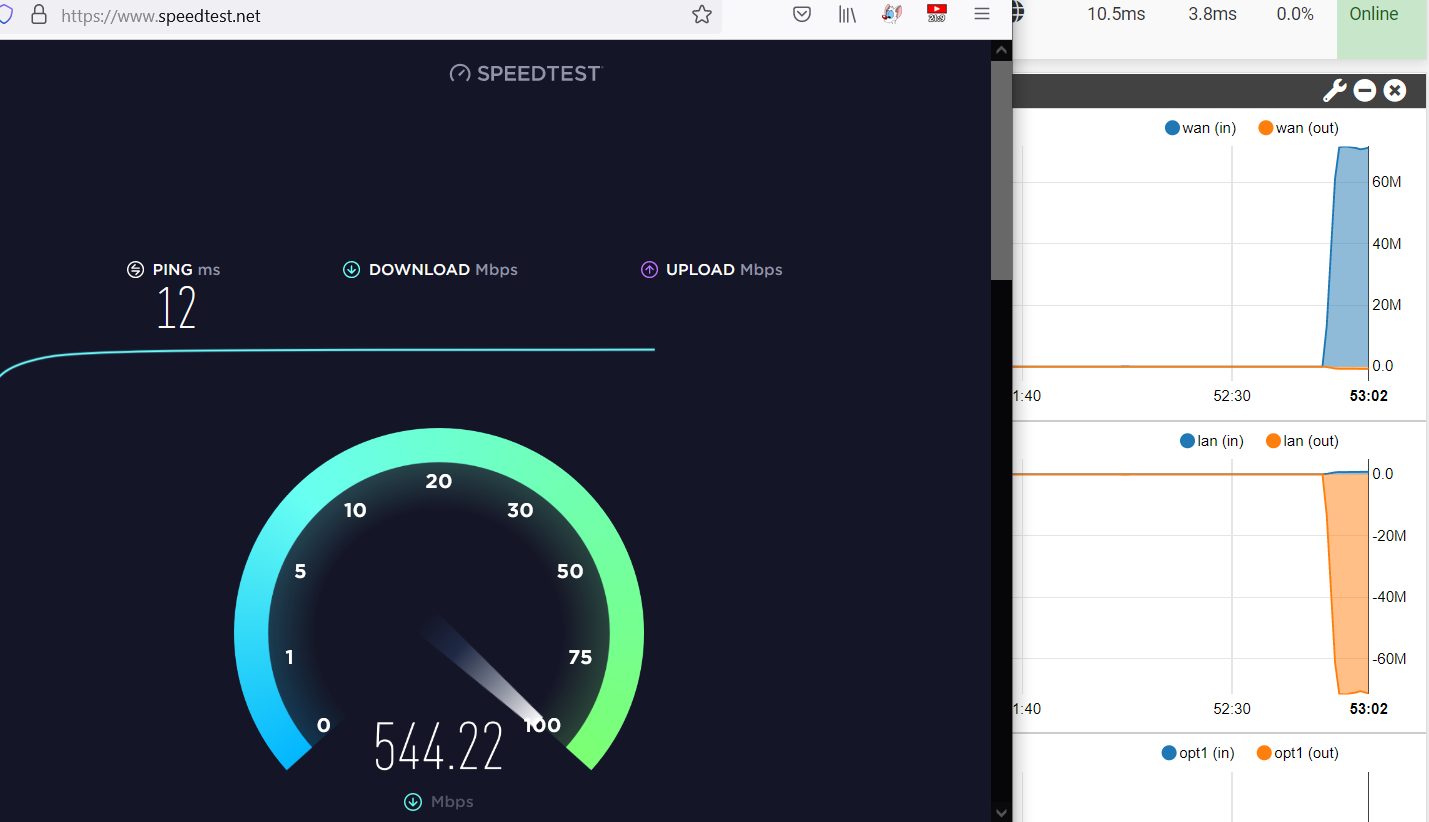

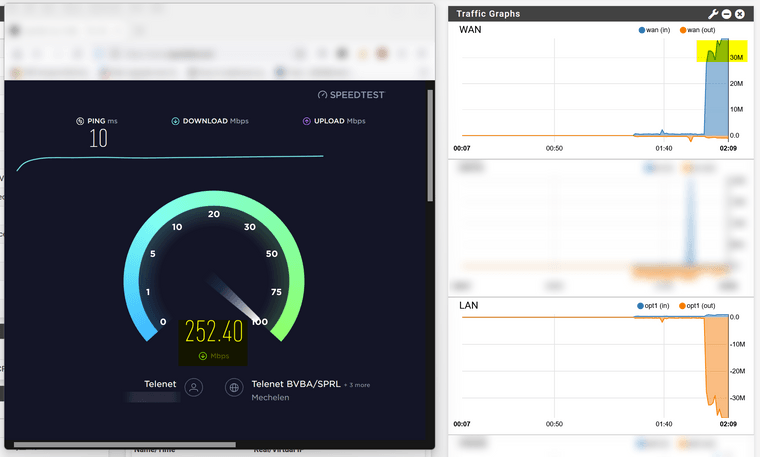

@donzalmrol If you don't mind a quick query, as yours is OK now: do you get a similar representation of throughput in pfSense compared to the speed test?

I get the below which is way different. Trying to see if I have another issue or if others have the same.

-

Disabling RSC did nothing for my environment. Inter-VLAN rates are still a fraction of what they were. Between machines on the same VLAN a file copy takes 3 seconds; between VLANs via the pfSense this jumps to 45-90 minutes.

-

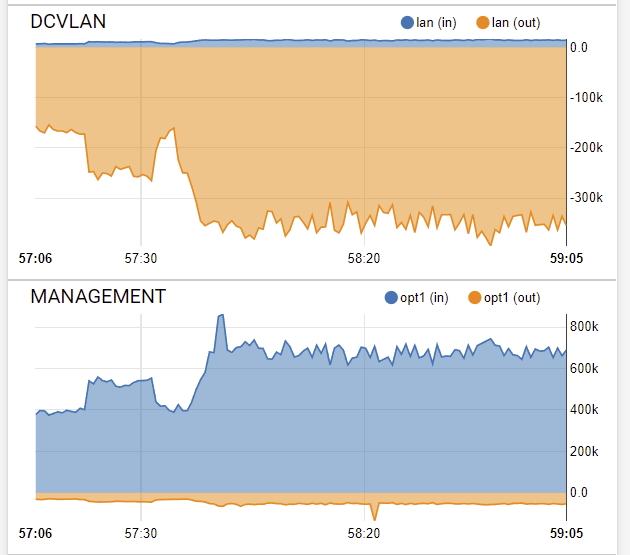

@paulprior This is a file copy in action between VLANs. These are 10 Gb/s virtual adapters!

-

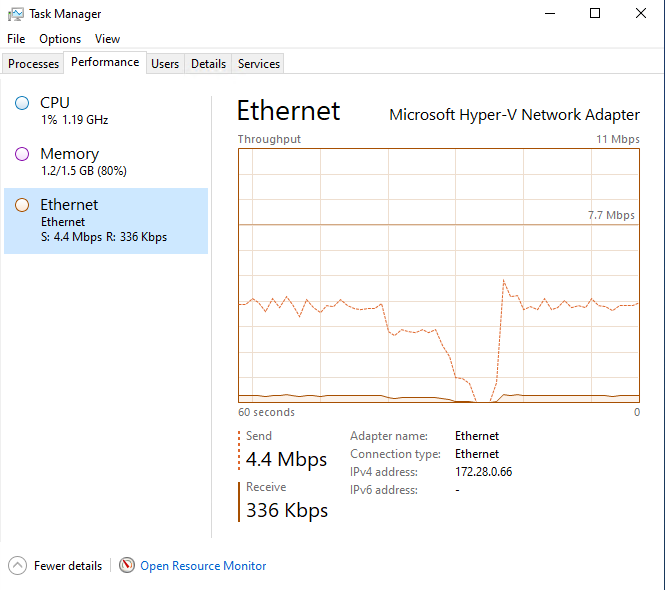

@paulprior From Windows:

-

Maybe they are different problems, I for myself had no problem with my WAN speed from the beginning.

-

So, disabling RSC has restored the network speed between VMs behind the pfSense and the internet (HTTPS download speeds), but the inter-vlan SMB file copy speeds are awful. Not quite dial-up modem speeds but almost.

-

Neither of you using hardware pass-through?

You both have VLANs on hn NICs directly?

I could definitely believe it was some hardware VLAN off-load issue. What do you see in:

sysctl hw.hn

Steve

-

@stephenw10 said in After Upgrade inter (V)LAN communication is very slow on Hyper-V, for others WAN Speed is affected:

sysctl hw.hn

hw.hn.vf_xpnt_attwait: 2

hw.hn.vf_xpnt_accbpf: 0

hw.hn.vf_transparent: 1

hw.hn.vfmap:

hw.hn.vflist:

hw.hn.tx_agg_pkts: -1

hw.hn.tx_agg_size: -1

hw.hn.lro_mbufq_depth: 0

hw.hn.tx_swq_depth: 0

hw.hn.tx_ring_cnt: 0

hw.hn.chan_cnt: 0

hw.hn.use_if_start: 0

hw.hn.use_txdesc_bufring: 1

hw.hn.tx_taskq_mode: 0

hw.hn.tx_taskq_cnt: 1

hw.hn.lro_entry_count: 128

hw.hn.direct_tx_size: 128

hw.hn.tx_chimney_size: 0

hw.hn.tso_maxlen: 65535

hw.hn.udpcs_fixup_mtu: 1420

hw.hn.udpcs_fixup: 0

hw.hn.enable_udp6cs: 1

hw.hn.enable_udp4cs: 1

hw.hn.trust_hostip: 1

hw.hn.trust_hostudp: 1

hw.hn.trust_hosttcp: 1

It looks the same on both "machines".
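Comparing two `sysctl hw.hn` dumps by eye is easy to get wrong, so here is a minimal sketch of how the comparison could be scripted. The helper names `parse_sysctl` and `diff_sysctl` are made up for illustration, and the sample values are a three-line excerpt of the two dumps posted in this thread, not complete output.

```python
def parse_sysctl(text):
    """Parse 'name: value' lines from sysctl output into a dict."""
    values = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if sep:  # skip lines that do not contain a colon
            values[name.strip()] = value.strip()
    return values


def diff_sysctl(a_text, b_text):
    """Return {name: (value_a, value_b)} for every setting that differs."""
    a, b = parse_sysctl(a_text), parse_sysctl(b_text)
    return {
        name: (a.get(name), b.get(name))
        for name in sorted(set(a) | set(b))
        if a.get(name) != b.get(name)
    }


# Excerpts only (assumption: the remaining lines are identical).
dump_a = """hw.hn.vf_transparent: 1
hw.hn.use_if_start: 0
hw.hn.tso_maxlen: 65535"""

dump_b = """hw.hn.vf_transparent: 0
hw.hn.use_if_start: 1
hw.hn.tso_maxlen: 65535"""

if __name__ == "__main__":
    for name, (left, right) in diff_sysctl(dump_a, dump_b).items():
        print(f"{name}: {left} -> {right}")
```

Saving the output of `sysctl hw.hn` from each VM to a file and feeding both files to `diff_sysctl` would list only the tunables that differ.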

-

@stephenw10 I moved the Windows machine to a new vNIC and vSwitch, this time without a VLAN. The problem persists, so it does not seem to be VLAN-related.

-

There are two loader variables we set in Azure that you don't have:

hw.hn.vf_transparent="0"

hw.hn.use_if_start="1"

I have no particular insight into what those do though. And that didn't change in 2.6.

How is your traffic between internal interfaces different to via your WAN in the new setup?

Steve

-

@stephenw10 There is no difference at all.

For the last two hours I tried to test with iperf between the hosts, with the old and new pfsense, and I couldn't measure any differences... so it might be SMB specific?

I only see one other person having the same problem.

It wouldn't be the first time I had to install pfSense fresh from the get-go after a new version. Whatever my use case is, it might be special...

So I guess "This is the Way".

-

Finally had to revert back to v2.5.2, the performance is just too poor on 2.6.0 to cope with. I'll have another shot at testing 2.6.0 at the weekend.

Lesson learned on my part here; always take a checkpoint before upgrading the firmware.

On the plus side, 2.5.2 is blisteringly fast!

-

@bob-dig

Sorry to derail your topic, but I am searching Google too (maybe it's a NAT issue with Hyper-V).

Here are some links with info that might be helpful:

https://superuser.com/questions/1266248/hyper-v-external-network-switch-kills-my-hosts-network-performance

https://anandthearchitect.com/2018/01/06/windows-10-how-to-setup-nat-network-for-hyper-v-guests/

Dom

-

@stephenw10 said in After Upgrade inter (V)LAN communication is very slow (on Hyper-V).:

Neither of you using hardware pass-through?

You both have VLANs on hn NICs directly?

I could definitely believe it was some hardware VLAN off-load issue. What do you see in:

sysctl hw.hn

Steve

-

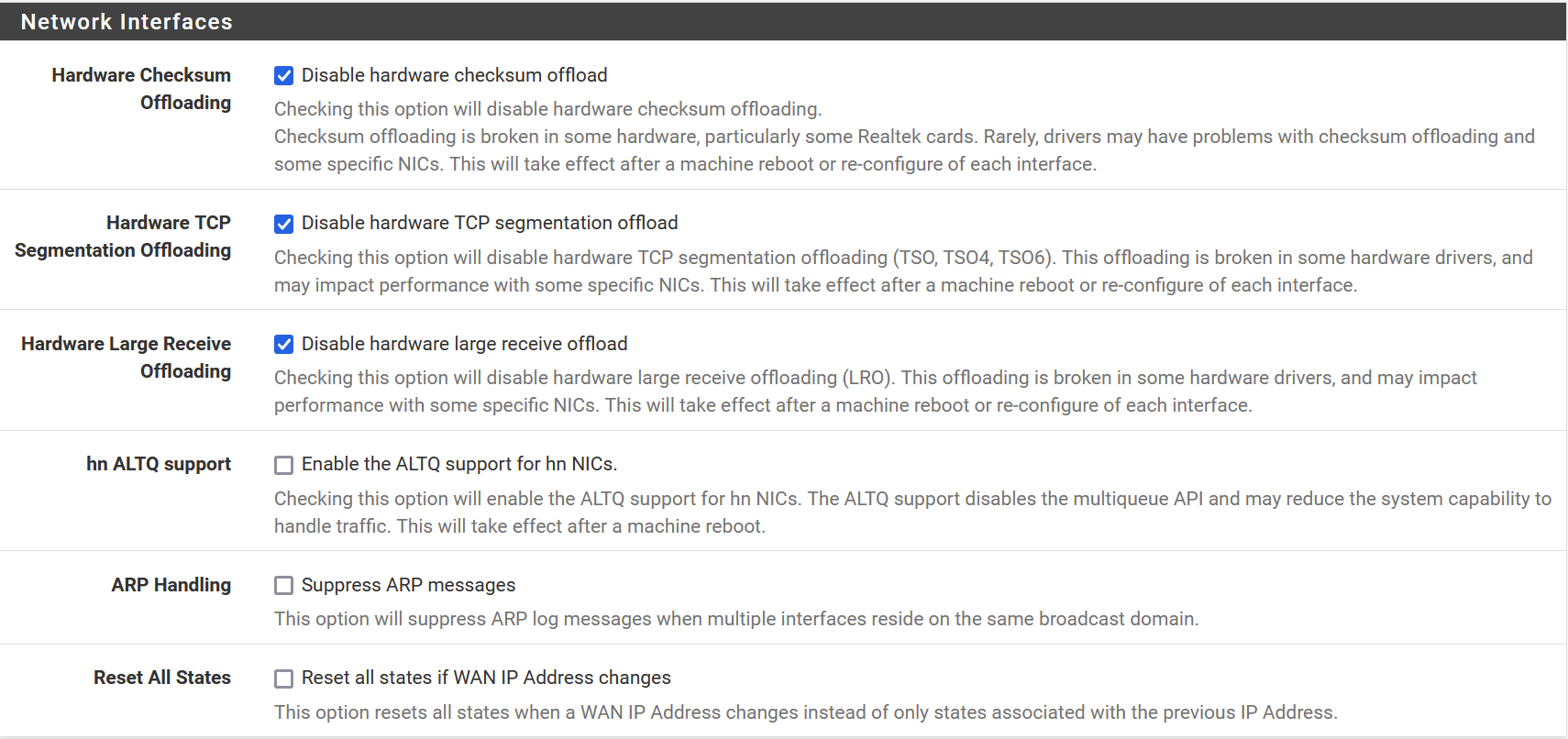

No, disabled in PFSense:

Enabling/ disabling ALTQ seems to have no measurable impact at this moment.

-

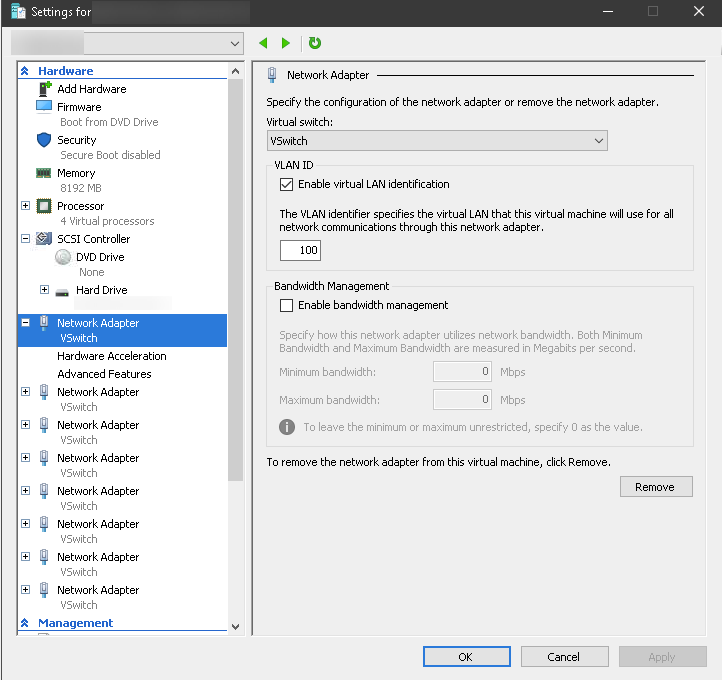

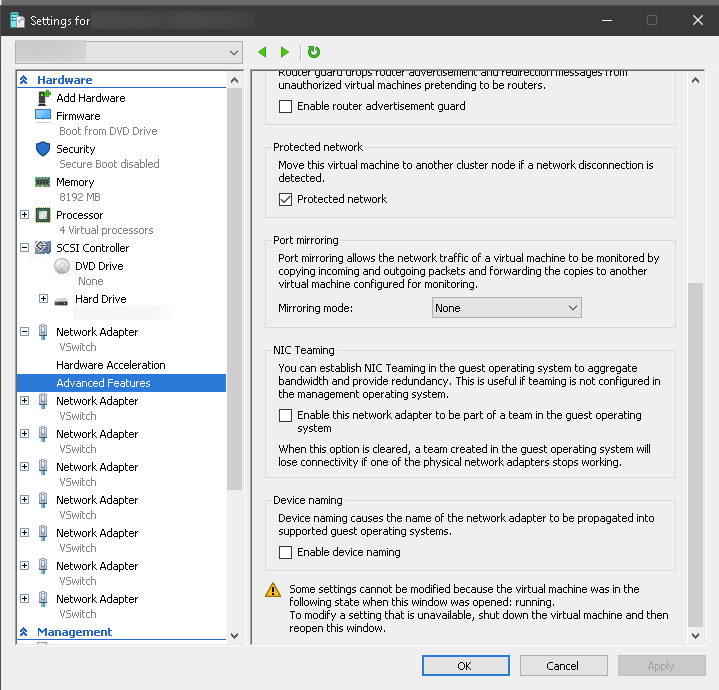

No, on Hyper-V it's a direct virtual hardware adapter with a VLAN assigned to it, so for PFSense the interface is just an interface. I do not use any VLANs in PFSense. This is repeated for 8 interfaces.

-

sysctl hw.hn output:

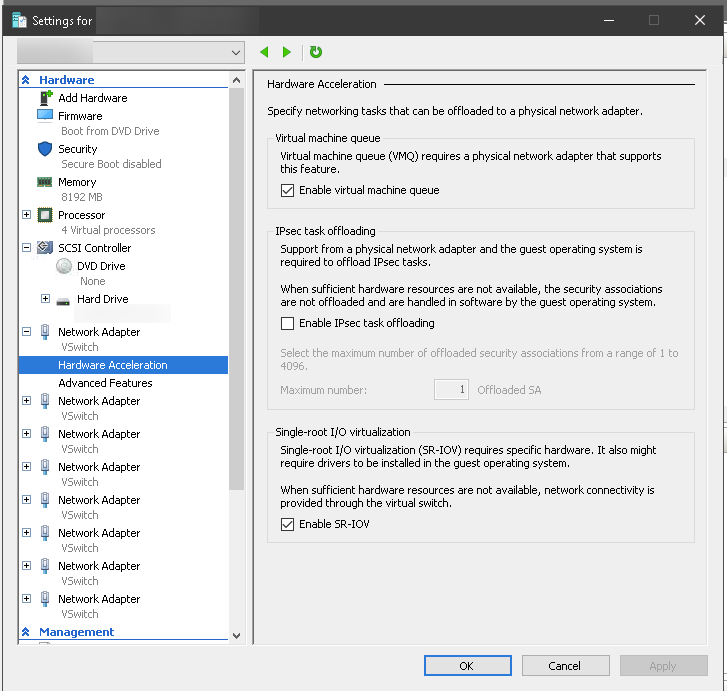

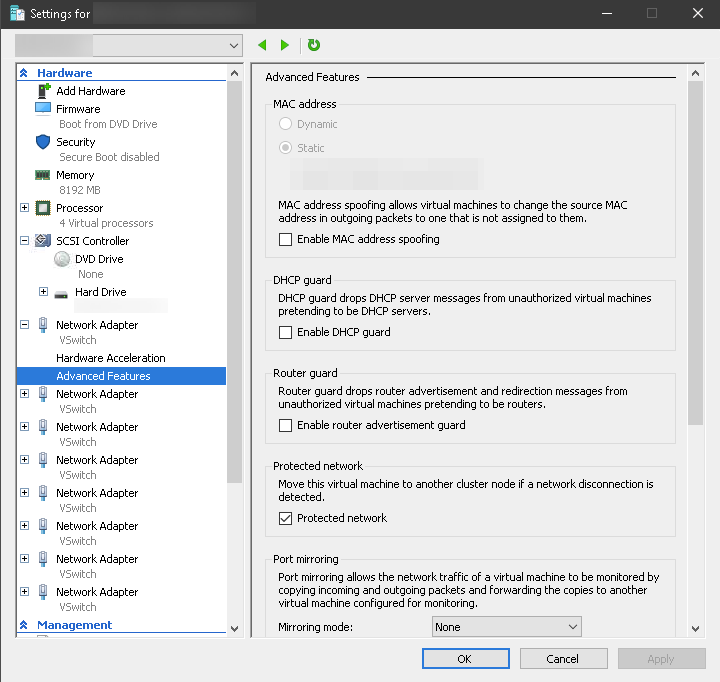

hw.hn.vf_xpnt_attwait: 2

hw.hn.vf_xpnt_accbpf: 0

hw.hn.vf_transparent: 0

hw.hn.vfmap:

hw.hn.vflist:

hw.hn.tx_agg_pkts: -1

hw.hn.tx_agg_size: -1

hw.hn.lro_mbufq_depth: 0

hw.hn.tx_swq_depth: 0

hw.hn.tx_ring_cnt: 0

hw.hn.chan_cnt: 0

hw.hn.use_if_start: 1

hw.hn.use_txdesc_bufring: 1

hw.hn.tx_taskq_mode: 0

hw.hn.tx_taskq_cnt: 1

hw.hn.lro_entry_count: 128

hw.hn.direct_tx_size: 128

hw.hn.tx_chimney_size: 0

hw.hn.tso_maxlen: 65535

hw.hn.udpcs_fixup_mtu: 1420

hw.hn.udpcs_fixup: 0

hw.hn.enable_udp6cs: 1

hw.hn.enable_udp4cs: 1

hw.hn.trust_hostip: 1

hw.hn.trust_hostudp: 1

hw.hn.trust_hosttcp: 1

Some images on how the PFSense guest is set up:

NW Adapter

HW Acceleration

Advanced features 1/2

Advanced features 2/2

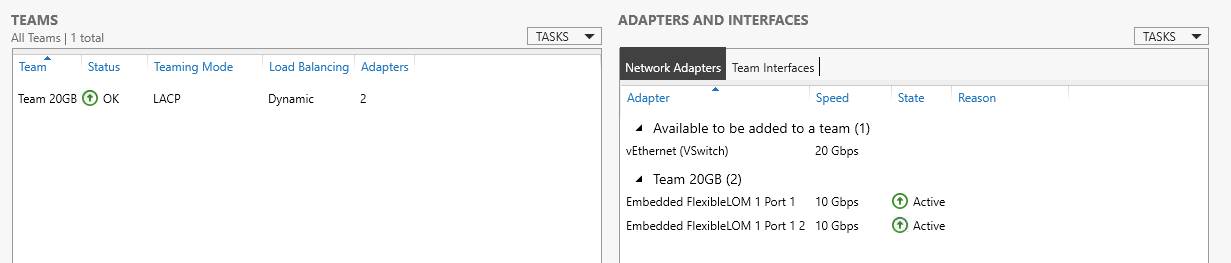

My server's physical NW adapter is teamed in LACP:

I'm using an HPE 10G 2-Port 546FLR-SFP+ card (FLR -> FlexibleLOM (LAN on Motherboard) Rack), which uses a Mellanox ConnectX-3 Pro controller that is supported by FreeBSD.

Datasheet: https://www.hpe.com/psnow/doc/c04543737.pdf?jumpid=in_lit-psnow-getpdf

@RMH-0 It matches my speedtest. The test is in Mbps (megabits/s) while PFSense is in MBps (megabytes/s). This is my speedtest output:
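For anyone comparing the two readings, the unit difference is a factor of 8. A minimal sketch of the conversion follows, assuming the pfSense graph really is in megabytes/s as described above. The 940 Mbps figure is a hypothetical example, not a measurement from this thread.

```python
BITS_PER_BYTE = 8


def mbps_to_mbytes_per_s(megabits_per_second):
    """Convert megabits/s (speed test style) to megabytes/s."""
    return megabits_per_second / BITS_PER_BYTE


def mbytes_per_s_to_mbps(megabytes_per_second):
    """Convert megabytes/s back to megabits/s."""
    return megabytes_per_second * BITS_PER_BYTE


if __name__ == "__main__":
    speedtest_mbps = 940.0  # hypothetical speed test result
    print(f"{speedtest_mbps} Mbps = {mbps_to_mbytes_per_s(speedtest_mbps)} MBps")
```

So a graph that reads roughly one eighth of the speed test figure is showing the same throughput.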

-

@stephenw10 I disabled all of the hardware offloading (and tried many combinations of partially on and off). The only setting that increased speed was disabling ALTQ support, which doubled the throughput, but since it had already become about 10-20 times slower, a doubling wasn't great.

All of my adapters are Hyper-V virtual adapters except for the one on the WAN interface, which is bound to a physical Intel adapter.

Back on v2.5.2 now and inter-vlan performance is an order of magnitude better.

-

@paulprior Glad to hear that. I'm going to roll back my other site (B) and keep this one on 2.6.0 for further troubleshooting.

-

@dominixise said in After Upgrade inter (V)LAN communication is very slow (on Hyper-V).:

Here are some links with info that might be helpful:

https://superuser.com/questions/1266248/hyper-v-external-network-switch-kills-my-hosts-network-performance

I already tried using just private vSwitches, nothing changed.

@stephenw10 said in After Upgrade inter (V)LAN communication is very slow (on Hyper-V).:

There are two loader variables we set in Azure that you don't have:

I added those to "System > Advanced > System Tunables" and did a reboot, but it didn't change anything.

I did some more iperfing, this time also the other way around, so I changed client and server and there it shows:

    C:\>iperf2.exe -c 192.168.1.10 -p 4711 -t 60 -i 10
    ------------------------------------------------------------
    Client connecting to 192.168.1.10, TCP port 4711
    TCP window size: 64.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.183.10 port 55124 connected with 192.168.1.10 port 4711
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.00-10.00 sec 1.38 MBytes 1.15 Mbits/sec
    [ 1] 10.00-20.00 sec 128 KBytes 105 Kbits/sec
    [ 1] 20.00-30.00 sec 256 KBytes 210 Kbits/sec
    [ 1] 30.00-40.00 sec 256 KBytes 210 Kbits/sec
    [ 1] 40.00-50.00 sec 128 KBytes 105 Kbits/sec
    [ 1] 50.00-60.00 sec 256 KBytes 210 Kbits/sec
    [ 1] 0.00-123.86 sec 2.38 MBytes 161 Kbits/sec

    C:\>iperf2.exe -c 192.168.183.10 -p 4711 -t 60 -i 10
    ------------------------------------------------------------
    Client connecting to 192.168.183.10, TCP port 4711
    TCP window size: 64.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.1.10 port 56363 connected with 192.168.183.10 port 4711
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.00-10.00 sec 6.29 GBytes 5.41 Gbits/sec
    [ 1] 10.00-20.00 sec 6.28 GBytes 5.40 Gbits/sec
    [ 1] 20.00-30.00 sec 6.94 GBytes 5.97 Gbits/sec
    [ 1] 30.00-40.00 sec 6.81 GBytes 5.85 Gbits/sec
    [ 1] 40.00-50.00 sec 6.99 GBytes 6.01 Gbits/sec
    [ 1] 50.00-60.00 sec 6.94 GBytes 5.96 Gbits/sec
    [ 1] 0.00-60.00 sec 40.3 GBytes 5.77 Gbits/sec

Only TCP is affected, and it only shows when "my" machine is the server, not the other way around. It is not SMB-specific, and I already mentioned that connecting to a SOCKS proxy in another VLAN also causes these problems.
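To put a number on the difference between the two directions, the summary lines can be pulled out with a small script. This is only a sketch: `summary_rate` is a made-up helper, the regular expression assumes the iperf2 client output format shown in this thread, and the sample strings are the last two lines of each run above.

```python
import re

# Multipliers for the bandwidth units iperf2 prints.
UNIT = {"Kbits/sec": 1e3, "Mbits/sec": 1e6, "Gbits/sec": 1e9}

# Matches lines such as "[ 1] 0.00-60.00 sec 40.3 GBytes 5.77 Gbits/sec".
LINE = re.compile(
    r"\[\s*\d+\]\s+[\d.]+-[\d.]+\s+sec\s+[\d.]+\s+\w+\s+([\d.]+)\s+([KMG]bits/sec)"
)


def summary_rate(output):
    """Return the bandwidth in bits/s from the last report line of a run."""
    matches = LINE.findall(output)
    if not matches:
        raise ValueError("no iperf2 report lines found")
    value, unit = matches[-1]  # iperf2 prints the whole-run summary last
    return float(value) * UNIT[unit]


slow_run = """[ 1] 50.00-60.00 sec 256 KBytes 210 Kbits/sec
[ 1] 0.00-123.86 sec 2.38 MBytes 161 Kbits/sec"""

fast_run = """[ 1] 50.00-60.00 sec 6.94 GBytes 5.96 Gbits/sec
[ 1] 0.00-60.00 sec 40.3 GBytes 5.77 Gbits/sec"""

if __name__ == "__main__":
    ratio = summary_rate(fast_run) / summary_rate(slow_run)
    print(f"fast direction is about {ratio:,.0f}x the slow direction")
```

With the figures above, the two directions differ by more than four orders of magnitude, which points to something breaking TCP outright rather than a modest per-packet overhead.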

-

I made yet another test, just private switches between two VMs and the pfSense VM, no external, no physical switch involved and no VLAN.

Again TCP is problematic, this time in both directions. One UDP bench for reference.

    C:\>iperf2.exe -c 192.168.45.100 -p 4711 -t 60 -i 10
    ------------------------------------------------------------
    Client connecting to 192.168.45.100, TCP port 4711
    TCP window size: 64.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.44.100 port 52446 connected with 192.168.45.100 port 4711
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.00-10.00 sec 384 KBytes 315 Kbits/sec
    [ 1] 10.00-20.00 sec 256 KBytes 210 Kbits/sec
    [ 1] 20.00-30.00 sec 128 KBytes 105 Kbits/sec
    [ 1] 30.00-40.00 sec 256 KBytes 210 Kbits/sec
    [ 1] 40.00-50.00 sec 256 KBytes 210 Kbits/sec
    [ 1] 50.00-60.00 sec 128 KBytes 105 Kbits/sec
    [ 1] 0.00-121.14 sec 1.38 MBytes 95.2 Kbits/sec

    C:\>iperf2.exe -c 192.168.44.100 -p 4711 -t 60 -i 10
    ------------------------------------------------------------
    Client connecting to 192.168.44.100, TCP port 4711
    TCP window size: 64.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.45.100 port 55314 connected with 192.168.44.100 port 4711
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.00-10.00 sec 4.00 MBytes 3.36 Mbits/sec
    [ 1] 10.00-20.00 sec 3.88 MBytes 3.25 Mbits/sec
    [ 1] 20.00-30.00 sec 3.88 MBytes 3.25 Mbits/sec
    [ 1] 30.00-40.00 sec 3.88 MBytes 3.25 Mbits/sec
    [ 1] 40.00-50.00 sec 3.88 MBytes 3.25 Mbits/sec
    [ 1] 50.00-60.00 sec 3.88 MBytes 3.25 Mbits/sec
    [ 1] 0.00-61.97 sec 23.5 MBytes 3.18 Mbits/sec

    C:\>iperf2.exe -c 192.168.44.100 -p 4712 -u -t 60 -i 10 -b 10000M
    ------------------------------------------------------------
    Client connecting to 192.168.44.100, UDP port 4712
    Sending 1470 byte datagrams, IPG target: 1.12 us (kalman adjust)
    UDP buffer size: 64.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.45.100 port 62027 connected with 192.168.44.100 port 4712
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.00-10.00 sec 3.61 GBytes 3.10 Gbits/sec
    [ 1] 10.00-20.00 sec 3.63 GBytes 3.12 Gbits/sec
    [ 1] 20.00-30.00 sec 3.67 GBytes 3.15 Gbits/sec
    [ 1] 30.00-40.00 sec 3.63 GBytes 3.12 Gbits/sec
    [ 1] 40.00-50.00 sec 3.67 GBytes 3.15 Gbits/sec
    [ 1] 50.00-60.00 sec 3.70 GBytes 3.18 Gbits/sec
    [ 1] 0.00-60.00 sec 21.9 GBytes 3.14 Gbits/sec
    [ 1] Sent 16009349 datagrams
    [ 1] Server Report:
    [ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams
    [ 1] 0.00-60.00 sec 21.6 GBytes 3.09 Gbits/sec 0.915 ms 254222/16009348 (1.6%)

I am kinda done.

-

@bob-dig how is your Hyper-V guest set up? Similar to mine? Perhaps you need to disable VMQ and SR-IOV and test again?

-

@donzalmrol Already did this, didn't help.