J1900 quadcore low power PC not quite making 1Gbps throughput with NAT

-

Low-power PC (J1900 Qotom PC), commonly sold for use with pfSense.

The CPU is a small 10W quad-core from the end of 2013, widely sold and popular for this purpose.

Been using these for a few years.

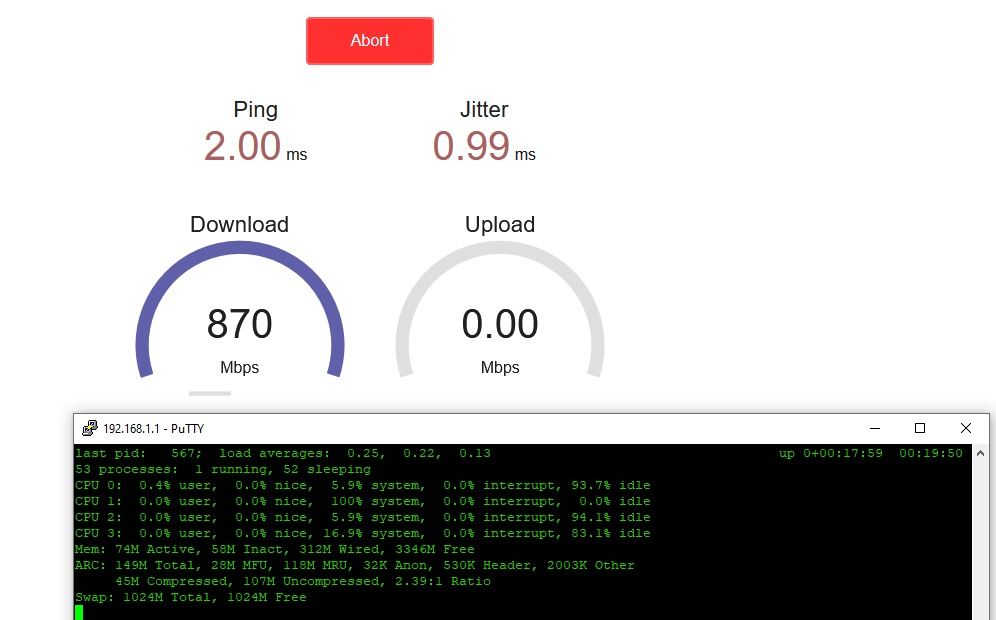

This is my first 1Gbps throughput test behind pfSense and NAT on one of these PCs.

I am using the LibreSpeed speed test (HTTP/browser based) to run my "standard" 1Gbps throughput test that I have been using for about 5 years.

On other hardware I always get the full 1Gbps in both directions (upload/download) to the test server.

The test server is on the WAN connected to the pfSense system under test.

The test client is a browser on the LAN connected to the pfSense system under test.

Very much to my surprise, these J1900 firewall boxes are not quite making it on the 1Gbps speed test.

The download test comes out to around 890 Mbps every time, and upload is always 1000 Mbps.

I am getting the full 1Gbps outbound but not inbound.

I am also noticing that during the test ONE CPU core gets maxed out to 100% during the suffering download test.

Not sure if a single TCP stream should be shared among cores or not, but it looks like this might be the bottleneck and why it is falling short of 1 Gbps.

For now we just have to go with a more powerful firewall box.

Popping in a small gen7 i5 instead.

But I never would have guessed the little J1900 workhorse would not handle 1Gbps.

It's a pretty good quality box, with Intel NICs.

I'm also wanting to learn more about the one single CPU core getting maxed out during the test!

-

More for entertainment than anything.

-

@N8LBV

If you are testing through the WAN interface, you should expect the total throughput to be reduced by:

- Firewall rules

- NAT process (a later stage inside the packet filter, pf)

- DPI like Snort or Suricata, which will narrow it down once more!
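Separately from the processing stages listed above, protocol framing alone keeps a TCP speed test below the 1000 Mbit/s line rate. A rough sketch of that arithmetic, assuming a standard 1500-byte MTU, IPv4, and no VLAN tag (none of which is stated in the thread), and with constant and function names that are purely illustrative:

```python
# Rough upper bound on TCP goodput over gigabit Ethernet.
# Assumptions (not from the thread): 1500-byte MTU, IPv4, no VLAN tag.

LINE_RATE_MBPS = 1000
MTU = 1500                        # IP packet size carried in one frame
ETH_OVERHEAD = 8 + 14 + 4 + 12    # preamble+SFD, header, FCS, inter-frame gap
IP_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12               # timestamp option incl. padding, if enabled


def max_goodput_mbps(tcp_options: int = 0) -> float:
    """Best-case TCP payload rate for full-size frames at line rate."""
    payload = MTU - IP_HEADER - TCP_HEADER - tcp_options
    on_wire = MTU + ETH_OVERHEAD  # bytes of wire time used per frame
    return LINE_RATE_MBPS * payload / on_wire


if __name__ == "__main__":
    print(round(max_goodput_mbps(), 1))                # 949.3
    print(round(max_goodput_mbps(TCP_TIMESTAMPS), 1))  # 941.5
```

So even a perfect router cannot deliver much more than roughly 940 to 950 Mbit/s of TCP payload on a gigabit link. A speed test that reports a full 1000 is probably adding its own overhead correction.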

870 - 890 plus the overhead on top of this means, for me personally, that you are in the range of ~900+ Mbit/s, and on low-cost hardware this is awesome.

-

@n8lbv said in J1900 quadcore low power PC not quite making 1Gbps throughput with NAT:

Not sure if a single TCP stream should be shared among cores or not, but it looks like this might be the bottleneck and why it is falling short of 1 Gbps.

If it's a PPPoE connection, it is sadly limited to a single thread. So there is no usage of multiple cores at all.

And one of your CPU cores already shows 100% load.

-

@dobby_ Not sure how to follow this but I understand those stages consume CPU.

I am testing with pretty much out of the box settings and default NAT.

No other features have been turned on.

If you are suggesting that I can turn anything off that is on by default to speed it up I would like to know more mainly out of curiosity.

Other more powerful boxes I have tested make the mark and hit 1Gbps.

The J1900s are falling short by 100-120 Mbps.

And it looks like one single CPU core is maxing out at 100% when I run the single HTTP stream test that I have been using.

-

@viragomann Not testing PPPoE. Testing gigabit Ethernet. "Factory" out-of-box setup, single WAN+LAN NAT.

The test client web browser speed test is on the LAN.

The HTTP speed test server is connected on the WAN network.

Been using the same test for ~5 years for very basic router throughput tests.

It works well.

-

Yes, the load spreading across the cores there is very bad.

What NICs are those exactly? If they are igb I would expect at least 2 queues.

Unless it's a PPPoE WAN as mentioned. If it is then try this:

https://docs.netgate.com/pfsense/en/latest/hardware/tune.html#pppoe-with-multi-queue-nics

Steve

-

If you are suggesting that I can turn anything off that is on by default to speed it up I would like to know more mainly out of curiosity.

pfSense Hardware Requirements and Guidance:

501+ Mbps: Multiple cores at > 2.0GHz are required. Server class hardware with PCI-e network adapters.

The J1900 are falling short by 100-120mbps

I would have a look at the link given by @stephenw10 and then read about the main points there, but normally you have to try things out several times to match your criteria, as said before.

- mbuf size

- mbuf cluster

- amount of queues

- state table size

Those would be my things to try. Deactivating some things like MSI-X, flow control and/or other things might also help; it can be based on one or more things, who knows.

-

Those hardware specs badly need updating. They probably date back to the Pentium 4!

The NICs used here are key. If they are igb, I expect to see at least 2 queues per NIC, so the load spreads across multiple CPU cores if it's working correctly. If they are em NICs, they are single queue and you are limited by the individual core speed.
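On the question of whether a single TCP stream gets shared among cores: multi-queue NICs generally pick a receive queue by hashing the flow's addresses and ports, so all packets of one stream land on the same queue and therefore the same core. A simplified sketch of that idea follows. The CRC32 hash and the names `pick_queue` and `queues_used` are stand-ins chosen for illustration, not what the igb driver actually does, and the addresses are made up:

```python
import zlib


def pick_queue(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
               num_queues: int) -> int:
    """Map a flow's 4-tuple to a receive queue.

    Illustrative stand-in hash (CRC32); real NICs use their own hash function.
    """
    key = f"{src_ip}:{src_port}>{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % num_queues


def queues_used(flows, num_queues: int) -> set:
    """Return the set of queues that a list of flows ends up on."""
    return {pick_queue(*flow, num_queues) for flow in flows}


if __name__ == "__main__":
    # 1000 packets of the same HTTP download: identical 4-tuple every time.
    one_stream = [("203.0.113.5", 80, "192.168.1.10", 50000)] * 1000
    # Only one queue is ever used, no matter how many queues exist.
    print(len(queues_used(one_stream, num_queues=2)))
    # With a single-queue NIC, everything lands on queue 0 anyway.
    print(queues_used(one_stream, num_queues=1))
```

Under that assumption, a single-stream browser test can load at most one queue per NIC even on multi-queue hardware. A test using several parallel connections would be a fairer check of how well the load spreads.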

Steve