Wireguard poor throughput.

-

@stephenw10 Need your expertise again. You helped me figure out poor throughput on an IPsec VPN I had a few months ago. The solution to that was to enable NAT-T, the theory being that the ISPs were somehow throttling VPN traffic.

Today I have a similar issue, but this time with the Wireguard protocol: performance is poor. I should be able to pull around 100Mbps on an iPerf speed test, but the best I can do is 50Mbps. I have set the interface MSS on the pfSense side down to 1300, but that doesn't seem to have helped much. This is a site-to-site tunnel on the same hardware as the earlier IPsec tunnel. The only difference now is the protocol.

-

So route and endpoints are the same? And you could pull 100Mbps over IPSec across the same route?

Are you using the standard Wireguard port?

Steve

-

@stephenw10 No, 51821 and 51822 are being used.

51820 is used for road warrior clients. Funnily enough, connecting road warrior clients and having them do speed tests (full tunnel) shows excellent throughput, so I narrowed it down to a site-to-site issue specifically.

-

To/from one end specifically?

Connections from road warrior clients almost certainly don't use the same route. Unless you happen to be testing from the other site.

But, again, is this replacing the site-to-site IPSec tunnel? And you were able to see 100Mbps across that?

If not then the first thing to do is run a test outside the tunnel to see if there is some general restriction in the route.

Steve

-

@stephenw10

Hey Stephen. To your points: the road warrior test for each site was to ensure that I'm getting good throughput from the protocol itself, ruling out a configuration issue.

- This is replacing a S2S running IPsec where I was getting around 110-120Mbps.

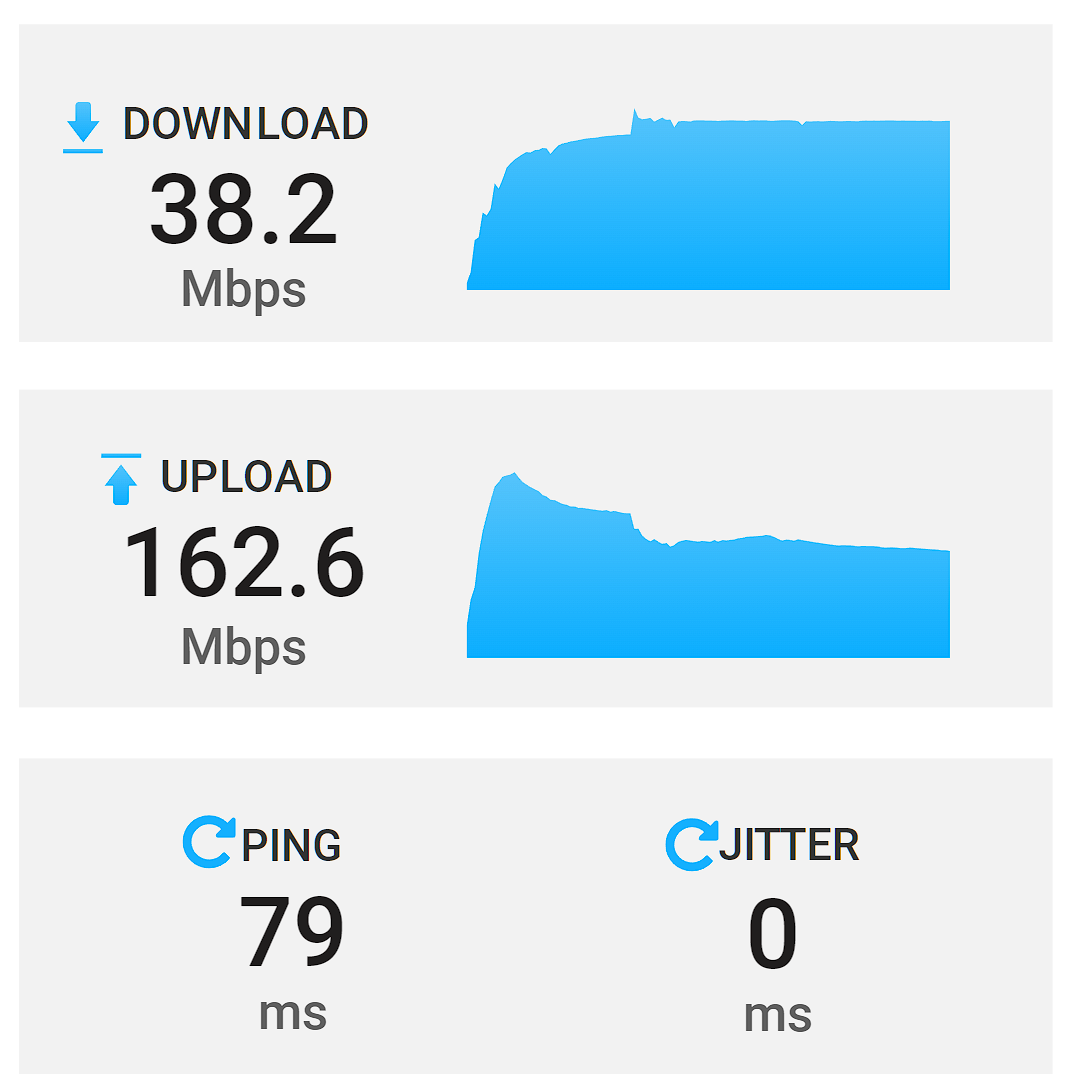

- As a test bypassing the Wireguard tunnel, I set up HAProxy on one end of the tunnel and hit my speedtest server over the internet, not through a tunnel. The results are way better than over Wireguard.

Keep in mind that the site with HAProxy has a 200/35 bandwidth profile.

-

Hmm, Ok so not something in the route affecting all traffic.

When you ran the road warrior tests was that to servers behind pfSense or up and back out to external servers?

Were you able to see >100Mbps to both sites like that?

What is the available bandwidth at the other site?

Steve

-

@stephenw10 said in Wireguard poor throughput.:

What is the available bandwidth at the other site?

Site 1 (HAProxy): Bandwidth is 200/35

Site 2 (where traffic is being initiated): 500/500

When running road warrior tests it was to a server behind the firewall. I see similar bandwidth to the screenshot above.

EDIT: To be clear, it's a road warrior test to the speedtest server, NOT through a proxy like Cloudflare. I wanted to go directly to the firewall and to the server.

-

Ok so you should be able to see >100M from site2 to site1 if latency allows it and IPSec throughput shows it does. I assume you were seeing at least that in the test outside the tunnel?
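To put a number on "if latency allows it": a single TCP stream can only move one window of data per round trip. The sketch below is a rough back-of-the-envelope calculation, not a measurement. It assumes the 33 ms latency reported elsewhere in this thread is a round-trip time, and the function names are made up for illustration.

```python
def window_bytes_needed(target_mbps: float, rtt_ms: float) -> float:
    """Bytes that must be in flight to sustain target_mbps at the given RTT."""
    return target_mbps * 1_000_000 / 8 * (rtt_ms / 1000)


def max_mbps_for_window(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single-stream TCP throughput for a fixed window size."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


if __name__ == "__main__":
    rtt_ms = 33.0  # assumption: the reported latency is a round-trip time
    # Window needed to fill 100 Mbps at that RTT (roughly 412 KB).
    print(round(window_bytes_needed(100, rtt_ms)))
    # Ceiling for an unscaled 64 KiB window at the same RTT (roughly 16 Mbps).
    print(round(max_mbps_for_window(65_535, rtt_ms), 1))
```

Since the IPsec tunnel reached 110-120Mbps over the same path, window scaling is evidently working there, so latency alone is unlikely to explain a 50Mbps ceiling.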

50Mbps sure looks like it could be packet fragmentation. Are you able to pcap for it to check?

There's really not that much you can tweak in Wireguard.

Steve

-

@stephenw10 Fragmentation is my thought as well, BUT I'm already setting the MSS to 1300 and I'm not sure what more I can do. Should I adjust the interface MTU as well?

Latency is unchanged at 33ms.

-

MSS only applies to TCP traffic so if the fragmentation is in the UDP Wireguard packets it might not help. Though if that was the case I might expect it to fail more spectacularly!

-

@stephenw10 MSS does apply to TCP connections, but I thought that for TCP conversations going through the Wireguard tunnel, the firewall will step in and say "nope, instead of 1460 let's do 1300" and signal that new MSS value to the other side.

The MTU value is for the total size of the frame before going through the tunnel (additional encap).

-

Exactly, it should. And that would reduce the size of the Wireguard packets accordingly, which you would expect to pass. But if you are passing any large UDP packets it would do nothing.
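As a rough check of that reasoning, the arithmetic can be sketched as below. The header sizes are the usual ones for a WireGuard data packet over UDP; the sketch assumes no IP options and ignores the few bytes of padding WireGuard may add to round the payload up to a 16-byte multiple. The function names are illustrative only.

```python
IPV4_HEADER = 20     # outer IPv4 header, assuming no options
IPV6_HEADER = 40     # outer IPv6 header
UDP_HEADER = 8
WG_DATA_HEADER = 16  # message type + reserved (4), receiver index (4), counter (8)
WG_AUTH_TAG = 16     # Poly1305 authentication tag
INNER_TCP_IP = 40    # inner IPv4 header + TCP header (options come out of the MSS)


def inner_packet_size(mss: int) -> int:
    """Largest inner IPv4/TCP packet for a given clamped MSS."""
    return mss + INNER_TCP_IP


def outer_packet_size(inner_len: int, outer_ipv6: bool = False) -> int:
    """Size of the encapsulated UDP packet on the wire (padding ignored)."""
    ip_header = IPV6_HEADER if outer_ipv6 else IPV4_HEADER
    return ip_header + UDP_HEADER + WG_DATA_HEADER + inner_len + WG_AUTH_TAG


if __name__ == "__main__":
    inner = inner_packet_size(1300)
    print(inner, outer_packet_size(inner))  # inner and outer sizes for MSS 1300
```

With an MSS of 1300 the outer packets come to about 1400 bytes over IPv4, comfortably under a 1500-byte path MTU, which is why clamped TCP traffic would be expected to pass unfragmented.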

It's worth running a packet capture to check. It's usually pretty obvious if it is fragmentation.

Steve

-

@stephenw10

Am I running a pcap on the Wireguard interface?

What should I look for to find fragmentation?

-

I would run it on the Wireguard interface to look at traffic inside the tunnel, and also on the interface Wireguard is running on to check for fragmented Wireguard traffic.

Packet fragments are shown like:

    16:06:11.757955 IP 172.21.16.206 > 172.21.16.226: ICMP echo request, id 64544, seq 2, length 1480
    16:06:11.757959 IP 172.21.16.206 > 172.21.16.226: ip-proto-1
    16:06:11.758319 IP 172.21.16.226 > 172.21.16.206: ICMP echo reply, id 64544, seq 2, length 1480
    16:06:11.758325 IP 172.21.16.226 > 172.21.16.206: ip-proto-1

That's a 2000B ping over a 1500B link.
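For anyone scanning a longer capture, here is a minimal sketch of the same idea. It assumes the capture was saved as plain tcpdump text at the default verbosity shown above, where non-first fragments appear as `ip-proto-N` lines; other verbosity levels print different markers. It also assumes "2000B" means 2000 bytes of ICMP data plus the 8-byte ICMP header. Both function names are hypothetical.

```python
import re

# Non-first fragments have no L4 header, so tcpdump prints only the protocol number.
FRAGMENT_LINE = re.compile(r"\bip-proto-\d+\s*$")


def fragment_payload_sizes(ip_payload_len: int, mtu: int, ip_header: int = 20) -> list[int]:
    """Split an IP payload into per-fragment payload sizes for a given MTU."""
    # Every fragment except the last must carry a multiple of 8 bytes.
    per_fragment = (mtu - ip_header) // 8 * 8
    sizes = []
    remaining = ip_payload_len
    while remaining > per_fragment:
        sizes.append(per_fragment)
        remaining -= per_fragment
    sizes.append(remaining)
    return sizes


def count_trailing_fragments(capture_text: str) -> int:
    """Count lines that look like non-first IP fragments."""
    return sum(1 for line in capture_text.splitlines() if FRAGMENT_LINE.search(line))


if __name__ == "__main__":
    sample = """\
16:06:11.757955 IP 172.21.16.206 > 172.21.16.226: ICMP echo request, id 64544, seq 2, length 1480
16:06:11.757959 IP 172.21.16.206 > 172.21.16.226: ip-proto-1
16:06:11.758319 IP 172.21.16.226 > 172.21.16.206: ICMP echo reply, id 64544, seq 2, length 1480
16:06:11.758325 IP 172.21.16.226 > 172.21.16.206: ip-proto-1
"""
    print(count_trailing_fragments(sample))
    print(fragment_payload_sizes(2000 + 8, 1500))
```

The first fragment carrying 1480 bytes matches the `length 1480` in the capture: 1500 bytes of MTU minus the 20-byte IP header.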

Steve

-

@stephenw10 Update on this. I ran an iPerf test between two machines on two different VLANs at the site with 500/500 and get excellent throughput, ~940Mbps.

At the site with 200/35 bandwidth, I ran an iPerf test between a server and the firewall and I'm getting ~455Mbps...

Not sure what has changed between IPsec and Wireguard, but the issue seems to be local to that site. Will have to investigate.

-

Not necessarily. Running iperf to or from the firewall directly will always be a worse result than through it. Usually that's because the single threaded nature of iperf can use all of one CPU core on the firewall.

What hardware is the firewall at that end?

Steve

-

@stephenw10

Intel(R) Celeron(R) CPU J3060 @ 1.60GHz (2 cores, 2 threads)

In theory that should be more than enough.

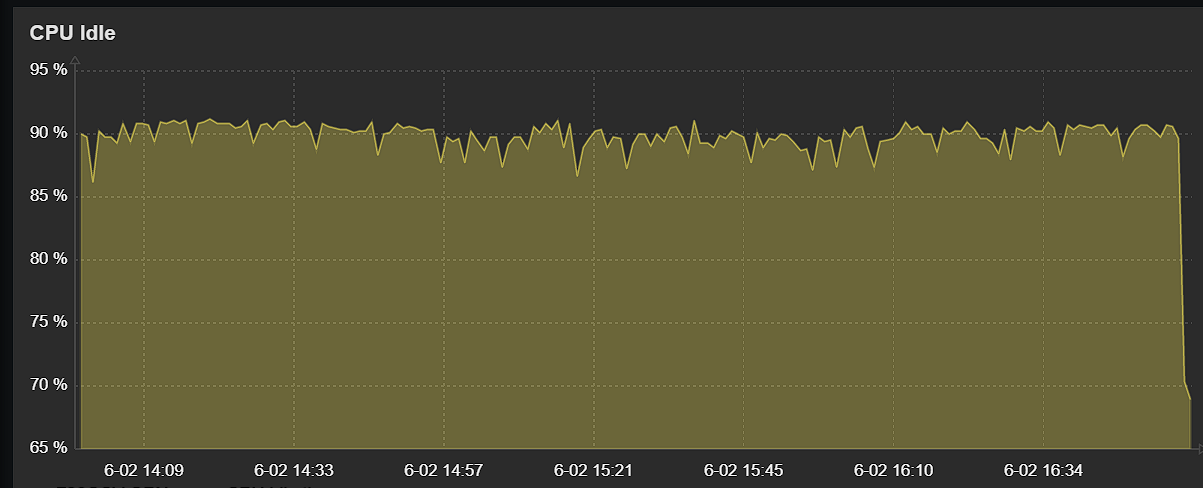

Monitoring shows very good CPU utilization.

-

@michmoor The graph looks as if the CPU became less idle at the end of the test. Seems a bit odd.

Ted

-

@tquade This wasn't during any test, just over the span of 3hrs.

-

Run `top -HaSP` on it during the test and see what's actually happening. I'm betting one core will be pegged at 100%.
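The reason per-core figures matter: an averaged CPU graph can look healthy while one core is saturated. A tiny illustration follows, using made-up busy percentages for a two-core box rather than anything measured on this firewall. The `summarize_cores` helper and its 95% threshold are hypothetical.

```python
def summarize_cores(busy_percent_per_core: list[float], pegged_threshold: float = 95.0):
    """Return the average busy percentage and the indexes of saturated cores."""
    average = sum(busy_percent_per_core) / len(busy_percent_per_core)
    pegged = [
        index
        for index, busy in enumerate(busy_percent_per_core)
        if busy >= pegged_threshold
    ]
    return average, pegged


if __name__ == "__main__":
    # Hypothetical sample: core 0 saturated by a single thread, core 1 nearly idle.
    average, pegged = summarize_cores([100.0, 10.0])
    print(f"average busy: {average:.0f}%, pegged cores: {pegged}")
```

In that hypothetical case the overall graph would show about 55% busy even though throughput is capped by the one saturated core.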