Netgate 2100 ARP problem after replugging WAN port

-

Hmm, do you have client logs leading up to the 'stuck in pending' state?

-

@stephenw10 not more than this. This was the last time I've seen something related to the mentioned VPN tunnel in the logs.

I think it's the same error state as I mentioned in the original problem. By replugging the WAN port, I tear down the VPN tunnel unexpectedly for the functioning part of the tunnel.

After a while both sides try to reestablish the connection.

Client Side

Jul 27 22:35:47 php-fpm 345 /rc.openvpn: OpenVPN: One or more OpenVPN tunnel endpoints may have changed its IP. Reloading endpoints that may use HQ_TO_BUELACH_VPNV4.
Jul 27 22:38:07 rc.gateway_alarm 1141 >>> Gateway alarm: HQ_TO_BUELACH_VPNV4 (Addr:10.0.0.1 Alarm:0 RTT:24.269ms RTTsd:1.975ms Loss:5%)
Jul 27 22:38:07 check_reload_status updating dyndns HQ_TO_BUELACH_VPNV4
Jul 27 22:38:07 check_reload_status Restarting ipsec tunnels
Jul 27 22:38:07 check_reload_status Restarting OpenVPN tunnels/interfaces
Jul 27 22:38:07 check_reload_status Reloading filter
Jul 27 22:38:08 php-fpm 99524 /rc.openvpn: Gateway, none 'available' for inet6, use the first one configured. ''
Jul 27 22:38:08 php-fpm 99524 /rc.openvpn: OpenVPN: One or more OpenVPN tunnel endpoints may have changed its IP. Reloading endpoints that may use HQ_TO_BUELACH_VPNV4

Server Side

Jul 27 03:11:44 WHQ-FW01 php-fpm[41160]: /rc.openvpn: Gateway, NONE AVAILABLE
Jul 27 03:11:44 WHQ-FW01 php-fpm[41160]: /rc.openvpn: OpenVPN: One or more OpenVPN tunnel endpoints may have changed its IP. Reloading endpoints that may use BUELACH_VPNV4.
Jul 27 03:14:00 WHQ-FW01 sshguard[21891]: Exiting on signal.
Jul 27 03:14:00 WHQ-FW01 sshguard[55980]: Now monitoring attacks.
Jul 27 03:14:04 WHQ-FW01 rc.gateway_alarm[97993]: >>> Gateway alarm: BUELACH_VPNV4 (Addr:10.0.0.2 Alarm:0 RTT:26.625ms RTTsd:22.486ms Loss:5%)
Jul 27 03:14:04 WHQ-FW01 check_reload_status[414]: updating dyndns BUELACH_VPNV4
Jul 27 03:14:04 WHQ-FW01 check_reload_status[414]: Restarting IPsec tunnels
Jul 27 03:14:04 WHQ-FW01 check_reload_status[414]: Restarting OpenVPN tunnels/interfaces
Jul 27 03:14:04 WHQ-FW01 check_reload_status[414]: Reloading filter

-

There's only one client on that tunnel I assume?

With the server side assigned as an interface like that, both sides reset when the link goes down because of the gateway failures. You might try disabling the gateway monitoring on the server side so it doesn't reset twice. There could be a timing issue.

Steve

-

@stephenw10 Yes, it's a one-client tunnel.

Can I disable it on the client side instead? I'd like to manage everything on one device.

Thanks, Steve

-

That's worth testing. It's far more common to have the client side assigned though. I wouldn't expect that to cause a problem.

-

I made the change you suggested. It improved the time it takes to reestablish the VPN connection, but I still have the "Waiting for response from peer" status on the server side.

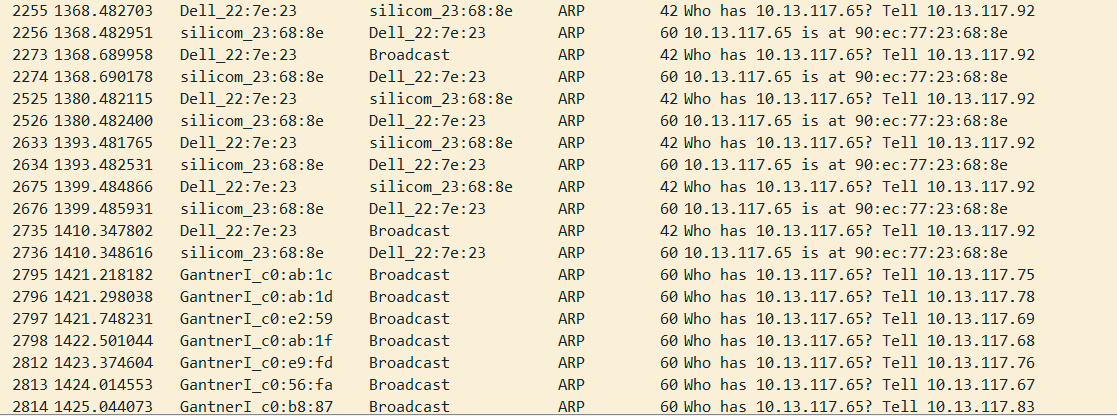

(Client side is fine)

I think I've figured out the cause of my problem:

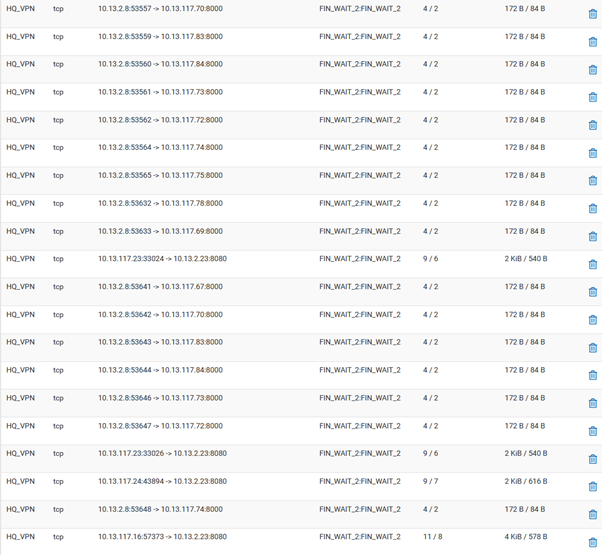

When the VPN tunnel breaks, some states remain in the FIN_WAIT_2 state, which won't allow a new TCP connection until they have been fully closed.
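To check for the same symptom without reading through the whole state table, the lingering entries can be filtered out of a state listing. The following is only a rough sketch in Python: it assumes the listing looks like the output of `pfctl -ss`, with the protocol in the second column and a `SRC_STATE:DST_STATE` pair in the last column. The sample lines, addresses and the helper name `stuck_states` are made up for illustration.

```python
# Illustrative sample only: these lines imitate the general shape of a
# `pfctl -ss` listing. Addresses and ports are documentation placeholders.
SAMPLE = """\
all tcp 198.51.100.1:1194 <- 192.0.2.10:50112       FIN_WAIT_2:FIN_WAIT_2
all tcp 198.51.100.1:1194 <- 192.0.2.10:50344       ESTABLISHED:ESTABLISHED
all udp 198.51.100.1:53 <- 192.0.2.10:40000       SINGLE:MULTIPLE
"""


def stuck_states(listing, marker="FIN_WAIT_2"):
    """Return the TCP state lines where either side is in `marker`."""
    hits = []
    for line in listing.splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[1] != "tcp":
            continue
        # Assumption: the last field is "SRC_STATE:DST_STATE".
        if marker in fields[-1].split(":"):
            hits.append(line.strip())
    return hits


for entry in stuck_states(SAMPLE):
    print(entry)
```

On the firewall itself, the text passed to `stuck_states` would come from running `pfctl -ss` in a shell rather than from the sample string.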

Restarting the VPN tunnel kills the states and that's why it works as a solution.

Is there any way to automatically kill these states after a certain amount of time?

-

You can set 'State Killing on Gateway Failure' to 'Kill states for all gateways that are down' in System > Advanced > Miscellaneous. As long as you have a gateway defined there it should kill those states.

Or you can set the TCP state timeout directly in System > Advanced > Firewall & NAT. That sets it globally though.

However, you can see there are states there for duplicate connections with different source ports. So it would be possible for that to open another state using a new source port with the old states still present.

Steve

-

@stephenw10

This makes it even more complicated.

So if it can create a new state, why doesn't it close the previous one? I don't get why it doesn't close the TCP connection at all. Why does it keep getting stuck at FIN_WAIT_2?

-

That's normal for TCP states. They will close when the state times out, but if the firewall doesn't see the full close sequence between the endpoints it won't immediately close them. I don't expect that to cause a problem though, since a new state will be opened with a different source port and that will be unaffected by any existing states.
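As a rough illustration of why the old states shouldn't get in the way, here is a toy model in Python. It is a simplification and not how pf actually stores states: it only assumes that each state is identified by protocol, source address and port, and destination address and port. The addresses, ports and the helper name `add_state` are made up.

```python
# Toy state table: one entry per (proto, src_ip, src_port, dst_ip, dst_port).
states = {}


def add_state(table, proto, src_ip, src_port, dst_ip, dst_port, status):
    """Record a state under its connection tuple and return that tuple."""
    key = (proto, src_ip, src_port, dst_ip, dst_port)
    table[key] = status
    return key


# Old connection left behind after the tunnel broke.
old = add_state(states, "tcp", "192.0.2.10", 50112, "198.51.100.1", 1194, "FIN_WAIT_2")

# Reconnect attempt from the same client, using a fresh source port.
new = add_state(states, "tcp", "192.0.2.10", 50344, "198.51.100.1", 1194, "ESTABLISHED")

# The two tuples differ in the source port, so both entries coexist.
print(old != new, len(states))
```

Because the source port is part of the key, the reconnect gets its own entry and the stale one simply sits there until it times out.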

Steve

-

@stephenw10

I think you're right.

I've set the Firewall Optimization to normal, which should've set the timeout for "FIN WAIT" to 900 seconds, but it still doesn't kill the state.

So I guess it's back to square one to find out what the problem is.

EDIT

Well, uhm, somehow it solved my problem automatically without restarting the VPN tunnel. I think the Firewall Optimization change worked, but I need to do more testing.