22.05 - CP clients have connectivity issues after x amount of time

-

It definitely 'feels' like Limiters and all of that code changed in 22.05 with the removal of ipfw.

It's probably related to that ticket because it similarly pulls in the expected values when the rulset is reapplied.

Still looking into this...

-

i've found a fancy new party trick to be able to reproduce it on my system:

- disconnect a user from captiveportal by status->captiveportal

- All traffic for all users stop

- reconnect a different user to captiveporal

- traffic starts flowing again for all devices

- repeat this a couple of times to get the blood of users boiling

- create a screencapture

could not attach screencapture gif to this post (>2MB)

see here: https://imgur.com/a/GKCesgF -

If was about to ask about your point 2 : are you sure ?

Then I watched your movie .....

I'm pretty confident some will now know where to look. -

@gertjan

I hope someone will figure this out quickly...If not they'll burn me at the stake

-

You've said that a a save on the captive portal settings page made things flow again.

I mean, when you change your

3.reconnect a different user to captiveporal

for

3 save the portal settings

is the blood temperature going down ?

If I fabricate a small script that runs every minute that compares the list with connected users with the previous minute old list. If something changed, then the script executes a "captive portal save".

Just as a work around, for the time being.

-

@gertjan

just hitting save does not fix the problem.

i've mentioned this in one of the previous posts in this thread:-

status -> filterreload: situation remains the same.

-

status -> services -> captiveportal restart: situation remains the same.

-

services -> CP -> edit -> save: situation remains the same

-

services -> CP -> edit -> change idle timeout + save: situation remains the same

-

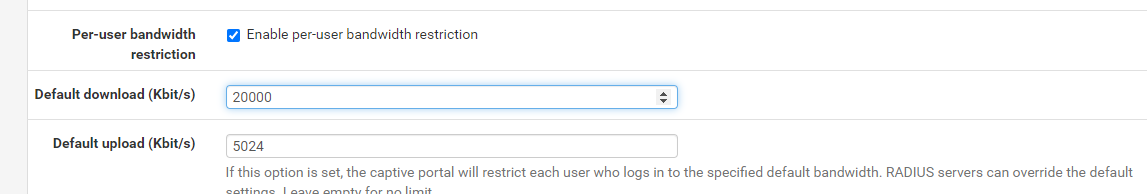

services -> CP ->edit -> change 'per-user-bandwidth-restriction' setting to anything different (doesnt matter if its enable / disable / change of speed) ==> FIXED

-

-

"Save" with a minor change (like change of speed from 10 to 12 Mbits or 12 to 10) => FIXED.

Ok - I work something out. -

with the information i've found today, the issue occurs on disconnect.

i'm currently running an experiment:

i wonder if backend code treats manual disconnects the same way as IDLE timeouts.

Because if the same "bug" is triggered by IDLE-timeouts that could explain the randomness i'm experiencing.So i've currently set the CP idle-timeout to blank

and i'll increase the dhcp leasetime to 8 hours or so to artificially prevent CP-clients from "disconnecting".will post results when i have them

preliminary results:

last 3 hours from 12:25pm -> now (3:10pm) i haven't noticed any more outages.

so it appears the workaround to disable idle-timeout & increasing dhcp-lease-time has a positive effect.

Schools out in less then an hour - so i will continue to monitor the situation on monday. -

Ah, that is a good discovery! Yeah, that has to narrow it down...

-

@heper said in 22.05 - CP clients have connectivity issues after x amount of time:

everytime when nginx.log gets rotated & bzip'd.

re: Bzip, is your 6100 running ZFS? If so you should turn off log compression:

https://docs.netgate.com/pfsense/en/latest/releases/22-01_2-6-0.html#general

"Log Compression for rotation of System Logs is now disabled by default for new ZFS installations as ZFS performs its own compression.Tip

The best practice is to disable Log Compression for rotation of System Logs manually for not only existing ZFS installations, but also for any system with slower CPUs. This setting can be changed under Status > System Logs on the Settings tab." -

@heper said in 22.05 - CP clients have connectivity issues after x amount of time:

i'm currently running an experiments

Not sure if I actually broke mine ....

Read from bottom to top :

2022-09-10 11:12:31.228623+02:00 logportalauth 77440 Zone: cpzone1 - ACCEPT: 001, 78:e4:00:1f:67:05, 192.168.2.122 2022-09-10 11:12:30.969566+02:00 logportalauth 77440 Zone: cpzone1 - Ruleno : 2008 2022-09-10 11:11:58.724810+02:00 logportalauth 54493 Zone: cpzone1 - ACCEPT: 203, 94:08:53:c0:47:63, 192.168.2.6 2022-09-10 11:11:58.462396+02:00 logportalauth 54493 Zone: cpzone1 - Ruleno : 2008 2022-09-10 11:07:22.560836+02:00 logportalauth 3105 Zone: cpzone1 - ACCEPT: x, ea:1a:04:4f:cc:a1, 192.168.2.6 2022-09-10 11:07:22.380192+02:00 logportalauth 3105 Zone: cpzone1 - Ruleno : 2008All portal clients get assigned the same '$pipeno' 2008 ? ?

What I understand :

My pipe numbers :

2000 (2001) For my "Allowed IP Addresses" 192.168.2.2 - an AP1" - no speed limits set

2002 (2003) For my "Allowed IP Addresses" 192.168.2.3 - an AP2" - no speed limits set

2004 (2005) For my "Allowed IP Addresses" 192.168.2.4 - an AP3 " - no speed limits set

2006 (2007) For my "Allowed Host Name" - no speed limits set " - no speed limits setso portal user get assigned pipe number 2008 (+2009); 2010 (+2011), etc.

note : I've added a log line in the function captiveportal_get_next_dn_ruleno() so the returned "pipeno" gets logged.

If a portal user gets delete and 'his' pipe '2008' (+'2009') get deleted, what happens with all the other user using the same pipe ?? ( I do have some ideas )

This also explained the horror video from @heper : all user are are clipped at a total 10 Mbits speed => because they all use the same pipe ?

Could it be that easy as that, a GUI issue ??

I've looked in my radius radacct (mysql table) where I have all my connected users activity : way back (using 2.6.0, not 22.05) I can clearly see that every user gets its own "rulenumber" is "pipeno".

True, I'm using FreeRadius so maybe I see smoke from another fire.

edit :

A script that dumps the content of the "captive portal connected user database" :

All user have the de same

[1] => 2008 [pipeno] => 2008#!/usr/local/bin/php -q <?php /* captiveportal_xxxxxx.php No rights reserved. */ require_once("/etc/inc/util.inc"); require_once("/etc/inc/functions.inc"); require_once("/etc/inc/captiveportal.inc"); /* Read in captive portal db */ /* Determine number of logged in users for all zones */ $count_cpusers = 0; $users = array(); /* Is portal activated ? */ if (is_array($config['captiveportal'])) /* For every zone, do */ foreach ($config['captiveportal'] as $cpkey => $cp) /* Sanity check */ if (is_array($config['captiveportal'][$cpkey])) /* Is zone enabled ? */ if (array_key_exists('enable', $config['captiveportal'][$cpkey])) { $cpzone = $cpkey; $users = captiveportal_read_db(); foreach ($users as $user => $one) print_r($one); } ?> -

@gertjan said in 22.05 - CP clients have connectivity issues after x amount of time:

Could it be that easy as that, a GUI issue ??

i doubt it's a gui issue.

because currently i'm under the impression that i might be hitting the same issue:- with or without per-user-bandwidth enabled (i guess when disabled, everyone gets added to the same unlimited pipe-pair?)

- with manual disconnect on gui

- when CP-client triggers the idle-timeout

on monday i'll resume testing & will try to create some sort of flow-chart (IF x then y | IF a then b)

- with or without per-user-bandwidth enabled (i guess when disabled, everyone gets added to the same unlimited pipe-pair?)

-

random question below possibly not related to get to the bottom of the issue i'm having

disclaimer:i don't know chttps://github.com/pfsense/FreeBSD-ports/blob/9bed0f49e8e21353ae10033112eb4d3b2f0f00dc/devel/php-pfSense-module/files/pfSense.c#L3832

ret = pfctl_clear_eth_rules(dev, path);so 2 arguments are passed to the function

i went looking for the source of the function and found this:

https://github.com/pfsense/FreeBSD-src/blob/10749beca3da9cbbe56ee650dd0a936540cccf7c/sbin/pfctl/pfctl.c#L463pfctl_clear_eth_rules(int dev, int opts, char *anchorname)this function expects 2 integers and a char - but receives a single int and a char.

why does/would this work?edit: perhaps the sources I've linked are not the versions of the compiled files on 22.05?

-

Let me continue.

It all start here :

https://github.com/pfsense/pfsense/blob/9484a1cbdc2fa73cfe24681c342327729ffb6d61/src/usr/local/captiveportal/index.php#L233This is where the classic "user + password" login is happening.

Now, go down to and find line 249 :

$pipeno = captiveportal_get_next_dn_ruleno('auth', 2000, 64500, true);This is where a check (only !!) is executed to see if a "ruleno" or "pipeno" (the name for the same thing varies) is avaible.

The last parameter "true" is the key here. The function is called in 'check mode only'.

This call is correct : if "0" is return then the portal limit is reached, the user can't login.

That's why a check is done here.Look what happens in the function

function captiveportal_get_next_dn_ruleno() :

https://github.com/pfsense/pfsense/blob/9484a1cbdc2fa73cfe24681c342327729ffb6d61/src/etc/inc/captiveportal.inc#L1626A $ruleno ($pipeno) is return, but the file isn't written back - the modification is discarded.

So, at that moment, the proposed $ruleno is still available for the system - and not "used" or "reserved".I'm pretty sure we're in for a race condition situation here. But that will be another story.

Let's continue, back in our index.html.

If $pipeno was zero, a message is shown, the portal user is informed and bye.

Lets continue :

Function captiveportal_authenticate_user() is called with a valid $pipeno, one that was available, as it was tested just above.

$pipeno is used here to hand the value over to a radius auth server, if you use one. Radius want $pipeno to store it

its database, and probably does nothing else with it.Now it happens :

This is executed when the authentification (pfSense local user Manager, FreeRadius, whatever) said "ok for login" :

https://github.com/pfsense/pfsense/blob/9484a1cbdc2fa73cfe24681c342327729ffb6d61/src/usr/local/captiveportal/index.php#L268The proposed (but not reserved) $pipeno is transmitted here :

portal_allow($clientip, $clientmac, $user, $passwd, $redirurl, $auth_result['attributes'], $pipeno, $auth_result['auth_method'], $context);Here is it where things go bad :

If $pipeno was 0 or null, the a $pipeno is retrieved : take note of the fact that the check flag is false now :

line 2081 : $pipeno = captiveportal_get_next_dn_ruleno('auth');(the fourth parameter of function captiveportal_get_next_dn_ruleno is : $check_only = false)

and from now on, $pipeno is used, and captiveportal_get_next_dn_ruleno('auth') is never called again : the code uses a pipe number that doesn't exist.

A next login will get the same $pipeno proposed.

And it won't get registered neither.Because I have 3 IP addresses as "allowed" and one host name, $pipeno 2000 -> 2007 are already used.

When they are added, there is no "test for reservation" used as it is the start of the portal : there won't be other users yet.

My $pipeno stays blocked at 2008, and every portal user uses 2008+2009.

What I actually hope that I'm wrong.

I would have takes some of your time, sorry for that.I'm using 22.05 and I'm using stock files.

Easy fix ......

edit I removed the easy fix ......edit :

@heper if this is not your issue, then it is a new issue.

I had to leave work (where the portal is ) and do testing over VPN from home.I'm sure the Netgate guys will know what to do ;)

-

@gertjan will check it out when i'm on location on monday

don't have ability to test this remotely & the building is closed till mondaydoes every CP-user get it's own pipe-pair even when there are no bandwidth restrictions set ?

-

@heper said in 22.05 - CP clients have connectivity issues after x amount of time:

does every CP-user get it's own pipe-pair even when there are no bandwidth restrictions set ?

I asked myself the same question.

When I was using 2.6.0, it did. My connection 'history, the radius 'radaact' table says so.

Now, mine are all using "2008" regardless if the bandwidth is set, or different, or empty (== unlimited).

But regardless, the issue shown above stays. -

Yes, this seems very likely. I also expect each user to get their own pipe(s). Otherwise the bandwidth set is shared by all users.

It's possible to use dynamic pipes to share bandwidth like that deliberately but that's not what's intended here. -

@gertjan

@stephenw10

arrived at work "early" to verify the things you've discovered this weekend.

current CP settings:

idle timeout: 600minutes (to prevent anyone from idle-timeout during the entire day)

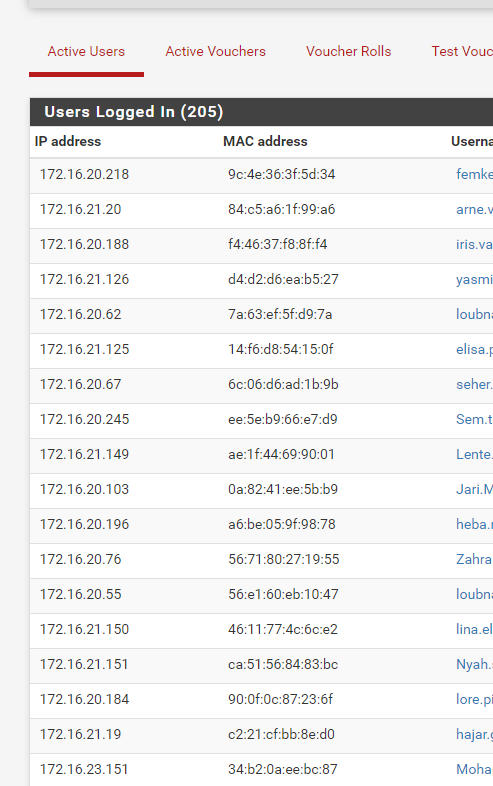

per-user-bw: 240d | 20u mbit/si can confirm all users are using the same pipeno:

username: Ines. pipeno: 2002 MAC: 5e:50:66:f3:e8:7e IP: 172.16.20.57 ************************************************ username: Bchir pipeno: 2002 MAC: 9e:a8:ce:44:7a:e4 IP: 172.16.23.155 ************************************************ username: Jeroe pipeno: 2002 MAC: 9a:7b:b9:e4:c4:1c IP: 172.16.20.50 ************************************************snippet of pfanchordrill:

cpzoneid_2_auth/172.16.23.164_32 rules/nat contents: ether pass in quick proto 0x0800 from 04:b4:29:2d:30:83 l3 from 172.16.23.164 to any tag cpzoneid_2_auth dnpipe 2002 ether pass out quick proto 0x0800 to 04:b4:29:2d:30:83 l3 from any to 172.16.23.164 tag cpzoneid_2_auth dnpipe 2003 cpzoneid_2_auth/172.16.23.23_32 rules/nat contents: ether pass in quick proto 0x0800 from 9e:36:73:93:d4:20 l3 from 172.16.23.23 to any tag cpzoneid_2_auth dnpipe 2002 ether pass out quick proto 0x0800 to 9e:36:73:93:d4:20 l3 from any to 172.16.23.23 tag cpzoneid_2_auth dnpipe 2003 cpzoneid_2_auth/172.16.23.42_32 rules/nat contents: ether pass in quick proto 0x0800 from a4:45:19:64:0c:b8 l3 from 172.16.23.42 to any tag cpzoneid_2_auth dnpipe 2002 ether pass out quick proto 0x0800 to a4:45:19:64:0c:b8 l3 from any to 172.16.23.42 tag cpzoneid_2_auth dnpipe 2003below limiter information in chronological order:

1 working

2 client disconnect = not working

3 client (re)connect = working1 output /sbin/dnctl pipe show:

02002: 20.000 Mbit/s 0 ms burst 0 q133074 100 sl. 0 flows (1 buckets) sched 67538 weight 0 lmax 0 pri 0 droptail sched 67538 type FIFO flags 0x0 16 buckets 1 active BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp 0 ip 0.0.0.0/0 0.0.0.0/0 7 606 0 0 0 02003: 240.000 Mbit/s 0 ms burst 0 q133075 100 sl. 0 flows (1 buckets) sched 67539 weight 0 lmax 0 pri 0 droptail sched 67539 type FIFO flags 0x0 16 buckets 1 active 0 ip 0.0.0.0/0 0.0.0.0/0 37 45954 0 0 0 02000: unlimited 0 ms burst 0 q133072 100 sl. 0 flows (1 buckets) sched 67536 weight 0 lmax 0 pri 0 droptail sched 67536 type FIFO flags 0x0 16 buckets 1 active 0 ip 0.0.0.0/0 0.0.0.0/0 2 156 0 0 0 02001: unlimited 0 ms burst 0 q133073 100 sl. 0 flows (1 buckets) sched 67537 weight 0 lmax 0 pri 0 droptail sched 67537 type FIFO flags 0x0 16 buckets 0 active2 output /sbin/dnctl pipe show AFTER MANUAL DISCONNECT:

02000: unlimited 0 ms burst 0 q133072 100 sl. 0 flows (1 buckets) sched 67536 weight 0 lmax 0 pri 0 droptail sched 67536 type FIFO flags 0x0 16 buckets 0 active 02001: unlimited 0 ms burst 0 q133073 100 sl. 0 flows (1 buckets) sched 67537 weight 0 lmax 0 pri 0 droptail sched 67537 type FIFO flags 0x0 16 buckets 0 active3 output /sbin/dnctl pipe show WHEN A new(old) CLIENT (re)CONNECTS:

02002: 20.000 Mbit/s 0 ms burst 0 q133074 100 sl. 0 flows (1 buckets) sched 67538 weight 0 lmax 0 pri 0 droptail sched 67538 type FIFO flags 0x0 16 buckets 1 active BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp 0 ip 0.0.0.0/0 0.0.0.0/0 41 3143 0 0 0 02003: 240.000 Mbit/s 0 ms burst 0 q133075 100 sl. 0 flows (1 buckets) sched 67539 weight 0 lmax 0 pri 0 droptail sched 67539 type FIFO flags 0x0 16 buckets 1 active 0 ip 0.0.0.0/0 0.0.0.0/0 260 374935 0 0 0 02000: unlimited 0 ms burst 0 q133072 100 sl. 0 flows (1 buckets) sched 67536 weight 0 lmax 0 pri 0 droptail sched 67536 type FIFO flags 0x0 16 buckets 0 active 02001: unlimited 0 ms burst 0 q133073 100 sl. 0 flows (1 buckets) sched 67537 weight 0 lmax 0 pri 0 droptail sched 67537 type FIFO flags 0x0 16 buckets 0 active -

@heper said in 22.05 - CP clients have connectivity issues after x amount of time:

i doubt it's a gui issue.

because currently i'm under the impression that i might be hitting the same issue:with or without per-user-bandwidth enabled (i guess when disabled, everyone gets added to the same unlimited pipe-pair?)

with manual disconnect on gui

when CP-client triggers the idle-timeoutIn any case, captiveportal_disconnect() gets called, and this will delete conditionally delete the pipe ($pipeno) of that user.

If all users use the same pipe, then that will will impact all the users that use that pipe.

edit : removed my script, you - @heper know how to dump the connected portal user database and see pipes used per user..edit : lol, 12 minutes to late : all your users use the same pipe number.

I did this to restore unique pipeno usage :

/usr/local/captiveportal/index.html

$pipeno = captiveportal_get_next_dn_ruleno('auth', 2000, 64500, true); ....... } $auth_result = captiveportal_authenticate_user($user, $passwd, $clientmac, $clientip, $pipeno, $context);I changed the true for false on the first line

Added an 'else' condition.$pipeno = captiveportal_get_next_dn_ruleno('auth', 2000, 64500, false); ....... } else $auth_result = captiveportal_authenticate_user($user, $passwd, $clientmac, $clientip, $pipeno, $context);Now, if a pipe gets attributed, but the login fails, the pipe will get freed up again.

My users have now unique pipes.

-

@gertjan said in 22.05 - CP clients have connectivity issues after x amount of time:

edit : removed my script, you - @heper know how to dump the connected portal user database and see pipes used per user..

i copy/pasted your script yesterday & adjusted it to not print_r the entire array, but only the information i wanted. =)