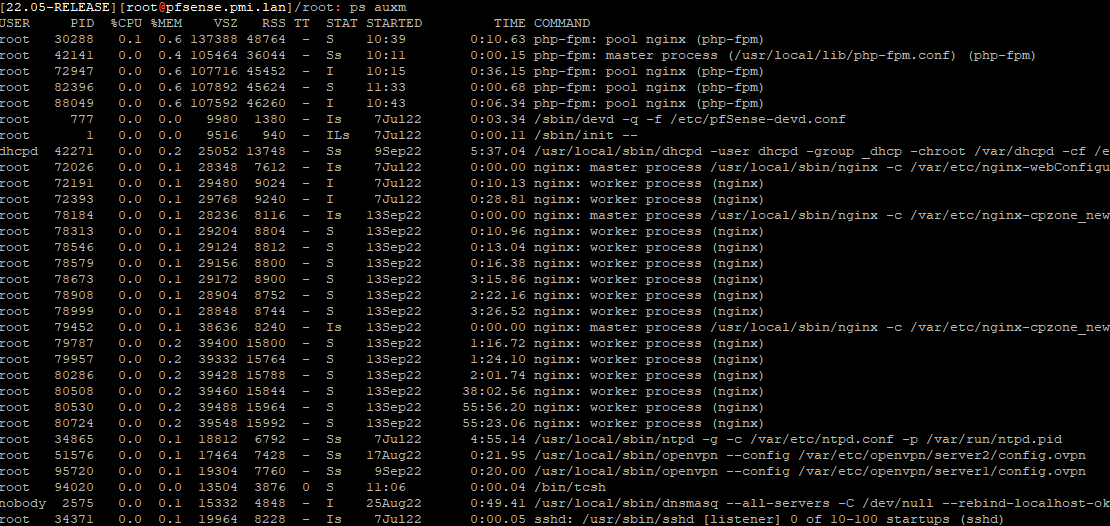

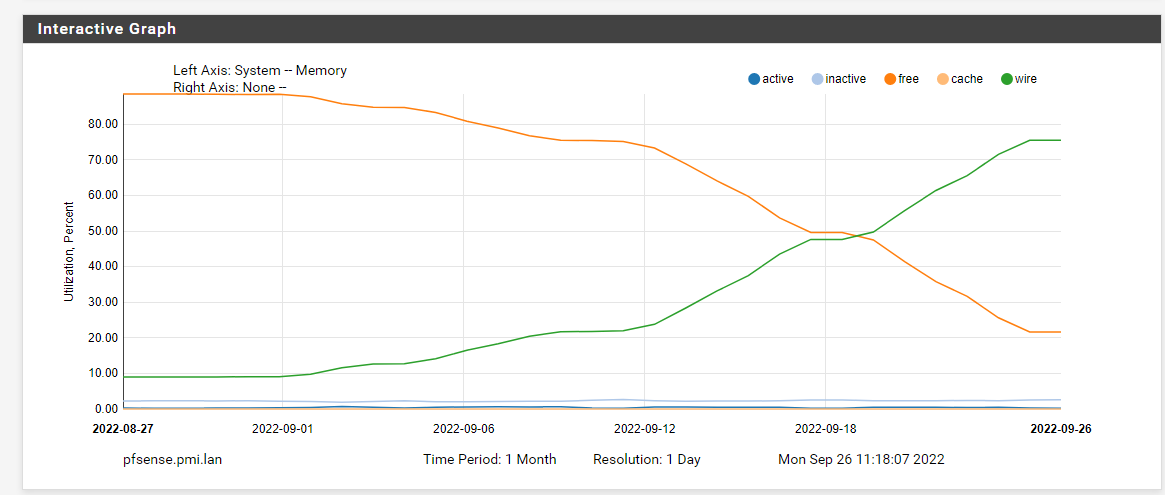

NG6100 MAX - pf+22.05 "wired" memory increasing over time

-

@stephenw10 said in NG6100 MAX - pf+22.05 "wired" memory increasing over time:

It's patched in 23.01 if you're able to test that?

Is this patch also in 2.7.0?

-

@stephenw10 Test 23.01 on a production system with roughly 1,000 users?

Also, it's not mentioned in the 23.01 release notes posted alongside the beta announcement.

-

Likely because it hadn't been marked as feedback yet. It is in 2.7 and 23.01 though. I've updated the ticket.

Steve

-

We need someone who was hitting this in 22.05 to test it in 23.01 to confirm it is fixed there.

Otherwise, if it's not fixed in the current code, it may not be fixed before release.

Steve

-

@stephenw10 Is Netgate willing to verify/test the config of the school that hit this issue on 23.01 before I attempt the upgrade?

I cannot afford to upgrade to a non-working or semi-working system that has bigger issues than the ones I currently have.

So the verification/test would have to include the upgrade itself and confirm that basic connectivity appears to work in a lab environment. There are no add-on packages installed.

Also: schools in Belgium close for the holidays, so between now and January 9th there will be no clients.

Memory seems to increase only when there are "lots" of clients connected to the captive portal (CP). I cannot observe any memory leakage during the weekends.

Also: I read that the current builds still have some debugging enabled that decreases performance. How large a performance hit, in percent, are we talking about on an NG6100?

Conclusion: I'm willing to test this from January 9th if Netgate can confirm that there don't appear to be any issues upgrading from 22.05 to 23.01 with the config I have.

-

Well I can test a config in a 6100 running 23.01 but I obviously can't test it in your network environment.

-

@stephenw10 What's the preferred way to send you that config?

-

You can upload it to me directly here:

https://nc.netgate.com/nextcloud/s/6ZZEPy4RsnWi6mb

-

@stephenw10 You have probably received the config at the link you provided.

There is a cron job that restarts dpinger to work around a different bug.

I believe it's https://redmine.pfsense.org/issues/11570. In some scenarios dpinger keeps reporting the gateway as offline even though it has actually been back up for a while; it has something to do with a PID getting stuck, or something like that.

Could you let me know whether the provided config works on the alpha/beta/RC version (whichever it is at this time)?

-

The config looks good. It installed all the packages. The only issue I saw when importing it was that I had to reboot to populate the aliases. On the first boot there were a number of alerts indicating they had not been populated yet.

Is there anything specific you want me to test here?

-

It would be good if the interfaces work and it doesn't crash :)

What is the expected performance hit with the debugging still enabled?

-

Well it's been up for 20hrs without crashing but that's with no traffic. The interfaces look to be configured correctly but without your infrastructure connected to it I have no way to really test that.

I guess maybe the VLAN_BEZOEKERSWIFI interface with the captive portal on it is the one you might most expect to have issues?

-

@stephenw10 said in NG6100 MAX - pf+22.05 "wired" memory increasing over time:

I guess maybe the VLAN_BEZOEKERSWIFI interface with the captive portal on it is the one you might most expect to have issues?

I hope I won't have issues with the interface itself.

I'm guessing there will be issues with CP... that seems to be a trend ;)

Would it be possible to easily roll back using the built-in ZFS snapshot/boot environment feature (in case of a catastrophic failure during or after the upgrade)?

Does it still handle 1 Gbit/s or more of NAT with debugging enabled?

-

Yes, you can roll back from 23.01 to 22.05 using ZFS snapshots.

Yes, it easily passes 1 Gbps with the debugging options enabled. Here's what the load looks like when passing 940 Mbps between L1_managementinterface and L3_WAN_TELENET0 (after adding an OBN rule):

    last pid: 68653;  load averages:  0.29,  0.32,  0.24  up 0+22:18:09  15:47:00
    241 threads:   6 running, 196 sleeping, 39 waiting
    CPU 0:  0.4% user,  0.0% nice, 30.6% system,  0.0% interrupt, 69.0% idle
    CPU 1:  0.0% user,  0.0% nice, 15.3% system,  0.0% interrupt, 84.7% idle
    CPU 2:  0.4% user,  0.0% nice, 22.7% system,  0.0% interrupt, 76.9% idle
    CPU 3:  0.0% user,  0.0% nice,  1.2% system,  0.0% interrupt, 98.8% idle
    Mem: 202M Active, 434M Inact, 1108M Wired, 773M Buf, 6044M Free
    Swap: 3881M Total, 3881M Free

      PID USERNAME    PRI NICE   SIZE    RES STATE    C   TIME    WCPU COMMAND
       11 root        187 ki31     0B    64K RUN      3  21.8H 100.00% [idle{idle: cpu3}]
       11 root        187 ki31     0B    64K CPU1     1  21.8H  85.53% [idle{idle: cpu1}]
       11 root        187 ki31     0B    64K CPU2     2  21.9H  81.41% [idle{idle: cpu2}]
       11 root        187 ki31     0B    64K RUN      0  22.1H  76.04% [idle{idle: cpu0}]
        0 root        -60    -     0B   880K -        0   0:05  23.69% [kernel{if_io_tqg_0}]
        0 root        -60    -     0B   880K CPU2     2   0:02  18.39% [kernel{if_io_tqg_2}]
        0 root        -60    -     0B   880K -        1   0:08  14.29% [kernel{if_io_tqg_1}]
        0 root        -64    -     0B   880K -        0   2:18   0.19% [kernel{dummynet}]
    50087 root         20    0    14M  4228K CPU3     3   0:00   0.10% top -HaSP

Steve

-

@stephenw10 OK, thanks for testing.

I will try to find an upgrade window at the end of this week.

Hopefully I will not regret this.

-

@stephenw10 The update went OK last night.

I will monitor memory usage over the next couple of days. On 22.05, memory increased by around 7% every school day.

-

"Wired" memory has increased by only 0.8% from Monday to Thursday.

I will keep monitoring it over the next couple of weeks. The huge memory leak appears to be fixed, but perhaps it's a bit too soon to close the Redmine ticket?
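For anyone tracking the same thing, here is a minimal sketch of sampling the "wired" share of memory once per school day, so day-over-day growth can be compared like the 7% and 0.8% figures mentioned earlier. It assumes it runs on the firewall itself, where the FreeBSD sysctl OIDs `vm.stats.vm.v_wire_count` (pages), `hw.pagesize` and `hw.physmem` are available, and it assumes the percentages are shares of total physical memory. All function names are hypothetical; this is not a Netgate tool.

```python
import re
import subprocess

# Assumption: executed on the FreeBSD-based firewall, where these OIDs exist.
# Helper names below are hypothetical, for illustration only.
WIRE_OID = "vm.stats.vm.v_wire_count"  # wired memory, in pages
PAGESIZE_OID = "hw.pagesize"           # bytes per page
PHYSMEM_OID = "hw.physmem"             # physical memory, in bytes


def read_sysctl(name: str) -> int:
    """Return an integer sysctl value using `sysctl -n`."""
    result = subprocess.run(
        ["sysctl", "-n", name], capture_output=True, text=True, check=True
    )
    return int(result.stdout.strip())


def wired_percent(wire_pages: int, page_size: int, physmem_bytes: int) -> float:
    """Wired memory as a percentage of physical memory."""
    return 100.0 * wire_pages * page_size / physmem_bytes


def wired_mb_from_top(mem_line: str) -> int:
    """Extract the Wired figure from a `top` "Mem:" line (only handles the M suffix)."""
    match = re.search(r"(\d+)M Wired", mem_line)
    if match is None:
        raise ValueError("no 'NNNM Wired' field in line")
    return int(match.group(1))


def growth_points(earlier_pct: float, later_pct: float) -> float:
    """Change in the wired share between two samples, in percentage points."""
    return later_pct - earlier_pct


def sample_wired_percent() -> float:
    """Take one sample of the wired share on the local machine."""
    return wired_percent(
        read_sysctl(WIRE_OID), read_sysctl(PAGESIZE_OID), read_sysctl(PHYSMEM_OID)
    )
```

Calling `sample_wired_percent()` once a day (for example from cron) and logging the result gives a simple series; `growth_points()` then shows the change between any two days, and `wired_mb_from_top()` can pull the same figure out of a saved `top` header instead.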

-

Nice. Yeah more data is better but that is very promising.

Add that to the ticket when you can.

Steve

-

@stephenw10 I've updated the ticket.