6100 SLOW in comparison to Protectli FW6E

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

Protectli had Intel NICs, as I posted above.

Can you give me the exact model, or else the specific FreeBSD driver being used? For example, em, igb, ix, etc.? Not all Intel NICs use the same driver in FreeBSD.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

Yes, it's definitely caused by heavy traffic.

Unfortunately, I don't have a test bed that seems able to reproduce the problem. Ditto for the upstream Suricata developer working on this same issue. And not even the OPNsense principal developer has been able to duplicate the stall/hang so far as I know. But there is a European user posting in the Suricata Redmine thread here that can reliably reproduce the problem. He is using a virtual environment, so virtual NIC drivers instead of actual hardware.

There is obviously an issue, but so far we have been unable to identify the cause so we can fix it. The first step is being able to reliably reproduce the stall/hang, and thus far that has not been done on a developer's machine.

-

@bmeeks https://eu.protectli.com/product/fw6e/

Interfaces were em0 and em1

This unit is a 6100. The freeze has got me panicked, and I really can't afford for it to lose connectivity "at random".

-

@bmeeks Perhaps Netgate can provide you a 6100 for this. It's in their interest too, as pfSense is only as good as its packages.....

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks https://eu.protectli.com/product/fw6e/

Interfaces were em0 and em1

This unit is a 6100. The freeze has got me panicked, and I really can't afford for it to lose connectivity "at random".

Understood. My request was just "if possible", but I certainly appreciate the need for stability.

Some other questions, though, if you will answer them --

On the Protectli hardware, were you also using Inline IPS Mode and able to achieve essentially line-rate speed tests on the Gigabit link with Suricata running? I think that answer is "yes" from reading your posts in this thread, but I want to be sure.

And you say the Protectli Ethernet drivers were the `em` interfaces. That is potentially a valuable clue because, as I said previously, there are some differences in the drivers that support Intel NIC families on FreeBSD. I have shared your experience in the Suricata Redmine issue thread I referenced earlier.

-

@bmeeks ANY questions you need!!!!

I used exactly the same config on the Protectli. I imported the config from there actually.

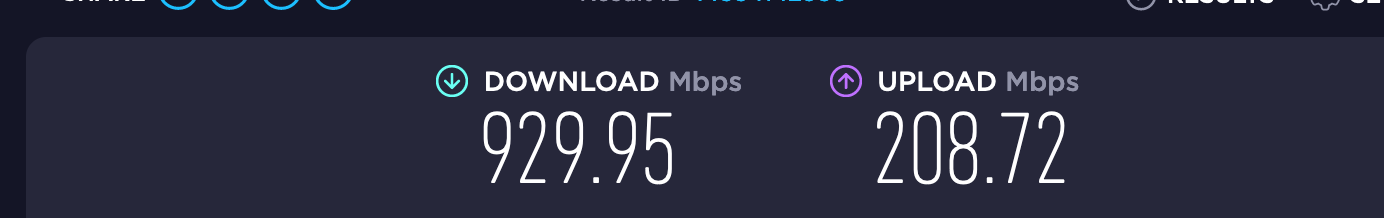

Inline IPS mode, with all ET Open rules activated, and I got the full 980 Mbps download speed.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks ANY questions you need!!!!

I used exactly the same config on the Protectli. I imported the config from there actually.

Inline IPS mode, with all ET Open rules activated, and I got the full 980 Mbps download speed.

Thanks. I will continue looking into this. There are three of us still looking at this issue: me, a Suricata developer, and from time to time the OPNsense principal developer. Whatever fix is found will be incorporated into Suricata upstream, so you can follow the Suricata Redmine Issue if you would like. It might also turn out to be something that needs fixing in FreeBSD itself. That actually seems most likely to me, as no Suricata users on Linux have reported anything similar.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks Perhaps Netgate can provide you a 6100 for this. It's in their interest too, as pfSense is only as good as its packages.....

I have an SG-5100 which, if I recall, has the same NICs. But I am using that one for my production firewall at the moment so kind of in the same situation as you with regards to needing it up and running.

I will soon have some other spare PC hardware, and perhaps I can grab an Intel NIC card for it that uses the ix and/or igc drivers.

-

@bmeeks I actually started with OPNsense on Proxmox. It ran fine for 9 months until https://forum.opnsense.org/index.php?topic=31338.0

This is when I switched to pfSense.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks I actually started with OPNsense on Proxmox. It ran fine for 9 months until https://forum.opnsense.org/index.php?topic=31338.0

This is when I switched to pfSense.

Yes, the issue you linked to there is part of the same Suricata problem. In fact, the issue thread you linked to on the OPNsense forum was the reason the OPNsense developer opened the Suricata Redmine Issue I linked earlier (#5744).

What happened is that starting with Suricata 6.0.9 the upstream group merged in the same netmap device changes for multiple host rings support that we have been using in pfSense since August 2021. I created the original patch to use multiple host rings with Suricata Inline IPS netmap mode and submitted it upstream. It was rather quickly merged into the Suricata 7.x development branch, but not into the 6.0.x Master release branch at that time. But I did merge the patch into the 6.0.x version of Suricata we were using on pfSense. It has been in all Suricata versions used with pfSense since August 2021.

I am not aware of any stalling issues reported on pfSense when the change was merged. I can only recall a single poster (and he posted on the Suricata forum, not here on the Netgate forum) who has had an issue, and his issue was reported about two months ago. But immediately after the patch rolled out with the release of Suricata 6.0.9, OPNsense users began experiencing the stall/hang. Eventually OPNsense rolled back to the netmap code that was in Suricata 6.0.8 (essentially reverting the multiple host rings patch). That stabilized Suricata for their users. We are still trying to determine what's up with the new patch.

One key difference, and your experience today reiterates the importance of this difference, is that runmode "workers" is the default on OPNsense while runmode "autofp" is the default on pfSense. Seems "workers" mode is the problem child for some reason. Per your testing, switching to "workers" runmode results in the hang/stall. Running with "autofp" mode seems to be stable, but produces lower throughput.

-

@manilx

I'll ask a simple question.

Is it really necessary to use Inline mode?

I repeatedly tried running various network cards in this mode, most of them Intel (ix, igb, igc) and even, ridiculous as it sounds, one Realtek... Sooner or later problems arose, such as a kernel panic or something else. That's why I use Legacy Mode in production.

Service unavailability outweighed my paranoia.

-

@w0w You might have a point there.

I guess since it was the newer way to do this and it was available, I thought it should work....

-

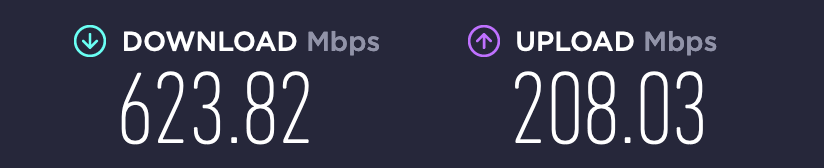

@manilx Tried legacy and it's even slower than inline.

Not an option!

-

@manilx

You did reboot your firewall after switching to Legacy, didn't you?

I don't know what's wrong this time, but usually it's exactly the opposite, something like this:

Should performance differ so much LEGACY/INLINE IDS?

Of course, I understand that a production firewall is completely unsuitable for various kinds of tests, but nevertheless the problem is most likely in some kind of configuration... I think so. One of my two firewalls worked fine with Suricata, and its specs are roughly comparable to the 6100: it's a Celeron(R) CPU N3160 with 16GB of memory. My bandwidth is only about 600 Mbit, so it is possible I did not reach some limit. Still, I remember that one of the NICs I tested showed slow speed with Inline mode and normal speed with Legacy; that's why I thought it might help.

...

-

@w0w Yes, I rebooted.

Yesterday Netgate support logged into my 6100 and found nothing unusual!

I find inline working better than legacy here. It's just that the speed is SO much lower than on the Protectli unit. This CPU is really weak for its job! They market it otherwise....

The solution would be "workers" mode if it didn't "crash" the unit.

I will have to wait for a future working version. Until then I have Suricata running with a greatly reduced set of rules.

Or I'll try to replace this with the 8200 or go back to my Protectli ;)

-

I'll try to address a few of the many questions raised in the last few posts.

Which is better - Legacy Mode or Inline IPS Mode?

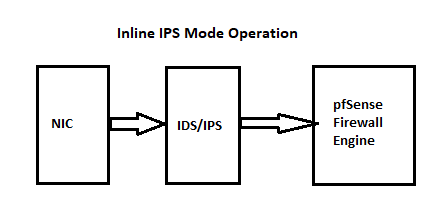

The answer depends partly on your security goals, but it also depends on your specific NIC hardware. Let's start with how the two modes actually function, then proceed to the security goal question.

Inline IPS Mode places the Suricata inspection engine directly in the line of packet flow from the physical NIC to the kernel network stack. Suricata inserts itself in between those two points using the kernel netmap device. Netmap allows a userland application to interact directly with kernel-mapped memory buffers and thus gain a large speed advantage for certain types of network packet operations. When using Inline IPS Mode, Suricata will see inbound traffic on the interface before the firewall engine. That means Suricata sees the traffic before any firewall rules have been evaluated. This is of particular note when you run Suricata on the WAN, because there it will see and process a lot of Internet noise for no measurable increase in security. It will just waste processing cycles analyzing the noise traffic the firewall rules are likely going to drop anyway.
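A toy Python model can make the WAN point concrete. It is only a sketch under stated assumptions, not real netmap or `pf` code: the `Packet` type, the `ALLOWED_PORTS` policy, and the `firewall_allows()` helper are hypothetical stand-ins. It illustrates nothing more than the ordering described above, in which Suricata inspects every inbound packet before any firewall rule is evaluated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst_port: int


# Hypothetical WAN policy: only inbound traffic to port 443 is allowed.
ALLOWED_PORTS = {443}


def firewall_allows(pkt: Packet) -> bool:
    """Hypothetical stand-in for pf rule evaluation (greatly simplified)."""
    return pkt.dst_port in ALLOWED_PORTS


def inline_on_wan(packets):
    """Inline IPS Mode on the WAN: Suricata sees each packet before pf does.

    Returns (packets Suricata inspected, packets the firewall let through,
    inspections spent on traffic pf would have dropped anyway).
    """
    inspected = 0
    wasted = 0
    delivered = []
    for pkt in packets:
        inspected += 1                # Suricata spends CPU on every packet
        if firewall_allows(pkt):      # pf rules are evaluated only afterwards
            delivered.append(pkt)
        else:
            wasted += 1               # Internet noise pf drops regardless
    return inspected, delivered, wasted


if __name__ == "__main__":
    noise_and_real = [
        Packet("198.51.100.7", 23),   # hypothetical telnet scan
        Packet("203.0.113.9", 445),   # hypothetical SMB probe
        Packet("192.0.2.1", 443),     # the one flow the policy allows
    ]
    inspected, delivered, wasted = inline_on_wan(noise_and_real)
    print(inspected, len(delivered), wasted)  # 3 1 2
```

Two of the three inspections in this example go to packets the firewall would have dropped on its own, which is the wasted work the paragraph above warns about.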

With Inline IPS Mode Suricata intercepts network packets, assigns them to a network flow, and then analyzes them using the signatures configured in the rules engine. Once a verdict is obtained for a given flow, that flow is either allowed (passed) or dropped (blocked). If the flow verdict is "pass", then all additional traffic on that flow is passed with no further inspection. It is important to note that Suricata does not inspect every packet every time. It only gathers and buffers enough packets to satisfy the requirements of the configured rules and then either declares a verdict of "pass" or "drop" for that traffic flow. Depending on the particular signature, the verdict may be obtained after as little as a single packet, or it may require buffering and analyzing several dozen packets before a verdict can be rendered. But with Inline IPS Mode, no packets continue on to their destination until AFTER the verdict has been obtained. So we say Inline IPS Mode has no packet "leakage". The flow is buffered until a verdict is obtained, and then the buffered data is either passed on to the kernel or it is simply dropped and not passed on to the kernel.
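The buffering behavior described above can be sketched as a small Python model. This is an illustration under loud assumptions, not Suricata's implementation: the `InlineIPS` class, the fixed `packets_needed` depth, and the `is_malicious` predicate standing in for the rules engine are all hypothetical (real signatures need anywhere from one packet to several dozen, as noted above). What it does show faithfully is the key property: a flow's packets are held until a verdict exists, a "drop" means none of them reach the kernel, and a "pass" releases the held packets and lets later ones through uninspected.

```python
from collections import defaultdict

PASS, DROP = "pass", "drop"


class InlineIPS:
    """Toy model of Inline IPS Mode: a flow's packets are held until a verdict."""

    def __init__(self, packets_needed, is_malicious):
        # Hypothetical knobs: how many packets the signature needs before it
        # can decide, and a predicate standing in for the rules engine.
        self.packets_needed = packets_needed
        self.is_malicious = is_malicious
        self.buffers = defaultdict(list)  # flow id -> packets held back
        self.verdicts = {}                # flow id -> PASS or DROP
        self.delivered = []               # what was handed on to the kernel

    def on_packet(self, flow, payload):
        verdict = self.verdicts.get(flow)
        if verdict == PASS:
            self.delivered.append((flow, payload))  # no further inspection
            return
        if verdict == DROP:
            return                                  # never reaches the kernel
        held = self.buffers[flow]
        held.append(payload)                        # buffer, do not forward yet
        if len(held) < self.packets_needed:
            return
        # Enough data buffered: render one verdict for the whole flow.
        verdict = DROP if self.is_malicious(held) else PASS
        self.verdicts[flow] = verdict
        if verdict == PASS:
            self.delivered.extend((flow, p) for p in held)
        del self.buffers[flow]


if __name__ == "__main__":
    ips = InlineIPS(packets_needed=3,
                    is_malicious=lambda held: b"EXPLOIT" in b"".join(held))
    for p in [b"GET /", b"EXPLOIT", b"more", b"tail"]:
        ips.on_packet("bad", p)
    for p in [b"GET /", b"ok", b"fine", b"later"]:
        ips.on_packet("good", p)
    leaked = [d for d in ips.delivered if d[0] == "bad"]
    print(len(leaked), len(ips.delivered))  # 0 4
```

None of the malicious flow's packets are delivered (no leakage), while all four packets of the clean flow get through once its verdict is "pass".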

Here is a diagram I have shared many times on the forum showing how Inline IPS Mode operates:

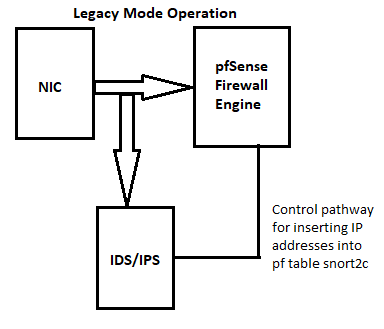

Now let's switch to Legacy Mode. In Legacy Mode Suricata obtains copies of inbound packets as the packets traverse the path from physical NIC to kernel network stack using `libpcap` (the PCAP library). Of note here is the word "copy". The original packet continues on uninterrupted to the kernel stack and eventually to the intended host. Only the copied packet flows to Suricata for inspection. As described earlier with Inline IPS Mode, Suricata will buffer the inbound packets in a flow until it has accumulated enough to reach a verdict on the rule signature being evaluated. If the verdict is "alert" or "drop" (meaning the rule triggered), then Suricata makes a pfSense system call to insert the IP address of the offending traffic flow into the `pf` firewall engine table named snort2c. IP addresses added to this table are blocked by a built-in hidden firewall rule created by pfSense at startup. This is how Legacy Mode "blocks" -- it puts the offending IP address into that `pf` table. Once in the table, all traffic to or from that IP address is blocked. It is a very big hammer -- all traffic on any port or any protocol with that IP is blocked. Inline IPS Mode is not nearly so blunt. It drops individual packets (flows, actually) without blocking everything bound for the IP in question. Think of Inline IPS Mode as a fine-bladed diamond scalpel and Legacy Mode as a big sledgehammer. Here is a diagram describing how Legacy Mode works:

So Which Should I Choose?

This is where your security goal comes in. First, Inline IPS Mode guarantees you that no packet leakage will occur. Nothing flows to the destination host until Suricata has issued a verdict on the network flow. But with Legacy Mode, all the initial packets of the flow have continued on to the destination host while Suricata has been busy deciding upon the verdict using the copied packets. So packet leakage has happened. The case can certainly be made that Legacy Mode is not nearly as secure as Inline IPS Mode. Legacy Mode will block subsequent traffic to or from that malicious host, but it will also let the initial burst through to the victim. That may well be all it takes to "infect" or otherwise exploit the victim host.

So at first blush it seems Inline IPS Mode is what you should always use. But it's not that simple. For starters, Inline IPS Mode requires the netmap kernel device, and that device is not supported by all NIC hardware. Even different NICs from the same vendor may not have the same level of support. And the netmap device is still a bit buggy in FreeBSD. When it works, it works great. But if your hardware has problems with netmap, then netmap and Inline IPS Mode are not going to be reliable. Inline IPS Mode will also reduce throughput: since it sits inline with the traffic flow, things actually slow down. Legacy Mode, by virtue of using PCAP to obtain copies of packets, does not slow things down much at all. But it can result in "silent drops" of packets on their way to Suricata. What that means is `libpcap` was not able to keep up with the traffic flow when copying and thus did not forward all of the needed packet copies over to Suricata for inspection. With either mode, you want a lot of CPU processing power and quite a bit of RAM available for the best performance.

Summary

There is no silver bullet here. Each mode has its tradeoffs. You as the admin must decide what makes the most sense for your network environment. And I will repeat once more to keep it fresh in your head -- Suricata cannot see anything inside encrypted traffic unless you configure third-party MITM, and nearly all Internet traffic today is encrypted (HTTPS, TLS, DoH, DoT, etc.). So the vast majority of the traffic crossing your firewall is hidden from your IDS/IPS. Factor that into your decisions about configuring and deploying an IDS/IPS. When it comes to IDS/IPS, security today is much better served on the final endpoints (workstations, desktops, servers, and mobile devices) than on the firewall.

-

@bmeeks Thanks again for all this info.

Considering this and all of the above, I have stopped using Suricata for the time being (I've been at this for the better part of 3 months, starting with OPNsense failing and then switching to pfSense).

I have pfBlockerNG (a BIG advantage over OPNsense) configured with a good set of IP rules, and it's catching a lot, so I guess I'm as safe as can be.

-

@manilx Will get an 8200 MAX tomorrow to replace this 6100. Will see how that one goes with my "normal" Suricata setup (in comparison to the Protectli).

Will post results......

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@manilx Will get an 8200 MAX tomorrow to replace this 6100. Will see how that one goes with my "normal" Suricata setup (in comparison to the Protectli).

Will post results......

More CPU horsepower will certainly help throughput, but I would not expect much of an improvement in stability when switching the runmode to "workers" in Suricata.

The throughput improvement will come from having extra CPU capacity to allocate to rules signature analysis while still leaving adequate processing for basic network I/O. A box with a more limited CPU can start to run out of juice for network I/O (read that as being able to quickly respond to NIC driver interrupts) if it is also heavily taxed analyzing network flows in Suricata.

-

@bmeeks Yes, I do not intend to switch to "workers" (I didn't use it on the Protectli either).

Just looking for more CPU to increase bandwidth at this point.....