6100 SLOW in comparison to Protectli FW6E

-

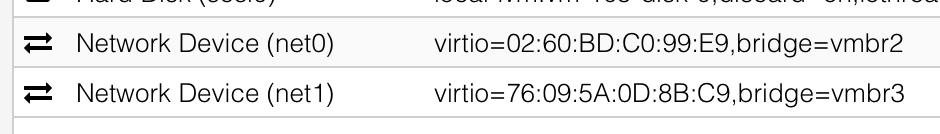

2 Virtio network cards. No passthrough.

I'll run a few speedtests but will not keep it running for a longer time... Hope this helps.

-

@manilx P.S. I'll do it later tonight in a couple of hours.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

2 Virtio network cards. No passthrough.

I'll run a few speedtests but will not keep it running for a longer time... Hope this helps.

The main thing I want to see is if there is a change in the positive direction. Meaning either it works without stalling, or it works noticeably longer before stalling.

Did this setup work okay with Suricata prior to November of last year? If so, I'm hoping it returns the same level of reliability you had prior to the end of last year when the new version of Suricata 6.0.9 was rolled out with OPNsense.

-

@bmeeks Well, I have posted my issue with OPNsense in a link above.

I lost internet connection out of the blue. But not consistently and I couldn't force it!

I don't know if it was Suricata back then. What I do know is that it happened when I upgraded OPNsense to a newer release (all in the post above). So I don't think this test can help. I won't have the VM running for longer than a few speedtests.

If this is OK I'll do it, if not then I'll spare myself the hassle.

I moved for good to pfsense (because of the above and the "attitude" I got from the developer!!).

I now have pfsense hardware and all.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks Well, I have posted my issue with OPNsense in a link above.

I lost internet connection out of the blue. But not consistently and I couldn't force it!

I don't know if it was Suricata back then. What I do know is that it happened when I upgraded OPNsense to a newer release (all in the post above). So I don't think this test can help. I won't have the VM running for longer than a few speedtests.

If this is OK I'll do it, if not then I'll spare myself the hassle.

I moved for good to pfsense (because of the above and the "attitude" I got from the developer!!).

I now have pfsense hardware and all.

I think I recall checking out your post in the link, but I have forgotten the details. I've been juggling a lot of balls in the air with various Suricata changes recently, and I'm not a spring chicken anymore, so things can escape my brain into the ether.

Is your VM setup of pfSense using CE or Plus? If using either 23.01 Plus, or 2.7 CE DEVEL, I can send you a "fixed" Suricata binary for pfSense you can test with. I can also make you one for 2.6 CE, but it will take a bit to build.

-

@bmeeks VM is only the "old" OPNsense.

I'm running pfsense+ 23.01 on a brand new 8200max now.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks VM is only the "old" OPNsense.

I'm running pfsense+ 23.01 on a brand new 8200max now.

That's fine. If you can fire up the old VM and test with the Suricata package Franco linked in the Suricata Redmine Issue thread, that would be helpful. Just bring it up and run a few speed tests. Based on some of the earlier reports from other users, I would expect a speed test to trigger the stall fairly quickly, or at least several back-to-back speed tests on Gigabit or higher links should trigger it. If the speed tests work well, then that increases my confidence in the patch.

My problem with testing locally is that currently I don't have a suitable setup in place. I hope to free up some hardware next week that will help. The bug needs to be exercised with really heavy traffic loads in order to show up. I've never been able to get it to show with my feeble VMware Workstation testing setup (not surprised at all there, though).

-

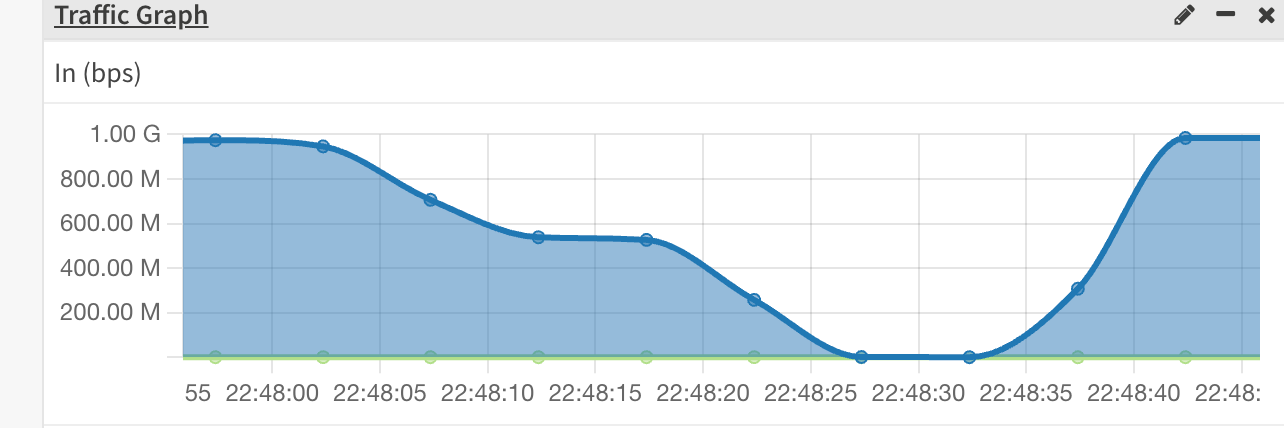

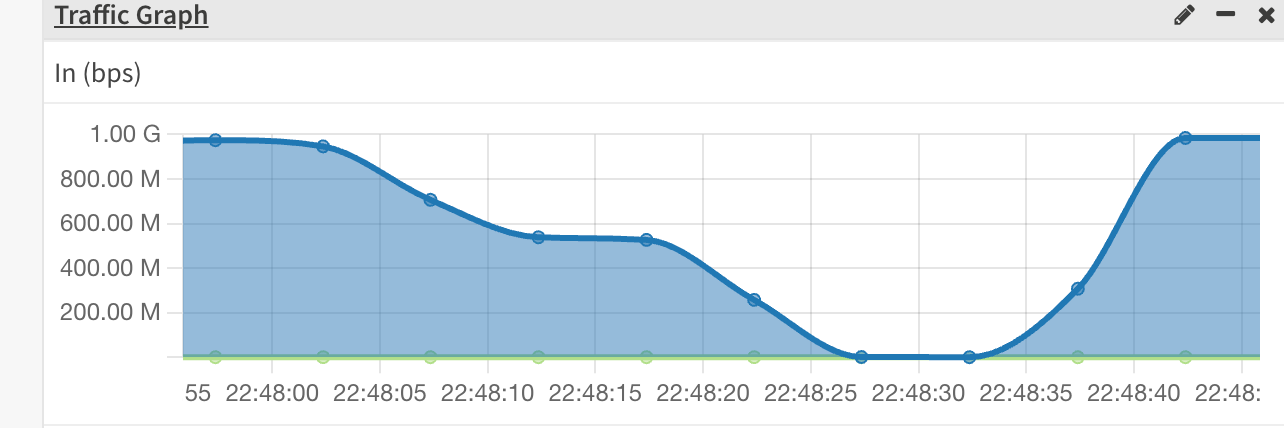

@bmeeks OK!

Updated OPNsense to the latest version.

Installed the suricata test package.

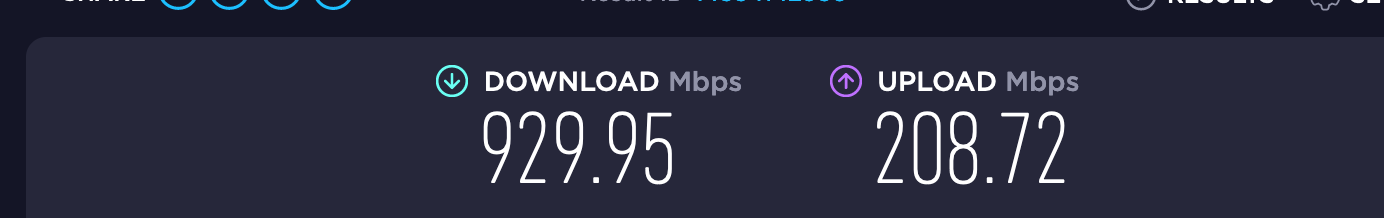

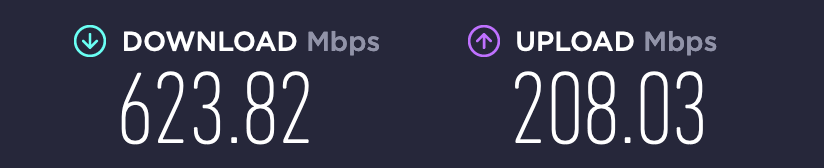

Ran half a dozen speedtests, saturating the line at 930+.

No problem so far.

Reverted to 8200.

Hope this helps

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks OK!

Updated OPNsense to the latest version.

Installed the suricata test package.

Ran half a dozen speedtests, saturating the line at 930+.

No problem so far.

Reverted to 8200.

Hope this helps

Yes! This is very helpful. It proves the patch works. Sorry to be a little late replying. Had to leave home to attend a meeting and just returned.

Thank you very much for testing. I will pass the word on to the Suricata team.

-

@bmeeks You're welcome. Hope to see the results in pfsense soon.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks You're welcome. Hope to see the results in pfsense soon.

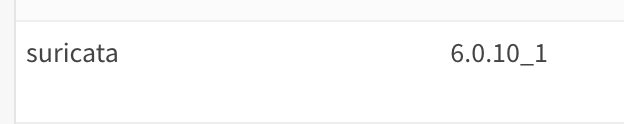

The pull request is posted now and awaiting review and approval by the Netgate team. They have some more important fires to put out relative to the just-released 23.01 update, but I expect them to get the new package version merged soon. It will show as Suricata-6.0.10 when released. The pull request for updating the binary portion is here: https://github.com/pfsense/FreeBSD-ports/pull/1226.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks You're welcome. Hope to see the results in pfsense soon.

The pull requests for updating Suricata have been accepted by the Netgate team. For the moment, automatic package builds are disabled in the 23.01 branch so the new version will not show up yet. The automatic builds should be re-enabled soon, and after that you will see a Suricata-6.0.10 package available containing the latest fixes.

Once you have that installed, go to the INTERFACE SETTINGS tab and change the Run Mode under the Performance and Detection Engine Settings section to "workers", save the change, and then restart Suricata on the interface. You should see a marked increase in throughput using Inline IPS Mode after that. And with the new fix, the stall/hang condition is hopefully gone!

-

@bmeeks Awesome. Once it's there I'll try it immediately and post back here.

-

@manilx:

The new updated Suricata package containing the netmap stall fix has been posted now for pfSense 23.01. The new Suricata package version is 6.0.10_1.

I am interested in how this new package performs for you. For peak throughput when using Inline IPS Mode, remember to change the Run Mode parameter from its default of "autofp" to "workers". Save that change and then restart Suricata on the interface.

-

@bmeeks Hi. Updated Suricata. Changed run mode to workers.

Restarted on interface. Doesn't start. Tried several times to restart, it doesn't.

Rebooted. Suricata not started.

Reverted to snapshot (updated Suricata but not workers mode). Working.

-

@manilx said in 6100 SLOW in comparison to Protectli FW6E:

@bmeeks Hi. Updated Suricata. Changed run mode to workers.

Restarted on interface. Doesn't start. Tried several times to restart, it doesn't.

Rebooted. Suricata not started.

Reverted to snapshot (updated Suricata but not workers mode). Working.

Dang! Post the output of the suricata.log file for a failed startup in "workers" run mode.

-

@bmeeks Will have to boot back to other snapshot and try.

-

    21/2/2023 -- 20:14:21 - <Notice> -- This is Suricata version 6.0.10 RELEASE running in SYSTEM mode
    21/2/2023 -- 20:14:21 - <Info> -- CPUs/cores online: 8
    21/2/2023 -- 20:14:22 - <Info> -- HTTP memcap: 67108864
    21/2/2023 -- 20:14:22 - <Info> -- Netmap: Setting IPS mode
    21/2/2023 -- 20:14:22 - <Info> -- fast output device (regular) initialized: alerts.log
    21/2/2023 -- 20:14:22 - <Info> -- http-log output device (regular) initialized: http.log
    21/2/2023 -- 20:14:24 - <Info> -- 1 rule files processed. 6453 rules successfully loaded, 0 rules failed
    21/2/2023 -- 20:14:24 - <Warning> -- [ERRCODE: SC_ERR_EVENT_ENGINE(210)] - can't suppress sid 2260002, gid 1: unknown rule
    21/2/2023 -- 20:14:24 - <Info> -- Threshold config parsed: 2 rule(s) found
    21/2/2023 -- 20:14:24 - <Info> -- 6453 signatures processed. 910 are IP-only rules, 0 are inspecting packet payload, 5376 inspect application layer, 108 are decoder event only
    21/2/2023 -- 20:14:25 - <Info> -- Going to use 8 thread(s)
    21/2/2023 -- 20:14:26 - <Info> -- devname [fd: 6] netmap:ix3-0/R@conf:host-rings=8 ix3 opened
    21/2/2023 -- 20:14:26 - <Info> -- devname [fd: 7] netmap:ix3^0/T@conf:host-rings=8 ix3^ opened
    21/2/2023 -- 20:14:26 - <Info> -- devname [fd: 11] netmap:ix3-1/R ix3 opened
    21/2/2023 -- 20:14:26 - <Info> -- devname [fd: 12] netmap:ix3^1/T ix3^ opened
    21/2/2023 -- 20:14:26 - <Info> -- devname [fd: 14] netmap:ix3-2/R ix3 opened
    21/2/2023 -- 20:14:27 - <Info> -- devname [fd: 15] netmap:ix3^2/T ix3^ opened
    21/2/2023 -- 20:14:27 - <Info> -- devname [fd: 16] netmap:ix3-3/R ix3 opened
    21/2/2023 -- 20:14:27 - <Info> -- devname [fd: 17] netmap:ix3^3/T ix3^ opened
    21/2/2023 -- 20:14:27 - <Info> -- devname [fd: 18] netmap:ix3-4/R ix3 opened
    21/2/2023 -- 20:14:28 - <Info> -- devname [fd: 19] netmap:ix3^4/T ix3^ opened
    21/2/2023 -- 20:14:28 - <Info> -- devname [fd: 20] netmap:ix3-5/R ix3 opened
    21/2/2023 -- 20:14:28 - <Info> -- devname [fd: 21] netmap:ix3^5/T ix3^ opened
    21/2/2023 -- 20:14:28 - <Info> -- devname [fd: 22] netmap:ix3-6/R ix3 opened
    21/2/2023 -- 20:14:28 - <Info> -- devname [fd: 23] netmap:ix3^6/T ix3^ opened
    21/2/2023 -- 20:14:29 - <Info> -- devname [fd: 24] netmap:ix3-7/R ix3 opened
    21/2/2023 -- 20:14:29 - <Info> -- devname [fd: 25] netmap:ix3^7/T ix3^ opened
    21/2/2023 -- 20:14:29 - <Info> -- Going to use 8 thread(s)
    21/2/2023 -- 20:14:29 - <Info> -- devname [fd: 26] netmap:ix3^0/R@conf:host-rings=8 ix3^ opened
    21/2/2023 -- 20:14:29 - <Info> -- devname [fd: 27] netmap:ix3-0/T@conf:host-rings=8 ix3 opened
    21/2/2023 -- 20:14:30 - <Info> -- devname [fd: 28] netmap:ix3^1/R ix3^ opened
    21/2/2023 -- 20:14:30 - <Info> -- devname [fd: 29] netmap:ix3-1/T ix3 opened
    21/2/2023 -- 20:14:30 - <Info> -- devname [fd: 30] netmap:ix3^2/R ix3^ opened
    21/2/2023 -- 20:14:31 - <Info> -- devname [fd: 31] netmap:ix3-2/T ix3 opened
    21/2/2023 -- 20:14:31 - <Info> -- devname [fd: 32] netmap:ix3^3/R ix3^ opened
    21/2/2023 -- 20:14:31 - <Info> -- devname [fd: 33] netmap:ix3-3/T ix3 opened
    21/2/2023 -- 20:14:31 - <Info> -- devname [fd: 34] netmap:ix3^4/R ix3^ opened
    21/2/2023 -- 20:14:31 - <Info> -- devname [fd: 35] netmap:ix3-4/T ix3 opened
    21/2/2023 -- 20:14:32 - <Info> -- devname [fd: 36] netmap:ix3^5/R ix3^ opened
    21/2/2023 -- 20:14:32 - <Info> -- devname [fd: 37] netmap:ix3-5/T ix3 opened
    21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_POOL_INIT(66)] - alloc error
    21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_POOL_INIT(66)] - pool grow failed
    21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_MEM_ALLOC(1)] - failed to setup/expand stream session pool. Expand stream.memcap?
    21/2/2023 -- 20:14:32 - <Info> -- devname [fd: 38] netmap:ix3^6/R ix3^ opened
    21/2/2023 -- 20:14:32 - <Info> -- devname [fd: 39] netmap:ix3-6/T ix3 opened
    21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_POOL_INIT(66)] - alloc error
    21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_POOL_INIT(66)] - pool grow failed
    21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_MEM_ALLOC(1)] - failed to setup/expand stream session pool. Expand stream.memcap?
    21/2/2023 -- 20:14:33 - <Info> -- devname [fd: 40] netmap:ix3^7/R ix3^ opened
    21/2/2023 -- 20:14:33 - <Info> -- devname [fd: 41] netmap:ix3-7/T ix3 opened
    21/2/2023 -- 20:14:33 - <Error> -- [ERRCODE: SC_ERR_POOL_INIT(66)] - alloc error
    21/2/2023 -- 20:14:33 - <Error> -- [ERRCODE: SC_ERR_POOL_INIT(66)] - pool grow failed
    21/2/2023 -- 20:14:33 - <Error> -- [ERRCODE: SC_ERR_MEM_ALLOC(1)] - failed to setup/expand stream session pool. Expand stream.memcap?
    21/2/2023 -- 20:14:33 - <Error> -- [ERRCODE: SC_ERR_THREAD_INIT(49)] - thread "W#06-ix3^" failed to initialize: flags 0145
    21/2/2023 -- 20:14:33 - <Error> -- [ERRCODE: SC_ERR_FATAL(171)] - Engine initialization failed, aborting...

-

@manilx Also, changing back to autofp (once Suricata no longer starts) and trying to start it on the interface results in it NOT starting.

Will revert to working snapshot! No further testing for the time being.

-

@manilx:

Your problem has a simple fix. Using more threads and a high core-count CPU requires that more TCP stream-memcap memory be allocated. Go to the FLOW/STREAM tab and increase the amount of Stream Memory Cap under Stream Engine Settings. Try 256 MB and continue increasing in 128 MB or 256 MB chunks until Suricata starts successfully.

This line in the log is the problem:

    21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_MEM_ALLOC(1)] - failed to setup/expand stream session pool. Expand stream.memcap?

Likely this is triggered by a change in the 6.0.10 binary upstream. Previously Suricata on pfSense was running the 6.0.8 binary. The default is 128 MB, and that may not be enough with some hardware setups.
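For anyone who wants to check their own suricata.log for the same condition, here is a rough Python sketch. It is not part of the Suricata package. The regular expression is only inferred from the log lines posted in this thread, and the names `find_errors`, `needs_more_stream_memcap`, `memcap_candidates`, and the 1024 MB upper limit are made up for illustration. It pulls out the `<Error>` entries, flags the stream.memcap hint, and lists the Stream Memory Cap values to try, starting at 256 MB and going up in 128 MB steps.

```python
import re

# Pattern inferred from the log lines posted in this thread, e.g.
# 21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_MEM_ALLOC(1)] - message
LOG_LINE = re.compile(
    r"^(?P<date>\d{1,2}/\d{1,2}/\d{4}) -- (?P<time>\d{2}:\d{2}:\d{2}) - "
    r"<(?P<level>\w+)> -- "
    r"(?:\[ERRCODE: (?P<code>\w+)\((?P<num>\d+)\)\] - )?"
    r"(?P<msg>.*)$"
)


def find_errors(lines):
    """Return (error_code, message) pairs for every <Error> log entry."""
    errors = []
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match and match.group("level") == "Error":
            errors.append((match.group("code"), match.group("msg")))
    return errors


def needs_more_stream_memcap(errors):
    """True if any error is the stream session pool allocation failure."""
    return any(
        code == "SC_ERR_MEM_ALLOC" and "stream.memcap" in msg
        for code, msg in errors
    )


def memcap_candidates(start_mb=256, step_mb=128, limit_mb=1024):
    """Stream Memory Cap values (MB) to try in order.

    limit_mb is an arbitrary stopping point chosen for this sketch.
    """
    return list(range(start_mb, limit_mb + 1, step_mb))


# Three lines copied from the failed startup log above.
sample = [
    "21/2/2023 -- 20:14:32 - <Info> -- devname [fd: 37] netmap:ix3-5/T ix3 opened",
    "21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_POOL_INIT(66)] - pool grow failed",
    "21/2/2023 -- 20:14:32 - <Error> -- [ERRCODE: SC_ERR_MEM_ALLOC(1)] - "
    "failed to setup/expand stream session pool. Expand stream.memcap?",
]

errors = find_errors(sample)
if needs_more_stream_memcap(errors):
    print("Try these Stream Memory Cap values (MB):", memcap_candidates())
```

To run it against a real log, read the file into a list of lines and pass that to `find_errors` instead of `sample`. The values it prints still have to be entered by hand on the FLOW/STREAM tab, followed by a restart of Suricata on the interface.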