23.1 using more RAM

-

After some time, and with all packages brought up to date (yesterday), everything is fine again: RAM usage is no longer as high (90%), swap no longer runs out of space, and on top of all that, CPU usage is back to normal too. All in all, the numbers are back to what they were before the update to 23.01.

Never again will I go with soldered-on RAM; the next box will have upgradeable memory slots.

-

Honestly, the cron thing is mostly a perception issue as well: the cron change just keeps a high-disk-activity job from crunching all the data on the disk and thus driving up ARC. Any job with heavy disk activity could do the same over time.

The real "solution" here is for you to tune the value of things like `vfs.zfs.arc_max` to levels you are comfortable with on your setup, so that ARC doesn't grow larger than you think it should. Note the weasel words there: that's primarily not a technical limit, it will just give you a warm fuzzy feeling about not letting your RAM be used for caching to speed things up :-)

I opened https://redmine.pfsense.org/issues/14016 to address the cron changes.

You can install the System Patches package and then create an entry for commit `ff715efce5e6c65b3d49dc2da7e1bdc437ecbf12` to apply the fix. After applying the patch you can either reboot or, if you have the cron package installed, edit and save a job (without making changes) to trigger a rewrite of the cron config.

EDIT: For good measure I went back to a 22.05 ZFS install and ran `periodic daily`, and ZFS ARC+wired usage shot up like mad. So even though the underlying behavior is the same, disabling the cron job should at least stop it from running ARC up every night.

-

@jimp said in 23.1 using more RAM:

> … disabling the cron job should at least get back to not having it run ARC up every night.

Glad you were able to replicate it. I edited the crontab file, commented out those 3 lines, and rebooted. Will report back tomorrow morning! Thank you, Mr. P.!

-


@jimp said in 23.1 using more RAM:

… there as that's primarily not a technical limit it will just give you a warm fuzzy feeling about not letting your RAM be used for caching to speed things up :- …

For a static system this is technically correct. However, in a real-time software system there is a difference between:

- memory that is currently free, and

- memory that could be made available if required.

The difference is essentially time: how long the requesting process has to stall while waiting for the required memory to be allocated to it. Where will this memory come from, swap or reclaimed ZFS buffers? Does the delay reduce throughput, or result in intermittent data loss or crashes?

Where the memory is used for a user-measurable improvement in device performance, the trade-off can be justified. When it only helps after-hours housekeeping, the cost is harder to justify. When it is used for a redundant task run once a day, I'm at a loss to see any way to justify it.

Ideally, ARC would release cache that is very rarely used, but I'm not aware of that option being supported. The closest I have found is to manually set the ARC upper limit to cover the actively used buffers, forcing ARC to prune rarely used buffer data. By default ARC uses 50% of all available RAM to accelerate disk access. For a real-time system such as pfSense, which is not limited by disk access, this is unlikely to be optimal. In the past, pfSense severely restricted the ARC buffer size; however, this appears to have been removed in the latest version. Looks like a regression to me.

-

@jimp said in 23.1 using more RAM:

it just keeps a high disk activity job from crunching all the data on the disk and thus driving up ARC. Any high disk activity job could do it over time.

Hmmm ....

I have used ZFS since 2.4.5-p1.

I noticed a jump in RAM consumption when I switched to pfSense Plus (22.??), my first Plus release.

I have 8GB installed, and am quite sure I was using around 18% when on 2.6.0. When I switched to Plus, it started out at about the same 18%, but over the next days it gradually increased to around 40-43% usage. It stayed there, though, so it didn't look like a leak.

I was not too worried, as it seemed stable in the low 40% range and I still had 4GB free. I have NtopNG active on 20+ interfaces, and it seems to start a process per interface that gobbles up some memory.

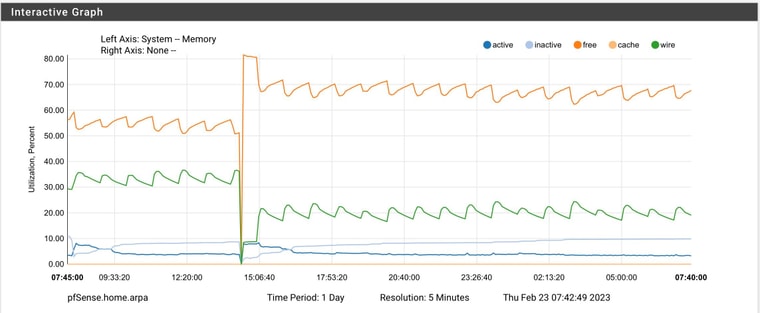

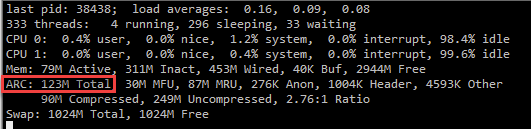

But if NtopNG also logs the collected data to disk, then combined with the "ZFS & disk activity" statement above, that could be the reason for the increased usage.

This is my 23.01 test box (i3 / 8GB RAM) running my "home config", uptime around 2 days. The dashboard shows 39% memory usage.

Home 23.01 - i3/8G RAM - Uptime 2 days

This is my 22.05 summerhouse box (i5 / 8GB RAM) running my "summerhouse config", uptime 173 days. The dashboard shows 46% memory usage.

Summerhouse - 22.05 - i5/8G RAM - Uptime 173 days

Both run NtopNG and 20+ VLANs, with basically the same config.

Both are (sys)logging to a "remote Linux" ...

/Bingo

-


As I mentioned above, if the wired usage is from ZFS ARC, you have the option to tune its usage at any time by setting a tunable for `vfs.zfs.arc_max` to a specific number of bytes (e.g. `268435456` for 256M). On FreeBSD 14 that isn't a loader tunable like it was on older versions; you can change the value live and it is immediately respected.

-

@jimp said in 23.1 using more RAM:

> As I mentioned above, if the wired usage is from ZFS ARC, you have the option to tune its usage at any time …

Mystery solved. Disabling the periodic cron jobs fixed the issue. Thank you for your help, Mr. P!

-

Good to know that disabling the unnecessary cron bits helped, but be aware that any process that heavily hits the disk will trigger similar high ARC usage in the future as it tries to cache data.

If you are worried about the wired usage, I still recommend setting `vfs.zfs.arc_max` to whatever amount of your RAM you feel comfortable with it using. Higher values will result in better overall disk performance, but disk performance isn't usually important for most firewall roles.

-

There is definitely an issue with how memory is consumed/released in 23.01.

I have been using the TFTP package for years to store an image of my Raspberry Pi on my pfSense (at a remote site). Ever since 23.01, wired memory grows by roughly the size of the files I transfer to pfSense, and apparently it is not released again (even if I stop and restart the TFTP service).

So a 6 GB TFTP transfer is all it takes to bring my 6100 to 100% memory consumption (2 GB is the normal level). Stopping services does not release it; only a restart does.

I haven't tried (and would rather not try) deleting my 22.05 boot environment to see whether it is a ZFS issue that occurs when an older clone (from the upgrade) of the running "default" environment exists.

edit: I also cannot find a process, or get top to show, where the wired memory is used. Everything in top only adds up to about 1.6 GB of the 2 GB normally used on my system.

-

@jimp I tried creating a system tunable called vfs.zfs.arc_max and setting it to 256 MB. It has no effect on the 100% wired memory usage (at least not without a reboot).

Deleting the file transferred via TFTP has no effect either (in case it was somehow locked and kept in cache because of that).

-

@keyser Please start your own thread as that is probably a different issue.

-

@jimp In the past, users did not need to explicitly control the maximum ARC memory usage, as the pfSense default was appropriate. The default is no longer appropriate in the current software version. It is true users could compensate for the degraded pfSense behaviour, but surely a better solution is to correct the regression.

-

@patch said in 23.1 using more RAM:

@jimp In the past, users did not need to explicitly control the maximum ARC memory usage, as the pfSense default was appropriate. The default is no longer appropriate in the current software version. It is true users could compensate for the degraded pfSense behaviour, but surely a better solution is to correct the regression.

That is demonstrably untrue. When I ran the same `periodic daily` script on 22.05 that triggered high usage on 23.01, the ARC usage went very high on 22.05, too.

Some things did change in FreeBSD, but nothing directly related to ARC usage as far as I've been able to determine thus far.

But the past doesn't really matter here, you were always supposed to tune the ZFS ARC size if it matters, it's been in ZFS tuning guides for FreeBSD for many years. That you didn't notice it before was more luck than anything.

-

@jimp Since the ARC cache is wired and thus reported as USED and not pageable memory, why does it not figure in any of the memory reporting commands I tried? (top -HaSP, or ps -auxwww)

How do I see how much wired memory the ZFS ARC cache is currently occupying?

-

@keyser said in 23.1 using more RAM:

@jimp Since the ARC cache is wired and thus reported as USED and not pageable memory, why does it not figure in any of the memory reporting commands I tried? (top -HaSP, or ps -auxwww)

How do I see how much wired memory the ZFS ARC cache is currently occupying?

It's listed in `top` on its own rows below the other memory usage. It's included in the wired total as well.
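If you want to pull those ARC figures out of `top` in a script, a minimal Python sketch could parse one labelled summary row into sizes. It assumes the row format shown in the `top` output quoted later in this thread (for example `ARC: 234M Total, 104M MFU, ...`, sizes with K/M/G/T suffixes); the function name `parse_top_row` is hypothetical, not part of pfSense or FreeBSD.

```python
import re
from typing import Dict

# One "<number><unit> <label>" entry, e.g. "234M Total" or "33K Anon".
_ENTRY = re.compile(r"(\d+(?:\.\d+)?)([KMGT])\s+(\w+)")
# Scale factors from top's size suffixes to MiB.
_TO_MIB = {"K": 1 / 1024, "M": 1.0, "G": 1024.0, "T": 1024.0 ** 2}


def parse_top_row(row: str) -> Dict[str, float]:
    """Parse a labelled top(1) summary row such as
    'ARC: 234M Total, 104M MFU, 124M MRU, 33K Anon, ...'
    into {label: size_in_MiB}.

    Everything up to the first ':' is treated as the row label and
    discarded; entries without a K/M/G/T suffix are ignored."""
    _, _, body = row.partition(":")
    return {label: float(num) * _TO_MIB[unit]
            for num, unit, label in _ENTRY.findall(body)}


if __name__ == "__main__":
    arc = parse_top_row("ARC: 234M Total, 104M MFU, 124M MRU, 33K Anon, "
                        "1114K Header, 5272K Other")
    print(arc["Total"])  # 234.0
```

The same function works on the `Mem:` row (Active, Inact, Wired, Free), which makes it easy to compare the ARC total against the wired total.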

If you want to check the current size directly, you can run `sysctl kstat.zfs.misc.arcstats.size`. There is already an open redmine issue to have that put into graphs and so on.
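For monitoring scripts, a small Python sketch can read that same sysctl and turn it into a number. It assumes it runs on the firewall itself, where the `sysctl` binary and the `kstat.zfs.misc.arcstats.size` OID exist; `sysctl -n` prints only the value. The helper names are hypothetical.

```python
import subprocess


def parse_arc_size(sysctl_output: str) -> int:
    """Return the ARC size in bytes from sysctl output.

    Handles both 'kstat.zfs.misc.arcstats.size: 245366784' (plain sysctl)
    and '245366784' (sysctl -n, which prints only the value)."""
    return int(sysctl_output.strip().rsplit(":", 1)[-1])


def read_arc_size() -> int:
    """Run sysctl on the local FreeBSD/pfSense host and parse the result.

    Raises subprocess.CalledProcessError if the OID is not available."""
    result = subprocess.run(
        ["sysctl", "-n", "kstat.zfs.misc.arcstats.size"],
        capture_output=True, text=True, check=True,
    )
    return parse_arc_size(result.stdout)
```

On the firewall, `read_arc_size() / 1024 ** 2` gives the current ARC size in MiB, the same figure `top` shows as the ARC total.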

-

@jimp EXCELLENT info... I didn't notice that.

-

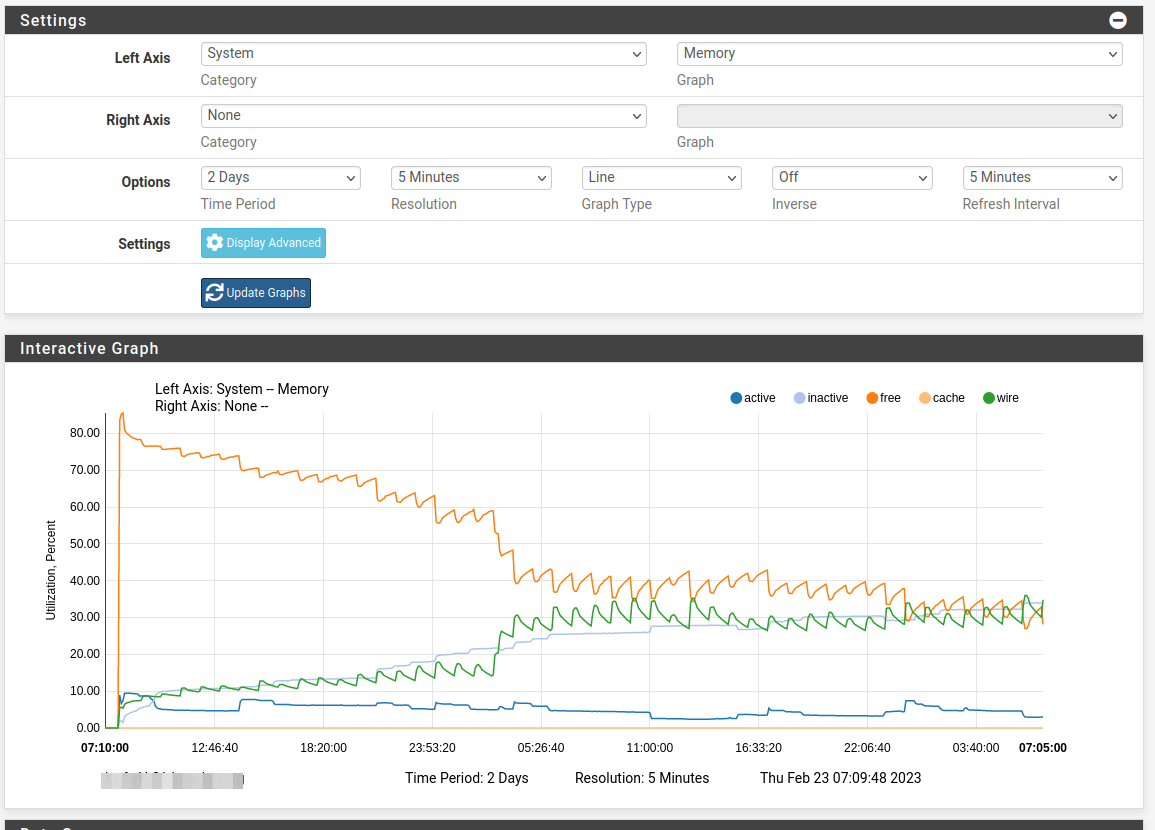

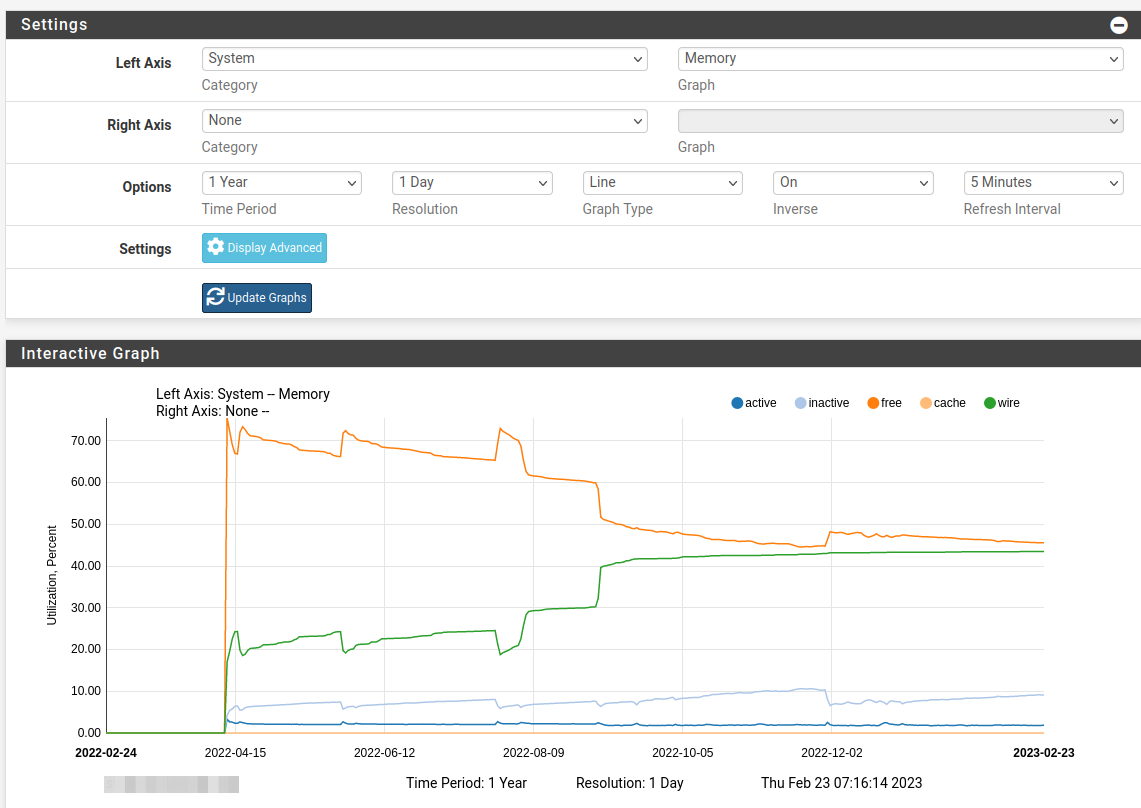

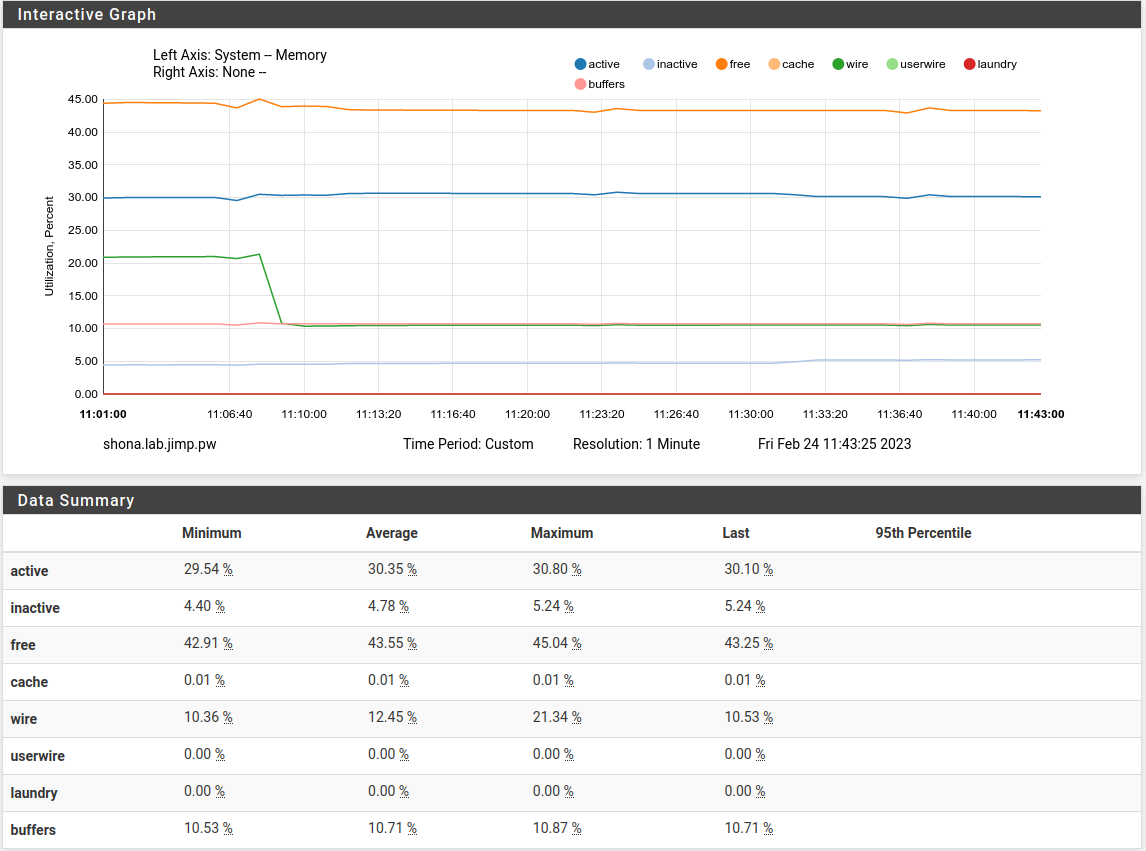

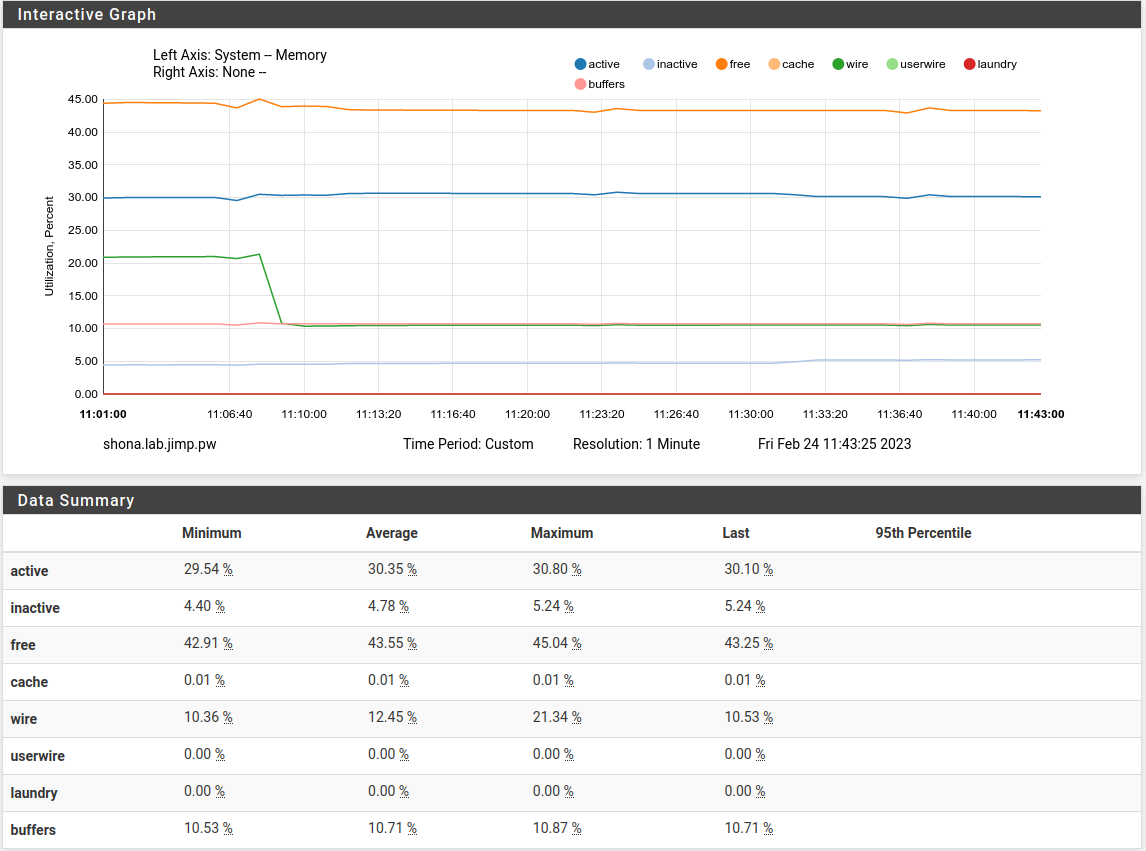

For those interested in better memory graph reporting, try the patch I posted at https://redmine.pfsense.org/issues/14011#note-3. It's not committed yet since I need to do more testing and review on its behavior, but it's in good enough shape to try.

Apply the patch and reboot and it will upgrade the memory RRD so it has a better break-down and isn't missing data.

Don't mind that little blip at the start, that is when I was still refining the calculations.

On ZFS, the ARC usage will be graphed under "cache" as is the UFS dirhash. "buffers" is UFS buffers. So on ZFS systems you'll see more cache and on UFS systems you'll see a larger value of buffers and maybe some cache. Depends on the FS activity.

And since cache and buffers are a part of wired, the wired amount is reduced by the size of cache and buffers so it isn't reported double.
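The de-duplication described above is simple arithmetic. The following Python sketch illustrates it; it is not the patch's actual code, and the class name and sample numbers are hypothetical (752 wired / 234 ARC are borrowed, for illustration only, from the `top` output quoted later in this thread).

```python
from dataclasses import dataclass


@dataclass
class MemorySample:
    """One memory sample; all values in the same unit (e.g. MiB)."""
    wired: int    # total wired memory as reported by the kernel
    cache: int    # ZFS ARC plus UFS dirhash (graphed as "cache")
    buffers: int  # UFS buffers (graphed as "buffers")

    def graphed_wired(self) -> int:
        """Wired memory left after subtracting cache and buffers, which are
        part of wired but graphed separately, so nothing is counted twice."""
        return self.wired - self.cache - self.buffers

    def stacked_total(self) -> int:
        """Sum of the three graphed series; equals the original wired value."""
        return self.graphed_wired() + self.cache + self.buffers
```

For example, `MemorySample(wired=752, cache=234, buffers=0).graphed_wired()` leaves 518 in the "wired" series, while the stacked graph still adds back up to 752.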

-

@jimp said in 23.1 using more RAM:

> For those interested in better memory graph reporting, the patch I posted on https://redmine.pfsense.org/issues/14011#note-3. …

Does the patch do anything else besides rem'ing out those 3 periodic statements from the crontab file? If not, then I'm good to go. Thanks!

-

@jimp said in 23.1 using more RAM:

Some things did change in FreeBSD but nothing directly related to ARC usage as far as I've been able to determine thus far.

But the past doesn't really matter here, you were always supposed to tune the ZFS ARC size if it matters, it's been in ZFS tuning guides for FreeBSD for many years. That you didn't notice it before was more luck than anything.

Interesting.

On my system I have:

- pfSense with a ZFS file system, which has never used excessive RAM.

- A Proxmox hypervisor with a ZFS file system, which gradually builds up to 50% of available RAM (the default for Linux) over 24 hours. I curbed this inefficient RAM usage by setting "options zfs zfs_arc_max=2147483648" in /etc/modprobe.d/zfs.conf.

- The pfSense VM does about 6x more disk writes than all other VMs combined.

Looking at the ARC memory usage on pfSense, ARC uses only 234MB of the 5GB of VM memory:

`Mem: 81M Active, 281M Inact, 752M Wired, 3782M Free`

`ARC: 234M Total, 104M MFU, 124M MRU, 33K Anon, 1114K Header, 5272K Other`

`94M Compressed, 264M Uncompressed, 2.82:1 Ratio`

`Swap: 1024M Total, 1024M Free`

Looking more closely at the limits set for ARC:

`vfs.zfs.arc_min: 507601920` (0.473 GB)

`vfs.zfs.arc_max: 4060815360` (3.782 GB)

So @jimp, you are correct: FreeBSD 12.3-STABLE does not progressively consume all of the available ARC, but Linux 5.15.85 does when writing similar data.

Looks like I should set vfs.zfs.arc_max to about 512MB.
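Because `vfs.zfs.arc_max` takes a plain byte count, a quick Python sketch helps convert both ways. It shows that the "GB" figures above are binary units (bytes divided by 1024³) and computes the byte value for a 512 MiB ceiling; the same helper gives the `268435456` quoted earlier in the thread for 256M. The helper names are hypothetical, and which tunable name to use depends on the FreeBSD version.

```python
# Binary units: 1 MiB = 1024**2 bytes, 1 GiB = 1024**3 bytes.
MIB = 1024 ** 2
GIB = 1024 ** 3


def bytes_to_gib(num_bytes: int) -> float:
    """Convert a raw byte count (as sysctl reports it) to GiB."""
    return num_bytes / GIB


def mib_to_bytes(mib: int) -> int:
    """Convert a size in MiB to the byte count the ARC tunables expect."""
    return mib * MIB


# Limits quoted in the post above.
for name, value in [("vfs.zfs.arc_min", 507601920),
                    ("vfs.zfs.arc_max", 4060815360)]:
    print(f"{name}: {value} ({bytes_to_gib(value):.3f} GiB)")
# vfs.zfs.arc_min: 507601920 (0.473 GiB)
# vfs.zfs.arc_max: 4060815360 (3.782 GiB)

print(bytes_to_gib(2147483648))  # 2.0: the Linux zfs_arc_max above is 2 GiB
print(mib_to_bytes(512))         # 536870912: a 512 MiB ARC ceiling in bytes
```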

This is likely to be the case for most pfSense users running the now-default ZFS file system. Perhaps it should be added to the predefined System Tunables, or near the RAM disk settings in System -> Advanced -> Miscellaneous.

-

@patch said in 23.1 using more RAM:

should set vfs.zfs.arc_max

Per https://docs.freebsd.org/en/books/handbook/zfs/#zfs-advanced it's `vfs.zfs.arc.max` (with a dot) starting with 13.x (`vfs.zfs.arc_max` for 12.x), and likewise `.min` rather than `_min`.
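To pick the right spelling in a script, a minimal Python sketch can follow that Handbook rule: dotted names from 13.x, underscore names on 12.x. It assumes you already know the FreeBSD major version underneath your pfSense release (this thread mentions FreeBSD 12.3-STABLE for the older install and FreeBSD 14 for 23.01). The function name is hypothetical, and releases older than 12 are not covered.

```python
def arc_tunable(freebsd_major: int, which: str = "max") -> str:
    """Return the ARC size tunable name for a FreeBSD major version.

    Follows the FreeBSD Handbook wording: vfs.zfs.arc.max / vfs.zfs.arc.min
    starting with 13.x, vfs.zfs.arc_max / vfs.zfs.arc_min on 12.x."""
    if which not in ("max", "min"):
        raise ValueError("which must be 'max' or 'min'")
    sep = "." if freebsd_major >= 13 else "_"
    return f"vfs.zfs.arc{sep}{which}"


print(arc_tunable(12))         # vfs.zfs.arc_max
print(arc_tunable(14))         # vfs.zfs.arc.max
print(arc_tunable(13, "min"))  # vfs.zfs.arc.min
```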