Major DNS Bug 23.01 with Quad9 on SSL

-

@moonknight

Yep, that is the one. I have mine set at 2400 for reasons I read in a technical paper that I have long since forgotten.

-

Thank you very much @robbiett :)

-

@robbiett said in Major DNS Bug 23.01 with Quad9 on SSL:

for reasons I read in a technical paper that I have long since forgotten

The hallmark of so many IT decisions carried forward decades into the future.

-

@steveits said in Major DNS Bug 23.01 with Quad9 on SSL:

The hallmark of so many IT decisions carried forward decades into the future.

Yeah, I resemble those remarks - especially as I am so many decades in. Started with a BBC Micro only to see ARM come around again. Next came the Apple IIe and off I went down that path, only to find myself down the line with BSD again.

I have forgotten so much along the way!

-

I've been playing with these two settings for a few months now.

"Minimum TTL for RRsets and Messages"

"Serve Expired"

They have no effect on this issue.

There may, however, be a relationship with the use of IPv6. I previously said that I had no more problems on 23.05, but at that point I had not checked that IPv6 was working for me. Because of another bug it was not, and maybe that is why there was no issue. The other day I restored IPv6, and without the ASLR workaround the same issues began as before. Now testing with ASLR disabled...

-

@w0w said in Major DNS Bug 23.01 with Quad9 on SSL:

"Minimum TTL for RRsets and Messages"

"Serve Expired"

They have no effect on this issue.

I don't think any of us were suggesting that. Of course, if you can get an answer from the DNS cache it does dodge the issue for that particular query, and those 2 settings do improve cache performance and make a hit more likely.
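As a rough illustration of why those two settings make a cache hit more likely, here is a toy model in Python. It is not how unbound implements its cache: the class `ToyCache` and its parameters `min_ttl` and `serve_expired` are made-up stand-ins for the two GUI options, and the 2400 figure is borrowed from earlier in the thread purely as an example value.

```python
class ToyCache:
    """Toy DNS cache: a TTL floor plus optional serving of expired entries."""

    def __init__(self, min_ttl=0, serve_expired=False):
        self.min_ttl = min_ttl              # hypothetical stand-in for "Minimum TTL"
        self.serve_expired = serve_expired  # hypothetical stand-in for "Serve Expired"
        self.entries = {}                   # name -> (answer, expiry time in seconds)

    def store(self, name, answer, ttl, now):
        # Raise short TTLs to the configured floor before caching.
        self.entries[name] = (answer, now + max(ttl, self.min_ttl))

    def lookup(self, name, now):
        """Return (answer, status); status is 'hit', 'stale' or 'miss'."""
        if name not in self.entries:
            return None, "miss"
        answer, expires = self.entries[name]
        if now < expires:
            return answer, "hit"
        if self.serve_expired:
            # Answer from the stale entry; a real resolver would also refresh it.
            return answer, "stale"
        return None, "miss"


plain = ToyCache()
tuned = ToyCache(min_ttl=2400, serve_expired=True)
for cache in (plain, tuned):
    cache.store("example.com", "192.0.2.1", ttl=60, now=0)

print(plain.lookup("example.com", now=300))   # the record's own 60 s TTL has passed
print(tuned.lookup("example.com", now=300))   # still inside the 2400 s floor
print(tuned.lookup("example.com", now=3000))  # past the floor, answered from stale data
```

In this model the tuned cache answers locally in all three cases where the plain cache would have to go upstream, which is the "dodge" described above. It does nothing about the upstream problem itself.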

Regarding your thoughts on IPv6, that could indeed be part of the issue but unproven. I run IPv6 and most of my traffic tends to use it. I have not tried disabling it as part of the DNS diagnostics but happy to do so if NetGate thinks there is merit in it.

-

@robbiett said in Major DNS Bug 23.01 with Quad9 on SSL:

Regarding your thoughts on IPv6, that could indeed be part of the issue but unproven. I run IPv6 and most of my traffic tends to use it. I have not tried disabling it as part of the DNS diagnostics but happy to do so if NetGate thinks there is merit in it.

Some thoughts on my side.

If an ISP delivered broken IPv4, it wouldn't exist.

If their IPv4 works fine but they offer broken IPv6, then things become complicated.

Best choice: forget about pfSense, use the ISP router.

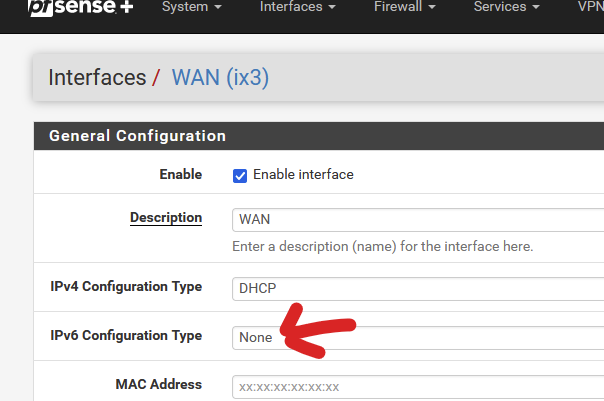

IPv6 might be OK then, but "with Quad9 on SSL" will be a no-no. Exception: set it up manually on every LAN device.

Or use pfSense, and disable IPv6 on the WAN side.

It's as easy as :

Your LAN devices will still use IPv6 among them, that's ok.

Btw: when this thread started, I disabled my perfectly working unbound resolver mode and activated "Quad9 on SSL using IPv6 and IPv4".

I applied the ASLR trick (see above).

Up until today (3 weeks now?), I have not seen any issue whatsoever.

I've about 50 LAN devices connected, and a bunch of hotel clients on the captive portal.

No one ever yelled: "Internet doesn't work".

-

@gertjan said in Major DNS Bug 23.01 with Quad9 on SSL:

Some thoughts on my side.

If an ISP delivered broken IPv4, it wouldn't exist.

If their IPv4 works fine but they offer broken IPv6, then things become complicated.

Best choice: forget about pfSense, use the ISP router.

IPv6 might be OK then, but "with Quad9 on SSL" will be a no-no. Exception: set it up manually on every LAN device.

Or use pfSense, and disable IPv6 on the WAN side.

I would have a few issues with that:

- My ISP does not provide a router; even if they did it would not have the CPU power, interfaces and features that I, along with many others, require

- Forgetting about pfSense would be an odd thing to do with a NetGate router

- I have no issue with IPv6 and it is provided universally to UK broadband users - I am also dependent on it

- No link to IPv6 has been proven and NetGate has not offered it as a concern or potential cause of the forwarded DNS-over-TLS issues

-

@robbiett said in Major DNS Bug 23.01 with Quad9 on SSL:

My ISP does not provide a router;

That's actually a good thing: they don't need to support one either. They only have to concentrate on implementing the IPv4 RFCs, and likewise the IPv6 RFCs, and you'll be a happy user.

@robbiett said in Major DNS Bug 23.01 with Quad9 on SSL:

Forgetting about pfSense would be an odd thing to do with a NetGate router

Hmm. Didn't think about that one,

for you

Anyway: I can't remove my ISP router, as nothing else exists to terminate their fiber cable. This means that the ISP router not only has to hand out GUAs to its LAN devices (pfSense being just another LAN device), it also has to delegate entire /64 prefixes to pfSense, and that is already much more of an issue these days.

@robbiett said in Major DNS Bug 23.01 with Quad9 on SSL:

I have no issue with IPv6 and ....

You, maybe not.

Nor your ISP, maybe.

But for IPv6 to work, the entire IPv6 peering needs to work as well.

@robbiett said in Major DNS Bug 23.01 with Quad9 on SSL:

No link to IPv6 ....

You're right.

I'm probably off topic.

I brought it up because I have been using the same unbound (pfSense 23.01) on a Netgate device (4100) with the same "forward" settings to Quad9 over TLS for 3 weeks, so why can't I see any issue while others do?

-

@robbiett said in Major DNS Bug 23.01 with Quad9 on SSL:

I don't think any of us were suggesting that.

I'm not suggesting that either.

Seriously, I didn't notice any significant difference. The random DNS errors have not gone away, and neither have the lags where a response arrives after a significant delay. In general, I'm just saying that without the main issue I would never have noticed anything, and there would have been no need to tune any of these settings if everything worked the same as in previous versions.

@gertjan said in Major DNS Bug 23.01 with Quad9 on SSL:

Up until today (3 weeks now?), I have not seen any issue whatsoever.

I've about 50 LAN devices connected, and a bunch of hotel clients on the captive portal.

@gertjan said in Major DNS Bug 23.01 with Quad9 on SSL:

for 3 weeks, why can't I see any issue ? While others do.

Maybe because it's not a "working/not working" question. When a page fails to open once, or pictures don't load or don't load right away... Well, if I were a client of the network, it's unlikely that I would run to complain to someone about something not working. 99.9% of everything works, but the feeling that something is wrong remains when a picture on a site opens with a delay, as if it were being inspected by the special services of every country in the world.

What is more, the more clients there are, the less likely the issue is to appear, because they constantly refresh the cache. Purely theoretically, that is possible if everyone visits the same resources.

-

@w0w My 2 cents: something was certainly wrong when a link to NYTimes returned "URL failed to load" because the server didn't respond. And not just that site but many sites. And the DNS log was full of 'servfail' entries. And disabling pfBlocker did not change the problem.

Whatever was wrong is now 'fixed' for me after applying McDonald's change to the unbound config. As an aside, I do not have IPv6 enabled on the WAN interface.

-

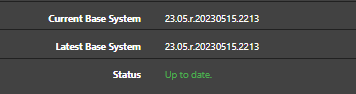

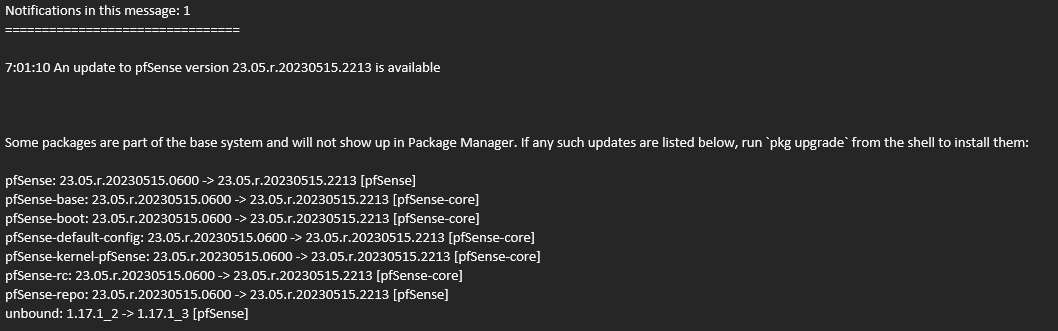

We had an opportunity to squeeze in some additional fixes before we cut the next RC (and hopefully RELEASE) build. The next build of 23.05 will include a fix for this.

We will continue to monitor upstream developments and adjust accordingly.

-

@cmcdonald said in Major DNS Bug 23.01 with Quad9 on SSL:

The next build of 23.05 will include a fix for this.

Very fine work, very fine work indeed.

-

@robbiett Updated to the latest release a few minutes ago. Not listening to the suggestions to disable IPv6 as I have been running IPv6 for a few years now without major issue until recently. DNS over TLS has been a major benefit as our ISP redirects port 53 DNS to their own servers and redirects mistyped domains or NXDOMAINS to their ad pages. This isn't cool in any way shape or form.

So here are the results that I am seeing:

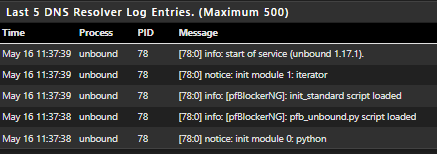

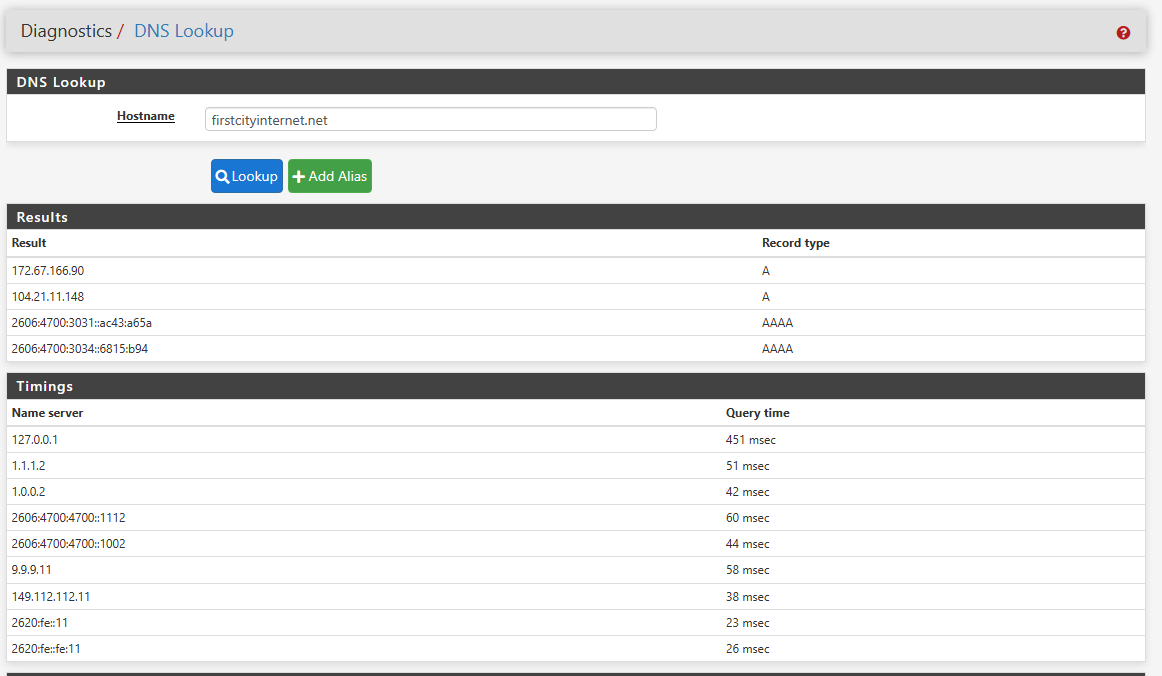

First a domain that hasn't been queried since Unbound restarted:

Notice the 127.0.0.1 takes 451 ms to complete a TLS handshake and do the upstream lookup. This is expected as DoT requires the creation of a verified TLS connection then the DNS lookup. If you add up ping time and essentially triple that, you should be at about the right time.

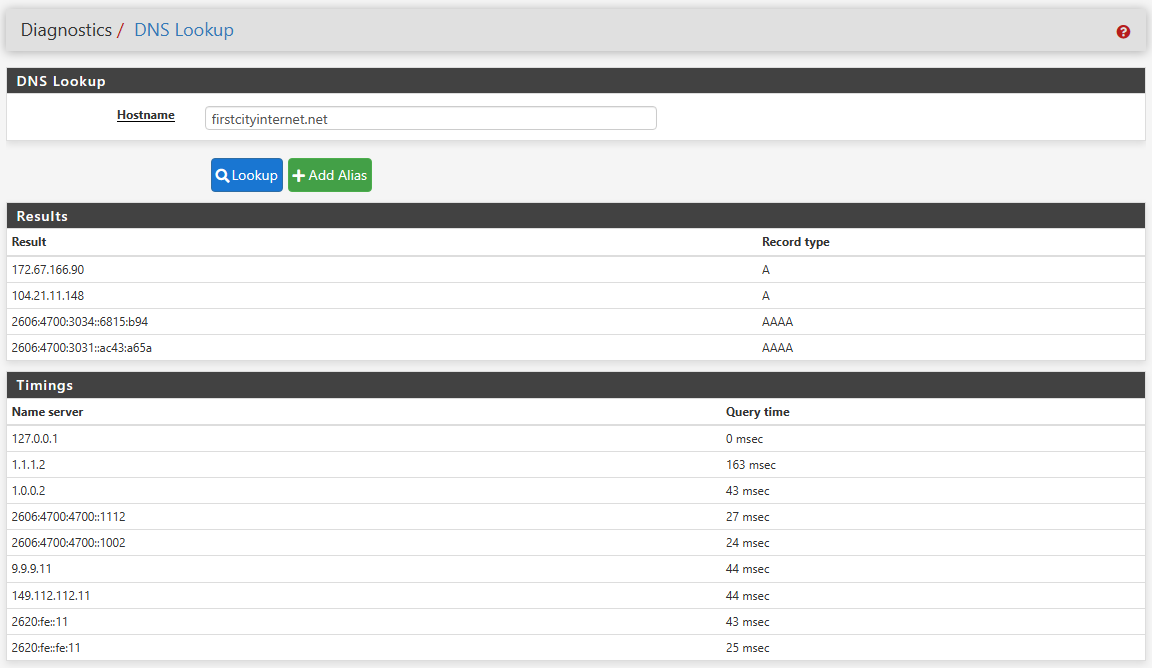

Here is the same query run a few seconds later:

Unbound is answering from its cache as you can see by the 0 ms time on the 127.0.0.1 IP.

Unbound is configured to do preemptive lookups for the most queried data in its cache so once Unbound is up and running, it should answer the most active DNS queries in 0 ms. So far, DNSSEC is working as is pfBlocker.

I still get a few of these errors:

debug: outnettcp got tcp error -1

But so far, the issues I was experiencing earlier have stopped.

Thanks for all the hard work on this!

-

@cmcdonald

So there is no need to disable ASLR manually anymore? Is it now disabled by an option at compile time?

-

Run elfctl with the full path of the ELF binary on the command line, without any other parameters.

See the example here: https://forum.netgate.com/topic/178413/major-dns-bug-23-01-with-quad9-on-ssl/139?_=1684213270230

-


File '/usr/local/sbin/unbound' features:
noaslr 'Disable ASLR' is set.
noprotmax 'Disable implicit PROT_MAX' is unset.
nostackgap 'Disable stack gap' is unset.
wxneeded 'Requires W+X mappings' is unset.
la48 'amd64: Limit user VA to 48bit' is unset.

Yep.
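For anyone who wants to check this in a script rather than by eye, here is a minimal Python sketch that parses feature lines of the shape shown above. The sample text is copied from this post. The function name `parse_features` and the regular expression are my own and assume the output keeps this exact wording.

```python
import re

# Sample text copied from the elfctl output quoted in this thread.
SAMPLE = """File '/usr/local/sbin/unbound' features:
noaslr 'Disable ASLR' is set.
noprotmax 'Disable implicit PROT_MAX' is unset.
nostackgap 'Disable stack gap' is unset.
wxneeded 'Requires W+X mappings' is unset.
la48 'amd64: Limit user VA to 48bit' is unset.
"""

# Assumed line shape: <name> '<description>' is set|unset.
FEATURE_LINE = re.compile(r"^(\w+)\s+'[^']*' is (set|unset)\.$")


def parse_features(text):
    """Map each feature name to True (set) or False (unset)."""
    features = {}
    for line in text.splitlines():
        match = FEATURE_LINE.match(line.strip())
        if match:
            features[match.group(1)] = match.group(2) == "set"
    return features


features = parse_features(SAMPLE)
print("ASLR disabled for unbound:", features.get("noaslr", False))
```

The header line is skipped because it does not match the pattern, so only the five feature flags end up in the dictionary.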

Didn't think of it myself, thanks.

-

@n0m0fud said in Major DNS Bug 23.01 with Quad9 on SSL:

@robbiett

Updated to the latest release a few minutes ago.

Notice the 127.0.0.1 takes 451 ms to complete a TLS handshake and do the upstream lookup. This is expected as DoT requires the creation of a verified TLS connection then the DNS lookup. If you add up ping time and essentially triple that, you should be at about the right time.

Thanks @N0m0fud, very helpful and always good to see real data.

I still find the timings odd though, which is also reflected in your examples. In your first screenshot we can see that your fastest return from Quad9 is:

2620:fe::11 = 23ms

If everything was working within the broad brush of a sys admin we would expect a DNS-over-TLS time delta akin to 3 times this value but we probably wouldn't raise a real-world eyebrow at something around a x4 increase:

2620:fe::11 @ 23ms x 4 = 92ms

The actual time to resolve the query in your example is:

127.0.0.1 = 451ms

451ms / 23ms = ~20 times slower than a vanilla '53' query, or

451ms / 92ms = ~5 times slower than expected

I'll dip my toe into what should be going on during the TCP/TLS handshakes only as far as to state that the worst-case deltas should only be experienced on the first query to the upstream DNS server (Quad9 in this case). After that, keeping the connection open and reusing it for multiple queries, helped by TCP Fast Open and TLS session resumption when a reconnect is needed, should reduce this establishment overhead (see RFC 7858). It is one of the factors going around in my head as I ponder why DoT is so slow on pfSense.
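The arithmetic behind the "~20 times" and "~5 times" figures can be reproduced with a few lines of Python. The two input values come from the screenshots discussed above. The variable names are mine, and the x3 and x4 multipliers are just the rules of thumb used in this thread, not a protocol guarantee.

```python
plain_dns_ms = 23      # fastest plain port-53 reply in the screenshot (2620:fe::11)
observed_dot_ms = 451  # time reported for 127.0.0.1 on the uncached lookup

expected_dot_ms = plain_dns_ms * 3   # rule of thumb: roughly three round trips
tolerable_dot_ms = plain_dns_ms * 4  # generous upper bound used in this post

print(f"expected ~{expected_dot_ms} ms, tolerable ~{tolerable_dot_ms} ms")
print(f"observed vs plain DNS: x{observed_dot_ms / plain_dns_ms:.1f}")
print(f"observed vs tolerable: x{observed_dot_ms / tolerable_dot_ms:.1f}")
```

The two ratios come out at about 19.6 and 4.9, which is where the rounded figures come from.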

Another thing I ponder is how the pfSense resolver/forwarder is handling multiple upstream DNS name servers. On more lightweight DNS applications (eg dnsmasq) we are used to explicitly setting how multiple servers are used, how they are preferred, use of concurrency and (typically) preferring the fastest response rather than waiting on all the responses. Looking at the data in front of me it is not clear if the pfSense resolver (unbound) is faithfully utilising the fastest response.

I have only been running with the ASLR unset for a few days, so too early for meaningful data; but subjectively it seems much better when it comes to the painful DNS-induced 'hangs' or parts of a webpage failing to load. I am less convinced that the timings & responsiveness of DoT are working as expected.

An interesting topic, at least to me!

-

@cmcdonald

@stephenw10

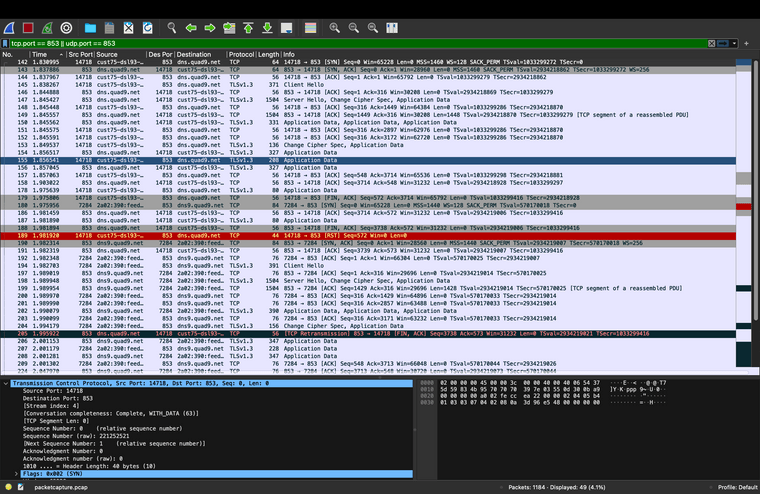

In an effort to explain why DoT is so slow on pfSense I have run multiple pcaps to try and understand how the resolver is handling forwarded queries to the servers set in 'General Setup'. The findings are illuminating and I now understand why slow queries are selected, compounded and compounded again by TLS to the point of failure, whilst ignoring faster name servers.

On this simple successful test I am using 4 name servers from dns.quad9.net. Two are ipv4 servers and 2 more are on ipv6:

- 9.9.9.9

- 149.112.112.112

- 2620:fe::fe

- 2620:fe::9

From these servers a typical fast response is 7ms but can be as high as 12ms. Clearly if there is a problem with a name server the response can be much slower, up to 300ms or more.

In this single-lookup example I used kia.com (as something unlikely to be used and therefore cached). The sequence:

- pfSense sends a single query to just 1 ipv4 server - 149.112.112.112

  - All other servers ignored

  - Answered to unbound in 151ms

- pfSense sends a single query to just 1 ipv6 server - 2620:fe::9

  - All other servers ignored

  - Answered to unbound in 297ms

- DNS answered to client in 448ms

  - This is the sum of the 2 queries, 151 + 297ms, as they are asked and answered sequentially

  - The ipv6 query does not start until the ipv4 query is fully answered
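The cost of running the two upstream queries back to back can be put into numbers with a short Python sketch. The two timings are the ones from the capture above. The "concurrent" figure is purely hypothetical, showing what the client might have waited if both queries had started together, which is not what the capture shows.

```python
ipv4_ms = 151  # answer from 149.112.112.112 in the capture
ipv6_ms = 297  # answer from 2620:fe::9 in the capture

sequential_ms = ipv4_ms + ipv6_ms      # second query waits for the first to finish
concurrent_ms = max(ipv4_ms, ipv6_ms)  # hypothetical: both queries start together

print(sequential_ms)  # 448, matching the time seen by the client
print(concurrent_ms)  # 297
```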

The forwarded query does not go to all servers, one is simply picked at random. It does not matter how fast or slow a server is; as long as it is deemed valid and returning an answer in under 400ms it can be picked. If a server normally capable of returning an answer in 7ms is struggling, but still under 400ms, it will continue to be used. Multiples of this added latency will then pollute the back-and-forth of the DoT TCP and TLS handshakes, leading to a considerable delay or potentially a failure.
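To make the difference between "pick any acceptable server at random" and "prefer the fastest" concrete, here is a small Python sketch. It follows the simplified wording of this post, with a flat 400ms cut-off. It is not unbound's actual code, and the linked NLnet Labs page remains the authoritative description. The function names and the RTT table are made up for illustration, using the timings mentioned in this post.

```python
import random

RTT_LIMIT_MS = 400  # threshold as described in this post (an assumption)


def eligible(servers_rtt_ms, limit_ms=RTT_LIMIT_MS):
    """Servers whose measured RTT is under the limit, in sorted order."""
    return sorted(s for s, rtt in servers_rtt_ms.items() if rtt < limit_ms)


def pick_random(servers_rtt_ms, rng=random):
    """Behaviour described in the post: any eligible server, chosen at random."""
    return rng.choice(eligible(servers_rtt_ms))


def pick_fastest(servers_rtt_ms):
    """Alternative wished for in the post: always the lowest RTT."""
    return min(servers_rtt_ms, key=servers_rtt_ms.get)


# Hypothetical RTT table: two healthy servers and two struggling ones.
rtts = {
    "9.9.9.9": 7,
    "149.112.112.112": 151,
    "2620:fe::fe": 12,
    "2620:fe::9": 297,
}

print("eligible:", eligible(rtts))
print("random pick:", pick_random(rtts))
print("fastest pick:", pick_fastest(rtts))
```

With this table all four servers are eligible, so in this model the random pick lands on one of the two slow servers half of the time, while the fastest pick always returns 9.9.9.9.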

I have no answer as to why the attempt at using an ipv6 server only starts once the ipv4 DoT sequence is completed. Hopefully someone with more unbound insight can answer this element?

For those of us with upstream servers normally operating in the 7 to 12ms range the acceptance of up to 400ms seems ridiculous. The random choice of server used does little for the client but clearly eases the load at the upstream provider. Not having an option to ask all servers and utilise the fastest compounds matters further. Only starting an ipv6 query once ipv4 has completed is another unhealthy delay. Added all together along with the additional handshakes of TCP/TLS we are left with a slow and potentially unreliable DoT capability.

The example pcap snapshot, for those that like data:

Ref:

https://nlnetlabs.nl/documentation/unbound/info-timeout/

[As an aside, for Quad9 users only, the ipv6 response fqdn is shown as dns9.quad9.net, rather than dns.quad9.net as shown on the Quad9 help pages.]