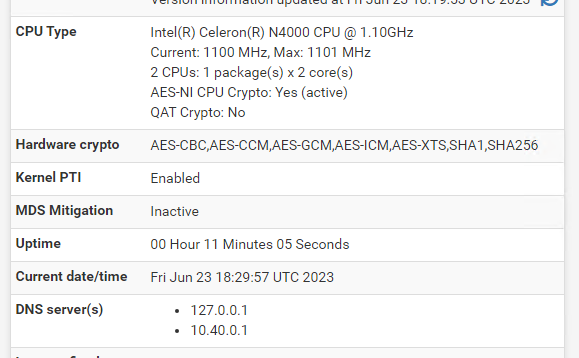

Slow performance on new N4000 i226V pfsense 2.7.0-Beta

-

Problem with a new install on i226-V NICs.

Fresh install of both.

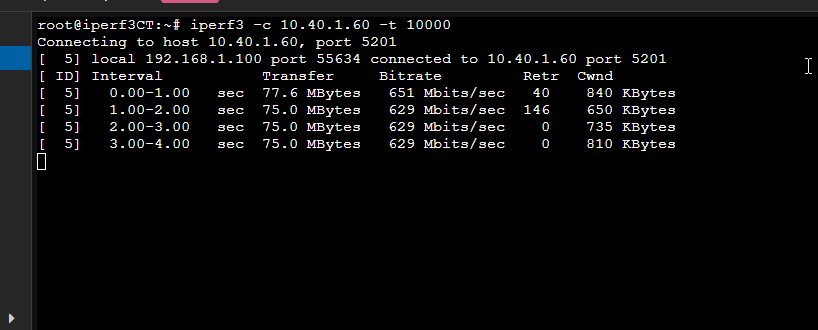

I get around 75Mb per sec in ipsec3.

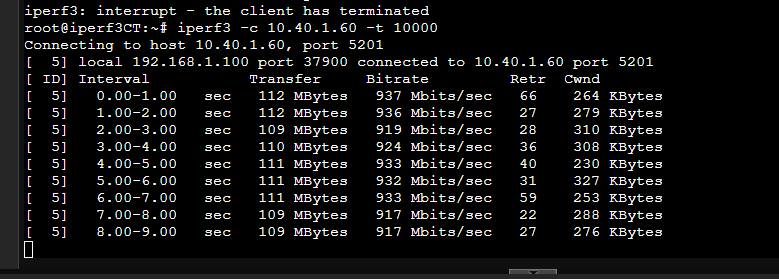

When I install the latest OPNsense, I get the full 106 Mb/s (full line speed) on the same hardware. What am I missing?

CPU usage is high in pfSense but low in OPNsense.

-

@waveworm said in Slow performance on new N4000 i226V pfsense 2.7.0-Beta:

I get around 75Mb per sec in ipsec3.

Do you mean over an IPsec VPN or using iperf3? Or both, I guess?

How exactly are you testing?

Do the NICs show as linked at 1G?

Steve

-

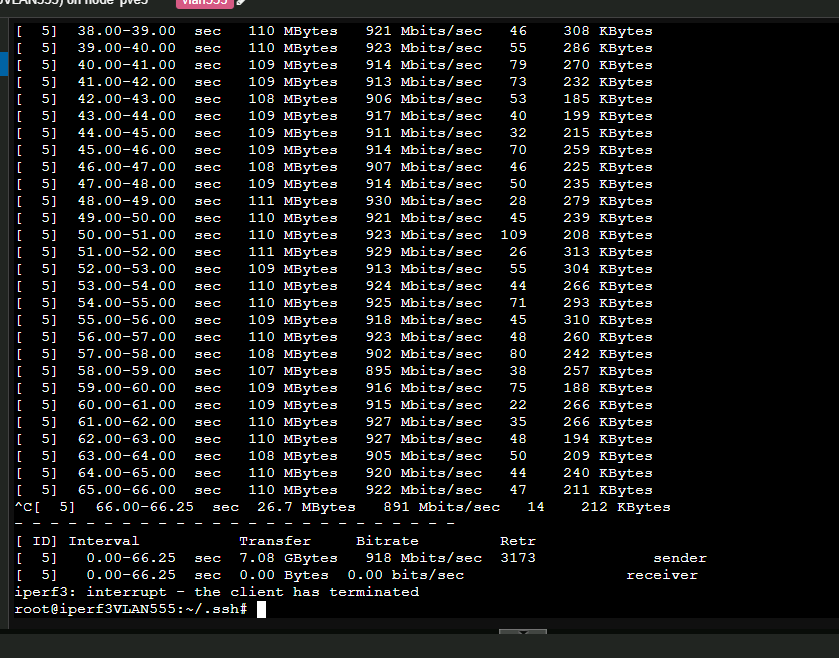

Sorry about that, iperf3 is what I used. I set up an iperf3 server on my LAN and a client behind the router. With the OPNsense firewall I can fully saturate the gigabit interface, but with pfSense I only get about 75%. I am using the same hardware and setup on both.

This is with OPNsense, same hardware setup.

I have two NVMe drives and just swap between them.

With pfSense the CPU is at 75%; with OPNsense it sits at 35%-45%.
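To compare runs like these on a common scale, iperf3 can write a JSON report with `-J` (for example `iperf3 -c <server> -J > result.json` on the client). The Python sketch below turns that report into a fraction of the link's nominal speed. It assumes a single TCP test, reads only the `end.sum_received.bits_per_second` field, and treats 1 Gb/s as the line rate; `throughput_fraction` and `LINE_RATE_BPS` are illustrative names, not part of iperf3.

```python
import json

# Assumption: nominal speed of the gigabit interface, in bits per second.
LINE_RATE_BPS = 1_000_000_000


def throughput_fraction(iperf3_json: str, line_rate_bps: float = LINE_RATE_BPS) -> float:
    """Return the received TCP throughput as a fraction of the nominal line rate.

    Expects the report printed by `iperf3 -c <server> -J` for a TCP test, which
    summarises the whole run under end.sum_received.bits_per_second.
    """
    report = json.loads(iperf3_json)
    received_bps = report["end"]["sum_received"]["bits_per_second"]
    return received_bps / line_rate_bps


if __name__ == "__main__":
    # Hypothetical, trimmed-down report containing only the field read above.
    sample = json.dumps({"end": {"sum_received": {"bits_per_second": 750_000_000.0}}})
    print(f"{throughput_fraction(sample):.0%} of line rate")  # prints "75% of line rate"
```

Because of Ethernet, IP and TCP header overhead, a saturated gigabit link will report somewhat less than 100% of the nominal rate in iperf3.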

-

Hmm, well, one significant difference there is that OPNsense disables all the hardware offloading options on NICs by default. So it's possible the NICs you have are misbehaving with one of those settings. I would try running `ifconfig -vvvm` on both and see what options are set.

Another possible difference could be the CPU speed. In FreeBSD 14 you might be seeing Speed Shift applying a lower speed. Check the boot logs.
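One way to check the boot logs for the Speed Shift point is to pull the relevant lines out of `/var/run/dmesg.boot`, where FreeBSD keeps a copy of the boot messages. This Python sketch assumes that the interesting lines either mention `hwpstate_intel` (FreeBSD's Intel Speed Shift driver) or start with `CPU:`; `speed_lines` is a hypothetical helper name. If Python isn't available on the firewall, copy the file to another machine and run it there.

```python
import re
from pathlib import Path

# FreeBSD (and so pfSense/OPNsense) saves the boot messages in this file.
DMESG_BOOT = Path("/var/run/dmesg.boot")

# Assumption: these patterns catch the lines of interest. hwpstate_intel is the
# Speed Shift driver; the "CPU:" line reports the CPU model and nominal clock.
PATTERNS = re.compile(r"hwpstate_intel|Speed Shift|^CPU:", re.IGNORECASE)


def speed_lines(boot_log: str) -> list[str]:
    """Return boot-log lines that mention Speed Shift or the CPU model/clock."""
    return [line for line in boot_log.splitlines() if PATTERNS.search(line)]


if __name__ == "__main__":
    if DMESG_BOOT.exists():
        for line in speed_lines(DMESG_BOOT.read_text(errors="replace")):
            print(line)
    else:
        print(f"{DMESG_BOOT} not found; copy it from the firewall first")
```

Running it on both installs and comparing the output shows whether Speed Shift attached on one but not the other.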

Steve

-

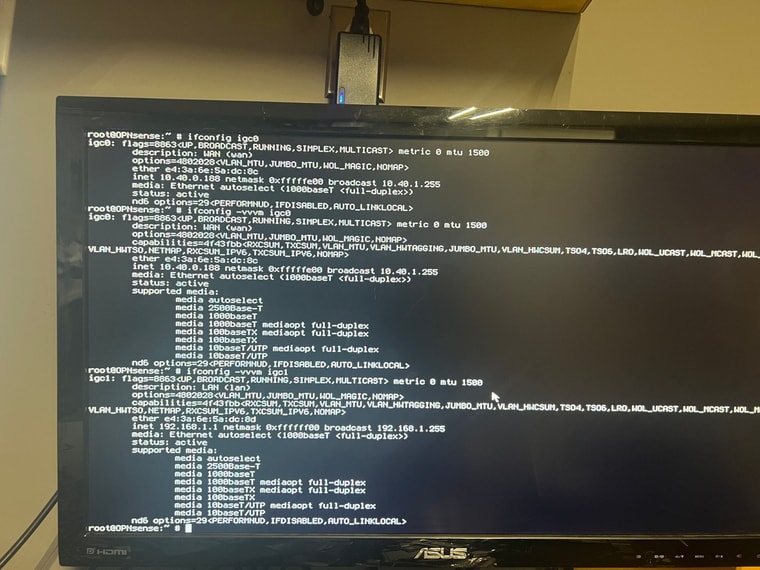

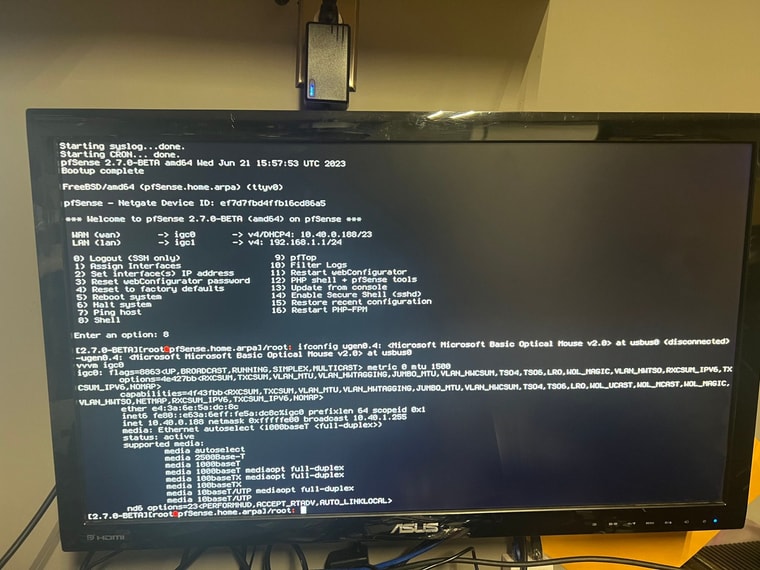

The first is my OPNsense install.

The second is my pfSense install.

I can see in the nd6 options that IFDISABLED is set on OPNsense. I have tried disabling the hardware offload options in pfSense, but it does not seem to help (see screenshot). Maybe there is another setting I need to change?

-

It's much easier to read if you SSH to the firewall and copy and paste the output here directly.

But what you should look at are the differences between the capabilities and options lines. The options lines show what's actually enabled, and as you can see almost everything is disabled in OPNsense.

Usually enabling hardware offloading options increases throughput, but if your NICs don't correctly support one of the options they claim to, it can slow things down significantly.
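Comparing the capabilities and options lines by eye is error-prone, especially since the `nd6 options=` line looks similar but holds IPv6 neighbour-discovery flags (such as IFDISABLED), not NIC offloads. Below is a minimal Python sketch, assuming the output of `ifconfig -vvvm` has been saved to a text file: it parses each interface's `options=` and `capabilities=` lines and lists the capabilities the driver advertises but has not enabled. The function names are illustrative, and the regular expressions assume the usual FreeBSD `name=HEX<FLAG,FLAG,...>` layout.

```python
import re
import sys

# Interface header lines start in column 0, e.g. "igc0: flags=8863<UP,...> metric 0 mtu 1500".
IFACE_RE = re.compile(r"^(\S+): flags=")
# Indented "options=" / "capabilities=" lines. "nd6 options=" is deliberately not
# matched: those are IPv6 neighbour-discovery flags, not NIC offload settings.
FLAGS_RE = re.compile(r"^\s+(options|capabilities)=[0-9a-fA-F]+<([^>]*)>")


def parse_ifconfig(text: str) -> dict[str, dict[str, set[str]]]:
    """Map each interface to its enabled ("options") and supported ("capabilities") flags."""
    result: dict[str, dict[str, set[str]]] = {}
    current = None
    for line in text.splitlines():
        header = IFACE_RE.match(line)
        if header:
            current = header.group(1)
            result[current] = {"options": set(), "capabilities": set()}
            continue
        flags = FLAGS_RE.match(line)
        if flags and current is not None:
            kind, names = flags.groups()
            result[current][kind] = {n for n in names.split(",") if n}
    return result


def supported_but_disabled(text: str) -> dict[str, set[str]]:
    """Per interface, the capabilities the driver advertises but has not enabled."""
    parsed = parse_ifconfig(text)
    return {name: f["capabilities"] - f["options"] for name, f in parsed.items()}


if __name__ == "__main__":
    # Usage (file name is only an example): python offloads.py ifconfig-output.txt
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
            for name, missing in supported_but_disabled(fh.read()).items():
                print(f"{name}: not enabled -> {', '.join(sorted(missing)) or 'none'}")
```

Running it against the saved output from both installs makes it easy to see which offloads, such as TSO4, TSO6 or LRO, are enabled on one and not the other.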

-

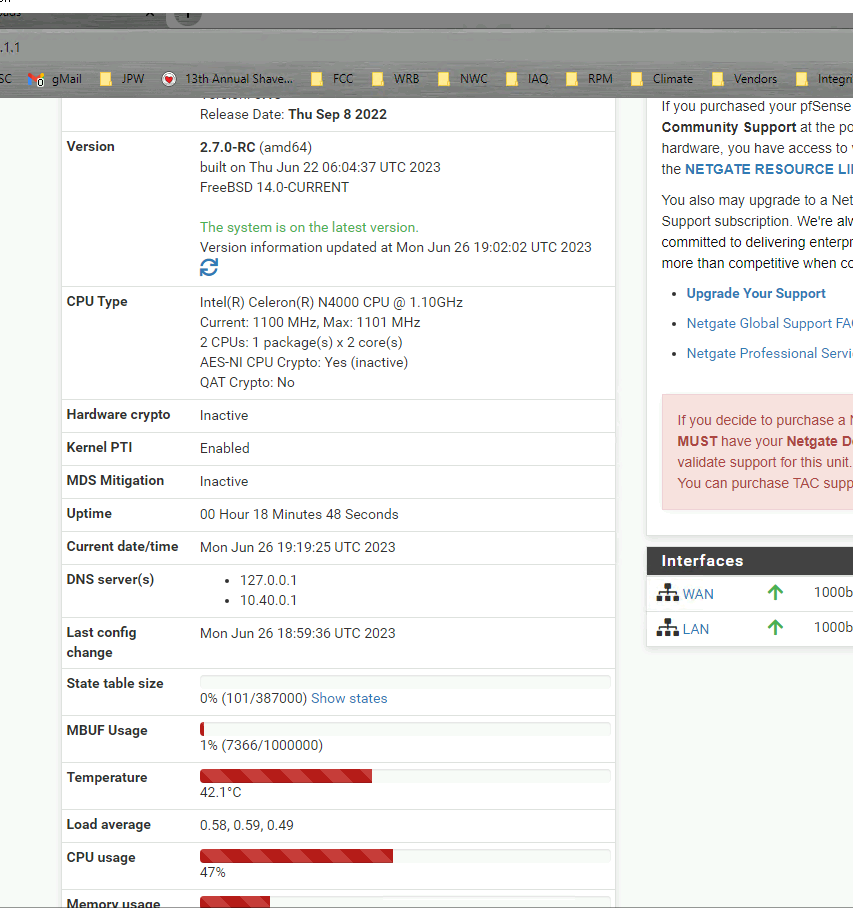

So whatever changed between the dev builds and the RC build fixed the issue. I'm not sure what changed, but the stock config now runs at 100% speed.

I tested a new box and was getting the same result on the dev build from last week. As soon as I upgraded to the RC, it ran at full line speed.

Thank you for your help.

-

BETA builds had debugging options enabled in the kernel by default. Those are removed in RC builds.

If performance is good on the RC builds, then it was most likely the kernel debugging options holding it back.

If you were really curious, you could install and boot the debug kernel and compare performance, but that's likely unnecessary.

-

@jimp Thank you for the info. After upgrading three units to the RC, everything works as it should.