(yet another) IPsec throughput help request

-

Sigh... sorry, y'all. I know there are a lot of these, and here I am starting another one.

TL;DR - slow site-to-site IPsec with AES-NI on both ends; AES256-GCM / 128 bits / AES-XCBC / DH 24; one end virtualized.

Hey y'all, I could use some help. We've got a site-to-site IPsec tunnel with pfSense on both ends. One end (Europe) is virtualized.

All iperf3 tests were done with 5-8 parallel streams. If I test to local iperf3 servers, each box gets close to what I'd expect for its WAN speed. If I test across the VPN, I get less than 20 Mbps. If I test across the internet without the VPN, between hosts behind both pfSense boxes, I get over 300 Mbps, and given that we're crossing the ocean, that might be as good as it gets. Given that I don't seem to be CPU-constrained, how can I get closer to that 300 Mbps over the VPN?
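For a rough sense of why a long, lossy path can cap TCP even when the CPU is idle, one widely cited approximation is the Mathis et al. model: a single TCP stream's steady-state throughput is about (MSS / RTT) × (C / √p), with C = √(3/2) and p the packet-loss rate. The sketch below uses hypothetical RTT and loss values (none were measured in this thread) and simply multiplies by the number of parallel streams; it ignores window limits and is an estimate, not a prediction.

```python
import math

# Mathis et al. approximation of steady-state TCP throughput:
#   rate ~= (MSS / RTT) * (C / sqrt(p)),  with C = sqrt(3/2) ~= 1.22
MATHIS_C = math.sqrt(1.5)


def mathis_bps(mss_bytes: int, rtt_s: float, loss_rate: float, streams: int = 1) -> float:
    """Approximate aggregate throughput in bits/s for `streams` parallel TCP flows."""
    if rtt_s <= 0 or not 0 < loss_rate < 1:
        raise ValueError("rtt_s must be > 0 and 0 < loss_rate < 1")
    per_stream = (mss_bytes * 8 / rtt_s) * (MATHIS_C / math.sqrt(loss_rate))
    return per_stream * streams


if __name__ == "__main__":
    # Hypothetical transatlantic numbers: 100 ms RTT, 0.01% loss, 8 streams.
    for mss in (1460, 1328):
        rate = mathis_bps(mss, rtt_s=0.100, loss_rate=1e-4, streams=8)
        print(f"MSS {mss}: ~{rate / 1e6:.0f} Mbit/s")
```

The takeaway is qualitative: throughput scales with MSS and inversely with RTT and √loss, so packet loss inside the tunnel hurts far more than raw crypto cost once AES-NI is in play.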

VPN conf:

Phase 1:

- Algo - AES256-GCM

- Key - 128 bits

- Hash - AES-XCBC

- DH - 24

Phase 2:

- Enc - AES192-GCM

- Hash - none

VPN traffic (host to host, not to/from the pfSense boxes):

[SUM] 0.00-10.00 sec 20.1 MBytes 16.8 Mbits/sec 542 sender

[SUM] 0.00-10.19 sec 18.1 MBytes 14.9 Mbits/sec receiver

iperf3 traffic outside of the VPN, site to site:

[SUM] 0.00-10.00 sec 325 MBytes 373 Mbits/sec 529 sender

[SUM] 0.00-10.18 sec 321 MBytes 364 Mbits/sec receiver

US:

- WAN - 10 Gbps

- iperf3 to a public US-based iperf3 server - ~6 Gbps

- CPU usage (iperf3 to public server) - ~23%

Europe:

- host - 2 x Intel(R) Xeon(R) E-2386G CPU @ 3.50GHz

- VM - CPU type = host, 8GB RAM, VirtIO NICs

- VM WAN - 1 Gbps

- VM iperf3 to a public US-based iperf3 server - ~875-920 Mbps

- VM CPU usage (iperf3 to public server) - ~10%

-

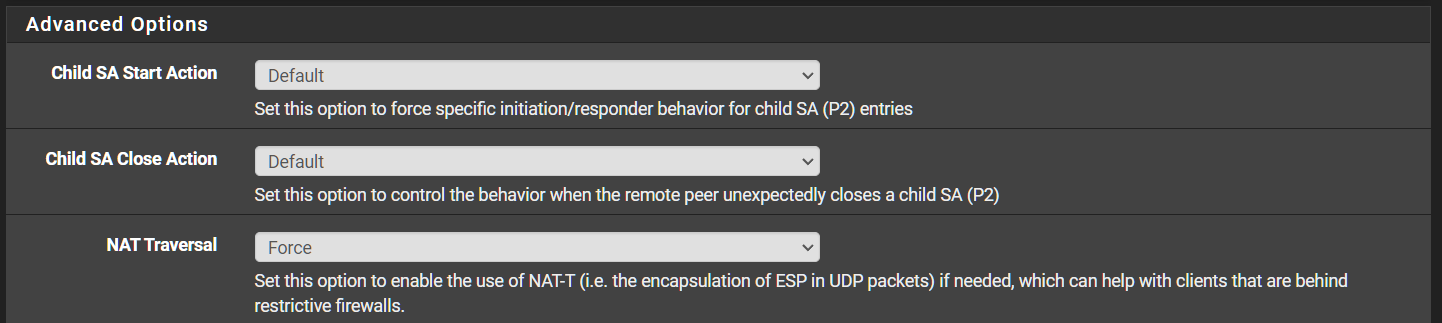

@SpaceBass I ran into a throughput problem as well, months ago. It was recommended that I enable NAT-T Force

This just encapsulates the ESP packets in UDP (port 4500).

My throughput shot up to almost line rate.

It seems that some providers don't like seeing IKE traffic (port 500) on the wire and will throttle it. So I would try that first, and maybe bounce the peers so they renegotiate with NAT-T.
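With NAT-T forced, ESP is carried inside UDP port 4500 instead of appearing on the wire as IP protocol 50, and IKE moves to port 4500 as well once NAT-T kicks in. The sketch below labels packets the way RFC 3948 tells them apart: IKE on port 4500 is prefixed by a four-byte all-zero "non-ESP marker" (a real ESP SPI is never zero), and a single 0xFF byte is a NAT-keepalive. The function name and labels are hypothetical; it is meant for reasoning about what a packet capture should show, not as a full packet parser.

```python
from typing import Optional

ESP_PROTO = 50          # IP protocol number for ESP
UDP_PROTO = 17          # IP protocol number for UDP
IKE_PORT = 500          # standard IKE port
NATT_PORT = 4500        # NAT-T port for IKE and UDP-encapsulated ESP
NON_ESP_MARKER = b"\x00\x00\x00\x00"  # RFC 3948: prefixes IKE messages on port 4500


def classify_ipsec(ip_proto: int, udp_port: Optional[int] = None, udp_payload: bytes = b"") -> str:
    """Return 'esp', 'ike', 'ike-natt', 'esp-in-udp', 'natt-keepalive' or 'other'."""
    if ip_proto == ESP_PROTO:
        return "esp"                  # ESP directly on IP, no UDP header
    if ip_proto != UDP_PROTO:
        return "other"
    if udp_port == IKE_PORT:
        return "ike"
    if udp_port == NATT_PORT:
        if udp_payload == b"\xff":
            return "natt-keepalive"   # single 0xFF byte keeps NAT mappings alive
        if udp_payload[:4] == NON_ESP_MARKER:
            return "ike-natt"         # an all-zero SPI is impossible, so this is IKE
        if len(udp_payload) >= 8:
            return "esp-in-udp"       # begins with a non-zero SPI + sequence number
    return "other"
```

If a capture on the WAN still shows protocol 50 after forcing NAT-T, the peers have probably not renegotiated yet.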

@michmoor said in (yet another) IPsec throughput help request:

It was recommended that I enable NAT-T Force

Interesting... can you say more about how you enabled it? Does this mean you are using IKE1 and not IKE2?

-


@michmoor

Thanks for the tip; unfortunately, it didn't make any difference in my case.

@SpaceBass In that case, what's the hardware on each site terminating the VPN tunnel?

Perhaps there is a limitation there.

Europe: 2 x Intel(R) Xeon(R) E-2386G CPU @ 3.50GHz with 128 GB RAM, SSD ZFS RAID 1

US: 2 x Intel(R) Xeon(R) CPU E3-1270 v5 @ 3.60GHz with 64 GB RAM, SSD ZFS RAID 1

-

@SpaceBass Intel NICs?

-

@michmoor

Thanks for the continued troubleshooting help!

US - Intel, bare metal

Europe - VirtIO; the host NIC is Intel

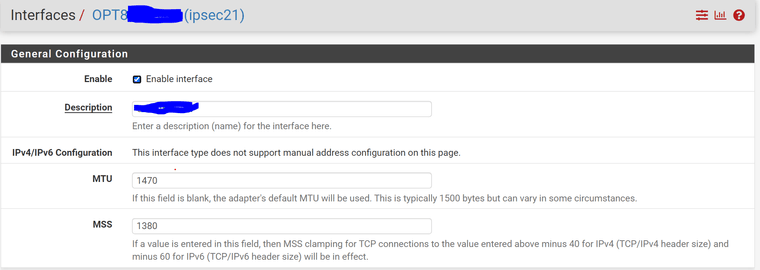

You could try adjusting the MTU/MSS settings on both sides to exactly these same values:

-

@pete35 I don't (currently) use an interface for IPsec.

-

@SpaceBass Do you have any Cryptographic Acceleration? Is it on?

-

@michmoor AES-NI; yes, it is active on both pfSense machines.

-

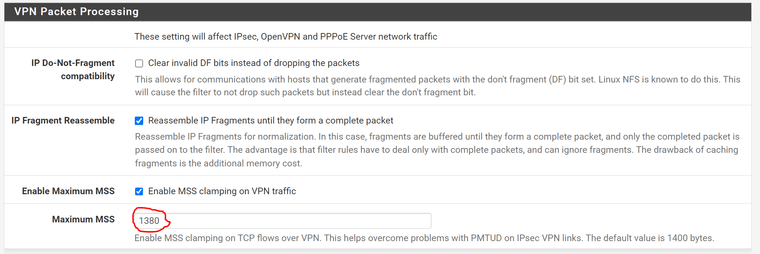

You can try setting MSS clamping under System > Advanced > Firewall & NAT.

Why don't you use routed VTI?
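MSS clamping works by rewriting the maximum-segment-size option in TCP SYN packets that cross the firewall, so the endpoints never send segments too large to fit through the tunnel after ESP overhead. A minimal sketch of the rewrite itself, assuming you already have the raw options bytes from a SYN; a real firewall also has to update the TCP checksum, which is omitted here, and the function name is hypothetical.

```python
import struct

TCP_OPT_EOL = 0  # end of option list
TCP_OPT_NOP = 1  # single-byte padding
TCP_OPT_MSS = 2  # kind=2, length=4, 16-bit MSS value


def clamp_mss_option(options: bytes, max_mss: int) -> bytes:
    """Return a copy of SYN TCP options with any MSS above max_mss lowered to it.

    The MSS is only ever reduced, never raised; other options are left untouched.
    """
    out = bytearray(options)
    i = 0
    while i < len(out):
        kind = out[i]
        if kind == TCP_OPT_EOL:
            break
        if kind == TCP_OPT_NOP:
            i += 1
            continue
        if i + 1 >= len(out):
            break  # truncated option list
        length = out[i + 1]
        if length < 2 or i + length > len(out):
            break  # malformed option; stop rather than misparse
        if kind == TCP_OPT_MSS and length == 4:
            (mss,) = struct.unpack_from("!H", out, i + 2)
            if mss > max_mss:
                struct.pack_into("!H", out, i + 2, max_mss)
        i += length
    return bytes(out)
```

Clamping on the firewall means every host behind it benefits without changing each host's interface MTU.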

-

For tunnel mode, an MSS of 1328 is most effective:

https://packetpushers.net/ipsec-bandwidth-overhead-using-aes/
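The exact ceiling depends on the cipher and on whether NAT-T adds a UDP header. A back-of-the-envelope sketch for IPv4 tunnel mode, assuming AES-GCM ESP with an 8-byte IV and a 16-byte (128-bit) ICV, no IP options, and plain 20-byte inner IP and TCP headers; the function name and defaults are illustrative, and the linked article's own assumptions may differ. It computes the theoretical maximum MSS, so a value such as 1328 is conservative under these assumptions.

```python
def max_ipsec_mss(
    link_mtu: int = 1500,
    nat_t: bool = False,
    iv_len: int = 8,    # AES-GCM ESP IV length (RFC 4106)
    icv_len: int = 16,  # 128-bit integrity check value
    align: int = 4,     # GCM only needs 4-byte alignment; AES-CBC would use 16
) -> int:
    """Largest TCP MSS whose packets fit in one ESP tunnel-mode packet."""
    outer_ip = 20                    # outer IPv4 header, no options
    udp_encap = 8 if nat_t else 0    # extra UDP header when NAT-T is used
    esp_header = 8                   # SPI (4) + sequence number (4)
    esp_trailer = 2                  # pad length (1) + next header (1)
    inner_ip, tcp = 20, 20           # inner IPv4 + TCP headers, no options

    room = link_mtu - outer_ip - udp_encap - esp_header - iv_len - icv_len
    room -= room % align             # largest aligned encrypted area that fits
    inner_packet = room - esp_trailer  # no padding needed at exactly this size
    return inner_packet - inner_ip - tcp


if __name__ == "__main__":
    print("AES-GCM, no NAT-T:", max_ipsec_mss())
    print("AES-GCM, NAT-T:   ", max_ipsec_mss(nat_t=True))
```

With NAT-T forced, as tried earlier in the thread, the ceiling drops by the 8-byte UDP header.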

@NOCling said in (yet another) IPsec throughput help request:

For Tunnel mode MSS 1328 is most effective:

https://packetpushers.net/ipsec-bandwidth-overhead-using-aes/

WOAH! Massive difference (in only one direction)...

From US -> Europe:

[SUM] 0.00-10.00 sec 252 MBytes 211 Mbits/sec 9691 sender

[SUM] 0.00-10.20 sec 233 MBytes 192 Mbits/sec receiver

From Europe -> US:

[SUM] 0.00-10.20 sec 22.0 MBytes 18.1 Mbits/sec 0 sender

[SUM] 0.00-10.00 sec 20.5 MBytes 17.2 Mbits/sec receiver
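To compare runs side by side, including the sender's retransmit column (9691 US -> Europe versus 0 Europe -> US), a small parser for iperf3's text summary can help. This sketch assumes the default text output with MBytes and Mbits/sec units as shown in this thread; the function name is hypothetical, and iperf3's `--json` output is the more robust option if you script this for real.

```python
import re

# Matches iperf3 "[SUM]" summary lines such as
#   [SUM] 0.00-10.00 sec 252 MBytes 211 Mbits/sec 9691 sender
SUM_RE = re.compile(
    r"\[SUM\]\s+(?P<start>[\d.]+)-(?P<end>[\d.]+)\s+sec\s+"
    r"(?P<xfer>[\d.]+)\s+MBytes\s+(?P<rate>[\d.]+)\s+Mbits/sec\s+"
    r"(?:(?P<retr>\d+)\s+)?(?P<role>sender|receiver)"
)


def parse_sum_lines(text: str) -> list:
    """Extract role, duration, transfer, bitrate and retransmits from [SUM] lines."""
    results = []
    for m in SUM_RE.finditer(text):
        results.append({
            "role": m["role"],
            "seconds": float(m["end"]) - float(m["start"]),
            "mbytes": float(m["xfer"]),
            "mbps": float(m["rate"]),
            # iperf3 prints a retransmit count only on the TCP sender line
            "retransmits": int(m["retr"]) if m["retr"] is not None else None,
        })
    return results


if __name__ == "__main__":
    us_to_eu = ("[SUM] 0.00-10.00 sec 252 MBytes 211 Mbits/sec 9691 sender "
                "[SUM] 0.00-10.20 sec 233 MBytes 192 Mbits/sec receiver")
    for row in parse_sum_lines(us_to_eu):
        print(row)
```

Note that iperf3's "MBytes" are binary (2^20 bytes) while "Mbits/sec" are decimal, which is why 252 MBytes over 10 s reads as about 211 Mbits/sec.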

Nice, but now you have to find your way through the peering jungle to make it fast in both directions.

It looks like US -> EU traffic takes a different path than EU -> US. We talked about that in our last meeting, and the solution is not easy.

One option is to use a cloud service provider that is present on both sides, so you can use the interconnect between those cloud instances.

@NOCling Unfortunately, my success was very short-lived...

iperf3 traffic still looks improved, but I'm only moving data at 500 kB/s to 1.5 MB/s.

@SpaceBass If you temporarily switch to WireGuard, does the issue follow?

If it does, it may not be MTU-related.
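WireGuard has a different, fixed per-packet overhead (outer IP + 8-byte UDP + 16-byte data header + 16-byte Poly1305 tag), which is why its common default interface MTU of 1420 fits a 1500-byte link even over an IPv6 outer path. A sketch of that arithmetic, assuming the padding rule of the reference implementation (plaintext zero-padded to a multiple of 16 bytes, capped at the interface MTU) and an inner packet no larger than that MTU; the function is illustrative, not part of any WireGuard tooling.

```python
def wireguard_outer_size(inner_len: int, wg_mtu: int = 1420, ipv6_outer: bool = False) -> int:
    """Bytes on the wire for one WireGuard data packet carrying `inner_len` bytes."""
    if not 0 < inner_len <= wg_mtu:
        raise ValueError("inner packet must fit within the WireGuard interface MTU")
    outer_ip = 40 if ipv6_outer else 20
    udp = 8
    wg_header = 16  # type (1) + reserved (3) + receiver index (4) + counter (8)
    tag = 16        # Poly1305 authentication tag
    # Plaintext is zero-padded to a multiple of 16 bytes, but never past the MTU.
    padded = min(wg_mtu, -(-inner_len // 16) * 16)
    return outer_ip + udp + wg_header + padded + tag


if __name__ == "__main__":
    print("IPv4 outer:", wireguard_outer_size(1420))
    print("IPv6 outer:", wireguard_outer_size(1420, ipv6_outer=True))
```

If WireGuard at a 1420 MTU shows the same slowdown as IPsec, fragmentation is less likely to be the cause.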

How do you move your data?

SMB is a very bad choice over high-latency paths; you need rsync or another WAN-optimized protocol.
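The latency point generalizes: any transfer that keeps at most W bytes unacknowledged per round trip is capped at W / RTT no matter how fast the link is, and sustaining a rate R needs R × RTT bytes in flight (the bandwidth-delay product). A sketch with a hypothetical 100 ms RTT and a hypothetical 64 KiB in-flight limit; neither number was measured in this thread, and how much data a given SMB client keeps in flight varies by version and settings.

```python
def window_limited_bps(bytes_in_flight: int, rtt_s: float) -> float:
    """Upper bound on throughput (bits/s) with at most `bytes_in_flight` unacknowledged."""
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    return bytes_in_flight * 8 / rtt_s


def bytes_needed_in_flight(target_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to sustain target_bps."""
    return target_bps * rtt_s / 8


if __name__ == "__main__":
    rtt = 0.100  # hypothetical transatlantic RTT; measure yours with ping
    print(f"64 KiB in flight: {window_limited_bps(64 * 1024, rtt) / 1e6:.1f} Mbit/s")
    print(f"Needed for 300 Mbit/s: {bytes_needed_in_flight(300e6, rtt) / 1e6:.2f} MB")
```

Tools that pipeline data or run several streams in parallel raise the effective bytes in flight, which is one reason they cope better with a long path than a chatty request/response protocol.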