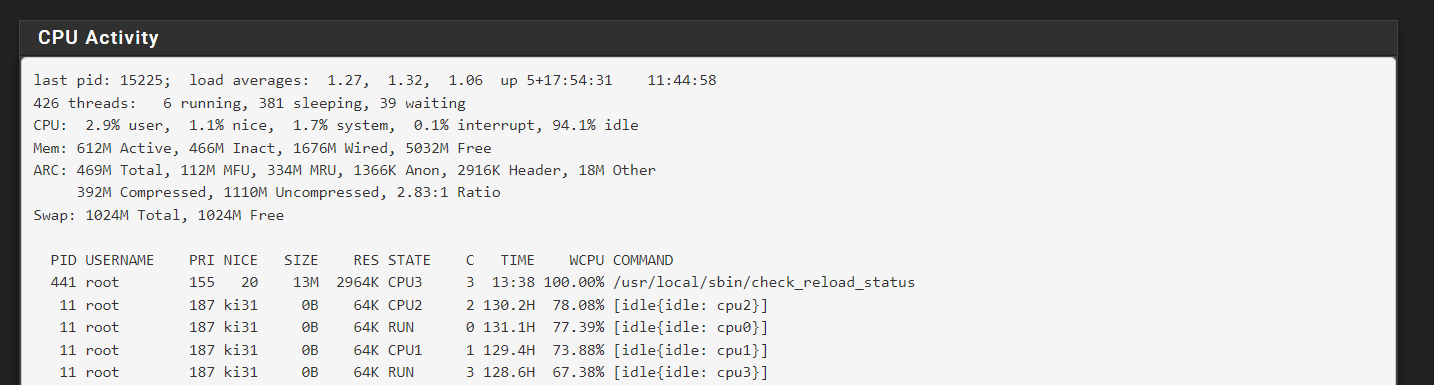

Run away process - check reload status

-

Hmm, I'm pretty sure there was a bug in check_reload_status at one time. Or at least something that presented like this. However, I'm struggling to find it now! It was some time ago.

There have been other reports recently: https://forum.netgate.com/topic/181782/check_reload_status-hanging-with-100-cpu-load

-

@stephenw10 I remember that post.

Yeah may be a bug. Killing the process returns things to normal so no serious after effects.
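For anyone who wants to spot this before killing anything, here is a rough sketch of how the check could be scripted. It assumes you have saved the output of something like `ps -axo pid,pcpu,comm` as text; the function name `busy_processes`, the 90% threshold, and the sample rows are all made up for illustration and are not taken from this box.

```python
def busy_processes(ps_output, name="check_reload_status", threshold=90.0):
    """Return (pid, cpu) pairs for processes matching `name` at or above `threshold` %CPU.

    Expects three whitespace-separated columns per row: PID, %CPU, COMMAND.
    Rows that do not parse (such as the header) are skipped.
    """
    hits = []
    for line in ps_output.strip().splitlines():
        parts = line.split(None, 2)
        if len(parts) != 3:
            continue
        pid_text, cpu_text, command = parts
        try:
            pid = int(pid_text)
            cpu = float(cpu_text)
        except ValueError:
            continue  # header row or malformed line
        if name in command and cpu >= threshold:
            hits.append((pid, cpu))
    return hits


# Hypothetical sample, NOT real output from the system discussed in this thread.
SAMPLE_PS = """
  PID  %CPU COMMAND
  412 100.0 check_reload_status
 1234   0.3 php-fpm
"""

if __name__ == "__main__":
    for pid, cpu in busy_processes(SAMPLE_PS):
        print(f"pid {pid} is at {cpu}% CPU")
```

Anything it reports is only a candidate; whether to kill the PID is still a judgment call.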

After every change I make I monitor zabbix. Just post work validation.

The other issue I’ve suddenly seen is the sshguard spam in system.log.

I understand the cause but it’s strange how this is a sudden issue. Still digging tho.

-

@michmoor said in Run away process - check reload status:

The other issue I’ve suddenly seen is the sshguard spam in system.log.

Something is logging a lot more than before that change. Check which logs are rotating more frequently.

-

@bmeeks not blaming Suricata at all. Do you think it’s possible there is some type of cleanup script that runs after a package gets uninstalled? But in that post I mentioned, jimp did state that it’s difficult to reproduce.

Need to do something about that RAM

-

@stephenw10 /var/log

That’s where I check for all the logs, right?

-

Yes. You'll see the rotated logs with timestamps so you can see which is filling fastest.

-

@stephenw10 https://redmine.pfsense.org/issues/2555 maybe

I vaguely remember something about the wrong Zabbix version but I don’t use it.

-

Hmm, I thought something more recent than that!

Not seeing it now though.

-

@stephenw10

The culprit is filter.log, I think. I changed the log file size from 500 KB to 10 MB.

I am logging each rule. Sent to my log collector for analysis.

/var/log: ls -ltrh | grep bz2

-rw------- 1 root wheel 27K Aug 13 02:02 haproxy.log.6.bz2
-rw------- 1 root wheel 14K Aug 13 04:27 openvpn.log.0.bz2
-rw------- 1 root wheel 12K Aug 13 04:32 gateways.log.2.bz2
-rw------- 1 root wheel 6.6K Aug 13 04:43 gateways.log.1.bz2
-rw------- 1 root wheel 6.7K Aug 13 04:54 gateways.log.0.bz2
-rw------- 1 root wheel 29K Aug 13 04:57 system.log.0.bz2
-rw------- 1 root wheel 32K Aug 13 05:07 haproxy.log.5.bz2
-rw------- 1 root wheel 22K Aug 13 05:43 dhcpd.log.0.bz2
-rw------- 1 root wheel 28K Aug 13 11:20 haproxy.log.4.bz2
-rw------- 1 root wheel 21K Aug 13 12:50 haproxy.log.3.bz2
-rw------- 1 root wheel 16K Aug 13 13:47 haproxy.log.2.bz2
-rw------- 1 root wheel 22K Aug 13 16:52 haproxy.log.1.bz2
-rw------- 1 root wheel 13K Aug 13 17:47 ipsec.log.6.bz2
-rw------- 1 root wheel 13K Aug 13 18:14 ipsec.log.5.bz2
-rw------- 1 root wheel 14K Aug 13 18:41 ipsec.log.4.bz2
-rw------- 1 root wheel 13K Aug 13 19:08 ipsec.log.3.bz2
-rw------- 1 root wheel 30K Aug 13 19:14 haproxy.log.0.bz2
-rw------- 1 root wheel 14K Aug 13 19:35 ipsec.log.2.bz2
-rw------- 1 root wheel 16K Aug 13 19:37 resolver.log.6.bz2
-rw------- 1 root wheel 15K Aug 13 19:48 resolver.log.5.bz2
-rw------- 1 root wheel 16K Aug 13 20:01 resolver.log.4.bz2
-rw------- 1 root wheel 13K Aug 13 20:02 ipsec.log.1.bz2
-rw------- 1 root wheel 37K Aug 13 20:12 auth.log.0.bz2
-rw------- 1 root wheel 16K Aug 13 20:14 resolver.log.3.bz2
-rw------- 1 root wheel 15K Aug 13 20:25 resolver.log.2.bz2
-rw------- 1 root wheel 13K Aug 13 20:29 ipsec.log.0.bz2
-rw------- 1 root wheel 15K Aug 13 20:37 resolver.log.1.bz2
-rw------- 1 root wheel 15K Aug 13 20:46 resolver.log.0.bz2
-rw------- 1 root wheel 33K Aug 13 20:47 filter.log.6.bz2
-rw------- 1 root wheel 33K Aug 13 20:48 filter.log.5.bz2
-rw------- 1 root wheel 34K Aug 13 20:49 filter.log.4.bz2
-rw------- 1 root wheel 34K Aug 13 20:50 filter.log.3.bz2
-rw------- 1 root wheel 34K Aug 13 20:51 filter.log.2.bz2
-rw------- 1 root wheel 34K Aug 13 20:52 filter.log.1.bz2
-rw------- 1 root wheel 34K Aug 13 20:53 filter.log.0.bz2

-

The resolver log is also rotating roughly every 15 minutes but, yes, the filter log was rotating every minute when that data was taken.
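If eyeballing the timestamps gets tedious, the rotation intervals can be estimated from a pasted listing. This is only a sketch: the function name `rotation_gaps` and the regular expression are my own, the `year` argument is an assumption because `ls` does not print the year for recent files, and the sample is a handful of rows copied from the listing above.

```python
import re
from collections import defaultdict
from datetime import datetime

# Matches e.g. "Aug 13 20:53 filter.log.0.bz2" anywhere in the text,
# so it also works if the listing was pasted as one long line.
ENTRY = re.compile(
    r"([A-Z][a-z]{2})\s+(\d{1,2})\s+(\d{2}:\d{2})\s+(\S+?\.log)\.\d+\.bz2"
)


def rotation_gaps(listing, year=2024):
    """Return {log name: average minutes between rotations}.

    `year` is an assumption; it only matters if the listing spans a year
    boundary or includes Feb 29.
    """
    stamps = defaultdict(list)
    for month, day, clock, name in ENTRY.findall(listing):
        stamp = datetime.strptime(f"{year} {month} {day} {clock}", "%Y %b %d %H:%M")
        stamps[name].append(stamp)

    gaps = {}
    for name, times in stamps.items():
        if len(times) < 2:
            continue  # one rotated file gives no interval
        times.sort()
        minutes = [(b - a).total_seconds() / 60 for a, b in zip(times, times[1:])]
        gaps[name] = sum(minutes) / len(minutes)
    return gaps


# A few rows copied from the listing in this thread.
SAMPLE = """
-rw------- 1 root wheel 15K Aug 13 20:25 resolver.log.2.bz2
-rw------- 1 root wheel 15K Aug 13 20:37 resolver.log.1.bz2
-rw------- 1 root wheel 15K Aug 13 20:46 resolver.log.0.bz2
-rw------- 1 root wheel 34K Aug 13 20:51 filter.log.2.bz2
-rw------- 1 root wheel 34K Aug 13 20:52 filter.log.1.bz2
-rw------- 1 root wheel 34K Aug 13 20:53 filter.log.0.bz2
"""

if __name__ == "__main__":
    # Fastest-rotating logs first.
    for name, minutes in sorted(rotation_gaps(SAMPLE).items(), key=lambda kv: kv[1]):
        print(f"{name}: about every {minutes:.1f} min")
```

The shortest average gap points at the log that is filling fastest, which is where to look for whatever started logging more.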