Tunning after half a gig

-

A power outage took central storage out, and while it rebuilt I had no edge networking.

This array also holds the image store, so I couldn't create a new firewall. My options were driving myself to madness with UniFi or adding edge functionality to a Mikrotik CHR appliance I occasionally use for its DHCP server.

Unlike my other DHCP server, Windows Server, this one doesn't use a relay; it has L3 interfaces on every VLAN. Plus, it already had a starting ruleset from back when I was trying it out as a firewall (in the end I couldn't do without pfSense's ASN blocking).

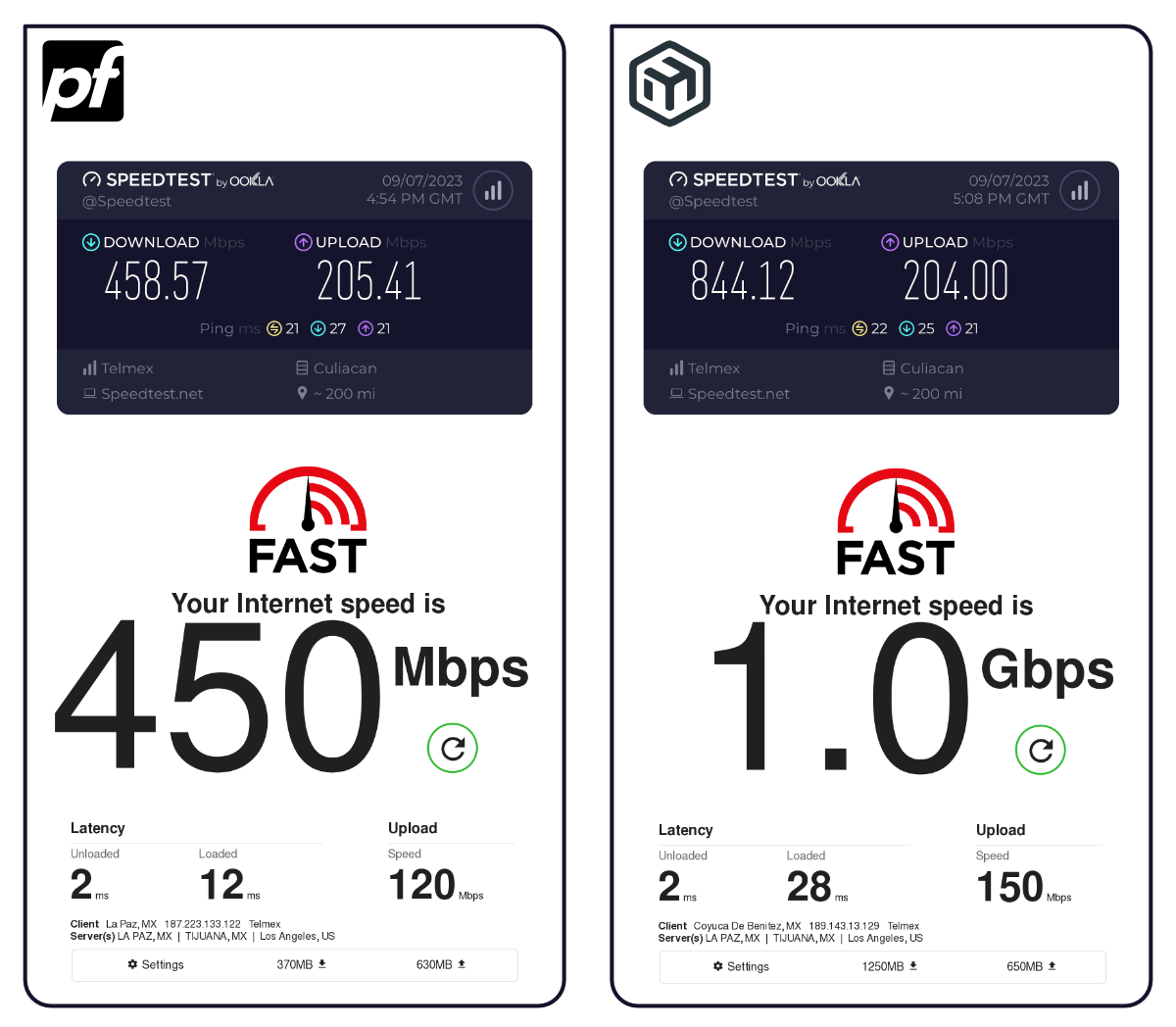

Anyway, I used a speed testing site out of haste. As soon as I saw response activity on the status bar, I turned around to another station with CLIs on it to start testing individual services. A minute later I sensed the big screen on the wall flash that the test had finished.

When I turned around, my jaw hit the floor. It maxed out my connection. That's almost twice as fast as the best result I'd get with pfSense. It was fast.com too, whose results are always a little too optimistic. I tried another site, but again it was very close to the max speed and twice as fast as pfSense, whose machine has 2x to 4x the specs.

I have been using pfSense for so long, and it always managed to max out my connection. Of course, before fiber I had 3x VDSL2 lines, and 4x ADSL2+ lines before that, so at the fastest I never expected more than 150-170ish Mbit/s. When I was finally migrated to fiber, it never went over 600, 650 maybe. I just assumed server access wasn't that fast because of my region, or because of the tens or hundreds of thousands of BT connections and other chatty services ever present on the network. Lastly, the loaned ONTs are the cheapest-looking things there are: creaky plastic, a huge yellow+blue sticker with default Wi-Fi and admin credentials printed on it, made by Huawei or another Chinese manufacturer, with questionable, slow, buggy, mistranslated firmware. It doesn't inspire speed.

Normally I wouldn't care too much; between 100 and 200 Mbit/s is all you need to browse comfortably, I think. I still have some Wi-Fi 4 APs and I don't intend to replace them anytime soon. :) This was just wasted performance though, further accentuated by the fact that my ISP has a lower plan with the speeds I was getting. Even though there's not much of a price difference (mainly because the top 1 Gbit/s plan isn't expensive to begin with), it nags at you until you can't ignore it anymore.

A few hours later the storage was finally back online and I tested again with the same results. I created a new firewall just for testing: no complex rules, no dynamic routing, no packages whatsoever, no services running on it. I changed the default {LAN to any} pair to {any to <This Firewall>}, effectively cutting off all traffic. I created another rule targeting the test machine {to any} and tested, with the same results.

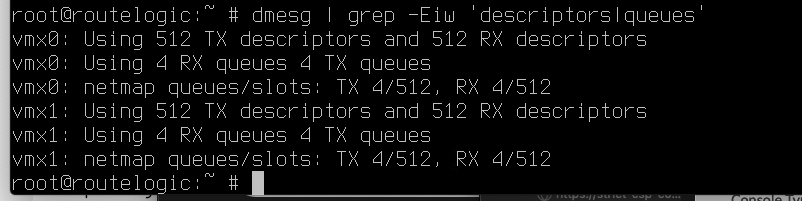

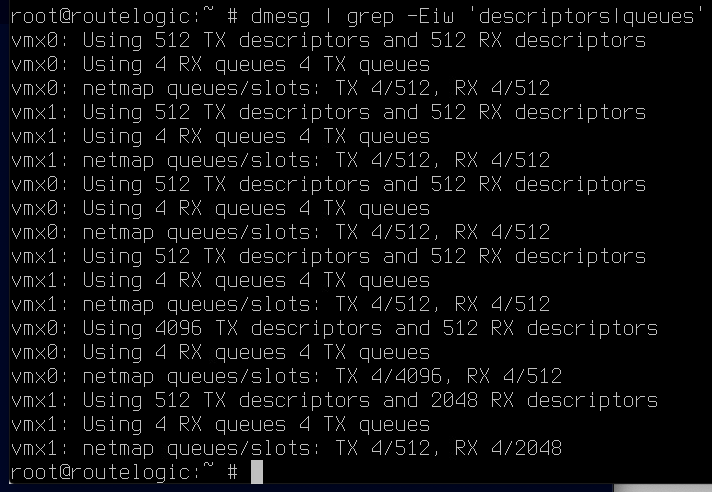

I followed the advice on Hardware Tuning and Troubleshooting and took before-and-after measurements:

But there wasn't any improvement:

These aren't cherry-picked results, BTW; they're the first ones I got. Subsequent tests maxed out at 1.2 Gbit/s on CHR and in the mid-to-high 500s Mbit/s on pfSense.

So I switched gears and tried other platforms, specifically one of each underlying OS. From the BSD family, the pfSense fork, OPNsense, showed results similar to pfSense's. On the Linux side, OpenWRT, which uses many of the same or similar features as CHR (such as DSA), showed results almost identical to CHR's.

One thing that caught my attention was the lack of difference in the upstream. It demonstrates, I think, that it's not overhead; otherwise the upstream would drop proportionally as well. The performance drop occurs, it seems, at a little over half a gig's worth of throughput.
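To make that reasoning concrete, here is a minimal sketch comparing the two explanations. The link rates, the 40% overhead and the 600 Mbit/s ceiling are hypothetical numbers picked for illustration, not measurements from this thread, and both function names are made up for the example.

```python
def with_proportional_overhead(rate_mbps, overhead_percent):
    """Overhead model: every direction loses the same share of its rate."""
    return rate_mbps * (100 - overhead_percent) / 100


def with_ceiling(rate_mbps, ceiling_mbps):
    """Ceiling model: traffic is untouched until it reaches a fixed cap."""
    return min(rate_mbps, ceiling_mbps)


# Hypothetical link: 1000 Mbit/s down, 500 Mbit/s up.
LINK_DOWN, LINK_UP = 1000, 500

# Overhead hits both directions in proportion.
print("overhead:", with_proportional_overhead(LINK_DOWN, 40),
      with_proportional_overhead(LINK_UP, 40))

# A ceiling only hits the direction that exceeds it.
print("ceiling: ", with_ceiling(LINK_DOWN, 600), with_ceiling(LINK_UP, 600))
```

Under the ceiling model the slower direction comes through unchanged, which is the pattern described above: downstream capped, upstream unaffected.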

It points to the OS, I think. But I already applied the documented OS-related tuning. What's left?

The machines are all virtualized so they all have identical "hardware", with the exception of double the specs (core count and memory) on the xBSD appliances. Is it the abstraction/virtualization then?

I don't believe so. For starters, CHR is also virtualized, and about three months ago I ran pfSense bare metal on a decommissioned hypervisor that was itself part of a cluster a pfSense VM used to hop around in. It was decommissioned partly because of its age, but it's nevertheless a behemoth of a machine just to run a firewall that only handles a single gig edge link and 2-3 single-host DMZs. Intranet routing is offloaded to faster, more efficient switches. It was still below 600 Mbps.

That contributed to my belief that I wasn't getting a full gig because of some really nasty overhead, never mind that my ISP has always accounted for that so you always get what's advertised. Speaking of overhead and buffers, I almost forgot: I ran the CoDel tests and aced them. ←I'm not positive that's not misspelled either.

Why is the performance gap so big? Can it be improved? Or rather, can mere mortals without a science degree improve it? During my VyOS/OpenWRT tryouts I learned how to write a script to parse ASNs, which covers what is likely the pfSense feature I need the most, and I think I can replicate it on CHR natively. Still, pfSense is home. <3
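For readers wondering what such an ASN-parsing script might look like, below is a rough sketch, not the script mentioned above. It assumes the input is RPSL-style whois text containing `route:` and `route6:` lines (the format routing registries return for an origin-AS query). The function name `parse_routes` is hypothetical, and the sample uses documentation prefixes and a documentation ASN rather than real data.

```python
import ipaddress


def parse_routes(rpsl_text):
    """Extract route/route6 prefixes and merge adjacent ones into fewer entries."""
    v4, v6 = [], []
    for line in rpsl_text.splitlines():
        # Split on the first colon only, so IPv6 values stay intact.
        key, _, value = line.partition(":")
        if key.strip().lower() not in ("route", "route6"):
            continue
        net = ipaddress.ip_network(value.strip(), strict=False)
        (v4 if net.version == 4 else v6).append(net)
    # collapse_addresses needs a single IP version per call.
    merged = list(ipaddress.collapse_addresses(v4))
    merged += list(ipaddress.collapse_addresses(v6))
    return [str(net) for net in merged]


# Illustrative input only (RFC 5737 / RFC 3849 prefixes, RFC 5398 ASN).
SAMPLE = """\
route:      192.0.2.0/25
origin:     AS64496
route:      192.0.2.128/25
origin:     AS64496
route6:     2001:db8::/32
origin:     AS64496
"""

print(parse_routes(SAMPLE))
```

The resulting prefix list could then be loaded into whatever address-list or alias mechanism the firewall offers; that step is platform-specific and left out here.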

-

Is your WAN PPPoE?

-

@stephenw10 said in Tunning after half a gig:

Is your WAN PPPoE?

Looks that way from his other posts.

BSD needs to get off the struggle bus when it comes to PPPoE. High-bandwidth PPPoE connections are here and it's starting to hurt pfSense.

-

It's less of an issue than it used to be simply because hardware is more powerful. The 4100 can pass 1G PPPoE for example.

Actually solving the issue of RSS queues for non-Ethernet traffic is very much non-trivial. But if it's PPPoE then OP should apply the recommended mitigations for that:

https://docs.netgate.com/pfsense/en/latest/hardware/tune.html#pppoe-with-multi-queue-nics

Steve
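As a rough illustration of why PPPoE ends up on a single queue, here is a toy model of receive-queue selection. It is a simplification under stated assumptions: real NICs typically use a Toeplitz hash over the IP/port tuple, whereas this sketch substitutes CRC32, and the function names are made up for the example. The point is only that a frame whose EtherType is not plain IP gives the NIC nothing to hash, so every such frame lands on the same queue and therefore the same core.

```python
import zlib

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_PPPOE_SESSION = 0x8864


def pick_queue(ethertype, flow, num_queues):
    """Toy model of how a multi-queue NIC chooses a receive queue."""
    if ethertype != ETHERTYPE_IPV4:
        # The IP header is hidden inside the PPPoE payload, so there is
        # no flow tuple to hash; assume everything falls back to queue 0.
        return 0
    # Stand-in for the hardware hash (CRC32 here, not Toeplitz).
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % num_queues


def queues_used(ethertype, flows, num_queues):
    """Return the set of queues a batch of flows is spread across."""
    return {pick_queue(ethertype, flow, num_queues) for flow in flows}


# 64 hypothetical flows: (src IP, src port, dst IP, dst port).
flows = [("198.51.100.7", 443, "192.0.2.10", 50000 + i) for i in range(64)]

print("plain IP:", sorted(queues_used(ETHERTYPE_IPV4, flows, 4)))
print("PPPoE:   ", sorted(queues_used(ETHERTYPE_PPPOE_SESSION, flows, 4)))
```

In this model the same 64 flows spread across several queues when they arrive as plain IP, but collapse onto one queue when wrapped in PPPoE.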

-

J jimp moved this topic from Problems Installing or Upgrading pfSense Software on


-

@stephenw10

For sure, but it takes away some capacity to handle Suricata, QoS, ntopng etc., especially with fibre connections moving beyond 1 GbE. I don't think it should be ignored, as multi-core is the norm and relying on single-core PPPoE handling is not ideal. I'm not convinced my 6100 will handle 1.8 GbE with typical services and packages running concurrently.

[I know, you are stuck on G.fast so I probably sound a little churlish with my first-world problem!]

-

@senseivita said in Tunning after half a gig:

Mikrotik

From what I read, once you add IP filtering rules to Mikrotik, performance drops a lot. No rules is its best-case scenario and not practical in the real world.

-

@RobbieTT said in Tunning after half a gig:

I know, you are stuck on G.fast

The struggle is real!

But, yes, multiqueue PPPoE sure would be nice.