Enhanced Intel SpeedStep / Speed Shift - Are they fully supported?

-

I appear to get higher (better) PPPoE throughput on my Xeon-D, which I didn't expect. I need to think a bit more as to why.

Anyway, just Intel Speed Select Technology to come...

-

@RobbieTT What is the model of your CPU?

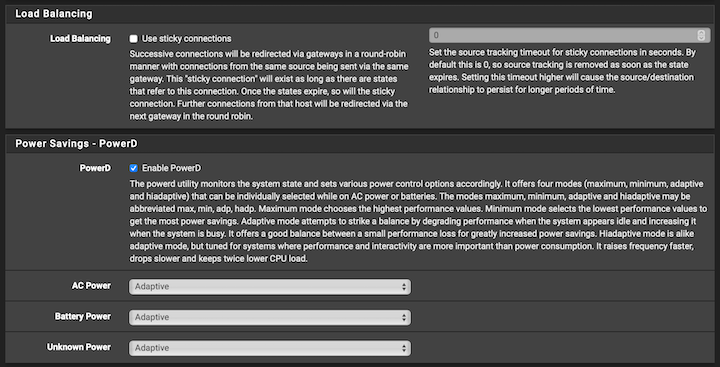

Were you using PowerD before?

Also any virtualization involved?

-

@RobbieTT This is way better than PowerD, PowerD = SpeedStep

Check out my link above.

-

After testing / monitoring for another week, I have concluded that a Speed Shift setting of "60" provides a pretty good performance / efficiency trade-off on the Intel Xeon D-1718T CPU in one of my systems. If I increase the value further to "80", I find that the low CPU frequencies become too sticky (i.e. it seems to take too long to ramp up), while not really resulting in incremental power savings. If I lower it to "50", the CPU ramps up too quickly to top frequencies, resulting in a temperature increase. What's interesting: on another system with an Intel Core i3-10100 CPU, a setting of "60" appears not to be conservative enough and the CPU still ramps up very quickly to higher frequencies. Could there be some differences between how different Intel CPU architectures handle / implement Speed Shift?
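For reference, the Speed Shift value being discussed is, as far as I understand it, the energy/performance preference exposed on FreeBSD as the `dev.hwpstate_intel.N.epp` sysctl, where 0 favors performance and 100 favors energy saving. The underlying hardware field is 8 bits wide (0-255), so the percentage has to be scaled. A minimal sketch of that arithmetic, assuming plain linear scaling with integer truncation (the function name is hypothetical and the driver's exact rounding may differ):

```python
def epp_percent_to_raw(percent: int) -> int:
    """Scale a 0-100 Speed Shift preference to an 8-bit (0-255) hint.

    Assumption: simple linear scaling with truncation; this is an
    illustration, not a copy of the actual driver code.
    """
    if not 0 <= percent <= 100:
        raise ValueError("preference must be between 0 and 100")
    return 0xFF * percent // 100


if __name__ == "__main__":
    # Settings mentioned in this thread.
    for pct in (25, 50, 60, 80):
        print(f"{pct:3d}% -> raw {epp_percent_to_raw(pct)}")
```

Either way the value is only a hint to the hardware, so it would not be surprising if different CPU generations respond differently to the same number.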

-

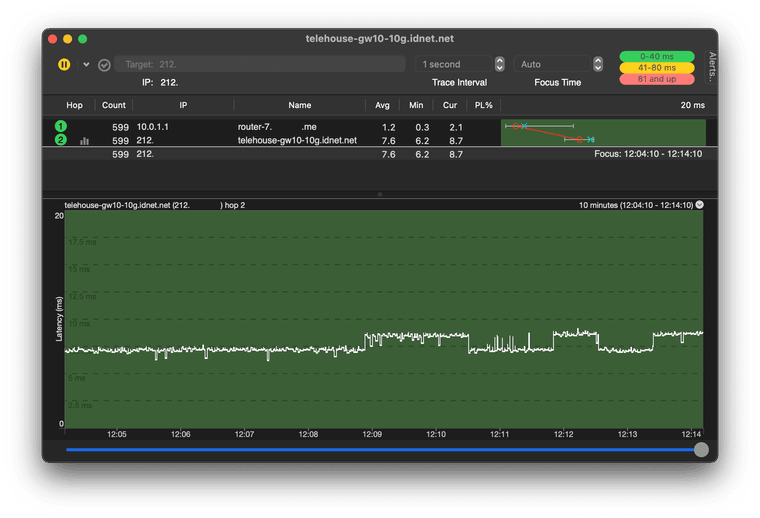

I just completed a number of WAN latency tests on my Xeon D-1718T system and had different results. I had to go down to a setting of 25 for Speed Shift to prevent the router from introducing latency on the WAN connection. 30 might have been ok, but 25 seemed to provide the best throughput and power results. A value of 60 increased the latency back to the same values I had when running on my Atom C3758 based router with PowerD set at Max values.

-

How much latency was that?

-

@stephenw10 Lowering the Speed Shift value from 60 to 30 dropped the loaded download latency by ~20ms on average based upon the Waveform Bufferbloat test: https://www.waveform.com/tools/bufferbloat

This is with a Comcast 1.2Gb download speed (real value is 1.4Gb).

-

Hmm, significant then.

-

I've not experienced anything like that, albeit I have more cores. I do have HyperThreading off though, so I wonder if that makes a difference?

-

@RobbieTT On the LAN side everything is high-powered 10Gb devices, so maybe I'm just pushing the router a bit more.

-

@InstanceExtension Perhaps it is a difference in workload, but my LANs are also 10GbE, plus I use bi-directional FQ_CoDel and I have the additional pfSense burden of limiting my PPPoE WAN to a single core.

-

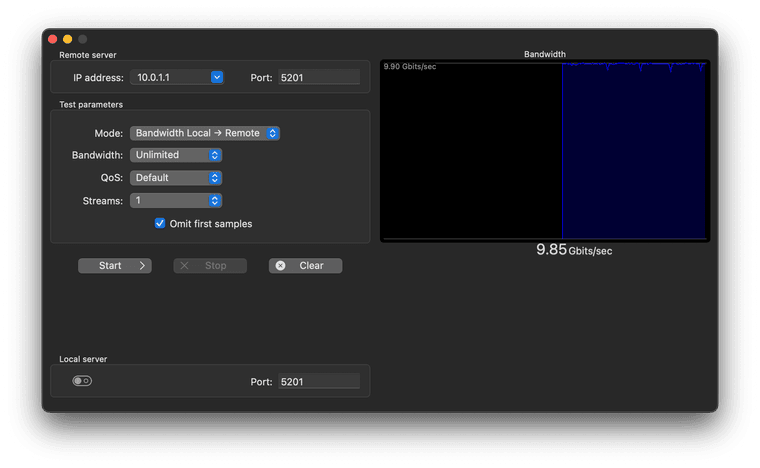

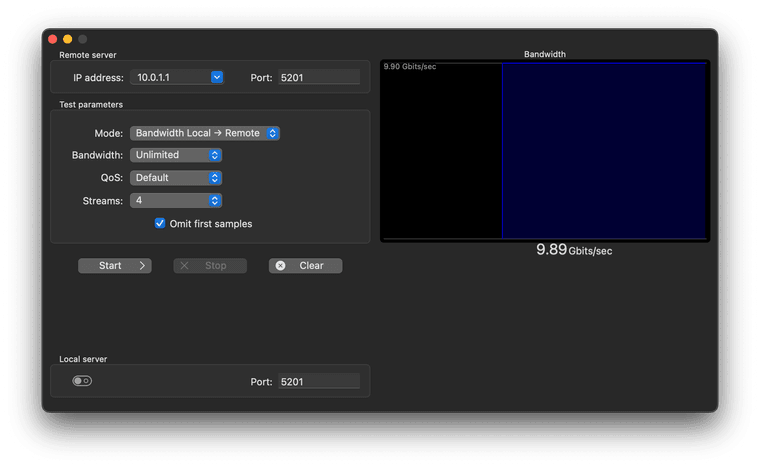

It's definitely a trade-off, and the type of workload / environment matters as well. By favoring power savings over performance I also noticed an increase in latency, but it was very small: maybe an additional 200 microseconds (0.2ms) or so to the first hop (i.e. the dpinger gateway ping), which is probably due to the CPU sitting at minimum frequency the majority of the time. On the WAN side I have a symmetric 2Gbit fiber circuit and have not seen any increase in latency or decrease in performance there.

Where I have noticed a decrease in performance is when running an iperf3 test between two 10Gbit hosts located on different internal network segments (i.e. basically just L3 routing). Prior to adjusting the Speed Shift settings, I could max out the bandwidth (~9.4Gbit/s) between the hosts with one iperf3 stream (P=1). By favoring power savings, I now see closer to 8.5-9.0Gbit/s (on average) with a single stream. Increasing to two or more streams maxes out the bandwidth again. Perhaps the load of just one stream (or maybe iperf3 in general) isn't great enough to push the CPU into the highest frequencies when power saving is favored.

In any case, it's currently not a limitation because I don't need to route at 10Gbit line speed. Once WAN speeds increase to that level I will probably have to adjust / tweak the Speed Shift settings again. In the meantime, with a system that's fairly lightly loaded most of the time, I'm fine with accepting a slight increase in latency and slight decrease in performance in exchange for 2-3 degrees C lower temperatures (on average), along with decreased power consumption.
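For anyone wanting to repeat the single-stream vs. multi-stream comparison, iperf3 can emit JSON (`iperf3 -c <host> -P 1 -J`), which makes it easy to compare runs at different Speed Shift settings. A rough sketch of pulling the received throughput out of that output follows; the function name and the sample figure are made up for illustration, and only the `end` / `sum_received` / `bits_per_second` fields of a TCP test are assumed:

```python
import json


def iperf3_received_gbits(json_text: str) -> float:
    """Return receiver-side throughput in Gbit/s from `iperf3 -J` output.

    Assumes a TCP test, where the summary lives under
    end -> sum_received -> bits_per_second.
    """
    result = json.loads(json_text)
    bits_per_second = result["end"]["sum_received"]["bits_per_second"]
    return bits_per_second / 1e9


if __name__ == "__main__":
    # Hypothetical, heavily trimmed sample; real output has many more fields.
    sample = '{"end": {"sum_received": {"bits_per_second": 8.7e9}}}'
    print(f"{iperf3_received_gbits(sample):.2f} Gbit/s")
```

Running the same test with `-P 1` and `-P 2` and feeding both outputs through this would give directly comparable numbers.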

-

@tman222

With mine set at 80% I don't see any change in throughput when routing, with 1 or 4 streams. It is a pure flat line at 9.90 Gbps. If I generate 10GbE traffic using the router as the server or client, I can detect a small ripple in the graph with 1 stream, but the 9.90 Gbps average remains:

4 streams is still a flat line though:

If I simultaneously ping a gateway using the same link I do see a small increase, as you would expect when saturating it with an iPerf test:

-

Hi @RobbieTT - that's interesting! Are those tests done using 1500 byte packets or a larger MTU? Do you get similar results if testing with a client behind the router vs. from the router?
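The MTU matters here because the best-case TCP throughput on a 10GbE link depends on how much of each frame is payload. A back-of-the-envelope sketch, assuming IPv4, TCP with the timestamp option enabled, and no VLAN tag (all names below are just for illustration):

```python
# Per-frame bytes on the wire that are not part of the IP packet:
# Ethernet header (14) + FCS (4) + preamble/SFD (8) + inter-frame gap (12).
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12

# Bytes inside the MTU that are not application data:
# IPv4 header (20) + TCP header (20) + TCP timestamp option (12).
IP_TCP_HEADERS = 20 + 20 + 12


def tcp_goodput_gbits(mtu: int, line_rate_gbits: float = 10.0) -> float:
    """Theoretical best-case TCP goodput for a given MTU and line rate."""
    payload = mtu - IP_TCP_HEADERS
    wire_bytes = mtu + ETHERNET_OVERHEAD
    return line_rate_gbits * payload / wire_bytes


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {tcp_goodput_gbits(mtu):.2f} Gbit/s")
```

Under those assumptions a 1500-byte MTU tops out around 9.41 Gbit/s and a 9000-byte MTU around 9.90 Gbit/s, which would be consistent with the two sets of figures in this thread, though that is only an inference.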

-

Going via the router or to or from the router makes no difference at 10 GbE, other than the slight ripple you can see in the graphs when you are getting the router to produce the traffic.

Same for larger MTU (which I make use of) as the PPS is high enough for small packets within the 10 GbE cap I run at.

I don't have suitable equipment to produce results at 25 GbE, but when running above 10 GbE I would expect to see a clear delta between pure LAN-to-LAN routing and generating traffic from the router, like you do on low-power CPUs (albeit at much lower speeds in those cases).

Real-world traffic through the WAN would be a lot lower due to the firewall, PPPoE limits and the limitations of pfSense/BSD at high bandwidths. I don't have a trustworthy means of testing, just enough to produce indicative results.

The platform and pfSense could do more if Netgate invested time and effort into using QAT in userspace; at the moment, pfSense is still using the CPU for work that really should be directed at the dedicated silicon. To still have the PPPoE weakness after all these years is another black mark against pfSense/BSD.

-

Figured I would join in on the fun.

I do notice a little added latency on speedtest.net, with the ping on download going from 4-5ms to 7-8ms, but in web browsing and downloading I still get the same speeds.

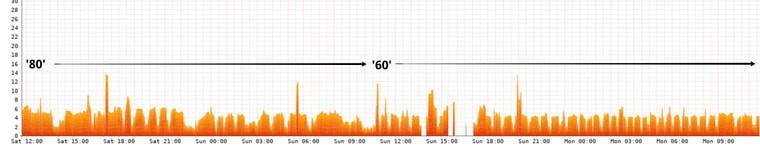

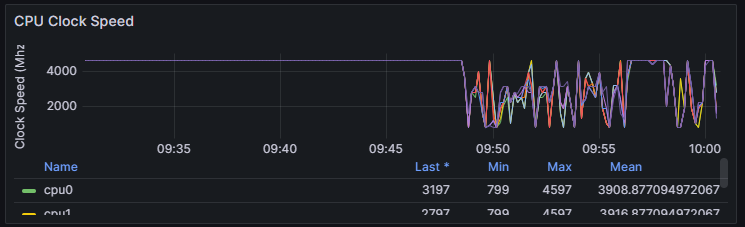

As you can see from my graphs, setting it to 80 allows the CPU to clock down to 800 MHz when idle and only scale up to 4.6 GHz when under regular speed test load.

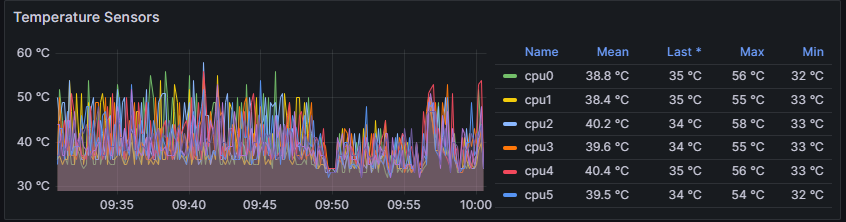

This also has a nice effect on temps.

Before, you can see the clock speed was a constant 4.6 GHz (Intel 9700K) and it pretty much never left that speed, at least whenever telegraf queried it.

I am going to leave this at 80 and see how it feels during normal usage. Having this level of control over CPU usage really shifts the mindset towards having a much more powerful CPU, since the performance is there when you need it and it doesn't really cost much to have it there and waiting.

BTW - this is with telegraf, pfBlockerNG, Suricata, and ntopng all running in the background, which was probably what held the CPU at 4.6 GHz at a setting of 50.

-

@bigjohns97 - thanks for sharing those results. I'd be curious: since you have an Intel "K" CPU, do you have MultiCore Enhancement (or a similar name; this is what Asus calls it) enabled in the motherboard's BIOS? As I understand it, this setting turbos the cores more aggressively (i.e. allows all cores to run at maximum turbo frequency) and is now often enabled by default. I can see this being useful for gaming or other heavily CPU-bound workloads, but maybe not for a router/firewall. I recently disabled this setting on a desktop machine and temps dropped another 2-3 degrees Celsius with no noticeable impact on performance.

-

@tman222 I do have MultiCore Enhancement enabled in the BIOS. I want the CPU to be able to scale as fast as Intel intended, but only when it is absolutely necessary.

I am hoping this 80 setting does exactly that.

All my pfSense machines are old gaming boxes :)