No State Creator Host IDs visible

-

I checked on another updated (2.7.1) firewall pair. Same behaviour.

On a firewall pair that has not yet been updated (2.6.0) there are pfsync nodes listed.

(assuming "pfsync nodes" means the same as "state creator host ids" because it's at the same spot in the GUI.) -

I haven't seen that here in my lab cluster pairs but mine all have manually set IDs.

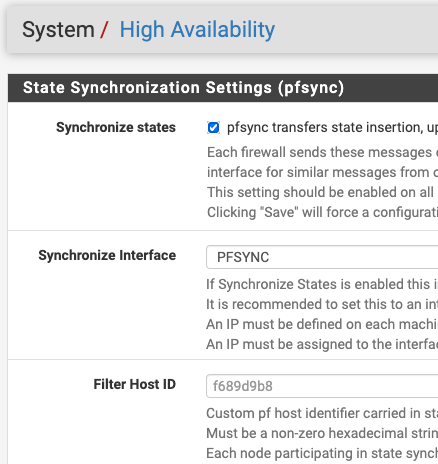

If you set a manual ID on each node at System > High Availability (e.g. primary node is

1, secondary node is2) and then reset the states does it change behavior?When those are unset it should be defaulting to a somewhat consistent string on each node, though.

There was also a new way of fetching the state creators introduced recently which may be a factor.

But if you have multiple clusters also make sure they aren't all sharing the same L2 to exchange state data.

-

Thanks for the advice Jim

I will set manual IDs next maintenance window.

Meanwhile I've upgraded another firewall pair. Here I can see State Creator Host IDs as it should be.

The difference between this pair (ok) and the pairs without IDs (nok):

- ok FWs are recently installed (2.6.0) virtual firewalls while nok FWs are older physical installations that have seen many upgrades.

- ok FWs have almost no ifs/states/workload while the nok FWs have manymany IFs, lots of states etc.

(L2 for data-stuff is separated from L2 for sync-stuff on all installations.)

I will keep you posted.

-

On the ones that are not working as expected, check the following commands from an SSH or console shell prompt:

grep hostid /tmp/rules.debugEven if you didn't set one manually it should be there, and it should be the last 8 characters of the NDI shown on the dashboard.

pfctl -scpfctl -vvss | awk '/creatorid:/ { print $4; }' | sort -uThe output of the last two commands should be identical and really should match the host ID from the first command. The second command will take much longer to process on systems with a lot of content in the state table.

On older systems before we set the host ID explicitly (Before Plus 22.05 / CE 2.7.0) the hostid/creatorid changed to a new random value on every filter reload so there were many different values even though the hosts themselves didn't change.

-

I have run the commands on 6 firewalls (2 pairs with the issue, 1 pair without the issue).

grep hostid /tmp/rules.debug shows on all devices

set hostid 0x(last8charofNDI)pfctl -sc shows the same output as pfctl -vvss | awk '/creatorid:/ { print $4; }' | sort -u if run on the working pair.

On the pairs with the issue pfctl -sc shows

pfctl: Failed to retrieve creatorsbut pfctl -vvss | awk '/creatorid:/ { print $4; }' | sort -u shows the expected list.

The messages "Failed to retrieve creators" probably is an explanation why the GUI doesn't show the creators even if they're there.

-

Curious. How many states are in the state table on the failing systems? Are there a lot more there or about the same amount?

EDIT:

If the output of

pfctl -vvss | awk '/creatorid:/ { print $4; }' | sort -ushows more than 2 creators, it would help to see what else is present in that output.It would also be helpful to get some debugging output from a run of

pfctl -scon a failing system , for example:truss -o creatordebug.txt -f pfctl -scAnd then attach the

creatordebug.txtfile here. -

@jimp said in No State Creator Host IDs visible:

truss -o creatordebug.txt -f pfctl -sc

Hi. We have exactly the same problem.

We have a 2.6.0 pair that we are upgrading to 2.7.2

We have upgraded the backup. When we test the CARP Maintenance on Master or going to the CARP Status on Backup, the backup spamkernel: Warning: too many creators!The GUI CARP Status display no state creator Host ID

[2.7.2-RELEASE][admin@backup]/root: grep hostid /tmp/rules.debug set hostid 0x... [2.7.2-RELEASE][admin@backup]/root: pfctl -sc pfctl: Failed to retrieve creators [2.7.2-RELEASE][admin@backup]/root: pfctl -vvss | awk '/creatorid:/ { print $4; }' | sort -u 06d0... (64 lines of 8 alphanumeric char)And I have attached the creatordebug.txt of this command :

2.7.2-RELEASE][admin@backup]/root: truss -o creatordebug.txt -f pfctl -sc pfctl: Failed to retrieve creatorsAs I can see in it :

97872: ioctl(3,DIOCGETCREATORS,0x93ade72a258) ERR#28 'No space left on device'here is the result of df

[2.7.2-RELEASE][admin@backup]/root: df -h Filesystem Size Used Avail Capacity Mounted on pfSense/ROOT/default 194G 817M 193G 0% / devfs 1.0K 0B 1.0K 0% /dev pfSense/tmp 193G 1.3M 193G 0% /tmp pfSense/var 193G 280K 193G 0% /var pfSense 193G 96K 193G 0% /pfSense pfSense/home 193G 276K 193G 0% /home pfSense/var/cache 193G 96K 193G 0% /var/cache pfSense/var/log 193G 3.1M 193G 0% /var/log pfSense/var/tmp 193G 104K 193G 0% /var/tmp pfSense/var/empty 193G 96K 193G 0% /var/empty pfSense/reservation 214G 96K 214G 0% /pfSense/reservation pfSense/var/db 193G 7.5M 193G 0% /var/db pfSense/ROOT/default/cf 193G 2.2M 193G 0% /cf pfSense/ROOT/default/var_cache_pkg 193G 228K 193G 0% /var/cache/pkg pfSense/ROOT/default/var_db_pkg 193G 4.9M 193G 0% /var/db/pkg tmpfs 4.0M 304K 3.7M 7% /var/run devfs 1.0K 0B 1.0K 0% /var/dhcpd/devIt's probably linked : when we launch CARP maintenance on Master (v2.6.0) it spam php-fm every second with this log

php-fpm[43047]: /rc.carpbackup: HA cluster member "(X.X.X.X@lagg1.VLAN): (WAN)" has resumed CARP state "BACKUP" for vhid 2During 10 minutes and then everything seems working like a charm.

(vhid 2 is a CARP interface where there is 131 IP Alias)Thanks for your help.

-

The "no space left" there probably isn't referring to disk space directly.

Thanks for getting the truss output, I created a Redmine issue for this and included some of the details there:

-

I think I see how the 'No space left on device' error can happen if we have many creator ids.

It's already fixed, because current versions of that code use the netlink to communicate, so that specific bug is already gone.That's not the root cause of this issue though, because we ought to have 1 or 2 (distinct) creator ids and no more, and the 'Warning: too many creators!' kernel message means we have 16.

A full state table output (i.e. pfctl -ss -vvv) might give us clues about why there are so many different creator ids.

-

@kprovost said in No State Creator Host IDs visible:

A full state table output (i.e. pfctl -ss -vvv) might give us clues about why there are so many different creator ids.

The file weighs 15 Mo so I cannot upload it here. Here is a link to download it :

https://filesender.renater.fr/?s=download&token=b5711bf0-1ff8-410a-83ff-47934c64a013

-

@marcolefo Thanks.

We do see many (97!) different creatorids here. That at least explains the kernel warning, but I do not understand how we'd end up in that state.

One of the first things to check is to see if both the primary and secondary keep a consistent hostid. Can you check that across reboots?

It's listed in thepfctl -si -voutput, and I'd expect it to be set through the rules (set hostid xxxxxin /tmp/rules.debug).It's also interesting that the majority of states in your file (those with hostid cad3f8bd) do not have rtable set, and those that do have one of the other hostids. That strongly suggests the two facts are related, but I'm struggling to form a theory that explains those facts.

Are your primary and secondary nodes running the same software version?

-

@kprovost said in No State Creator Host IDs visible:

Are your primary and secondary nodes running the same software version?

Not for now. One is 2.6.0 and the other one is 2.7.2.

We are going to upgrade the first one this afternoon. -

@marcolefo Well, that's supposed to just work, but there's functionally several years of code evolution between 2.6.0's pf and 2.7.2. It's entirely possible that's causing this problem.

It'd be useful to know if the problem remains or not once both nodes are running 2.7.x (ideally the same version, but 2.7.0 is vastly closer to 2.7.2 already).

-

@kprovost said in No State Creator Host IDs visible:

It'd be useful to know if the problem remains or not once both nodes are running 2.7.x (ideally the same version, but 2.7.0 is vastly closer to 2.7.2 already).

So now the 2 pfsense are 2.7.2.

The errorkernel: Warning: too many creators!is always here. It appears when we go Status > CARP (failover)The GUI CARP Status display no state creator Host ID

[2.7.2-RELEASE][admin@master]/root: grep hostid /tmp/rules.debug set hostid 0xf689d9b8 [2.7.2-RELEASE][admin@backup]/root: grep hostid /tmp/rules.debug set hostid 0xcad3f8bd [2.7.2-RELEASE][admin@master]/root: pfctl -sc pfctl: Failed to retrieve creators [2.7.2-RELEASE][admin@backup]/root: pfctl -sc pfctl: Failed to retrieve creatorsShould I try to set the filter host ID here ?

-

@marcolefo The hostid ought to be stable across reboots even if it's not configured, so let's confirm that first. (I.e. when you reboot the standby firewall does it have the same hostid in /tmp/rules.debug when it comes back?)

-

@kprovost said in No State Creator Host IDs visible:

@marcolefo The hostid ought to be stable across reboots even if it's not configured, so let's confirm that first. (I.e. when you reboot the standby firewall does it have the same hostid in /tmp/rules.debug when it comes back?)

Yes it's stable across reboots

[2.7.2-RELEASE][admin@backup]/root: grep hostid /tmp/rules.debug set hostid 0xcad3f8bd [2.7.2-RELEASE][admin@backup]/root: uptime 3:08PM up 3 mins, 2 users, load averages: 1.40, 0.76, 0.31 -

Okay, that's good to know.

I've had another look at the state listing.

All of the the states with unexpected creatorids list an rtable of 0, none of the others do. I suspect this is a clue.

I believe those states to be imported through pfsync.There's been a pfsync protocol change between 2.7.0 and 2.7.2. That could potentially have resulted in states being imported with invalid information, but that's highly speculative. I've also not been able to reproduce that in a setup with pfsync between a 2.7.0 and 2.7.2 host. If that were the cause it should also have gone away when both pfsync participants were upgraded to 2.7.2.

It's a bit of a long shot, but it might be worth capturing pfsync traffic, both so we can inspect it (be sure to capture full packets) and so we can verify that there's nothing other than the two pfsense machines sending pfsync traffic.

-

@kprovost sorry I did'nt post since my last answer, I was out of my office.

We do not have Warning: too many creators message since 2023-12-22.

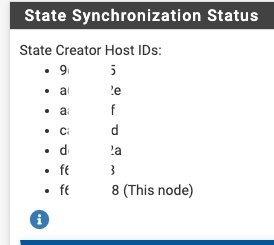

And in GUI we have now State Creator Host IDs (on the 2 nodes).

pfctl -si -vdisplays the Creator IDsI have tried to enter and leave persistent carp mode with no problem.

I think the problem is solved :)

But I don't know why it did not display correctly the last time.Thanks a lot for your help and happy new year.

-

@marcolefo That's good to know.

Given that the problem appears to have gone away after you moved both machines to the same version I think that's another data point suggesting that's at the root of this issue.

I still can't reproduce this, but it's good to know anyway. -

As this problem bit me several times now when upgrading CARP-clusters I tried to troubleshoot it a bit more. Here is what I found out:

I read somewhere that there were protocolchanges in pfsync between 2.7.0 and 2.7.2. So if you upgrade the nodes one by one there is the situation where one system is already on 2.7.2 and the other one still on 2.7.0. During this time 2.7.0 tries to sync states to 2.7.2 and vice versa. Due to the versionmismatch the statetable seems to have invalid records in the list that causes the too many creators error and causes the statesync to fail. Even after both nodes reach 2.7.2 those invalid records still remain in the statetables, which then still breaks the sync.

The resolution to this is:

Either reboot both nodes at the same time, so the broken records are not synced back and forth. Downtime about 1-2 minutes on fast systems.

Or disable the statesync via pfsync on both nodes (system>high availability), reset the statetables on both nodes (Diagnostics>States, Reset states). Then reenable the statesync again. Downtime a few seconds as only the dropped connections need to be reestablished.Unfortunately both procedures cause a disruption of traffic, though the second way of getting pfsync going again is rather short. An additional impacti is, that during the upgradeprocess statesync is broken from the time the first node reboots till the states get cleared on both nodes after both nodes are upgraded.

Hope this helps some people that run into the same issues.

Regards

Holger