Broadcom 5720

-

If you choose to pass through only one NIC, will it attach correctly with either of them?

If you run bare metal, do both NICs come up?

-

It doesn't allow passing through just one port, because both are in the same IOMMU group.

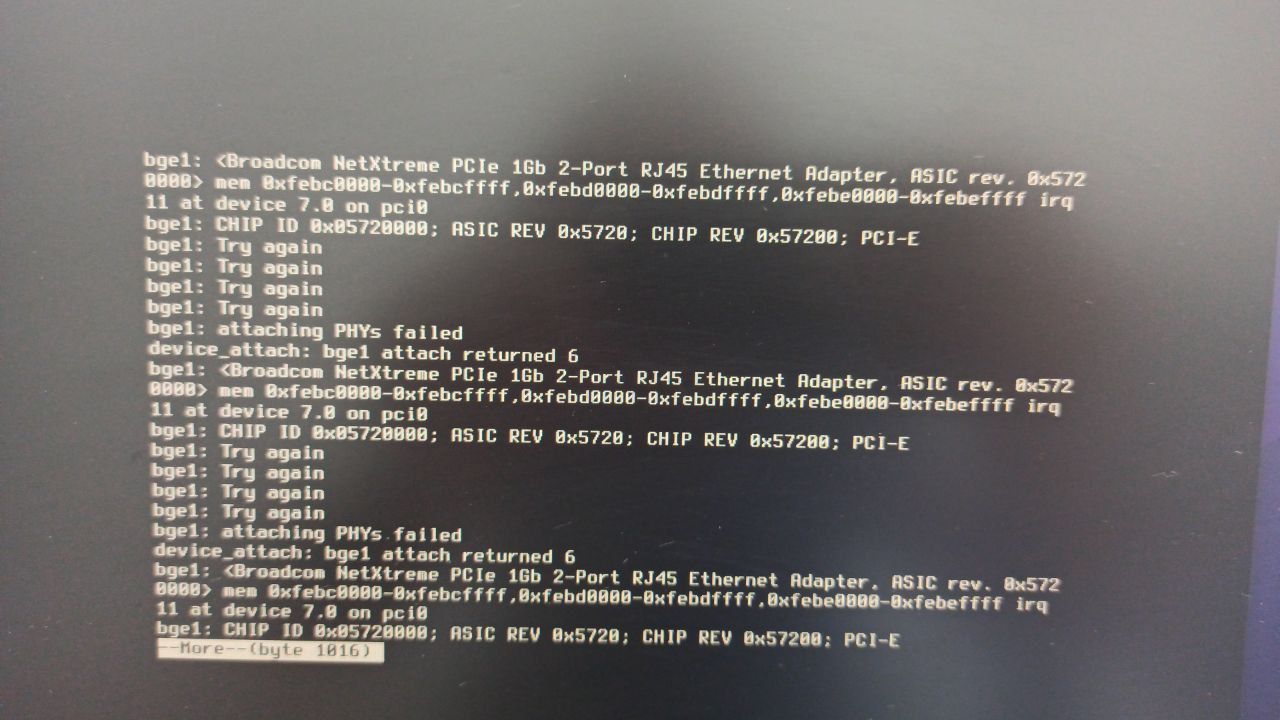

See the message that appears when trying to start the VM with only one of the interfaces:

"Please ensure all devices within the iommu_group are bound to their vfio bus driver."

I haven't tested pfSense on bare metal, only virtualized. But I brought up a VM with FreeBSD 14 and it had the same problem.

I thought it could be something in the host's Linux kernel, but I did all the device mapping as described in the KVM documentation.
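To check the mapping on the host side, the IOMMU group layout can be read straight from sysfs. The bash sketch below (hypothetical function name, read-only) lists every device in every group with its vendor:device ID and the driver it is currently bound to. Going by the iommu_group error above, the devices that share a group with the NIC should all show driver=vfio-pci before the VM will start.

```bash
#!/usr/bin/env bash
# Read-only sketch: print every PCI device in every IOMMU group, with its
# vendor:device ID and the host driver it is currently bound to.
# ROOT defaults to /sys/kernel/iommu_groups; it is a parameter only so the
# function can be pointed at a copy of that tree.

# The body runs in a subshell ( ... ), so "shopt -s nullglob" does not leak.
list_iommu_groups() (
    shopt -s nullglob   # empty or missing directories expand to nothing
    root="${1:-/sys/kernel/iommu_groups}"
    for group in "$root"/*; do
        for dev in "$group"/devices/*; do
            # sysfs stores IDs as hex strings such as "0x14e4"
            id="$(<"$dev/vendor"):$(<"$dev/device")"
            id="${id//0x/}"
            if [[ -L "$dev/driver" ]]; then
                target="$(readlink "$dev/driver")"
                driver="${target##*/}"   # .../drivers/vfio-pci -> vfio-pci
            else
                driver="(none)"
            fi
            printf 'group %s\t%s\t[%s]\tdriver=%s\n' \
                "${group##*/}" "${dev##*/}" "$id" "$driver"
        done
    done
)
```

Usage: source the file and run list_iommu_groups, then look at every entry that shares a group number with the 14e4 (Broadcom) functions.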

-

Hmm, it might be something for the FreeBSD forum then, if it affects the vanilla install too.

-

It seems to me like a bug that affects even bare metal: https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=268151

-

Ah, yup. Upstream bug then.

-

@robsonvitorm FYI, I ran into the same thing with an HPE 331T, which is based on a similar Broadcom chipset (BCM5719). Under passthrough, bge1 fails to attach:

```
msi: routing MSI IRQ 27 to local APIC 0 vector 54
bge1: using IRQ 27 for MSI
bge1: CHIP ID 0x05719001; ASIC REV 0x5719; CHIP REV 0x57190; PCI-E
bge1: Disabling fastboot
bge1: Try again
bge1: Try again
bge1: Try again
bge1: Try again
bge1: attaching PHYs failed
device_attach: bge1 attach returned 6
bge1: <HP Ethernet 1Gb 4-port 331T Adapter, ASIC rev. 0x5719001> mem 0xfebc0000-0xfebcffff,0xfebd0000-0xfebdffff,0xfebe0000-0xfebeffff irq 10 at device 9.0 on pci0
bge1: APE FW version: NCSI v1.4.22.0
bge1: attempting to allocate 1 MSI vectors (8 supported)
```

Installing on bare metal, it works fine:

```
bge1: using IRQ 129 for MSI
bge1: CHIP ID 0x05719001; ASIC REV 0x5719; CHIP REV 0x57190; PCI-E
bge1: Disabling fastboot
miibus1: <MII bus> on bge1    <------- Pass Thru never gets this far
brgphy1: <BCM5719C 1000BASE-T media interface> PHY 2 on miibus1
brgphy1: OUI 0x001be9, model 0x0022, rev. 0
brgphy1: 10baseT, 10baseT-FDX, 100baseTX, 100baseTX-FDX, 1000baseT, 1000baseT-master, 1000baseT-FDX, 1000baseT-FDX-master, auto, auto-flow
bge1: Using defaults for TSO: 65518/35/2048
bge1: bpf attached
bge1: Ethernet address: 98:f2:b3:03:f1:75
bge2: <HP Ethernet 1Gb 4-port 331T Adapter, ASIC rev. 0x5719001> mem 0xb0050000-0xb005ffff,0xb0040000-0xb004ffff,0xb0030000-0xb003ffff irq 16 at device 0.2 on pci1
bge2: APE FW version: NCSI v1.4.22.0
```

-

@Casper042 Apparently the problem lies with the hypervisor, and the FreeBSD folks don't seem too concerned about resolving it. If you can add your findings to the Bugzilla report, who knows, maybe they'll decide to fix it or show us where we're going wrong in the configuration.

https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=268151

-

@robsonvitorm Hey, quick FYI: I installed Proxmox VE 8.1, added intel_iommu=on in GRUB, and after a reboot I could install pfSense and see all 4 of my bgeX ports.

Since Proxmox and Ubuntu are both based on Debian, it makes me wonder if I'm just missing something in the virtualization config...
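If something in the Ubuntu host setup is missing, a first thing to rule out is that the GRUB change never took effect. A minimal bash sketch (hypothetical function names) that checks the running kernel's command line for the parameters used in this thread and counts the IOMMU groups the kernel created; it only reads /proc/cmdline and sysfs.

```bash
#!/usr/bin/env bash
# Confirm that the running kernel was booted with the parameters added to
# GRUB_CMDLINE_LINUX_DEFAULT, and that the kernel created IOMMU groups.

# check_kernel_params CMDLINE PARAM... -> prints ok/MISSING per PARAM and
# returns 1 if any PARAM is absent.
check_kernel_params() {
    local cmdline=" $1 " param missing=0
    shift
    for param in "$@"; do
        # Match whole space-separated tokens, so "iommu=on" does not
        # match inside "intel_iommu=on".
        if [[ "$cmdline" == *" $param "* ]]; then
            printf 'ok       %s\n' "$param"
        else
            printf 'MISSING  %s\n' "$param"
            missing=1
        fi
    done
    return "$missing"
}

# iommu_group_count [ROOT] -> number of IOMMU groups; 0 usually means the
# IOMMU is not enabled (or not supported) on this boot.
iommu_group_count() {
    local root="${1:-/sys/kernel/iommu_groups}"
    find "$root" -mindepth 1 -maxdepth 1 2>/dev/null | wc -l
}
```

On the host, after rebooting: check_kernel_params "$(cat /proc/cmdline)" intel_iommu=on kvm.ignore_msrs=1, then iommu_group_count.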

-

@Casper042 Sorry, did you install Proxmox on Ubuntu, and did all four interfaces work in the virtualized pfSense? If so, we could check whether Ubuntu applies a patch to the Linux kernel that makes this passthrough work.

-

I have the same use case: pfSense running on KVM/Linux, using PCI passthrough to assign a BCM5720 dual-port NIC. I thought the second interface not working was driver-related, but after reading this thread I tried the card on bare metal and both ports work fine.

Whatever is happening is definitely related to the hypervisor or the host machine's Linux kernel (my platform is TrueNAS SCALE). I'd love to be able to pin this down and get things working properly...
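To pin down which port fails on the guest side, the FreeBSD boot messages can be summarised per bge unit. A minimal POSIX sh/awk sketch with a hypothetical function name; it assumes the message formats quoted in this thread ("bgeN: Ethernet address:" for a unit that attached, "bgeN: attaching PHYs failed" for the passthrough failure) and reads the log on stdin, so it should also run under the guest's /bin/sh.

```sh
#!/bin/sh
# Summarise, per bge unit, whether it attached, using FreeBSD boot
# messages on stdin. Assumes the message formats quoted in this thread.

summarize_bge_attach() {
    awk '
        match($0, /^bge[0-9]+: /) {
            unit = substr($0, 1, RLENGTH - 2)   # "bge1: " -> "bge1"
            seen[unit] = 1
            if (index($0, "Ethernet address:"))     attached[unit] = 1
            if (index($0, "attaching PHYs failed")) phy_failed[unit] = 1
        }
        END {
            for (u in seen) {
                if (u in attached)        print u, "attached"
                else if (u in phy_failed) print u, "FAILED: attaching PHYs failed"
                else                      print u, "no Ethernet address line seen"
            }
        }
    ' | sort
}
```

Usage (file name hypothetical): . ./bge_attach.sh; dmesg | summarize_bge_attach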

-

@robsonvitorm

First off, to be hyper specific, my test hardware is a Lenovo m720q with an AJ??929 x8 riser adapter and an HP/HPE 331T (Broadcom 5719, 4 ports).

pfSense on bare metal = All 4 ports work as expected, no PHY error on boot.

pfSense on Proxmox VE 8.1 (Debian-based OS) = All 4 ports work as expected, intel_iommu=on set in GRUB.

pfSense on Ubuntu 22.04 LTS (Debian-based OS) = Only bge0 works; bge1 fails to initialize. intel_iommu=on and kvm.ignore_msrs=1 set in GRUB.

Intel IOMMU:

Edit /etc/default/grub

Append "intel_iommu=on" to the end of GRUB_CMDLINE_LINUX_DEFAULT (inside the quotes).

MSRs:

Edit /etc/default/grub

Append "kvm.ignore_msrs=1" to the end of GRUB_CMDLINE_LINUX_DEFAULT (inside the quotes).

After either change, regenerate the GRUB config and reboot:

```
sudo grub-mkconfig -o /boot/grub/grub.cfg
```

On the Ubuntu host, I also blacklisted the Broadcom tg3 driver (the Linux counterpart of FreeBSD's bge) so that bare-metal Ubuntu would not load it and claim the NIC ports:

Edit or create /etc/modprobe.d/vfio.conf:

```
# Prevent the Broadcom tg3 driver from loading on the bare-metal host OS, keeping the card's ports free for VM passthrough
blacklist tg3
options vfio-pci ids=14e4:1657
```
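The ids= value in vfio.conf is the BCM5719 on the 331T (14e4:1657). The BCM5720 from the first post enumerates with a different device ID (14e4:165f in the PCI ID database), so it is worth reading the value off the actual host. A small bash sketch (hypothetical helper name) that builds the line from lspci -nn output; it assumes every Broadcom Ethernet function on the host should go to vfio-pci, which only holds if the host itself does not need any Broadcom NIC.

```bash
#!/usr/bin/env bash
# Build the "options vfio-pci ids=..." line from `lspci -nn` output on stdin.
# Only Ethernet controllers (class [0200]) from the given vendor are kept;
# the vendor defaults to Broadcom (14e4).

vfio_ids_line() {
    local vendor="${1:-14e4}" ids
    ids="$(grep -F 'Ethernet controller [0200]' \
        | grep -oE "\[${vendor}:[0-9a-f]{4}]" \
        | tr -d '[]' \
        | sort -u \
        | paste -s -d, -)"
    [ -n "$ids" ] || return 1        # no matching NIC found
    printf 'options vfio-pci ids=%s\n' "$ids"
}
```

Usage: lspci -nn | vfio_ids_line, then paste the printed line into /etc/modprobe.d/vfio.conf. A multi-port card normally exposes one ID for all its functions, so sort -u collapses them to a single entry.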

Then regenerate the initramfs so the blacklist and vfio-pci options apply at boot:

```
sudo update-initramfs -u
```

This step did not seem to be needed with Proxmox.

These OS changes came from a thread I found on GPU passthrough, which I adapted for NIC passthrough:

https://askubuntu.com/questions/1406888/ubuntu-22-04-gpu-passthrough-qemu

(Though I just noticed that I did not do Step 14, so I will have to swap that drive back in and see if it makes any difference.)

-Casper