DNS_PROBE_FINISHED_NXDOMAIN sporadically for anywhere from 30 secs to 10 min; works flawlessly at all other times

-

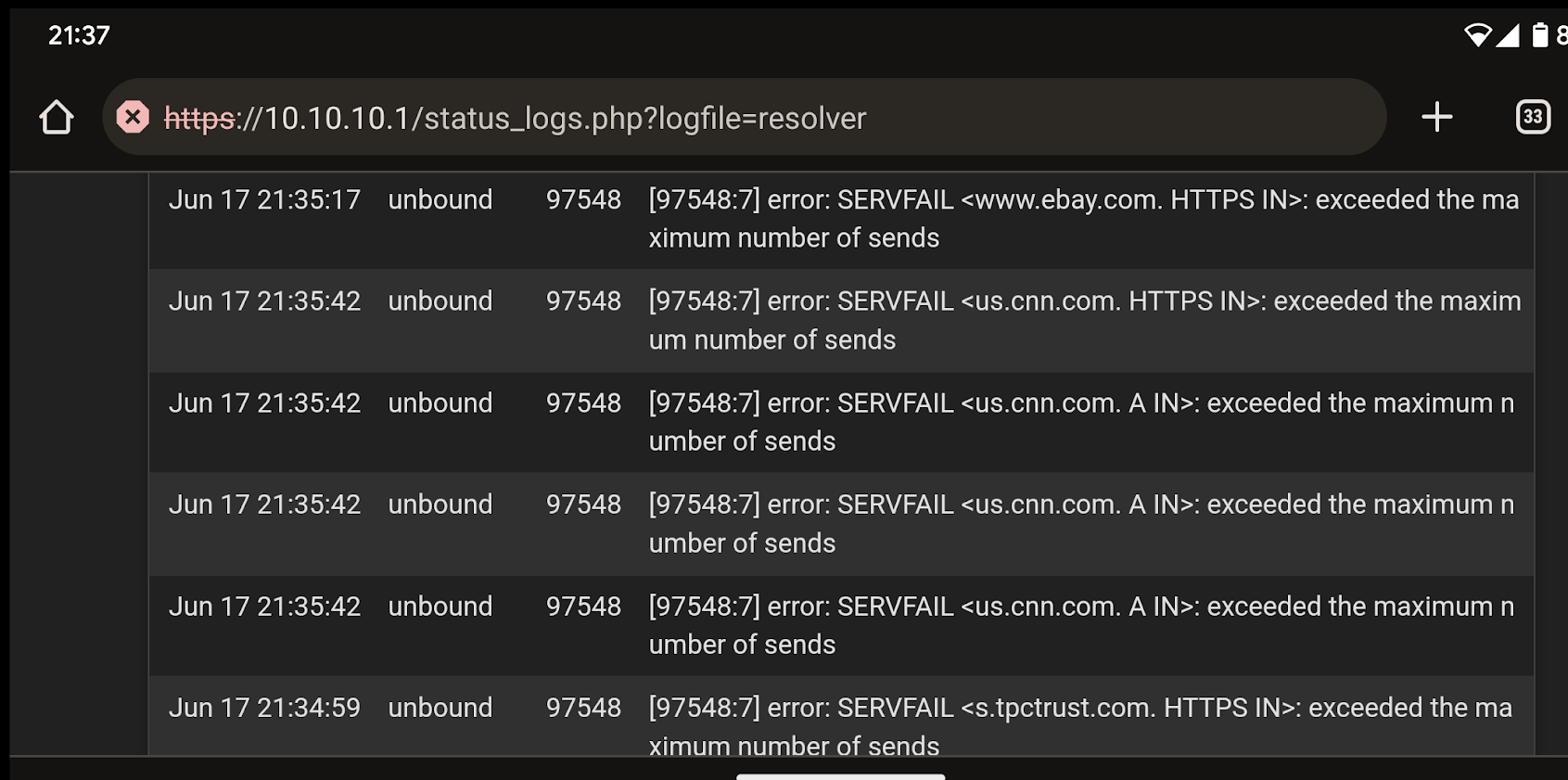

Ugh. Although it's infrequent, the issue has occurred a couple of times over the last couple of days. Yesterday, in bed, my wife complained about it happening in the eBay app (and in Chrome, and in the public library's audiobook app), and I feverishly started opening random websites from my Google feed (swipe left on Android). I eventually got an NXDOMAIN error, but I was able to open 5-6 links both before AND after the error. It's very odd to me that my wife seems to run into the problem far more often and more severely than I do. Resetting the DNS Resolver restored service immediately.

-

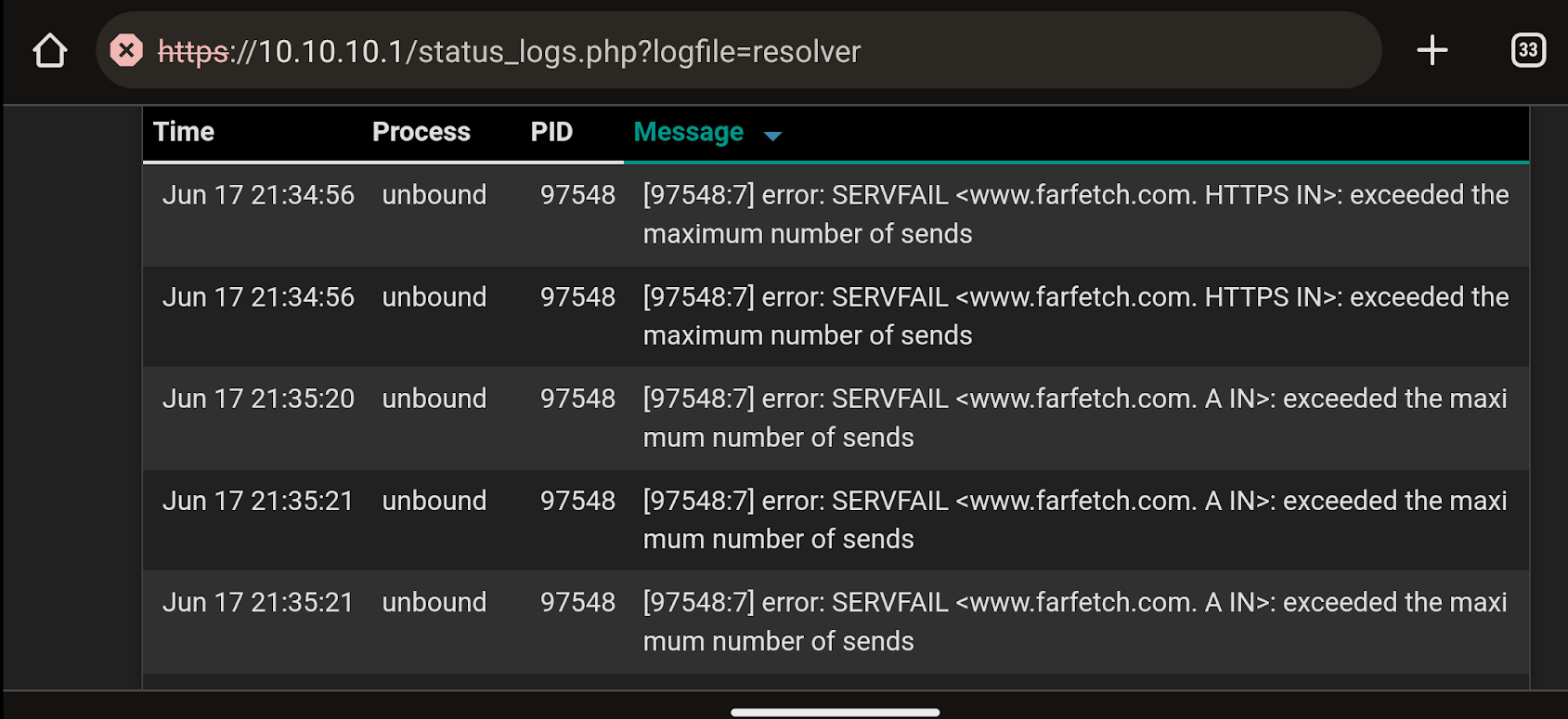

@RickyBaker It seems "exceeded the maximum number of sends" is the root of the problem.

You might want to bump your log level in unbound up to 5. That might give you more insight into the actual failure when you see the SERVFAIL and its reason.

I would probably set `do-ip6: no` as well.

Are you seeing a crazy amount of queries? Is something bombing unbound with queries before those failures?

-

Quite a long thread here on the unbound GitHub account that I think might be related to the OP's issue: https://github.com/NLnetLabs/unbound/issues/362.

From my reading of the long thread, unbound itself is producing the SERVFAIL messages, at times inappropriately, as a byproduct of trying to "protect" the client from abuse. The thread is primarily an argument about DNSBL anti-spam logic and the resulting DNS lookups, but I think the underlying logic inside unbound itself may be part (or maybe even all) of the issue the OP is seeing randomly.

There are also a couple of suggested unbound config changes in that thread that might help mitigate the issue. Of note is the parameter to greatly increase the "maximum number of sends" limit.

For what it's worth, I found reports of this same behavior on "the other Sense" product. That leads me to strongly suspect the root cause is something in unbound that specific configurations, or even specific queries, trigger.

-

@bmeeks I would concur. From a quick look into that error, I also got the sense that IPv6 failures or other IPv6 issues could bring it on, which is why I would suggest telling unbound not to use IPv6. That sure isn't going to break anything.

I have never run into this issue, but then again I have IPv6 set to no. So maybe that is why I haven't seen it?

Something triggers it for sure. What that something is, is the question ;)

I don't see how increasing the number of sends could cause any sort of negative issues or make anything worse. I don't have it set to anything other than the default. But then again, my clients don't actually ask pfSense; they ask a Pi-hole in front of pfSense. So maybe a massive number of queries that would trigger the max-sends limit is being held back by Pi-hole. I have seen warnings where clients were throttled in Pi-hole because they made more than 1000 queries in 60 seconds.

Sometimes blocking something from resolving can send clients into panic mode or something, with whatever idiot logic the guy who wrote it had in mind: "Hey, didn't get an answer, let's ask 1000 times in a minute and maybe we'll get one?" hehehe
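To check whether some client is "bombing" unbound the way that Pi-hole warning describes, here is a minimal Python sketch that scans a resolver query log and flags clients exceeding a rate limit within a sliding time window. It assumes query logging is enabled in unbound (`log-queries: yes`) and that each query line looks like the sample in the code comment; that layout is an assumption that depends on your syslog setup, so adjust `QUERY_RE` as needed. `flag_bursty_clients`, its 1000-per-60-seconds defaults (borrowed from the Pi-hole warning above), and the placeholder year are all hypothetical choices for illustration.

```python
import re
from collections import defaultdict, deque
from datetime import datetime

# Assumed layout of an unbound query-log line (log-queries: yes), e.g.
# "Apr 09 18:57:30 unbound[9338:0] info: 192.168.1.50 example.com. A IN"
QUERY_RE = re.compile(
    r"^(?P<ts>\w{3} +\d{1,2} \d{2}:\d{2}:\d{2}) unbound\[[^\]]+\] info: "
    r"(?P<client>\S+) (?P<name>\S+) (?P<qtype>\S+) IN$"
)


def parse_ts(stamp: str, year: int = 2000) -> datetime:
    # Syslog timestamps carry no year, so a placeholder year is prepended.
    return datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")


def flag_bursty_clients(lines, limit=1000, window_s=60):
    """Return {client: peak query count} for every client that sent more
    than `limit` queries within any `window_s`-second window.
    Assumes `lines` are in chronological order."""
    windows = defaultdict(deque)  # client -> timestamps still inside the window
    peaks = {}
    for line in lines:
        m = QUERY_RE.match(line)
        if not m:
            continue  # not a query-log line (errors, debug output, etc.)
        t = parse_ts(m["ts"])
        q = windows[m["client"]]
        q.append(t)
        # Evict timestamps that are window_s seconds or more older than t.
        while (t - q[0]).total_seconds() >= window_s:
            q.popleft()
        if len(q) > limit:
            peaks[m["client"]] = max(peaks.get(m["client"], 0), len(q))
    return peaks
```

Feeding it the lines of an exported resolver log returns only the clients worth a closer look; a deque per client keeps each check proportional to the number of queries in the window rather than the whole log.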

-

@bmeeks said in DNS_PROBE_FINISHED_NXDOMAIN sporadically for anywhere from 30secs to 10min. works flawlessly at all other times:

Quite a long thread here on the unbound GitHub account that I think might be related to the OP's issue: https://github.com/NLnetLabs/unbound/issues/362.

I mentioned that thread @NETlabs a month ago; some of the proposals have already been tested, AFAIK.

@Gertjan said in DNS_PROBE_FINISHED_NXDOMAIN sporadically for anywhere from 30secs to 10min. works flawlessly at all other times:

This very issue (or whatever it is) has its own thread at NLnetLabs (the author of unbound): "exceeded the maximum nameserver nxdomains".

exceeded the maximum nameserver nxdomains = https://github.com/NLnetLabs/unbound/issues/362

-

@Gertjan Very interesting thread. But it really seems to be about two different issues: the NXDOMAIN issue, and then the errors with the max sends.

`Apr 09 18:57:37 unbound[9338:c] error: SERVFAIL <pull-flv-l11-cny.douyincdn.com. A IN>: exceeded the maximum number of sends`

`Apr 09 18:57:37 unbound[9338:8] debug: request has exceeded the maximum number of sends with 33`

I see one post that seems related to this issue specifically: with the verbosity increased, it shows the number of sends that caused the error (33), with the max-sends limit at its default of 32.
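If you raise the verbosity and collect logs like the two lines quoted above, a small parser can tally which domains hit the limit and by how much. This is a minimal Python sketch whose regexes are modeled only on that single quoted sample, so treat the formats as assumptions; `servfail_reasons`, `sends_over_limit`, and `DEFAULT_MAX_SENT` are hypothetical names, with 32 taken from the default limit mentioned in the post.

```python
import re
from collections import Counter

DEFAULT_MAX_SENT = 32  # default max-sends limit cited in the thread

# Modeled on: "... error: SERVFAIL <name. A IN>: exceeded the maximum number of sends"
SERVFAIL_RE = re.compile(
    r"error: SERVFAIL <(?P<name>\S+) (?P<qtype>\S+) IN>: (?P<reason>.+)$"
)
# Modeled on: "... debug: request has exceeded the maximum number of sends with 33"
SENDS_RE = re.compile(r"exceeded the maximum number of sends with (?P<count>\d+)")


def servfail_reasons(lines):
    """Count SERVFAILs per (domain, reason) pair."""
    counts = Counter()
    for line in lines:
        m = SERVFAIL_RE.search(line)
        if m:
            counts[(m["name"], m["reason"].strip())] += 1
    return counts


def sends_over_limit(lines, limit=DEFAULT_MAX_SENT):
    """Return the send counts from verbose debug lines that exceed `limit`."""
    return [
        int(m["count"])
        for line in lines
        if (m := SENDS_RE.search(line)) and int(m["count"]) > limit
    ]
```

Grouping by (domain, reason) keeps "maximum number of sends" failures apart from NXDOMAIN-related ones, which matches the observation that the upstream thread mixes two different issues.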

It also seems internet connection errors could lead to problems, but I was not sure whether that was the NXDOMAIN problem or the max-sends problem.

It seems they see the problem when something is doing a really massive number of lookups, related to SMTP servers, spam checking, etc.

Which is why it would be interesting to see a log of queries: is unbound getting flooded with queries before it fails, or is there maybe a network connectivity issue causing the max-sends problem, where unbound is trying to look something up but the internet connection (or at least the DNS queries) is failing?
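One way to answer that from the logs is to count how many queries were logged in the window just before each SERVFAIL. Below is a minimal Python sketch that assumes you have already extracted query and failure timestamps as seconds (for example with a parser adapted to your own syslog format); `queries_before_failures` and its 60-second default window are hypothetical choices, not anything unbound provides.

```python
from bisect import bisect_left, bisect_right


def queries_before_failures(query_times, failure_times, window_s=60.0):
    """For each failure time, count the queries logged in the closed interval
    [failure - window_s, failure].

    Both inputs are timestamps in seconds; `query_times` must be sorted
    ascending so it can be binary-searched.
    """
    counts = []
    for f in failure_times:
        lo = bisect_left(query_times, f - window_s)  # first query inside the window
        hi = bisect_right(query_times, f)  # one past the last query at or before f
        counts.append((f, hi - lo))
    return counts
```

For example, `queries_before_failures([0, 1, 2, 50, 55, 58, 59], [60, 200], window_s=10)` returns `[(60, 4), (200, 0)]`. A failure preceded by a count far above your usual baseline would point toward a query flood, while a failure with an ordinary count would point more toward upstream connectivity.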

-

@Gertjan said in DNS_PROBE_FINISHED_NXDOMAIN sporadically for anywhere from 30secs to 10min. works flawlessly at all other times:

Mentioned that thread @NETlabs a month ago, some propositions were tested already AFAIK.

I thought the thread seemed vaguely familiar, but I confess I did not scroll back through all 166 posts at the time to check whether the link was already present.

My suspicion is that the logic within unbound itself is not working as intended in all cases. It seems to work the vast majority of the time, but some set of conditions triggers an incorrect mode of operation that only a restart of unbound seems to resolve.

-

@bmeeks said in DNS_PROBE_FINISHED_NXDOMAIN sporadically for anywhere from 30secs to 10min. works flawlessly at all other times:

but some set of conditions triggers

That's the definition of this thread.

If @RickyBaker doesn't use a special WAN connection, it's time to take the box somewhere else (a neighbor's, friends', or family's place) and see if the same scenario shows up.

(He should also bring his wife along, and her connected devices ^^)

-

@Gertjan Thanks, everyone, for chiming in. I will add `do-ip6: no` under the DNS Resolver's Custom Options (I presume that's the right place?). And yes, I did implement the suggestions in that thread, and at least one of them completely broke the experience: I basically couldn't use the internet at all. Happy to retest them if that would be useful.

-

@johnpoz Just want to report that I haven't had a single outage since I made the `do-ip6: no` settings change.

-

@RickyBaker Thanks for reporting back; that keeps those curiosity cats from meowing at us.

-

Edit: Just heard back from VSSL: it's a known issue with Google Home/Speaker Groups. Sorry about that!

@johnpoz @Gertjan @SteveITS @bmeeks Hey all! Sorry to necromance an old thread, but I ran into a possibly related issue and wanted to see if anyone who was up to speed had any thoughts.

I've noticed that my VSSL speakers (zoned audio, like Sonos) show as offline in the Google Home app, but I'm able to stream Spotify to the individual zones with no problem. I see all the zones/speakers in the proprietary VSSL app, and there are no errors on the physical VSSL units. When I pull up Spotify's "select your device" menu to choose speakers, I see all the zones AND the Speaker Groups (groups of multiple zones that I define in Google Home), BUT if I select a Speaker Group it spins forever saying it's connecting and never does. Each zone has a static IP on the IoT VLAN, and playing to Speaker Groups definitely worked before the `do-ip6: no` option was added. Does anyone know if Google Speaker Groups use IPv6? Any ideas how to fix this?

Full disclosure: it's obviously been a while since I used the zoned audio, so it's possible something else is causing the issue, but I'm somewhat convinced that VSSL is related to the DNS_PROBE_FINISHED_NXDOMAIN / "exceeded the maximum number of sends" error. It's a wild hunch, but much earlier I had an issue where turning on the VSSLs would boot a bunch of devices off the DHCP server (they were still operating, but there was no way to reach them over IP). It was actually one of the motivations for segmenting the networks in the first place. Seems like too many coincidences...
