2.7.0 / wiped after reboot

-

On a 2.7.0 cluster of VMs, I rebooted the backup node as a safety measure before starting the upgrade.

The VM got stuck after the reboot, so I reset it after a couple of minutes, but this time it didn't even boot.

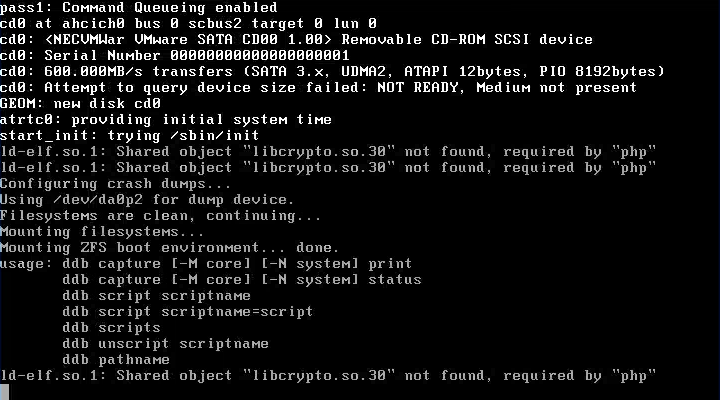

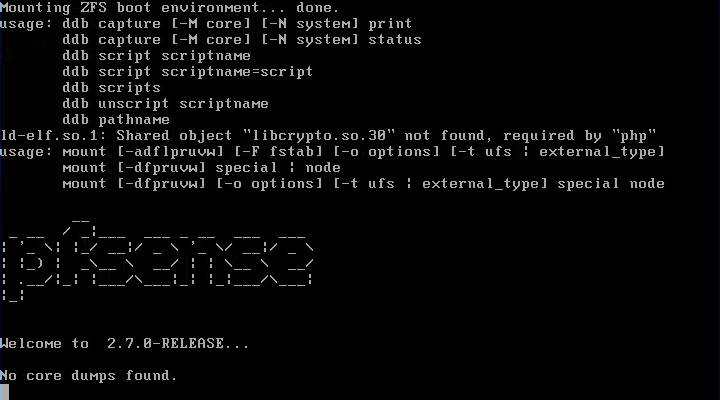

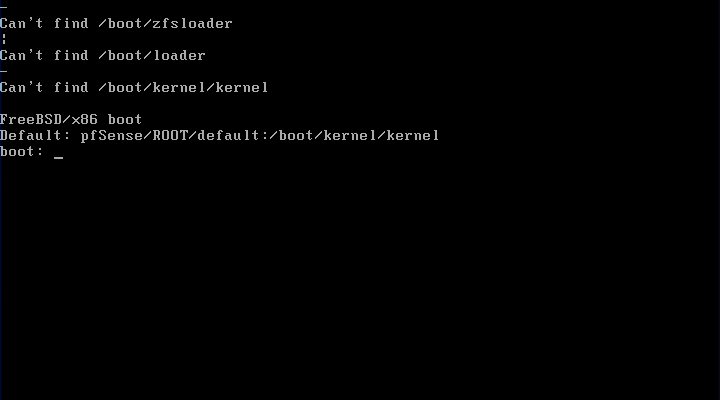

After restoring the VM backup, I figured out that the partition was wiped during boot, as you can see in the console. First boot:

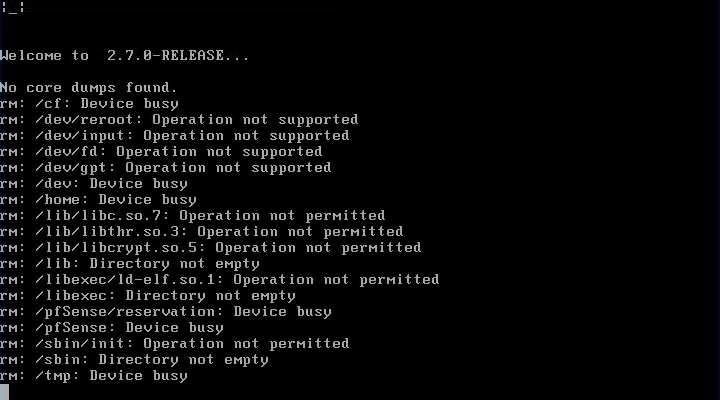

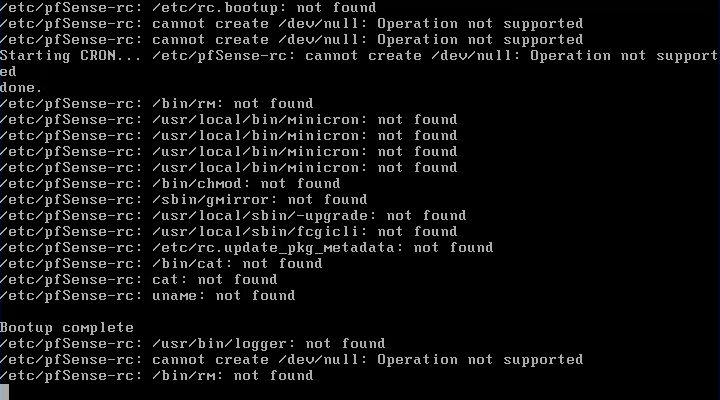

At this point the VM is stuck, and then I reset it:

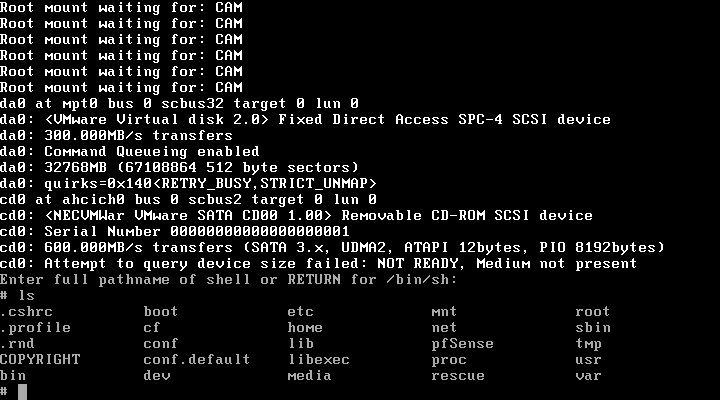

Single user mode works fine:

Here is the full console video:

https://youtu.be/I0mUpN5yN6g?si=ljUTuUbfVcviJgU_

Any idea how to repair it would be much appreciated :)

Thanks

-

@hypervisor well all those rm commands look like something was deleting all the files. Reinstalling clean seems easiest and safest.

-

FYI it's been in production for 2 years, has already been through some upgrades, and has very few plugins (wireguard, arpwatch and pfblocker).

-

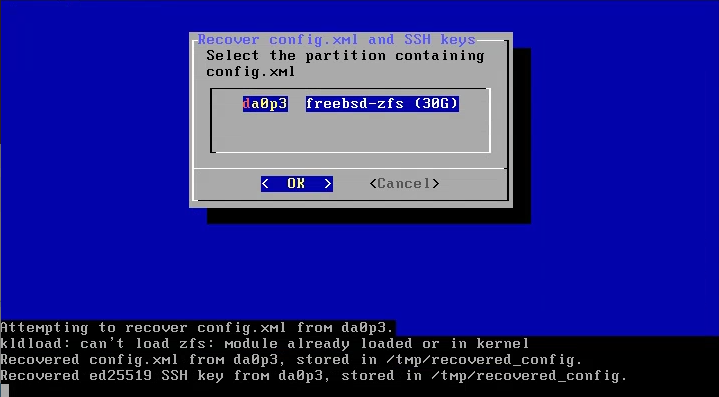

In single user mode and in the backups I noticed that there is no config.xml file anymore, so a restore is mandatory...

-

@SteveITS config recover saved the day

-

Be sure to back up your config files!

Use auto-config-backup if not doing something locally.

Or snapshots since they're VMs.

But library errors like that can often indicate a pkg was pulled in from the wrong repo. Potentially you installed a package when the 2.7.2 upgrade branch was selected.
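For the "back up your config" advice above, here is a minimal sketch of keeping timestamped copies of the config file, run wherever a copy of it is readable. The function name `backup_config` and the destination directory are hypothetical, and `/cf/conf/config.xml` is assumed to be where the config lives on your install. It is not a replacement for auto-config-backup or VM snapshots, just an illustration of the idea.

```python
import shutil
import time
import xml.etree.ElementTree as ET
from pathlib import Path


def backup_config(src: Path, dest_dir: Path) -> Path:
    """Copy src into dest_dir under a timestamped name and return the new path.

    Hypothetical helper: the name and file layout are illustration only.
    """
    # Parse first: a missing file raises FileNotFoundError and a truncated or
    # malformed one raises ET.ParseError, so a broken config is never stored
    # as if it were a good backup.
    ET.parse(str(src))

    dest_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = dest_dir / f"config-{stamp}.xml"

    # copy2 also preserves the modification time of the original file.
    shutil.copy2(src, dest)
    return dest
```

Usage would be something like `backup_config(Path("/cf/conf/config.xml"), Path("/root/config-backups"))` right before a reboot or upgrade. Both paths are assumptions, and the copies are only useful if they end up somewhere off the firewall's own disk.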

-

And because no one likes security issues: don't use older versions with known bugs; use a more modern version with fewer known bugs. 2.7.2 has been out for at least a year now. 2.7.0 is ... old and not supported (as no one is using it anymore).

-

@stephenw10

Since it's a backup node, it's not as easy to back up the configuration, which is why I rely on Veeam backup, but in this case I got the same result with the previous backups.

-

After fixing the backup node, I encountered the exact same issue on the master node...

Take a snapshot before rebooting to be able to recover the config file!