10Gb LAN causing strange performance issues, goes away when switched over to 1Gb

-

I am running pfSense Plus on a super fast Xeon D-1541 system that has two 10Gb Intel NICs. My switch is a Cisco WS-C3650-48PD, which has two 10Gb SFP+ ports.

My current setup: the pfSense LAN NIC is connected to port 1 of the switch, which is a plain 1Gb port. My cable modem is connected to the pfSense WAN NIC, which is also set to 1Gb. In this configuration my internet benchmarks are great: I pay for 1Gb service and my benchmarks are always 930Mbps with an A+ on bufferbloat.

Here is where it gets weird. If I move the pfSense LAN NIC to the 10Gb SFP+ port on my switch, the internet benchmarks from any of my systems on the LAN drop drastically. The same box that always scores 930Mbps struggles to hit 300Mbps. The SFP+ port uses an SFP+ to RJ45 adapter, and a Cat 8 cable makes the connection. If I simply move the Cat 8 cable from the SFP+ port back to the standard 1Gb port, the benchmarks on all systems jump right back up to 930Mbps.

On the pfSense side I have tried rebooting the box. The dashboard and the interfaces section both correctly show the LAN link at 10Gb when it is connected to that port. The Cisco switch also negotiated 10Gb and shows 0 errors or dropped packets. I am stumped as to why my internet speeds would suffer so drastically just from increasing the available bandwidth on the LAN side.

If anyone could explain this or offer any suggestions, I would greatly appreciate it.

-

Do you see any errors or drops on the pfSense end? Is flow control enabled? Can you test without an RJ45 adapter? Just with fiber or a 10G DAC?

-

@stephenw10 I have not touched flow control. Are you referring to the pfSense end or the switch end? The only way I can connect the firewall NIC to my switch is via RJ45 Cat 8, so I have to use an SFP+ to RJ45 adapter; the only one I have is a high-quality 10Gb 80m model.

I think you could be on to something with flow control, but where does one disable it in pfSense?

-

See: https://docs.netgate.com/pfsense/en/latest/hardware/tune.html#flow-control

For ix NICs, which I assume you have, you can set it globally like that or via a sysctl for each NIC like:

[24.11-RELEASE][admin@6100.stevew.lan]/root: sysctl -d dev.ix.0.fc
dev.ix.0.fc: Set flow control mode using these values:
0 - off
1 - rx pause
2 - tx pause
3 - tx and rx pause
[24.11-RELEASE][admin@6100.stevew.lan]/root: sysctl dev.ix.0.fc=3
dev.ix.0.fc: 0 -> 3

-

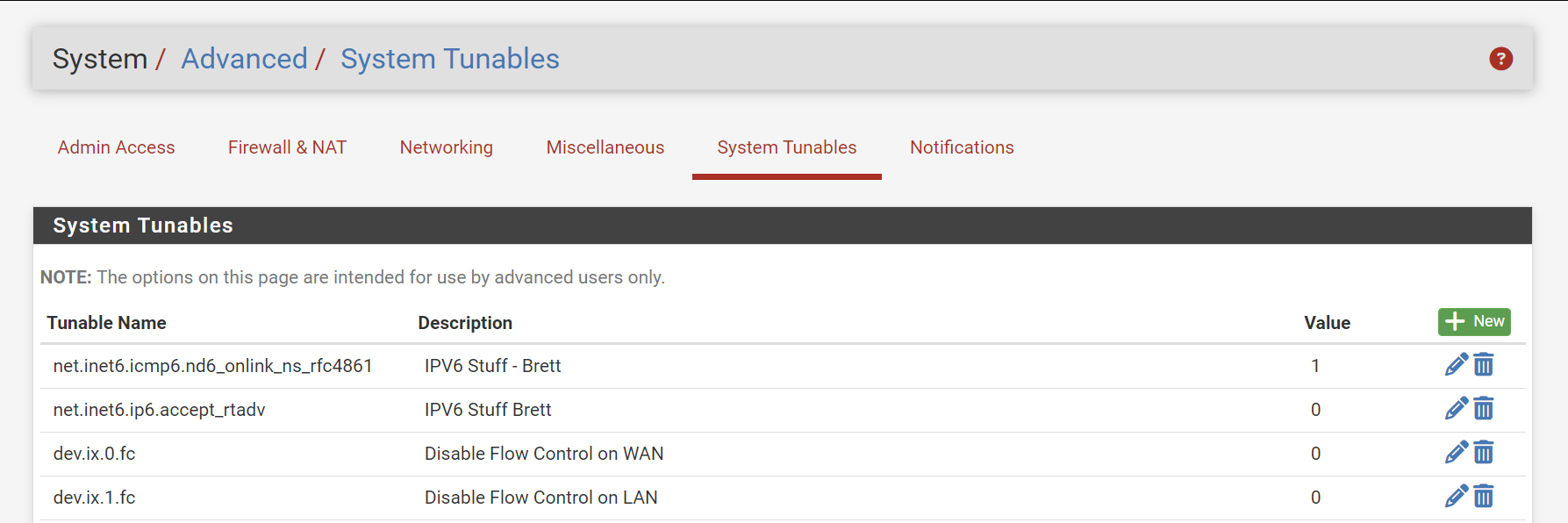

@stephenw10 Could I simply add the following to the System Tunables section, or is that not preferred? I've read that some people have had trouble getting this setting to persist.

Lastly, should I disable flow control on just the WAN NIC, just the LAN NIC, or both? Thanks.

dev.ix.0.fc=0

-

I see the opposite behavior. I have a Netgear XS728T switch (24x 10Gb/s and 4x SFP+). All my machines with 10Gb/s NICs (a mix of Intel X710 and X550) get full internet speed (1.5Gb/s). All machines with 1Gb/s NICs max out at 300Mb/s in tests, whether Ookla Speedtest or iperf3 against public servers.

pfSense has an Intel X710 NIC connected via an SFP+ to RJ45 adapter to a Rogers (Canada) XB8 modem, and via an Intel SFP+ module to the Netgear switch's SFP+ port. I tried every permutation of the switch's flow control, green Ethernet, etc. settings, to no avail.

Any idea how to change flow control for ixl on pfSense? That's about the only thing I have not tried. Would it even make a difference? It works for the faster connection but not for the slower one, which doesn't look like a flow control issue. Any other ideas?

iperf3 tests on the LAN always max out, regardless of whether the NIC is 10 or 1 Gb/s.

-

So I first tried disabling flow control on the WAN via System Tunables and ran a benchmark: same performance issue. I then disabled it on the LAN as well: same issue. I rebooted the firewall: same issue.

I'm starting to think this is not worth the hassle and I should just go back to 1Gb on WAN and LAN, but I would really like to understand where my issue lies.

Thanks.

-

I also tried it your way with

sysctl dev.ix.0.fc=0

and

sysctl dev.ix.1.fc=0

via command line.
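The two commands above can be wrapped in a small loop so both ix NICs always get the same mode. This is a minimal sketch assuming FreeBSD's `/bin/sh` on the firewall and that the two NICs are `ix0` and `ix1`; the function name `set_ix_fc` and the `SYSCTL` override (set it to `echo` for a dry run) are hypothetical, not part of pfSense.

```sh
#!/bin/sh
# Sketch: set the same flow-control mode on several ix NICs.
# Modes (from `sysctl -d dev.ix.0.fc`): 0 off, 1 rx pause, 2 tx pause, 3 both.
# SYSCTL is a hypothetical override; SYSCTL=echo prints instead of applying.
SYSCTL="${SYSCTL:-sysctl}"

set_ix_fc() {
    mode="$1"
    shift
    # Remaining arguments are NIC indices, e.g. 0 1 for ix0 and ix1.
    for n in "$@"; do
        "$SYSCTL" "dev.ix.$n.fc=$mode" || return 1
    done
}

# On the firewall, as root:
#   set_ix_fc 0 0 1
#   sysctl dev.ix.0.fc dev.ix.1.fc   # read both back to confirm
```

Runtime `sysctl` changes like these do not survive a reboot, which is what the System Tunables entries are for.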

No change to the benchmark, still only 250Mbps. I also tried changing the LAN MTU to 9000; it made no difference. I have reverted all settings and moved back to 1Gb again and, boom, the speed is back.

I was planning on upgrading to a full 10Gb switch for my internal LAN in the near future, so I would love to figure this out. Any other suggestions, or logs I can look at?

-

It may have been disabled already. Some connections require flow control to be enabled to prevent continuously overrunning the buffers.

It also won't do anything (or shouldn't) if the other end has flow control disabled. You want to have both ends set the same.

-

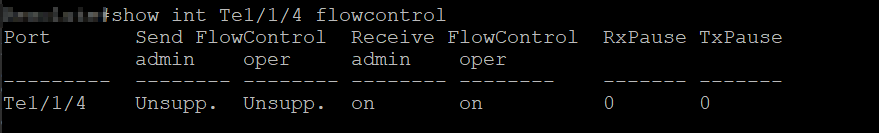

@stephenw10 The command output showed the value going from 3 -> 0. I benchmarked it every way (both on, both off and mixed) with no difference in speed; all terrible. I also confirmed that my Cisco 3650 switch does not support flow control, so it is already disabled there.
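To decode a reading like that, the numeric value maps onto the modes listed by `sysctl -d dev.ix.0.fc` earlier in the thread, so 3 was tx and rx pause and 0 is off. A small helper, sketched for a POSIX `/bin/sh` (the name `fc_mode_name` is hypothetical):

```sh
#!/bin/sh
# Map a dev.ix.N.fc value to the mode names printed by `sysctl -d`.
fc_mode_name() {
    case "$1" in
        0) echo "off" ;;
        1) echo "rx pause" ;;
        2) echo "tx pause" ;;
        3) echo "tx and rx pause" ;;
        *) echo "unknown ($1)"; return 1 ;;
    esac
}

# On the firewall (sysctl -n prints just the value):
#   for n in 0 1; do echo "ix$n: $(fc_mode_name "$(sysctl -n dev.ix.$n.fc)")"; done
```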

Any other suggestions, tests, logs, etc.?

Thanks.

-

Try running some iperf tests locally across that LAN link. See if you can replicate the same low throughput there.
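A local test that covers both directions could look like the sketch below. It assumes iperf3 is installed on both hosts (on pfSense, via its iperf package) and that the far end is already running `iperf3 -s`; the helper name `lan_iperf_both_ways`, the `RUN` dry-run override and the example address are hypothetical.

```sh
#!/bin/sh
# Sketch: test the LAN link in both directions with iperf3.
# RUN is a hypothetical override; RUN=echo prints the commands
# instead of running them.
RUN="${RUN:-}"

lan_iperf_both_ways() {
    server="$1"          # address of the host running `iperf3 -s`
    secs="${2:-30}"      # test length in seconds
    # Default direction: this host sends to the server.
    $RUN iperf3 -c "$server" -t "$secs" || return 1
    # -R reverses it: the server sends to this host.
    $RUN iperf3 -c "$server" -t "$secs" -R
}

# Example (192.168.1.1 is a placeholder for the pfSense LAN address):
#   lan_iperf_both_ways 192.168.1.1 30
```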

-

I ran some tests. Net result: internal transfer speeds are perfect.

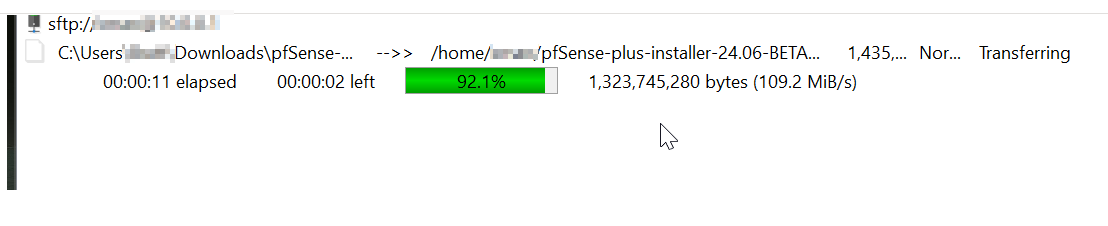

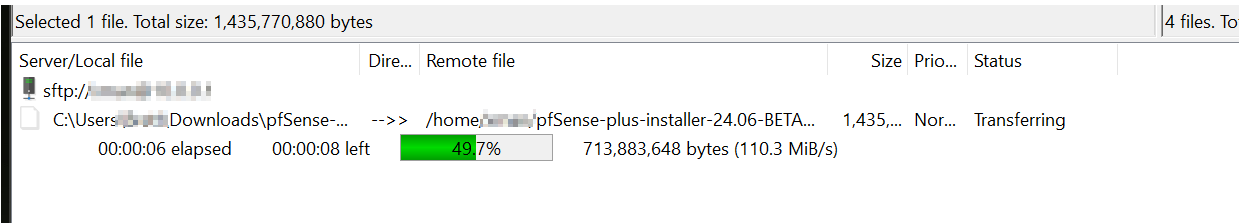

pfSense LAN on 1Gb port, workstation on 1Gb NIC: SFTP transfer of a 1.5GB ISO file to /Home = 109MiB/s (full speed)

pfSense LAN on 10Gb NIC (SFP+ to RJ45), workstation on 1Gb NIC: SFTP transfer of a 1.5GB ISO file to /Home = 110MiB/s (full speed)
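SFTP reports MiB/s while the speed tests report Mbit/s, so it helps to put both in the same unit. With 1 MiB = 1,048,576 bytes and 8 bits per byte, 109 MiB/s is about 914 Mbit/s and 110 MiB/s about 923 Mbit/s, i.e. close to line rate for a 1Gb link in both configurations. A quick sketch of the arithmetic (`mib_to_mbit` is a hypothetical helper):

```sh
#!/bin/sh
# Convert MiB/s (binary megabytes, as SFTP reports) to Mbit/s (decimal
# megabits, as speed tests report): MiB * 1048576 bytes * 8 bits / 1e6.
mib_to_mbit() {
    awk -v r="$1" 'BEGIN { printf "%.1f\n", r * 1048576 * 8 / 1000000 }'
}

# mib_to_mbit 109   -> 914.4
# mib_to_mbit 110   -> 922.7
```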

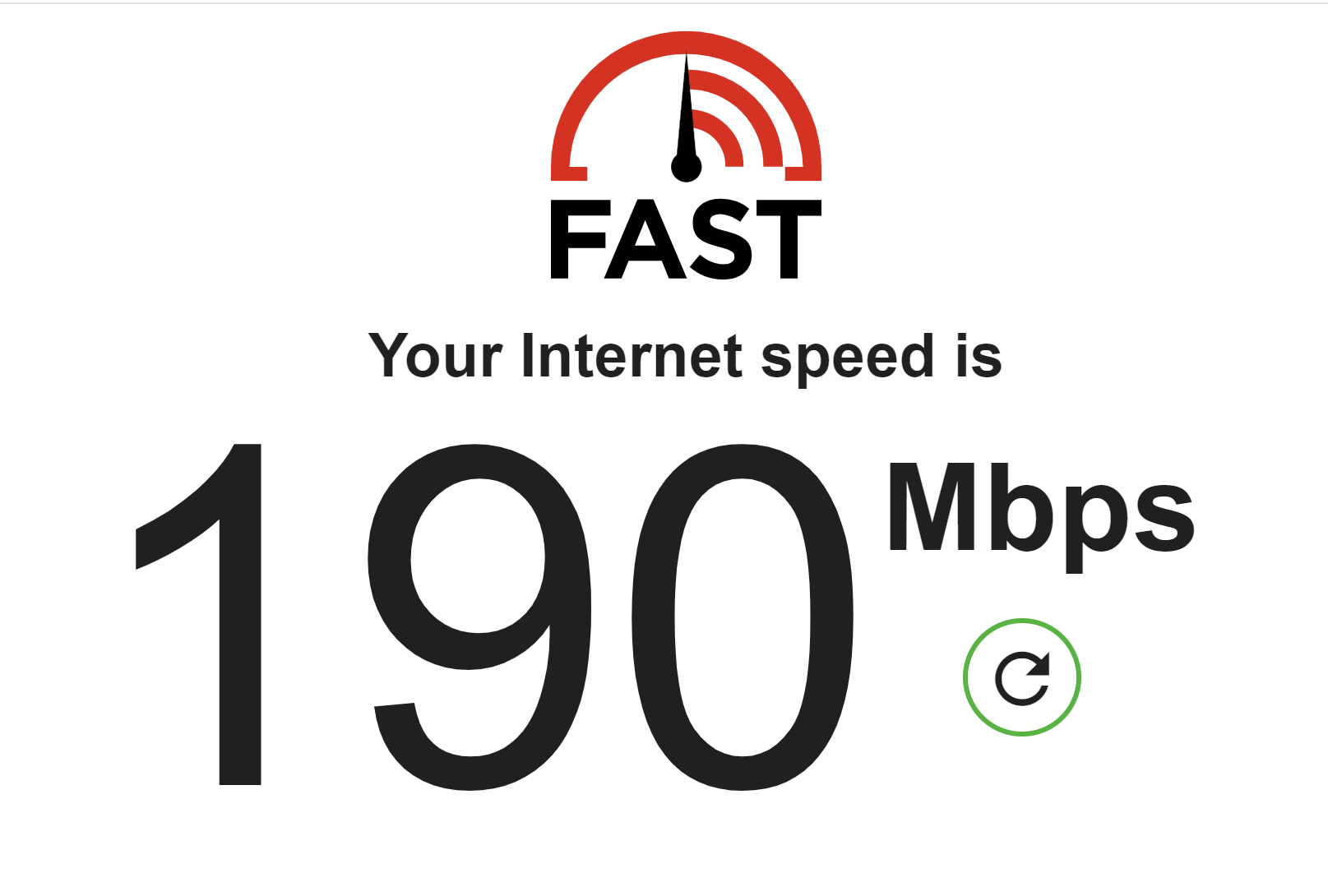

Internet speed still suffers when the LAN is connected at 10Gb: this should be 930Mbps, not 190Mbps.

What else can we try? Are there any logs worth pulling or watching while running an internet speed test?

-

Hmm, you said you tried setting MTU values, but this does feel like it could be a fragmentation issue. A packet capture should show that.

Is the speed equally bad in both directions?

-

@stephenw10 I captured a PCAP and nothing is jumping out at me. Is there anything specific I should be filtering or looking for in Wireshark with regard to fragmentation?

-

I tried the following Wireshark filters:

ip.fragment

ip.flags.mf ==1 or ip.frag_offset gt 0

Both return no packets, which leads me to believe there is no fragmentation going on.
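The same checks can be run from the command line with `tshark` (Wireshark's CLI) against the saved capture, which makes them easy to repeat after each test. This sketch assumes `tshark` is installed; the helper `count_matches`, the `TSHARK` override and the file name `capture.pcap` are hypothetical. The last filter, `tcp.analysis.retransmission`, was not raised in the thread; it is included on the assumption that packets dropped somewhere on the path would show up as TCP retransmissions.

```sh
#!/bin/sh
# Count packets in a capture that match a Wireshark display filter.
# TSHARK is a hypothetical override (defaults to the real tshark binary).
TSHARK="${TSHARK:-tshark}"

count_matches() {
    pcap="$1"
    filter="$2"
    # -r reads the capture file, -Y applies a display filter;
    # each matching packet is printed on one line, so count lines.
    "$TSHARK" -r "$pcap" -Y "$filter" | wc -l | tr -d ' '
}

# Example:
#   count_matches capture.pcap 'ip.fragment'
#   count_matches capture.pcap 'ip.flags.mf == 1 or ip.frag_offset gt 0'
#   count_matches capture.pcap 'tcp.analysis.retransmission'
```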

-

@stephenw10 said in 10Gb LAN causing strange performance issues, goes away when switched over to 1Gb:

Is the speed equally bad in both directions?

This could be telling if it's not.

-

@stephenw10 Are you suggesting that I send a large file from the pfSense side to an SFTP server on my LAN and see if it can sustain the same level of performance as my other tests?

-

Yes. Or just when you test against fast.com do you also see restricted upload? Assuming your WAN is 1G symmetric.

-

@stephenw10 Ah, sorry, that will not be a good test. I am on cable internet: my download speed is 1Gb/s but my upload is only 30Mb/s :( so sadly that test will be of no value.

Anything else we can play with or check in the logs? Again, there is no fragmentation in the PCAP; it looks clean. It's like pfSense is just tanking.

I also tried enabling all the hardware offloading (it was previously disabled); no difference.

-

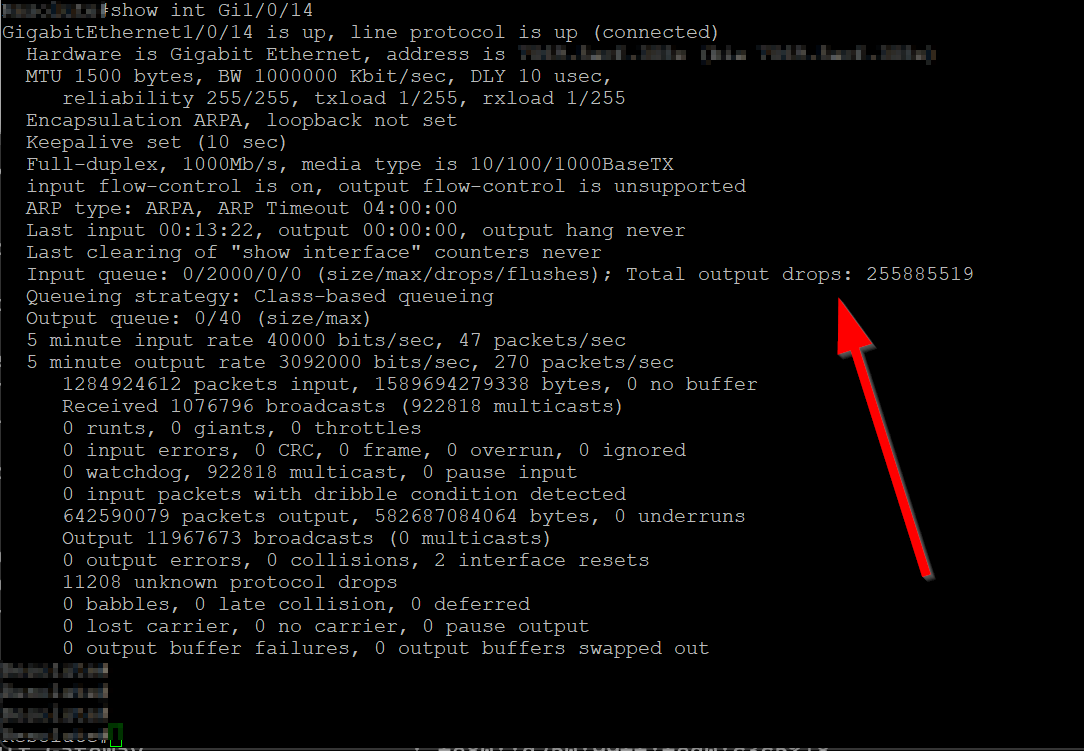

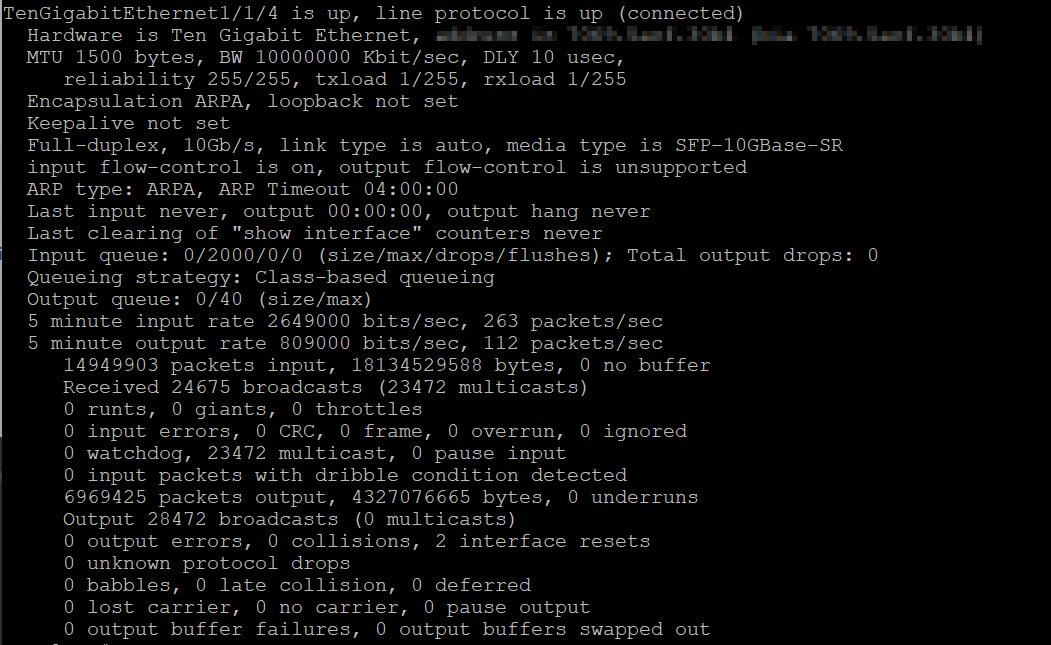

This is interesting.

The switch port for the client/workstation shows output drops, and the count climbs rapidly when I run a speed test.

But the 10Gb uplink port to the firewall shows none.

Perhaps the issue is on the Cisco side?

My understanding is that the 3650 does not have true flow control support.
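The client port is where traffic arriving over the 10Gb uplink has to leave at 1Gb, so that is where a backlog would build. To put a number on how fast its drops climb, one could save that port's `show interfaces` output before and after a speed test and compare the counter. This sketch assumes the usual IOS counter line `Total output drops: N`; the helper names, interface name and file names are hypothetical.

```sh
#!/bin/sh
# Sketch: compare a Cisco port's "Total output drops" counter before and
# after a speed test, using saved `show interfaces` output.
# Assumes the IOS counter line looks like:
#   Input queue: 0/2000/0/0 (size/max/drops/flushes); Total output drops: 123

# Print the output-drops counter found in `show interfaces` text on stdin.
output_drops() {
    sed -n 's/.*Total output drops: *\([0-9][0-9]*\).*/\1/p'
}

# Print how many output drops occurred between two saved snapshots.
drops_delta() {
    before=$(output_drops < "$1")
    after=$(output_drops < "$2")
    echo $((after - before))
}

# Example (interface and file names are hypothetical):
#   save `show interfaces GigabitEthernet1/0/5` output to before.txt
#   run the speed test, then save the same command's output to after.txt
#   drops_delta before.txt after.txt
```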