Another Netgate with storage failure, 6 in total so far

-

Probably want some way to limit or suppress the number of alerts/emails. Those values never go back down, so you could end up with... a lot!

You might also argue that, since it only runs the check when the dashboard is opened, an alert shown there might be better. Or maybe both.

-

@stephenw10 said in Another Netgate with storage failure, 6 in total so far:

Probably want some way to limit or suppress the number of alerts/emails. Those values never go back down, so you could end up with... a lot!

You might also argue that, since it only runs the check when the dashboard is opened, an alert shown there might be better. Or maybe both.

Good suggestions!

I was already thinking of using a temp file to store the health data and only updating it when it is older than a certain age. A similar approach could set a flag/rate limiter for alerting. Ideally, the health check would run as a cron job in the background and store the latest data in a file, and the dashboard would then read that file instead of running the check every time it is loaded.
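A minimal sketch of that cache-file idea in plain `sh`, under assumptions: the cache path (`/tmp/emmc_health.cache`), the one-hour maximum age and the script name `emmc_health.sh` are hypothetical, and the collector is the `mmc extcsd read /dev/mmcsd0rpmb` command used elsewhere in this thread. A cron job would run `sh emmc_health.sh refresh` in the background and the dashboard would only read the cached file. If `/tmp` is not on a RAM disk, each refresh is itself a small write, so a coarse interval makes sense.

```sh
#!/bin/sh
# Sketch: cache eMMC health output and only re-collect it when the cache is old.
# CACHE_FILE and MAX_AGE_MIN are hypothetical defaults; adjust as needed.
CACHE_FILE="${CACHE_FILE:-/tmp/emmc_health.cache}"
MAX_AGE_MIN="${MAX_AGE_MIN:-60}"
# Collector command as used in this thread (requires mmc-utils).
HEALTH_CMD="${HEALTH_CMD:-/usr/local/bin/mmc extcsd read /dev/mmcsd0rpmb}"

# True if the cache exists and was modified less than MAX_AGE_MIN minutes ago.
cache_is_fresh() {
    [ -f "$CACHE_FILE" ] &&
        [ -n "$(find "$CACHE_FILE" -mmin "-$MAX_AGE_MIN" 2>/dev/null)" ]
}

# Run the collector only when the cache is stale. Output goes to a temp file
# first and is then renamed, so a reader never sees a half-written cache.
refresh_cache() {
    cache_is_fresh && return 0
    tmp="$CACHE_FILE.tmp.$$"
    if $HEALTH_CMD >"$tmp" 2>/dev/null; then
        mv -f "$tmp" "$CACHE_FILE"
    else
        rm -f "$tmp"
        return 1
    fi
}

# Dashboard side: refresh if needed, then print whatever is cached
# (a stale copy is still shown if the collector fails).
read_cache() {
    refresh_cache || echo "warning: refresh failed, showing cached data" >&2
    [ -f "$CACHE_FILE" ] && cat "$CACHE_FILE"
}

# "emmc_health.sh refresh" from cron; "emmc_health.sh show" to print the cache.
case "${1:-}" in
    refresh) refresh_cache ;;
    show) read_cache ;;
esac
```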

-

@stephenw10 said in Another Netgate with storage failure, 6 in total so far:

Probably want some way to limit or suppress the number of alerts/emails. Those values never go back down, so you could end up with... a lot!

Each of which will trigger a write...
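Because the estimate only ever goes up, one way to bound both the number of e-mails and the writes they cause is to alert only when the value rises to a new level, remembering the last level alerted on. A minimal sketch, assuming a hypothetical state file (`/var/db/emmc_last_alert_level`) and a threshold of 9 (raw value `0x09`, which the PHP widget in this thread shows as 90%). For valid lifetime values (`0x01` to `0x0B`) this sends at most three alerts over the device's life and writes the state file just as often.

```sh
#!/bin/sh
# Sketch: alert only when the wear estimate rises to a new level.
# STATE_FILE and ALERT_MIN are hypothetical; 9 (0x09) matches ">= 90%"
# in the widget's hexdec()*10 scaling.
STATE_FILE="${STATE_FILE:-/var/db/emmc_last_alert_level}"
ALERT_MIN="${ALERT_MIN:-9}"

# Usage: should_alert CURRENT_LEVEL   (decimal integer, e.g. 9)
# Succeeds only if CURRENT_LEVEL >= ALERT_MIN and is higher than the last
# level alerted on; it then records the new level, so the state file is
# written once per step instead of on every check.
should_alert() {
    current="$1"
    last=0
    [ -r "$STATE_FILE" ] && last="$(cat "$STATE_FILE")"
    case "$last" in ''|*[!0-9]*) last=0 ;; esac   # ignore a corrupt state file
    if [ "$current" -ge "$ALERT_MIN" ] && [ "$current" -gt "$last" ]; then
        echo "$current" >"$STATE_FILE"
        return 0
    fi
    return 1
}
```

The raw value from `mmc` is hex, so convert it first, e.g. `should_alert "$(printf '%d' 0x0A)" && <send the mail>`.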

-

Yes, you are right.

This was just a sample to start with.

Here is another idea:

```php
<?php
require_once("functions.inc");
require_once("guiconfig.inc");

// Timestamp file used to limit e-mail notifications
const NOTIFY_TIMESTAMP_FILE = "/var/db/emmc_health_notify_time";
const NOTIFY_INTERVAL = 2592000; // 30 days in seconds

// Retrieve eMMC health data
function get_emmc_health() {
    $cmd = "/usr/local/bin/mmc extcsd read /dev/mmcsd0rpmb | egrep 'LIFE|EOL'";
    $output = shell_exec($cmd);
    if (!$output) {
        return ["status" => "error", "message" => "Failed to retrieve eMMC health data."];
    }
    // mmc-utils prints lines such as
    // "eMMC Life Time Estimation A [EXT_CSD_DEVICE_LIFE_TIME_EST_TYP_A]: 0x01";
    // check these patterns against your own output.
    preg_match('/LIFE_TIME_EST_TYP_A\]?\s*:\s*(0x[0-9A-F]+)/i', $output, $matchA);
    preg_match('/LIFE_TIME_EST_TYP_B\]?\s*:\s*(0x[0-9A-F]+)/i', $output, $matchB);
    // Each step is a 10% band, so 0x01 -> 10 means "up to 10% used"
    $lifeA = isset($matchA[1]) ? hexdec($matchA[1]) * 10 : null;
    $lifeB = isset($matchB[1]) ? hexdec($matchB[1]) * 10 : null;
    if (is_null($lifeA) || is_null($lifeB)) {
        return ["status" => "error", "message" => "Invalid eMMC health data."];
    }
    return ["status" => "ok", "lifeA" => $lifeA, "lifeB" => $lifeB];
}

// Map a wear percentage to a Bootstrap color class
function get_color_class($value) {
    if ($value < 70) {
        return "success"; // green
    } elseif ($value < 90) {
        return "warning"; // yellow
    }
    return "danger"; // red
}

// True if no notification was sent within the last NOTIFY_INTERVAL seconds
function should_send_email() {
    if (!file_exists(NOTIFY_TIMESTAMP_FILE)) {
        return true;
    }
    $last_sent = (int)file_get_contents(NOTIFY_TIMESTAMP_FILE);
    return (time() - $last_sent) > NOTIFY_INTERVAL;
}

// Send an e-mail notification if the wear level is critical
function send_emmc_alert($lifeA, $lifeB) {
    if (($lifeA < 90 && $lifeB < 90) || !should_send_email()) {
        return;
    }
    $subject = "[pfSense] eMMC Wear Level Warning";
    $message = "Warning: eMMC wear level is high!\n\n" .
        "Life A: {$lifeA}%\nLife B: {$lifeB}%\n\n" .
        "Consider replacing the storage device.";
    // notify_via_smtp($message, $force = false) takes the body first, so the
    // subject line is prepended to it (check the signature on your version)
    notify_via_smtp($subject . "\n\n" . $message);
    file_put_contents(NOTIFY_TIMESTAMP_FILE, time()); // update last-sent time
}

// Only alert when eMMC holds the root file system and no RAM disk is used
function is_valid_environment() {
    if (file_exists("/etc/rc.ramdisk")) {
        return false; // RAM disk is enabled (marker file from the original sample; adapt as needed)
    }
    // Heuristic: on UFS the first field is a device node (a label such as
    // /dev/gpt/... is not resolved here); on ZFS it is a dataset such as
    // pfSense/ROOT/default, so the pool's member devices are checked instead.
    $root = trim((string)shell_exec("mount | grep ' on / ' | awk '{print $1}'"));
    if (strpos($root, "/dev/") === 0) {
        return strpos($root, "mmcsd") !== false;
    }
    return strpos((string)shell_exec("zpool status"), "mmcsd") !== false;
}

$data = get_emmc_health();
if ($data["status"] === "ok" && is_valid_environment()) {
    send_emmc_alert($data["lifeA"], $data["lifeB"]);
}
?>
<div class="panel panel-default">
    <div class="panel-heading">
        <h3 class="panel-title">eMMC Disk Health</h3>
    </div>
    <div class="panel-body">
<?php if ($data["status"] === "error"): ?>
        <div class="alert alert-danger"><?php echo htmlspecialchars($data["message"]); ?></div>
<?php else: ?>
        <table class="table">
            <tr>
                <th>Life A</th>
                <td class="bg-<?php echo get_color_class($data['lifeA']); ?>"><?php echo $data['lifeA']; ?>%</td>
            </tr>
            <tr>
                <th>Life B</th>
                <td class="bg-<?php echo get_color_class($data['lifeB']); ?>"><?php echo $data['lifeB']; ?>%</td>
            </tr>
        </table>
<?php endif; ?>
    </div>
</div>
```

You can send it once a month. You can skip sending if the eMMC is no longer the primary storage or if RAM disks are being used... Well, I don't need to explain to an experienced programmer how such issues can be handled. You could even store this data and the lock file for sending alerts on your own RAM disk.

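For reading the Life A/B values the widget above shows: per the JEDEC eMMC specification (5.0 and later), `DEVICE_LIFE_TIME_EST_TYP_A`/`_B` report estimated wear in 10% bands and `PRE_EOL_INFO` reports how much of the reserved block pool is consumed, so `hexdec(...) * 10` shows the top of the current band. A small decoder for both fields, sketched in `sh`; input is the hex value as printed by `mmc extcsd read` (e.g. `0x02`), and the function names are hypothetical.

```sh
#!/bin/sh
# Decode eMMC EXT_CSD health fields (hex value such as 0x02).

# DEVICE_LIFE_TIME_EST_TYP_A / _TYP_B: 0x01..0x0A = 10% bands of life used,
# 0x0B = estimated life exceeded, anything else undefined/reserved.
life_est_range() {
    v=$(printf '%d' "$1") || return 1
    if [ "$v" -ge 1 ] && [ "$v" -le 10 ]; then
        echo "$(( (v - 1) * 10 ))-$(( v * 10 ))% used"
    elif [ "$v" -eq 11 ]; then
        echo "exceeded estimated life"
    else
        echo "undefined/reserved"
    fi
}

# PRE_EOL_INFO: state of the reserved (spare) blocks.
pre_eol_state() {
    case "$(printf '%d' "$1")" in
        1) echo "normal" ;;
        2) echo "warning (80% of reserved blocks used)" ;;
        3) echo "urgent" ;;
        *) echo "undefined/reserved" ;;
    esac
}
```

For example, `life_est_range 0x02` prints `10-20% used`, which the widget would display as 20%.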
```php
<?php
require_once("functions.inc");
require_once("guiconfig.inc");

// Keep the notification timestamp on a RAM disk so it causes no eMMC writes.
// Note: the timestamp is lost on reboot, so an alert may be re-sent after a restart.
const RAMDISK_MOUNT_POINT = "/mnt/health";
const RAMDISK_PATH = "/mnt/health/emmc_health_notify_time";
const NOTIFY_INTERVAL = 2592000; // 30 days in seconds

// Create and mount the RAM disk if it is not already mounted
function setup_ramdisk() {
    if (!is_dir(RAMDISK_MOUNT_POINT)) {
        mkdir(RAMDISK_MOUNT_POINT, 0755, true);
    }
    $mounted = trim((string)shell_exec("mount | grep ' " . RAMDISK_MOUNT_POINT . " '"));
    if (!$mounted) {
        shell_exec("mdmfs -s 100M md " . RAMDISK_MOUNT_POINT);
    }
}

// Retrieve eMMC health data
function get_emmc_health() {
    $cmd = "/usr/local/bin/mmc extcsd read /dev/mmcsd0rpmb | egrep 'LIFE|EOL'";
    $output = shell_exec($cmd);
    if (!$output) {
        return ["status" => "error", "message" => "Failed to retrieve eMMC health data."];
    }
    // Patterns assume mmc-utils output such as "...[EXT_CSD_DEVICE_LIFE_TIME_EST_TYP_A]: 0x01"
    preg_match('/LIFE_TIME_EST_TYP_A\]?\s*:\s*(0x[0-9A-F]+)/i', $output, $matchA);
    preg_match('/LIFE_TIME_EST_TYP_B\]?\s*:\s*(0x[0-9A-F]+)/i', $output, $matchB);
    $lifeA = isset($matchA[1]) ? hexdec($matchA[1]) * 10 : null;
    $lifeB = isset($matchB[1]) ? hexdec($matchB[1]) * 10 : null;
    if (is_null($lifeA) || is_null($lifeB)) {
        return ["status" => "error", "message" => "Invalid eMMC health data."];
    }
    return ["status" => "ok", "lifeA" => $lifeA, "lifeB" => $lifeB];
}

// Map a wear percentage to a Bootstrap color class
function get_color_class($value) {
    if ($value < 70) {
        return "success"; // green
    } elseif ($value < 90) {
        return "warning"; // yellow
    }
    return "danger"; // red
}

// True if no notification was sent within the last NOTIFY_INTERVAL seconds
function should_send_email() {
    if (!file_exists(RAMDISK_PATH)) {
        return true;
    }
    $last_sent = (int)file_get_contents(RAMDISK_PATH);
    return (time() - $last_sent) > NOTIFY_INTERVAL;
}

// Send an e-mail notification if the wear level is critical
function send_emmc_alert($lifeA, $lifeB) {
    if (($lifeA < 90 && $lifeB < 90) || !should_send_email()) {
        return;
    }
    $subject = "[pfSense] eMMC Wear Level Warning";
    $message = "Warning: eMMC wear level is high!\n\n" .
        "Life A: {$lifeA}%\nLife B: {$lifeB}%\n\n" .
        "Consider replacing the storage device.";
    // notify_via_smtp() takes the message body first; the subject is prepended
    notify_via_smtp($subject . "\n\n" . $message);
    file_put_contents(RAMDISK_PATH, time()); // update last-sent time on the RAM disk
}

// Only alert when eMMC holds the root file system and no RAM disk is used
function is_valid_environment() {
    if (file_exists("/etc/rc.ramdisk")) {
        return false; // RAM disk is enabled (marker file from the original sample; adapt as needed)
    }
    // UFS: root is a device node; ZFS: root is a dataset, so check the pool's devices
    $root = trim((string)shell_exec("mount | grep ' on / ' | awk '{print $1}'"));
    if (strpos($root, "/dev/") === 0) {
        return strpos($root, "mmcsd") !== false;
    }
    return strpos((string)shell_exec("zpool status"), "mmcsd") !== false;
}

$data = get_emmc_health();
setup_ramdisk(); // set up the RAM disk if necessary
if ($data["status"] === "ok" && is_valid_environment()) {
    send_emmc_alert($data["lifeA"], $data["lifeB"]);
}
?>
<div class="panel panel-default">
    <div class="panel-heading">
        <h3 class="panel-title">eMMC Disk Health</h3>
    </div>
    <div class="panel-body">
<?php if ($data["status"] === "error"): ?>
        <div class="alert alert-danger"><?php echo htmlspecialchars($data["message"]); ?></div>
<?php else: ?>
        <table class="table">
            <tr>
                <th>Life A</th>
                <td class="bg-<?php echo get_color_class($data['lifeA']); ?>"><?php echo $data['lifeA']; ?>%</td>
            </tr>
            <tr>
                <th>Life B</th>
                <td class="bg-<?php echo get_color_class($data['lifeB']); ?>"><?php echo $data['lifeB']; ?>%</td>
            </tr>
        </table>
<?php endif; ?>
    </div>
</div>
```

-

Someone with a dead 4200 today. Killed by ntopng in 10 months. The user was unaware of any risks from running ntopng on 16 GB of eMMC, and there is no way to monitor the eMMC on the 4200. Luckily the device is still under warranty, so it's being replaced under RMA.

-

Based on what I've learned from this thread, I added a 256GB Samsung SSD to my 4200 today, replacing the built-in drive, and it's working fine. Netgate's instructions had me hopping from place to place in the documentation, but they did the job.

I don't want foreseeable problems down the road, so thanks to everyone who contributed here. Hopefully this will give the box a longer life than it might otherwise have had.

-

@Mission-Ghost I am glad you found this thread useful. A 256GB SSD should last a long time!

-

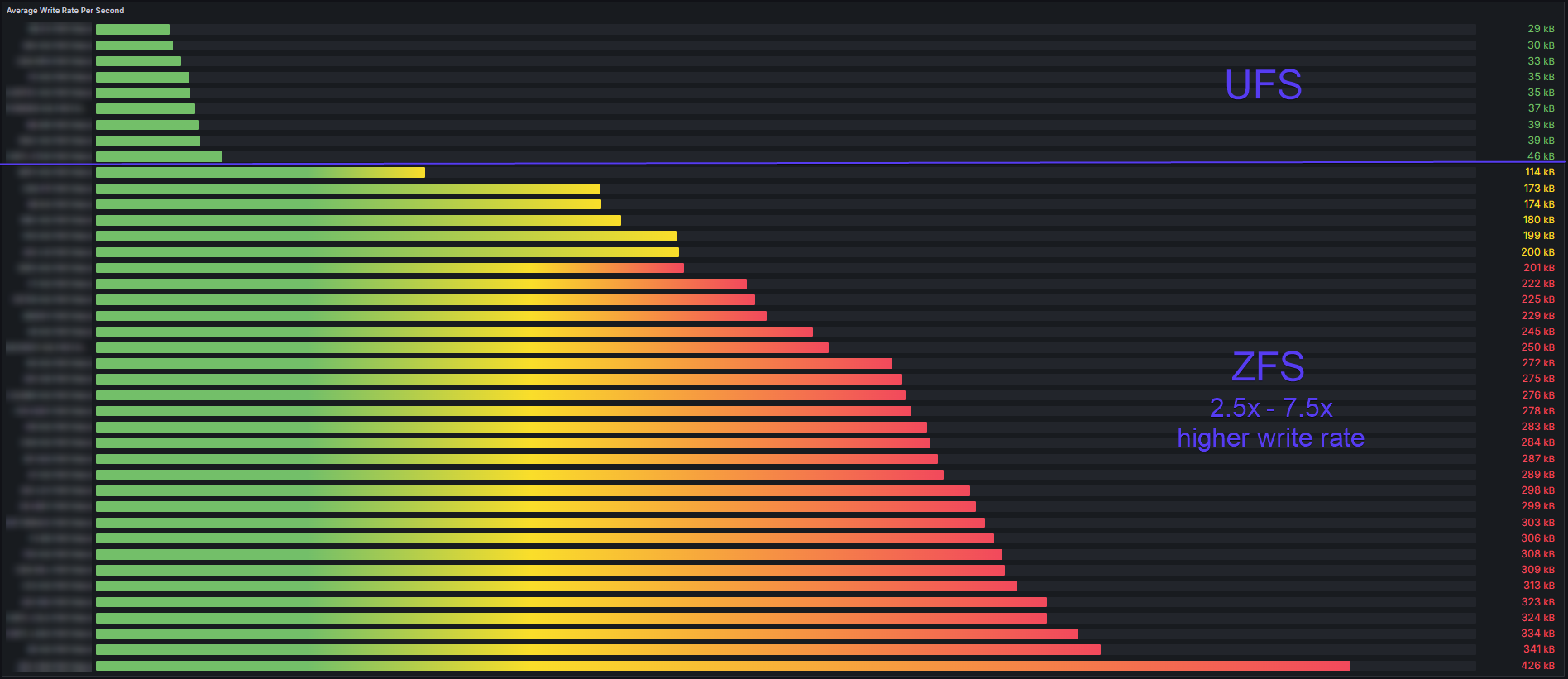

One thing that has always stood out to me in my data is the 8 devices with average write rates below 50KBps.

Today I checked our devices and confirmed that those 8 outliers are all running UFS and everything else is using ZFS.

Compared to the highest UFS rate, the ZFS rates are 2.5x to 7.5x higher.

I also looked at some of the devices that have high storage wear. They are in smallish offices and only handle basic functions. The only packages installed are Zabbix Agent and Zabbix Proxy. A few had logging enabled for the default rules, so I turned that off.

I tried to find a reason why all the devices using ZFS have such high average writes compared to the devices using UFS, but could find no explanation. We use a standardized configuration and nearly all devices are low-load, and just have the Zabbix packages. On most, the log entries for each category fit within the default 500 events shown. I copied a day's worth of general system log events into a text file - it was 38KB.

I went so far as to raise the update interval of nearly all items in the Zabbix template from 1 minute to 5 minutes, but that made no difference.

300KB/sec is 18MB/min, about 1.1GB/hour, 26GB/day, 9.5TB/year, 18.9TB over 2 years and 28.4TB over 3 years. This is in the ballpark of the maximum write life of the storage. No wonder we are seeing so many failures at the 2-3 year mark!

Comparatively, a device doing 50KB/sec would be at 4.7TB after 3 years and 9.5TB after 6 years.
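The arithmetic above as a small calculator, in decimal units (1 TB = 10^9 KB) and assuming a constant average write rate. The endurance rating to compare against depends on the eMMC part and is not given in this thread, so `years_to_reach` takes it as a parameter; both function names are hypothetical.

```sh
#!/bin/sh
# Convert a sustained average write rate into total data written.
# Decimal units throughout: 1 TB = 10^9 KB; one year = 365 days.

# Usage: tb_written KB_PER_SEC YEARS   -> TB written over that period
tb_written() {
    awk -v r="$1" -v y="$2" 'BEGIN { printf "%.2f\n", r * 86400 * 365 * y / 1e9 }'
}

# Usage: years_to_reach KB_PER_SEC TB  -> years until TB have been written
years_to_reach() {
    awk -v r="$1" -v tb="$2" 'BEGIN { printf "%.2f\n", tb * 1e9 / (r * 86400 * 365) }'
}
```

For example, `tb_written 300 3` prints `28.38` and `tb_written 50 6` prints `9.46`, in line with the figures above.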

This could explain why our older 3100 and 7100 units on UFS have lasted 6-7 years with the eMMC still in good health, while many of our 4100s have failed or are near death after only 2 years.

In his thread "eMMC Write endurance", @keyser noted:

With ZFS, pfBlockerNG in default config with only 4 feeds loaded and NTopNG running, my box averages about 1 MB/s sustained write to the SSD.

My rate is only 700KBps lower (300KBps vs 1000KBps), yet I am not running pfBlockerNG or ntopng.

I will need to dig in deeper with iostat, top, and systat to try and find the cause of the writes. At this point it would appear that ZFS itself is the major cause of the increased write activity compared to UFS.
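One way to narrow that down, sketched under assumptions: take two `iostat -x` samples of the eMMC device (`mmcsd0` is assumed here) and read the `kw/s` column from the second one, since on FreeBSD the first report covers the time since boot and the second the sampling interval; `top -m io` then shows which processes are doing the writing. The parser finds the `kw/s` column by its header name rather than by position; the function names are hypothetical.

```sh
#!/bin/sh
# Extract the write rate (kw/s, kilobytes per second) for one device from
# "iostat -x" output on stdin. With "-c 2" the last matching line is the
# interval sample, so later matches overwrite earlier ones.
device_kw_per_s() {
    awk -v dev="$1" '
        # remember which column is headed "kw/s"
        $1 == "device" { for (i = 1; i <= NF; i++) if ($i == "kw/s") col = i }
        # keep overwriting, so the last report wins
        $1 == dev && col { v = $col }
        END { if (v == "") exit 1; print v }'
}

# Convert kilobytes per second to gigabytes per day (decimal units).
kw_to_gb_per_day() {
    awk -v kw="$1" 'BEGIN { printf "%.1f\n", kw * 86400 / 1e6 }'
}

# Usage on the firewall (device name is an assumption):
#   rate=$(iostat -x -w 60 -c 2 mmcsd0 | device_kw_per_s mmcsd0)
#   echo "$rate KB/s, about $(kw_to_gb_per_day "$rate") GB/day"
```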

-

@stephenw10 said in Another Netgate with storage failure, 6 in total so far:

Hmm, not sure why the pkg isn't in the CE repo. I guess there wasn't much call for it at the time. Seems like we could add that pretty easily. Let me see....

Did you have any luck getting mmc-utils added to the CE repo?

-

@andrew_cb said in Another Netgate with storage failure, 6 in total so far:

I will need to dig in deeper with iostat, top, and systat to try and find the cause of the writes.

Hi,

I got a reduction from ~19 GB written/day to 1.8 GB written/day by using these settings:

```
zfs set sync=disabled zroot/tmp
zfs set sync=disabled zroot/var
```

(on current systems: `pfSense/tmp` and `pfSense/var`; after reviewing my settings, I saw that I had set /var to disabled as well) and fine tuning:

```
vfs.zfs.txg.timeout=120
```

(The ZFS pool in my case is "zroot"; current systems use "pfSense".)

Remark: this is a private system, for private use.
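A sketch of those settings as commands, assuming the current pool name `pfSense` (older installs: `zroot`). It only prints the commands so they can be reviewed first; piping the output to `sh` applies them. On the current layout, setting `sync` on `pfSense/var` is inherited by `pfSense/var/log` and the other child datasets unless they override it. A `sysctl` set this way does not survive a reboot, so the timeout would also need to go under System > Advanced > System Tunables to persist. The function names are hypothetical.

```sh
#!/bin/sh
# Print the write-reduction settings from this post as reviewable commands.
# Usage: low_write_plan [POOL] [TXG_TIMEOUT_SECONDS]
#   low_write_plan pfSense 120        # review
#   low_write_plan pfSense 120 | sh   # apply (as root)
low_write_plan() {
    pool="${1:-pfSense}"    # "zroot" on older installs
    timeout="${2:-120}"     # seconds between ZFS transaction group commits
    for ds in tmp var; do
        # sync=disabled: synchronous write requests are acknowledged from RAM
        # and reach the disk with the next transaction group
        echo "zfs set sync=disabled $pool/$ds"
    done
    echo "sysctl vfs.zfs.txg.timeout=$timeout"
}

# Matching undo: inherit sync from the parent again and restore the
# OpenZFS default txg timeout of 5 seconds.
low_write_undo() {
    pool="${1:-pfSense}"
    for ds in tmp var; do
        echo "zfs inherit sync $pool/$ds"
    done
    echo "sysctl vfs.zfs.txg.timeout=5"
}
```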

-

@fireodo

A wonderful idea and discovery! It seems quite reasonable not to sync the tmp dataset and to use a 2-minute delay for transaction group writes. A good alternative to RAM disks if they cannot be used for some reason.

-

@w0w said in Another Netgate with storage failure, 6 in total so far:

2 minutes delay

PS: If you test, you can set the delay to greater values. The write rate will then decrease further, but you run a greater risk of losing data when a power failure occurs (it reduces the robustness of the ZFS file system).

-

In the case of a firewall, I think it is acceptable.

Most critical logs should be sent to an external syslog server anyway, and I don't see any risks that could compromise the system. I can't think of any scenario where this would be critical for pfSense, but I might be wrong. I don't know; major upgrades are also handled through boot environments (BE) and shouldn't be affected.

-

@andrew_cb said in Another Netgate with storage failure, 6 in total so far:

it would appear that ZFS itself is the major cause of the increased write activity

That is my understanding. ZFS causes significant write amplification, but in exchange it is more robust against power failure.

But I thought later installs of pfSense did not use ZFS for temporary files.

-

/var should be standard sync by default anyway, was yours not?

-

@stephenw10 said in Another Netgate with storage failure, 6 in total so far:

/var should be standard sync by default anyway, was yours not?

It was, but I set it to disabled! (my decision)

Here is the output of my `zfs get -r sync zroot`:

```
NAME                              PROPERTY  VALUE     SOURCE
zroot                             sync      standard  local
zroot/ROOT                        sync      standard  inherited from zroot
zroot/ROOT/default                sync      standard  inherited from zroot
zroot/ROOT/default/var_cache_pkg  sync      standard  inherited from zroot
zroot/ROOT/default/var_db_pkg     sync      standard  inherited from zroot
zroot/reservation                 sync      standard  inherited from zroot
zroot/tmp                         sync      disabled  local
zroot/var                         sync      disabled  local
```

Edit: The firewall has been working with these settings since 08.2021.

I also experimented with huge delays (up to 1800 s, which brought the amount of data written per day down to 0.28 GB...).

It's up to you to decide what weighs more for you! (With an eMMC that cannot be replaced easily, I guess it's better to take that risk!)

-

On my 2100-MAX:

`zfs get -r sync zroot`

```
cannot open 'zroot': dataset does not exist
```

`zpool status -x`

```
all pools are healthy
```

`zfs get -r sync`

```
NAME PROPERTY VALUE SOURCE
pfSense sync standard default
pfSense/ROOT sync standard default
pfSense/ROOT/23_05_01_clone sync standard default
pfSense/ROOT/23_05_01_clone/cf sync standard default
pfSense/ROOT/23_05_01_clone/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv4 sync standard default
pfSense/ROOT/23_05_01_ipv4@2024-01-12-11:46:05-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2024-02-29-08:52:57-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2024-04-01-12:32:27-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2024-06-27-11:52:26-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2024-07-03-09:09:28-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2025-01-20-10:11:49-0 sync - -
pfSense/ROOT/23_05_01_ipv4/cf sync standard default
pfSense/ROOT/23_05_01_ipv4/cf@2025-01-20-10:11:49-0 sync - -
pfSense/ROOT/23_05_01_ipv4/var_cache_pkg sync standard default
pfSense/ROOT/23_05_01_ipv4/var_cache_pkg@2025-01-20-10:11:49-0 sync - -
pfSense/ROOT/23_05_01_ipv4/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv4/var_db_pkg@2025-01-20-10:11:49-0 sync - -
pfSense/ROOT/23_05_01_ipv4_Backup sync standard default
pfSense/ROOT/23_05_01_ipv4_Backup/cf sync standard default
pfSense/ROOT/23_05_01_ipv4_Backup/var_cache_pkg sync standard default
pfSense/ROOT/23_05_01_ipv4_Backup/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv6 sync standard default
pfSense/ROOT/23_05_01_ipv6@2024-07-25-15:54:45-0 sync - -
pfSense/ROOT/23_05_01_ipv6/cf sync standard default
pfSense/ROOT/23_05_01_ipv6/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy sync standard default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf sync standard default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-01-12-11:46:05-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-02-29-08:52:57-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-04-01-12:32:27-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-06-27-11:52:26-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-07-25-15:54:45-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-01-12-11:46:05-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-02-29-08:52:57-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-04-01-12:32:27-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-06-27-11:52:26-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-07-25-15:54:45-0 sync - -
pfSense/ROOT/23_09_01_ipv4_20240703094025 sync standard default
pfSense/ROOT/23_09_01_ipv4_20240703094025/cf sync standard default
pfSense/ROOT/23_09_01_ipv4_20240703094025/var_cache_pkg sync standard default
pfSense/ROOT/23_09_01_ipv4_20240703094025/var_db_pkg sync standard default
pfSense/ROOT/24_03_01_ipv4 sync standard default
pfSense/ROOT/24_03_01_ipv4/cf sync standard default
pfSense/ROOT/24_03_01_ipv4/var_cache_pkg sync standard default
pfSense/ROOT/24_03_01_ipv4/var_db_pkg sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850 sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850@2024-07-03-09:40:36-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850@2024-07-23-10:05:22-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850@2025-01-13-13:59:02-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-03-09:09:28-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-03-09:40:36-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-23-10:05:22-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2025-01-13-13:59:02-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-03-09:09:28-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-03-09:40:36-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-23-10:05:22-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2025-01-13-13:59:02-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-03-09:09:28-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-03-09:40:36-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-23-10:05:22-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2025-01-13-13:59:02-0 sync - -
pfSense/ROOT/auto-default-20240112115753 sync standard default
pfSense/ROOT/auto-default-20240112115753@2024-01-12-11:57:53-0 sync - -
pfSense/ROOT/auto-default-20240112115753/cf sync standard default
pfSense/ROOT/auto-default-20240112115753/cf@2024-01-12-11:57:53-0 sync - -
pfSense/ROOT/auto-default-20240112115753/var_cache_pkg sync standard default
pfSense/ROOT/auto-default-20240112115753/var_cache_pkg@2024-01-12-11:57:53-0 sync - -
pfSense/ROOT/auto-default-20240112115753/var_db_pkg sync standard default
pfSense/ROOT/auto-default-20240112115753/var_db_pkg@2024-01-12-11:57:53-0 sync - -
pfSense/ROOT/quick-20240401123227 sync standard default
pfSense/ROOT/quick-20240401123227/cf sync standard default
pfSense/ROOT/quick-20240401123227/var_db_pkg sync standard default
pfSense/home sync standard default
pfSense/reservation sync standard default
pfSense/tmp sync standard default
pfSense/var sync standard default
pfSense/var/cache sync standard default
pfSense/var/db sync standard default
pfSense/var/log sync standard default
pfSense/var/tmp
```

-

@stephenw10 Is that a setting we should leave alone on M.2 SATA SSDs and NVMe drives?

-

@JonathanLee said in Another Netgate with storage failure, 6 in total so far:

zfs get -r sync zroot

replace "zroot" with "pfSense" in your case!

-

`zfs get -r sync pfSense`

```
NAME PROPERTY VALUE SOURCE
pfSense sync standard default
pfSense/ROOT sync standard default
pfSense/ROOT/23_05_01_clone sync standard default
pfSense/ROOT/23_05_01_clone/cf sync standard default
pfSense/ROOT/23_05_01_clone/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv4 sync standard default
pfSense/ROOT/23_05_01_ipv4@2024-01-12-11:46:05-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2024-02-29-08:52:57-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2024-04-01-12:32:27-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2024-06-27-11:52:26-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2024-07-03-09:09:28-0 sync - -
pfSense/ROOT/23_05_01_ipv4@2025-01-20-10:11:49-0 sync - -
pfSense/ROOT/23_05_01_ipv4/cf sync standard default
pfSense/ROOT/23_05_01_ipv4/cf@2025-01-20-10:11:49-0 sync - -
pfSense/ROOT/23_05_01_ipv4/var_cache_pkg sync standard default
pfSense/ROOT/23_05_01_ipv4/var_cache_pkg@2025-01-20-10:11:49-0 sync - -
pfSense/ROOT/23_05_01_ipv4/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv4/var_db_pkg@2025-01-20-10:11:49-0 sync - -
pfSense/ROOT/23_05_01_ipv4_Backup sync standard default
pfSense/ROOT/23_05_01_ipv4_Backup/cf sync standard default
pfSense/ROOT/23_05_01_ipv4_Backup/var_cache_pkg sync standard default
pfSense/ROOT/23_05_01_ipv4_Backup/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv6 sync standard default
pfSense/ROOT/23_05_01_ipv6@2024-07-25-15:54:45-0 sync - -
pfSense/ROOT/23_05_01_ipv6/cf sync standard default
pfSense/ROOT/23_05_01_ipv6/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy sync standard default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf sync standard default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-01-12-11:46:05-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-02-29-08:52:57-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-04-01-12:32:27-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-06-27-11:52:26-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-07-25-15:54:45-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg sync standard default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-01-12-11:46:05-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-02-29-08:52:57-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-04-01-12:32:27-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-06-27-11:52:26-0 sync - -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-07-25-15:54:45-0 sync - -
pfSense/ROOT/23_09_01_ipv4_20240703094025 sync standard default
pfSense/ROOT/23_09_01_ipv4_20240703094025/cf sync standard default
pfSense/ROOT/23_09_01_ipv4_20240703094025/var_cache_pkg sync standard default
pfSense/ROOT/23_09_01_ipv4_20240703094025/var_db_pkg sync standard default
pfSense/ROOT/24_03_01_ipv4 sync standard default
pfSense/ROOT/24_03_01_ipv4/cf sync standard default
pfSense/ROOT/24_03_01_ipv4/var_cache_pkg sync standard default
pfSense/ROOT/24_03_01_ipv4/var_db_pkg sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850 sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850@2024-07-03-09:40:36-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850@2024-07-23-10:05:22-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850@2025-01-13-13:59:02-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-03-09:09:28-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-03-09:40:36-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-23-10:05:22-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2025-01-13-13:59:02-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-03-09:09:28-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-03-09:40:36-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-23-10:05:22-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2025-01-13-13:59:02-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg sync standard default
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-03-09:09:28-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-03-09:40:36-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-23-10:05:22-0 sync - -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2025-01-13-13:59:02-0 sync - -
pfSense/ROOT/auto-default-20240112115753 sync standard default
pfSense/ROOT/auto-default-20240112115753@2024-01-12-11:57:53-0 sync - -
pfSense/ROOT/auto-default-20240112115753/cf sync standard default
pfSense/ROOT/auto-default-20240112115753/cf@2024-01-12-11:57:53-0 sync - -
pfSense/ROOT/auto-default-20240112115753/var_cache_pkg sync standard default
pfSense/ROOT/auto-default-20240112115753/var_cache_pkg@2024-01-12-11:57:53-0 sync - -
pfSense/ROOT/auto-default-20240112115753/var_db_pkg sync standard default
pfSense/ROOT/auto-default-20240112115753/var_db_pkg@2024-01-12-11:57:53-0 sync - -
pfSense/ROOT/quick-20240401123227 sync standard default
pfSense/ROOT/quick-20240401123227/cf sync standard default
pfSense/ROOT/quick-20240401123227/var_db_pkg sync standard default
pfSense/home sync standard default
pfSense/reservation sync standard default
pfSense/tmp sync standard default
pfSense/var sync standard default
pfSense/var/cache sync standard default
pfSense/var/db sync standard default
pfSense/var/log sync standard default
pfSense/var/tmp sync standard default
```
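Most rows in that listing are boot-environment snapshots (the `@...` entries), which have no `sync` value of their own. `zfs get` can drop them itself: `-t filesystem` limits the listing to file systems, `-s local` shows only values that were set explicitly, and `-H -o name,value` gives tab-separated output without a header. A small sketch that uses the scripted form to list just the datasets whose `sync` is not `standard`; the function name is hypothetical and the pool name `pfSense` is an assumption (older installs use `zroot`).

```sh
#!/bin/sh
# Print "dataset<TAB>value" for every entry whose sync property is neither
# "standard" nor "-" (snapshots). Reads `zfs get -H -o name,value` output
# (tab-separated, no header) on stdin, so it can be tested without a pool.
nonstandard_sync() {
    awk -F '\t' '$2 != "standard" && $2 != "-" { print $1 "\t" $2 }'
}

# Usage on the firewall:
#   zfs get -H -r -t filesystem -o name,value sync pfSense | nonstandard_sync
# Or let zfs filter, showing only explicitly set values:
#   zfs get -r -t filesystem -s local sync pfSense
```

On the 2100-MAX above this would print nothing, since every file system reports `standard`; on the `zroot` system earlier in the thread it would list `zroot/tmp` and `zroot/var` as `disabled`.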