NAT Logs

-

@mcury

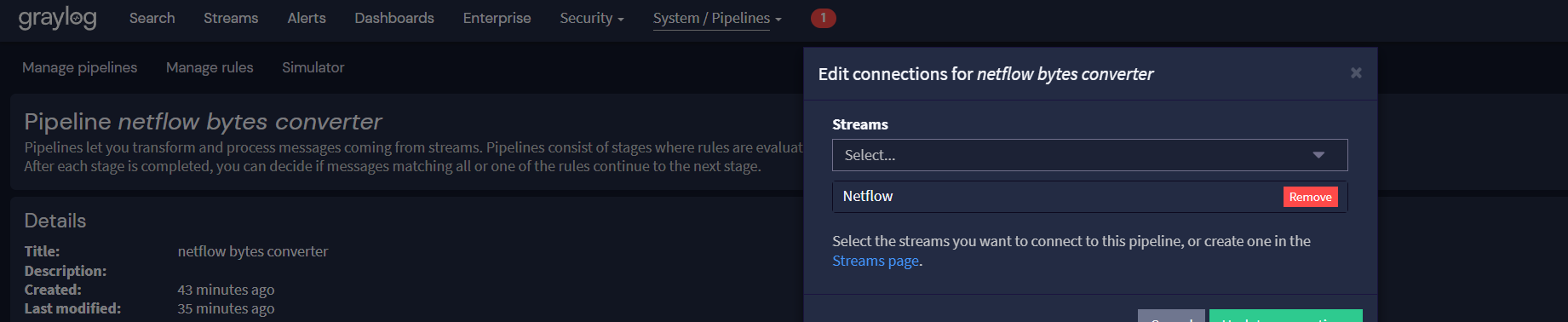

I'm on Graylog 6.0.5. Yes, my pipeline is connected to my stream. I'm using NetFlow data just for testing.

-

@michmoor I'm on Graylog 6.1.5, but it should work on 6.0.5 without any problems.

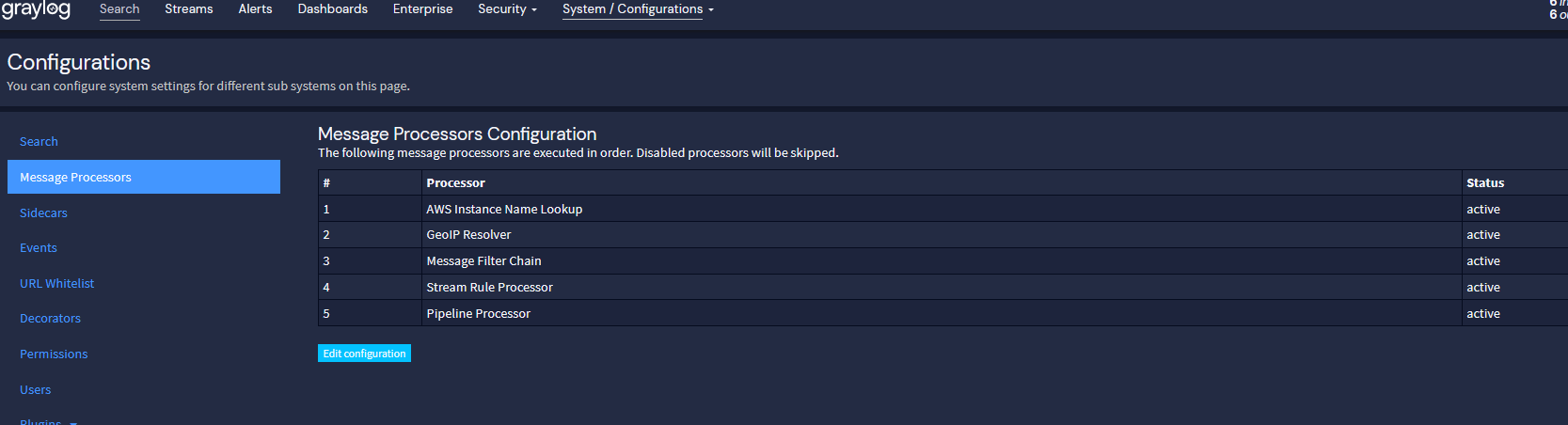

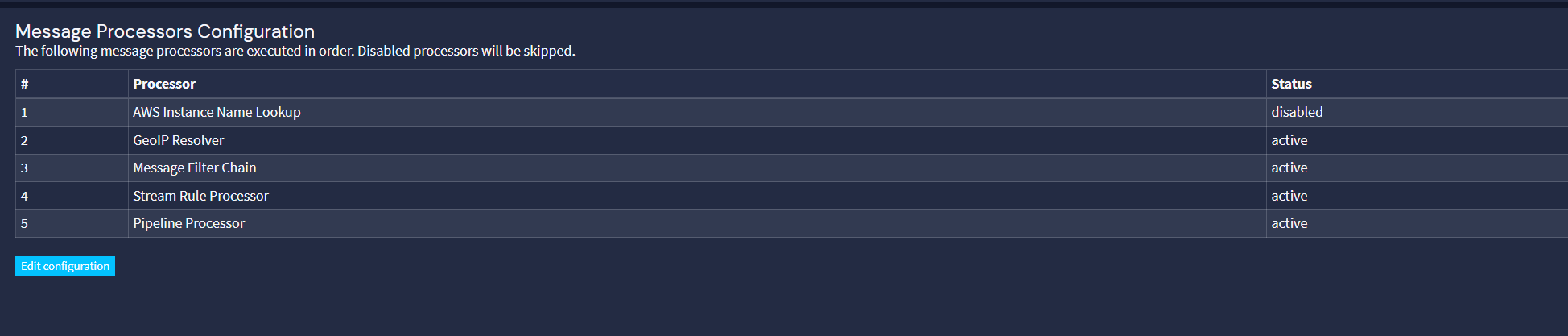

In the Configurations tab, under Manage processors, is the pipeline processor active?

-

@mcury

Yep, it's active.

I have other pipelines in effect, so this part is all working. The new pipeline for NetFlow is the latest addition. I guess I'm trying to figure out how to prove it's working.

With my other pipelines, I'm extracting fields with Grok patterns, so I can tell immediately whether they're working.

Considering I see no errors in the pipeline, I have to assume it's working.

-


It does. I feel like we are getting close to a confirmation. LOL

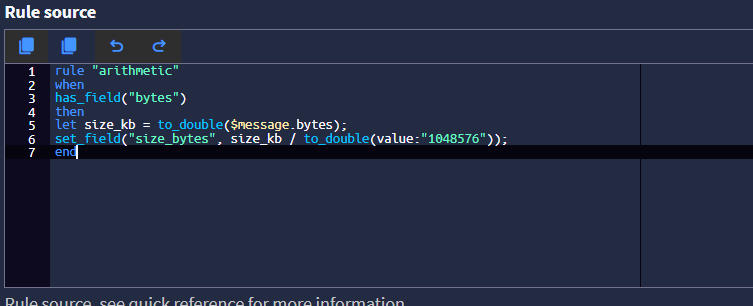

Is my pipeline rule correct?

-

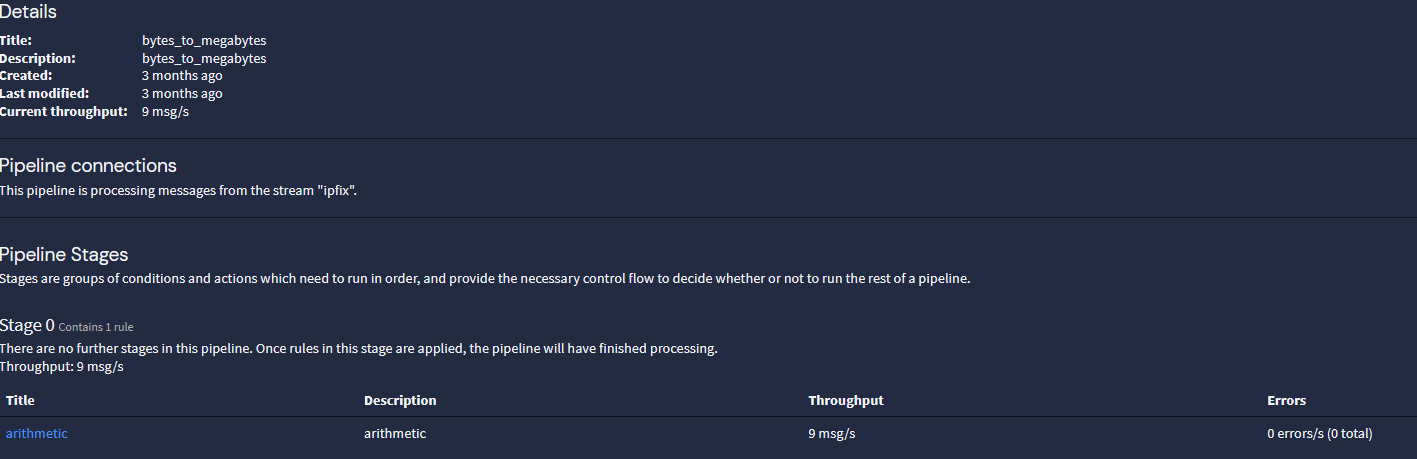

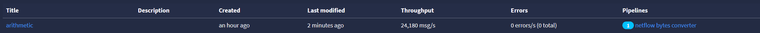

So, the rule is processing the data, and the field exists.

It must be showing up in the logs.

Is my pipeline rule correct?

That is an old rule; I'm now using octetDeltaCount. Replace it with the one I posted above.

After that, go to the rule simulator, choose JSON, paste the example below, click Run rule simulator, and check whether the field size_bytes appears:

    {
      "destinationTransportPort": 3246,
      "size_bytes": 0,
      "gl2_remote_ip": "192.168.255.249",
      "gl2_remote_port": 20815,
      "sourceIPv4Address": "192.168.255.249",
      "source": "192.168.255.249",
      "ipClassOfService": 0,
      "gl2_source_input": "6627f43d99aaec416300cd0e",
      "hostname_src": "pfsense.home.arpa.",
      "egressInterface": 1,
      "octetDeltaCount": 168,
      "gl2_source_node": "0f929def-42b9-4ea6-8980-799a62f7bdb3",
      "sourceTransportPort": 3246,
      "flowEndMilliseconds": "2024-04-23T20:21:02.098Z",
      "timestamp": "2024-04-23T20:21:24.000Z",
      "destinationIPv4Address": "192.168.255.253",
      "gl2_accounted_message_size": 575,
      "streams": [
        "63fe3ab48b6393126ef3c2f7"
      ],
      "gl2_message_id": "01HW69XJD000004NFV0XGMQ27B",
      "message": "Ipfix [192.168.255.249]:3246 <> [192.168.255.253]:3246 proto:1 pkts:2 bytes:168",
      "ingressInterface": 1,
      "packetDeltaCount": 2,
      "protocolIdentifier": 1,
      "hostname_dst": "rpi4.home.arpa.",
      "_id": "0ea147d7-01af-11ef-8e3e-dca632a54719",
      "flowStartMilliseconds": "2024-04-23T20:21:01.096Z"
    }

-

@mcury Rule simulation returns without a problem.

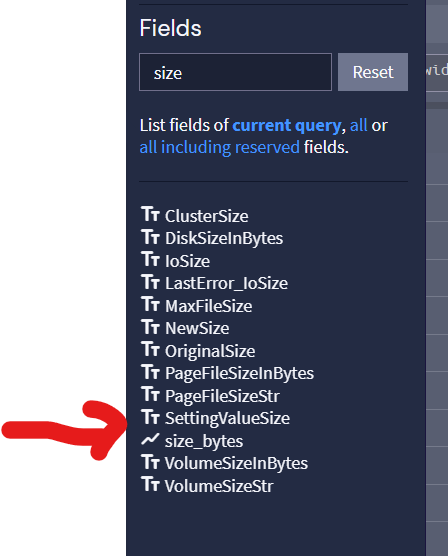

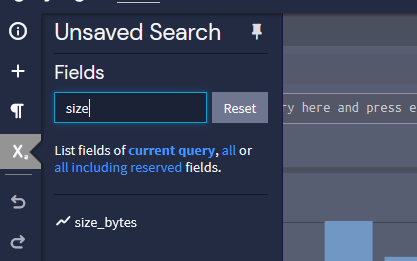

So I'm a bit confused about how you figured out there was a field called "size_bytes", as it doesn't come up for me when I review the available fields. In fact, unlike NetFlow, which has a field called "nf_bytes", there is nothing similar for IPFIX in Graylog.

-

I'm using the IPFIX UDP input; the "arithmetic" rule above reflects that.

The size_bytes field is the one we are creating with the pipeline.

I'm transforming the field octetDeltaCount, which is in bytes, into size_bytes, which is in megabytes.
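A quick way to sanity-check the conversion outside Graylog is sketched below. It assumes the rule divides octetDeltaCount by 1,048,576 (1024 * 1024); the rule itself isn't reproduced in this part of the thread, so adjust the divisor if yours uses 1,000,000. The bytes_to_mb helper is a hypothetical name used only for illustration.

```shell
#!/bin/bash
# Hypothetical helper mirroring the size_bytes arithmetic:
# octetDeltaCount (bytes) / (1024 * 1024) = megabytes.
# Assumption: the pipeline rule uses a 1,048,576 divisor.
bytes_to_mb() {
  awk -v b="$1" 'BEGIN { printf "%.6f\n", b / (1024 * 1024) }'
}

bytes_to_mb 168        # octetDeltaCount from the sample message above
bytes_to_mb 52428800   # a 50 MiB flow
```

For the sample message, 168 bytes works out to roughly 0.00016 MB, so small flows like that one will show up as 0 if the result is stored as a whole number.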

@mcury You're the man.

I corrected some things by looking at your example, and I got it working. One last question: how did you get DNS lookups as part of your setup?

Edit:

I have updated some dashboards to reflect the new data points IPFIX provides.

@stephenw10 pflow definitely helped out here, but... it would be nice to have straight-up logging within pfSense, just saying.

-

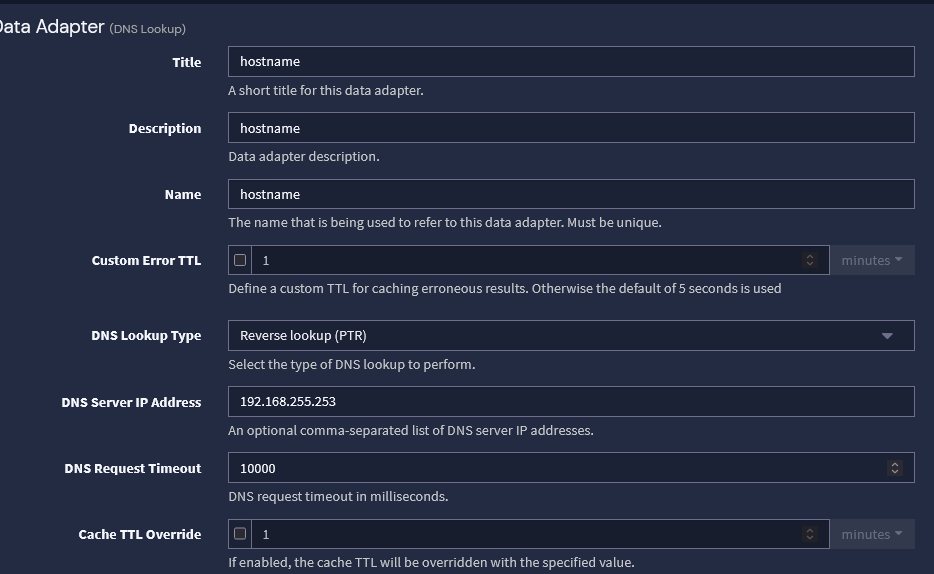

There are a few steps; I hope I remember all of them in this post (it's already 22:00 here).

1- First, you need a reverse zone in your DNS. This reverse zone can be dynamically updated or not; it's your choice. I opted for static IP leases and created A and PTR records for each host individually, but you don't need to do that.

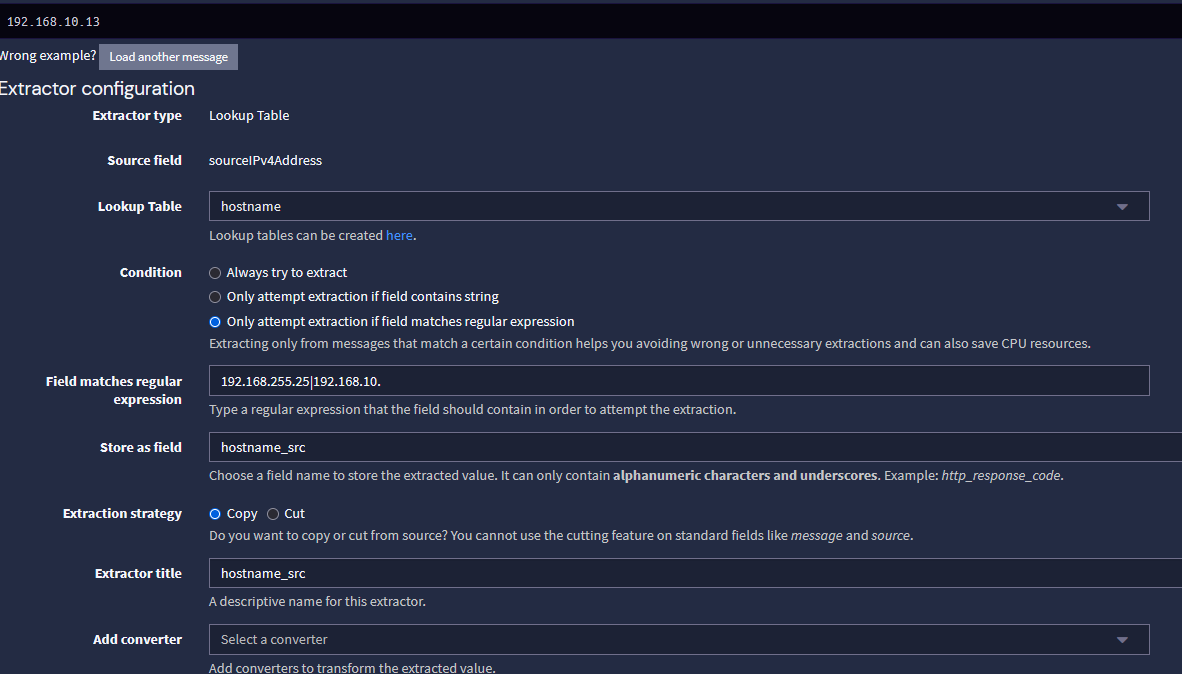

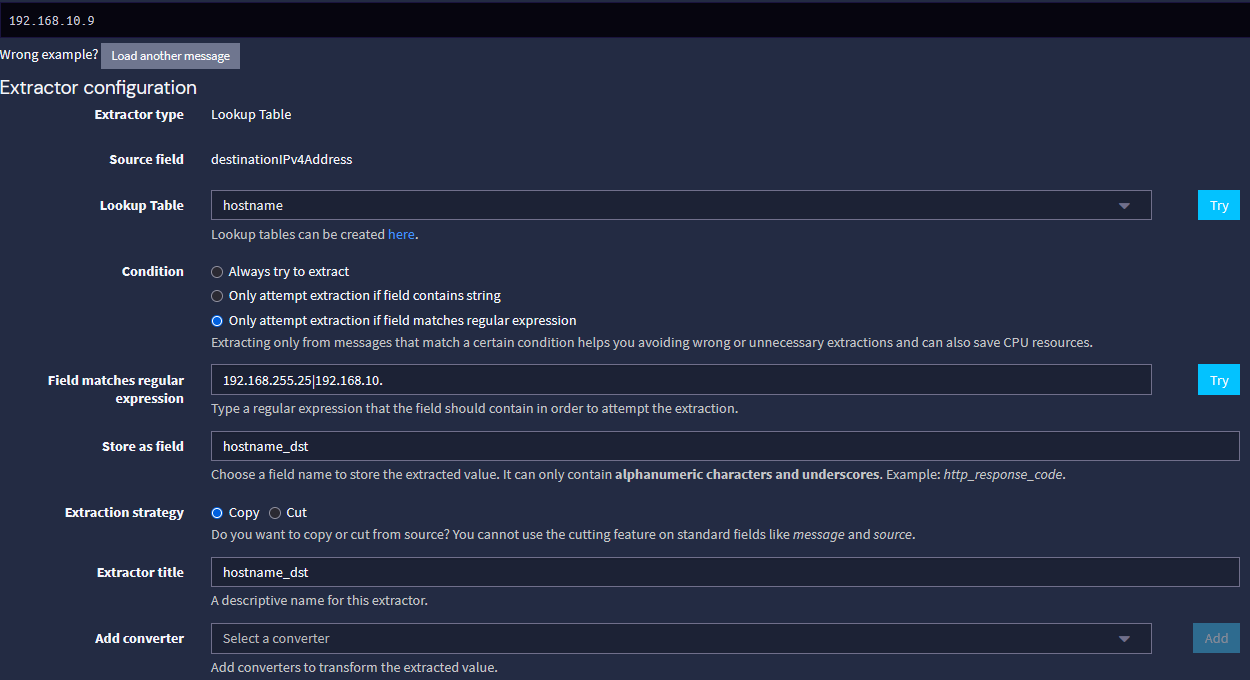

2- Import these extractors into any input you want to use them on:

    {
      "extractors": [
        {
          "title": "hostname_src",
          "extractor_type": "lookup_table",
          "converters": [],
          "order": 12,
          "cursor_strategy": "copy",
          "source_field": "sourceIPv4Address",
          "target_field": "hostname_src",
          "extractor_config": {
            "lookup_table_name": "hostname"
          },
          "condition_type": "regex",
          "condition_value": "192.168.255.25|192.168.10."
        },
        {
          "title": "hostname_dst",
          "extractor_type": "lookup_table",
          "converters": [],
          "order": 13,
          "cursor_strategy": "copy",
          "source_field": "destinationIPv4Address",
          "target_field": "hostname_dst",
          "extractor_config": {
            "lookup_table_name": "hostname"
          },
          "condition_type": "regex",
          "condition_value": "192.168.255.25|192.168.10."
        }
      ],
      "version": "6.1.5"
    }

Or, through the GUI:

The condition value was added so that only local addresses get resolved; if you want the entire world to be resolved, just remove it.

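3- Go to Lookup Tables in Graylog, create a data adapter, a cache and a lookup table, and point the adapter at your DNS server. The lookup table's name must match the lookup_table_name used by the extractors above (hostname).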
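For steps 1 and 3 to line up, the DNS adapter has to do reverse (PTR) lookups, which query a name built from the address's octets in reverse order under in-addr.arpa. The hypothetical ptr_name helper below only shows that mapping; it assumes plain dotted-quad IPv4 input and sends no DNS traffic itself.

```shell
#!/bin/bash
# Build the in-addr.arpa name a reverse (PTR) lookup queries for an IPv4 address.
# Hypothetical helper for illustration; it does not contact any DNS server.
ptr_name() {
  local IFS=.          # split the address on dots
  local -a o
  read -r -a o <<< "$1"
  if [ "${#o[@]}" -ne 4 ]; then
    echo "not a dotted-quad IPv4 address: $1" >&2
    return 1
  fi
  # Octets go in reverse order, followed by the in-addr.arpa suffix.
  printf '%s.%s.%s.%s.in-addr.arpa\n' "${o[3]}" "${o[2]}" "${o[1]}" "${o[0]}"
}

ptr_name 192.168.255.249
```

To check that your reverse zone answers before wiring it into Graylog, `dig -x 192.168.255.249 @<your DNS server>` queries that same name. In the sample message earlier in the thread, that address came back as pfsense.home.arpa. in hostname_src.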

That alone should do it. If you have any doubts, just ask, but I will only answer tomorrow; it's already 22:30 here.
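On the condition value from step 2: in a regex, an unescaped `.` matches any character, so the pattern lets through a bit more than the two local ranges. The sketch below compares the posted pattern with a hypothetical escaped variant using `grep -E`. It assumes Graylog's regex condition behaves like an unanchored search, which is worth double-checking against the Graylog docs; the classify function is a made-up name.

```shell
#!/bin/bash
# Compare the posted extractor condition with a stricter, escaped variant.
# Assumption: the condition matches anywhere in the field (unanchored), like grep.
posted='192.168.255.25|192.168.10.'         # as posted: '.' matches any character
escaped='192\.168\.255\.25|192\.168\.10\.'  # hypothetical variant with literal dots

classify() {
  local p=no e=no
  grep -Eq -- "$posted" <<< "$1" && p=yes
  grep -Eq -- "$escaped" <<< "$1" && e=yes
  echo "$1 posted=$p escaped=$e"
}

for ip in 192.168.255.253 192.168.10.5 192.168.100.1 8.8.8.8; do
  classify "$ip"
done
```

With the posted pattern, 192.168.100.1 also matches, because the `.` after `10` matches the `0`. Escaping the dots avoids that while still matching 192.168.255.25x and 192.168.10.x.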

-

@mcury Got it working.

Ever thought about creating a blog and posting this? At the very least, this is extremely useful and should be preserved.

-

@mcury I had to increase the JVM heap size. It looks like the IPFIX widget I made was killing my VM.

-

DNS data can be overwhelming.

I had to increase the JVM heap size and configure zswap with zsmalloc and zstd to avoid crashes.
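To confirm which zswap settings are actually in effect (after a reboot, for example), a read-only check like the hypothetical show_zswap function below can help. It assumes a Linux kernel built with zswap, which exposes its parameters under /sys/module/zswap/parameters; the set of parameters can vary a little between kernel versions.

```shell
#!/bin/bash
# Print the running kernel's zswap settings (read-only, no root needed).
# Assumption: zswap exposes its parameters under /sys/module/zswap/parameters.
show_zswap() {
  local dir=/sys/module/zswap/parameters p
  if [ ! -d "$dir" ]; then
    echo "zswap not available on this kernel"
    return 0
  fi
  for p in enabled compressor zpool max_pool_percent; do
    if [ -r "$dir/$p" ]; then
      echo "$p=$(cat "$dir/$p")"
    fi
  done
}

show_zswap
```

To make the settings persistent, zswap is usually configured on the kernel command line, e.g. `zswap.enabled=1 zswap.compressor=zstd zswap.zpool=zsmalloc`. On current Raspberry Pi OS releases that line lives in /boot/firmware/cmdline.txt (older releases used /boot/cmdline.txt). Newer kernels may already default to zsmalloc.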

Then I checked every rule that was logging or tracking, and optimized those as well.

I created a bunch of no-log rules and switched to keeping only 5 days of live data; older indices are closed, but I can open them anytime I need.

-

Just updated my Graylog to 6.1.6; it still works.

I'm doing some magic here: I've managed to run Graylog, a Samba domain controller with FreeRADIUS, an Apache server with PHP and SSL, a NUT server for everything (APC UPS), and a UniFi controller that manages three devices: one AP and two switches.

All of these services are running on a Raspberry Pi 5 8GB, hehe.

It runs so well, if you optimize it correctly, that I've already deployed a few Raspberry Pi 5s to some small customers.

As soon as the 16GB variant reaches my region, I'll get one to see how many days of live logs I'll be able to keep.

It's a very low-cost, low-power solution, and it's easy to replace or rebuild the SD card in case of disaster.

I power it through PoE with an adapter bought on AliExpress.

-

You are a brave man! I have a 2x server XCP-ng setup.

I am planning a future migration of Graylog. When I first stood it up years ago, I made the very, very bad mistake of storing all data on the VHD, so I have a 500GB drive attached to this virtual machine. As you can probably imagine, backing up the VM takes some time. I wanted to move the data to an NFS share at the very least, but my drives are not very performant. It's a project that is on the radar, but I never have time.

-

If you are using the community version, that is possible with the Graylog 6.0 community version.

But if I were you, I wouldn't update to the 6.1 series. They are slowly dropping support for OpenSearch; if you check the installation guide, they even removed OpenSearch from it.

The alternative now is graylog-datanode. Since archiving is a paid feature, graylog-datanode doesn't give you that option in the community version, as OpenSearch used to.

I used to archive everything to my NAS and restore it when needed; now I can't do that anymore.

So I'm keeping only two months of logs, which adds up to about 30GB. I have a script that cleans up for me, but it doesn't run on a schedule:

    #!/bin/bash
    # Delete indices pfsense_0 .. pfsense_38 through the Data Node's OpenSearch API.
    # --pass is the passphrase for the private key given with --key.
    for counter in {0..38}; do
      curl -X DELETE \
        --key /path_to_your/_key/key_datanode.crt \
        --cert /path_to_your/cert/cert_datanode.crt \
        --cacert /path_to_your/ca/ca_datanode.crt \
        --pass datanode_password_you_configured \
        "https://raspberry.yourdomain.arpa:9200/pfsense_$counter"
    done

In the example above, it deletes the closed indices pfsense_0 through pfsense_38 and leaves the others untouched.
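Since that loop deletes whatever falls in the range, a variant with a dry-run default may be safer to keep around. The sketch below is hypothetical: delete_indices, DRY_RUN and the KEY/CERT/CACERT/KEY_PASS variables are names made up here, and the URL and paths are the same placeholders as in the script above. With DRY_RUN left at its default of 1, it only prints what it would delete.

```shell
#!/bin/bash
# Hypothetical, parameterised version of the cleanup loop.
# Usage: delete_indices PREFIX FIRST LAST   (DRY_RUN=1 by default: print only)
DATANODE_URL="https://raspberry.yourdomain.arpa:9200"   # placeholder, as above
KEY=/path_to_your/_key/key_datanode.crt
CERT=/path_to_your/cert/cert_datanode.crt
CACERT=/path_to_your/ca/ca_datanode.crt
KEY_PASS=datanode_password_you_configured

delete_indices() {
  local prefix=$1 first=$2 last=$3 i url
  if (( first > last )); then
    echo "FIRST must not exceed LAST" >&2
    return 1
  fi
  for (( i = first; i <= last; i++ )); do
    url="$DATANODE_URL/${prefix}_$i"
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "would DELETE $url"
    else
      # Same curl call as the original loop; -sS hides progress but shows errors.
      curl -sS -X DELETE --key "$KEY" --cert "$CERT" --cacert "$CACERT" \
        --pass "$KEY_PASS" "$url"
      echo
    fi
  done
}

delete_indices pfsense 0 2
```

Once the dry-run output looks right, `DRY_RUN=0 delete_indices pfsense 0 38` would do the same as the original loop, and the prefix argument lets one script cover each index set.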

I can run it for each of the indices I have.

Edit: For bigger customers with higher requirements, an NFS share would indeed be a good idea.

Perhaps a cluster of Graylog servers, too.

-

Found something interesting.

IPFIX is working out (@mcury seriously, you're the man), but I noticed some data is not being logged. I have pfSense set up as a Tailscale subnet router. There is another subnet router within my tailnet.

I can reach devices behind the other subnet router. The LAN on that side is 192.168.8.0/24. I am connecting from 192.168.6.0/24.

No IPFIX logs were created. @stephenw10 Does pflow treat VPNs differently somehow?

Perhaps in the near future; I have to organize a few things here and there first.