Does upgrading to a modern 10G NIC make sense?

-

I am using an older Intel X520 2x 10G SFP+ board in my firewall, and I wonder whether pfSense would benefit from a more capable, newer interface board (by offloading functionality from the CPU).

If so, logical choices could be:

- ConnectX4

- Intel X710

- Intel E810

All of these boards are more capable and have better, longer-lasting driver support, especially the Intel boards.

Advantages not related to the offloading question:

- ConnectX4: PCIe 3.0 (the X520 is PCIe 2.0); relatively cheap; 25G

- Intel X710: PCIe 3.0; significantly newer, with newer driver support; four-port versions available for about 200 €; 10G

- Intel E810: PCIe 4.0; better than the X710, newer, with newer driver support; 25G; not much more expensive than an X710, but regrettably there is no reasonably priced four-port version

For me, PCIe 4.0 and a four-port version are both significant advantages, because:

- I am using a consumer mainboard where the second PCIe x16 slot only has four lanes.

- A four-port card is a significant advantage on a mini-ITX board with just one PCIe slot. For a future firewall build, I would love to switch to a smaller (mini-ITX) case.

But, as said, these are arguments that do NOT involve the very important question: does pfSense benefit from (use) the capabilities of these more advanced boards, and will it do so in the future?

*Note: a couple of the mentioned boards support both 10G and 25G. However, given that there are no consumer 25G switches, I will use them, at least in the near future, as "advanced" 10G NICs. I also prefer SFP+ over UTP for high-speed NICs.

-

Personally, I would keep the X520 unless you have a really good reason. I have one and see close to zero issues with it. The ixgbe driver seems more mature.

Are you actually hitting a limit on it?

-

I am trying to improve my network. In that regard, the background for this post is twofold:

The transfer speed between my TrueNAS system and my PC does not reach a full 10G, not even when both sides use an NVMe SSD. I am trying to understand why and to remove (potential) causes. If replacing the NIC would contribute to that (at reasonable cost), I would do it. Note that the Windows filesystem does not help, especially with small files.

The second, more long-term problem is the size of my current pfSense system. It is a uATX system, and in the future I want to migrate to a smaller mini-ITX system without sacrificing performance. That smaller case implies only one NIC card, which is why I would prefer a four-port (SFP+) NIC.

So the short answer to your question is: I do not know whether the X520 is a limiting factor, but if replacing it with a modern card would help, I would consider it.

-

@louis2 does traffic from your TrueNAS to your PC actually go through your firewall, or are they on the same LAN?

Traffic within a LAN typically just goes through a switch, not the firewall.

-

iperf3 testing will tell you whether, and where, there are issues.

Having said that, upgrading hardware while staying at the same speed seldom makes sense. But speeds above 10G require rather expensive switches.

It may be too early for non-business setups.

-

I would not expect an X520 NIC to significantly restrict traffic compared with, for example, an X710 NIC. The ConnectX NICs can be variable. I've had bad luck with them, but some users report great throughput. YMMV!

-

My network is similar to a professional network, e.g. the NAS is in the GreenZone (VLAN), the PC is in the normal (V)LAN, IoT is in a third, etc.

So the traffic passes through the FW / pfSense.

-

As you know, modern cards can offload tasks that previously had to be done by the CPU, from CRCs to flow control, queue handling, etc. Depending on the application and OS, that can make a huge(!) difference.

I do not know how, or whether, pfSense and FreeBSD use these capabilities, or to what extent they are useful for a firewall application, although I can imagine they are.

A ConnectX4 is not the best of the newer cards, but it can be had for under €60 / $60, so it may be worth trying; I do not know. As you know, I have become very, very wary when it comes to changing hardware.

And I am not sure a ConnectX4 is formally supported by FreeBSD (my impression is that Nvidia has a driver).

-
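One way to see which offloads FreeBSD currently has enabled on a port is the `options=` line that `ifconfig` prints (for example `ifconfig ix0` for an X520 on the ixgbe driver). The Python sketch below only parses that line; the sample text is illustrative, not captured from a real system, and `OFFLOAD_FLAGS` is a hand-picked subset of FreeBSD's capability names. In pfSense itself, the checksum, TSO and LRO offloads are toggled under System > Advanced > Networking.

```python
import re

# Hand-picked subset of FreeBSD interface capability names related to offloading.
OFFLOAD_FLAGS = {
    "RXCSUM", "TXCSUM", "RXCSUM_IPV6", "TXCSUM_IPV6",
    "TSO4", "TSO6", "LRO",
    "VLAN_HWTAGGING", "VLAN_HWCSUM", "VLAN_HWTSO",
}

def parse_options(ifconfig_output: str) -> set[str]:
    """Return the flag names from the first 'options=<hex><FLAG,FLAG,...>' line."""
    match = re.search(r"options=[0-9a-fA-F]+<([^>]*)>", ifconfig_output)
    if match is None:
        return set()
    return {flag for flag in match.group(1).split(",") if flag}

def enabled_offloads(ifconfig_output: str) -> list[str]:
    """Sorted list of the offload-related flags that are currently enabled."""
    return sorted(parse_options(ifconfig_output) & OFFLOAD_FLAGS)

# Illustrative sample only (hypothetical values), in the shape FreeBSD's ifconfig prints.
SAMPLE = (
    "ix0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 9000\n"
    "\toptions=4e538bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,"
    "VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_UCAST>\n"
)

print(enabled_offloads(SAMPLE))
```

Comparing this list before and after changing the offload checkboxes is a quick way to confirm what the driver actually has enabled.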

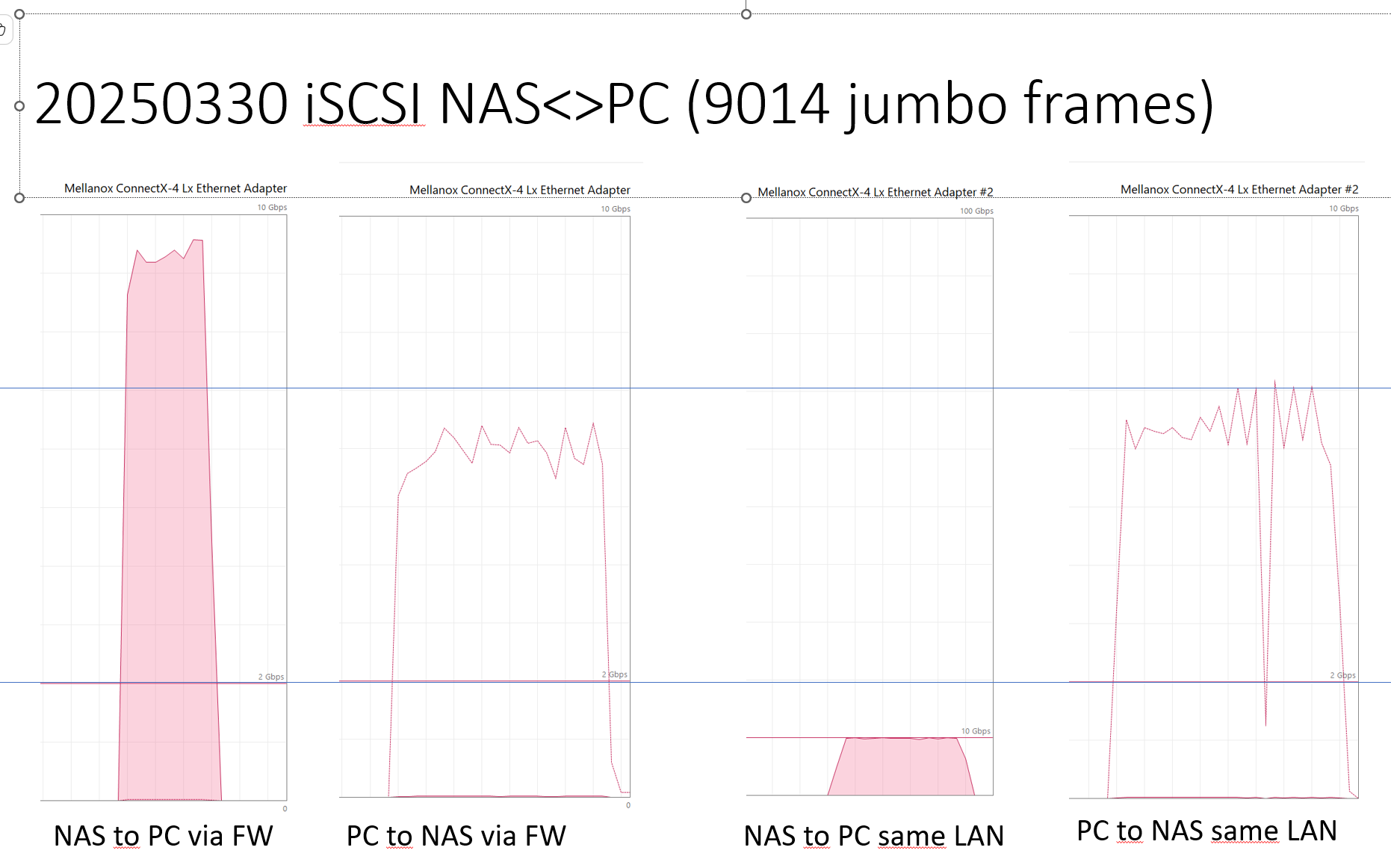

I realized that I could compare the transfer between NAS and PC in two situations:

- PC and NAS in the same VLAN, and

- PC to NAS via pfSense

I had to make some changes to my network, but it would show the impact of pfSense.

The test setup I used was as follows:

- The TrueNAS SCALE system connected to my 10G main switch

- The PC, via ConnectX4 port 1, connected to the 10G main switch and routed to the NAS via the FW

- An extra 10G switch connected to the NAS VLAN on the 10G main switch and to the PC via ConnectX4 port 2

- NAS and PC both equipped with a better-quality NVMe SSD

- Testing using an iSCSI drive on the NAS

- Using one big file, which is not a really representative test

This setup allowed me to test both situations, via the FW and within the same VLAN, by switching the PC NIC port used.

The result was better than I expected! But of course there is some impact from the FW. It is also clear that the PC (Windows 11 Pro 64-bit) does not manage to send data to the NAS at full speed.

Note that I am using jumbo frames (9014) to minimize the number of frames the FW has to handle, and for slightly more efficient Ethernet frames.

See the picture for the results.
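To put the jumbo-frame argument in numbers: at 10 Gbit/s line rate the firewall sees far fewer frames per second with a 9000-byte MTU than with 1500. A small Python sketch, assuming standard Ethernet framing (14-byte header, 4-byte FCS, 8-byte preamble/SFD, 12-byte inter-frame gap) and IPv4 + TCP with the timestamp option (52 bytes of headers). The Windows "9014" jumbo setting is commonly the frame size including the 14-byte header, i.e. a 9000-byte MTU.

```python
# Per-frame cost on the wire, in bytes: header 14 + FCS 4 + preamble/SFD 8 + inter-frame gap 12.
ETH_OVERHEAD = 14 + 4 + 8 + 12  # 38

def frames_per_second(link_bps: float, mtu: int) -> float:
    """Maximum number of full-size frames per second at line rate for a given IP MTU."""
    return link_bps / ((mtu + ETH_OVERHEAD) * 8)

def tcp_goodput_fraction(mtu: int, tcp_ip_headers: int = 52) -> float:
    """Share of the line rate left for TCP payload (assumed IPv4 20 + TCP 20 + timestamps 12)."""
    return (mtu - tcp_ip_headers) / (mtu + ETH_OVERHEAD)

for mtu in (1500, 9000):
    print(f"MTU {mtu}: {frames_per_second(10e9, mtu):,.0f} frames/s, "
          f"{tcp_goodput_fraction(mtu):.1%} payload")
```

This prints about 812,744 frames/s (94.1% payload) for MTU 1500 and 138,305 frames/s (99.0% payload) for MTU 9000, so roughly 5.9x fewer frames for pfSense to filter at the same bit rate.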

-

@louis2 That was a good test to do, and it shows you where to focus; it doesn't look like it's the NICs...

What HW are you running pfSense on?

-

Mmm, that's a pretty good result for the routed/filtered throughput.

-

CPU

Core(TM) i5-6600K CPU @ 3.50GHz

Current: 3602 MHz, Max: 3500 MHz

4 CPUs : 1 package(s) x 4 core(s)

AES-NI CPU Crypto: Yes (active)

IPsec-MB Crypto: Yes (inactive)

Disk

200GB NVMe SSD

RAM

16 GB

NICs

Intel I219 (on MB)

Intel X520-DA2 2x SFP+

Intel X550 2x 10G UTP

The test was done via the SFP+ card.

-

Note that I chose to test with one big file of about 17 TB, even though that is not exactly representative.

I did that to avoid a major impact from Windows and the NAS. I know that, e.g., Windows small-file performance is ..... terrible.

I do not know what the effect of one big file versus many small ones is on pfSense throughput, but I assume that the number of packets has more impact on pfSense than the number of files.

-

@louis2 said in Does upgrading to a modern 10G NIC make sense?:

CPU

Core(TM) i5-6600K CPU @ 3.50GHz

I'm guessing the results actually scale quite well with CPU performance in such a test. I'm seeing iperf results around 9 Gbit/s on my i5-11400 (VLAN to VLAN). Comparing the CPUs on cpubenchmark, I see roughly 30% better single-thread performance on my 11400 vs. your 6600K, which seems about right given your VLAN results...

https://www.cpubenchmark.net/compare/2570vs4233/Intel-i5-6600K-vs-Intel-i5-11400

BTW, I'm also running X520s, and have 4 cores assigned from that CPU.
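The estimate above is essentially linear scaling. A minimal sketch in Python, assuming routed throughput is bound by single-thread CPU performance (a rough assumption: NIC queues, rule sets and packet size also matter), using the ~9 Gbit/s and ~30% figures from this post; `scaled_throughput` is a hypothetical helper for illustration only.

```python
def scaled_throughput(reference_gbps: float, perf_ratio: float,
                      line_rate_gbps: float = 10.0) -> float:
    """Scale a reference result by relative single-thread performance, capped at line rate.

    perf_ratio = (reference CPU score) / (target CPU score); assumes linear scaling.
    """
    return min(line_rate_gbps, reference_gbps / perf_ratio)

# i5-11400 at ~9 Gbit/s, roughly 1.30x the single-thread score of the i5-6600K.
print(f"{scaled_throughput(9.0, 1.30):.1f} Gbit/s")  # 6.9 Gbit/s
```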

-

I just do not understand what the limiting factor is. The complexity and number of the rules when sending data towards the NAS is higher than from the NAS to the PC. That might be a reason. However, it cannot be the main reason, since PC-to-NAS transfers are also far from 10G when pfSense is not involved.

So I do not know whether the NAS or the PC is the main reason for the 'slow' transfer. Given that writing is always slower than reading, the chance that the NAS is the main factor seems a bit higher.

I should add that the NAS interface stack is a lot more complex than the PC interface stack, and that the PC is equipped with a ConnectX4 and the NAS with a ConnectX3. Whether that matters, I do not know.

-

Yeah, I would definitely run an iperf test to eliminate disk reads and SMB issues.

That also means you can try parallel streams to see whether it's a core/queue issue in the firewall, though that's unlikely IMO with that CPU.
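A minimal sketch of such a test in Python, assuming iperf3 is installed on the PC and `iperf3 -s` is running on the NAS. It uses the standard iperf3 client flags (`-c` server, `-P` parallel streams, `-t` duration, `-R` reverse direction, `-J` JSON output) and reads the receiver-side total from the JSON report. `run_iperf3`, `received_gbps` and `compare` are hypothetical helpers, not part of iperf3.

```python
import json
import subprocess

def run_iperf3(server: str, streams: int = 1, seconds: int = 10,
               reverse: bool = False) -> dict:
    """Run the iperf3 client against `server` and return its parsed JSON report."""
    cmd = ["iperf3", "-c", server, "-P", str(streams), "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")  # server (NAS) sends, client (PC) receives
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)

def received_gbps(report: dict) -> float:
    """Total TCP throughput seen by the receiver, summed over all streams, in Gbit/s."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9

def compare(server: str, stream_counts=(1, 4), seconds: int = 10) -> None:
    """Print PC->NAS and NAS->PC throughput for each stream count."""
    for streams in stream_counts:
        for reverse in (False, True):
            report = run_iperf3(server, streams=streams, seconds=seconds, reverse=reverse)
            direction = "NAS -> PC" if reverse else "PC -> NAS"
            print(f"{direction}, {streams} stream(s): {received_gbps(report):.2f} Gbit/s")
```

Usage, with a placeholder address: `compare("192.0.2.10")`, once with the PC port routed through pfSense and once with the port in the NAS VLAN. If 4 streams are clearly faster than 1 only in the routed case, that points toward a per-core/queue limit in the firewall rather than the disks.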

-

@louis2 said in Does upgrading to a modern 10G NIC make sense?:

However that can not be the main reason since the transfers PC to NAS are also far from 10G when pfSense is not involved.

Sorry, you are right, I clearly didn't read the graphs (or rather the labels)... You clearly have more or less the same performance in both scenarios. Testing with iperf will probably give you more realistic data on the actual throughput.

One difference between NAS-to-PC and PC-to-NAS is the cache you may have in the NAS. Whilst the PC is likely reading the file from the SSD before sending, the NAS may be reading from cache, completely bypassing the SSD...

-

Yep, TrueNAS uses ZFS and a big RAM cache; however, the NVMe SSD should be ... fast enough to write at 10G ... I think and hope. However, I must admit that SSDs are nowhere near as fast as advertised when you are writing larger amounts of data...

It is a 4TB WD_BLACK SN850X, not the worst SSD....
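To put the SSD question in numbers: a 10 Gbit/s link carries at most 1.25 GB/s, so the NAS SSD has to sustain roughly that as writes for the whole transfer. A small Python sketch; the 0.99 efficiency is an assumption (roughly the TCP payload share of a 9000-byte MTU frame on the wire) and it ignores iSCSI and ZFS overhead. The 100 GB file size is hypothetical.

```python
def link_to_mb_per_s(link_gbps: float, efficiency: float = 1.0) -> float:
    """Convert a link rate in Gbit/s to MB/s (10**6 bytes/s), scaled by protocol efficiency."""
    return link_gbps * 1e9 * efficiency / 8 / 1e6

def seconds_to_write(size_gb: float, rate_mb_per_s: float) -> float:
    """Time to write size_gb gigabytes (10**9 bytes) at a sustained rate given in MB/s."""
    return size_gb * 1000 / rate_mb_per_s

raw = link_to_mb_per_s(10)                         # 1250 MB/s
payload = link_to_mb_per_s(10, efficiency=0.99)    # assumed ~99% TCP payload with jumbo frames
print(f"raw {raw:.0f} MB/s, payload about {payload:.1f} MB/s")
print(f"hypothetical 100 GB file at line rate: {seconds_to_write(100, raw):.0f} s")  # 80 s
```

So, once any fast write cache on the SSD is exhausted, it still has to sustain about 1.2 GB/s of writes to keep up with a saturated 10G link.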