25.03 beta - Bufferbloat / FQ CoDel issues

-

@RobbieTT

Yeah, interesting...

If possible, I’d repeat the tests on version 24.11. Do you still have an old boot environment? Just in case the issue turns out to be caused by some changes on the provider’s side.

-

@w0w

Ok, switched back to 24.11 and ran the Apple tool again:

    rob@Smaug ~ % networkQuality
    ==== SUMMARY ====
    Uplink capacity: 90.237 Mbps
    Downlink capacity: 805.436 Mbps
    Responsiveness: High (33.661 milliseconds | 1782 RPM)
    Idle Latency: 12.625 milliseconds | 4752 RPM
    rob@Smaug ~ %

The Responsiveness score returns to 'High' again.
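For anyone comparing runs: the RPM figures in that output look like the latency simply re-expressed as round trips per minute. A quick sketch to check this against the two values above. The function name is made up for illustration, and the formula is inferred from the numbers rather than taken from Apple's documentation.

```python
def rpm_from_latency_ms(latency_ms: float) -> float:
    """Round trips per minute for a given round-trip latency in milliseconds.

    Assumption: RPM = 60,000 ms per minute / latency in ms.
    """
    return 60_000 / latency_ms


# Values copied from the networkQuality summary above.
for label, latency_ms in [("Responsiveness", 33.661), ("Idle Latency", 12.625)]:
    rpm = round(rpm_from_latency_ms(latency_ms))
    print(f"{label}: {latency_ms} ms -> {rpm} RPM")
```

Both results match the tool's output (1782 and 4752 RPM), so a lower RPM under load corresponds directly to higher loaded latency.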

I find it perplexing that the older firmware with single-core PPPoE is, in this regard, working better than multiple cores with if_pppoe. It was a valid idea to double check again though.

Edit: Scratch the above for now as I think I found a misplaced patch being applied when it should not have been. This may have polluted my real-world experience and the testing....

-

I'm also starting to recall and analyze a bit what's going on with these traffic limiters. It's actually quite interesting that I'm seeing packet drops on the PPPoE upload, even though I haven’t set any actual bandwidth limit. It's configured to the maximum. Still, under load—though it's actually below 1 Gbit/s—I’m seeing drops specifically on the upload, on PPPoE using the new backend. I haven’t tested it yet on the old backend. However, I did test it on the second provider (which is behind triple NAT through ROOter using a 5G mobile network). Yes, I have Multi-WAN, but the second provider is only used for failover. So... either I didn’t notice, or under the same test conditions as before, I’m not seeing any drops at all on the second WAN, which is ~200/~50Mbit/s. Obviously, the same limiters are in place, and the bandwidth cap is still 1 Gbit/s, but logically, it shouldn't be active in either case, right?

Edit: just tested using the old PPPoE backend, same drops on the upload pipe.

-

@w0w

Some of your fq_codel settings are really demanding though. With a usual latency variance over the internet of around ±1ms or more (when unloaded) and with a usual setting of 5ms on fq_codel, you have a setting of 1µs. That's quite brutal, I guess, and probably more suited to use inside a data centre than over the net.
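A rough sketch of why a 1µs value is so aggressive, assuming the setting in question is the CoDel target delay (the post does not name the parameter, so this is an assumption). CoDel starts dropping once the queueing delay has stayed above the target for a whole interval. The check below is a heavily simplified stand-in for that condition, not the dummynet implementation, and the delay samples are invented.

```python
def stays_above_target(sojourn_ms, target_ms):
    """Simplified CoDel trigger: True if the minimum queueing delay seen
    during one interval is above the target, i.e. every sample exceeded it."""
    return min(sojourn_ms) > target_ms


# Hypothetical per-packet queueing delays (ms) during one interval
# on a lightly loaded link.
window = [0.3, 1.2, 0.8, 2.1, 0.5]

print(stays_above_target(window, target_ms=5.0))    # False: 5 ms target is never exceeded
print(stays_above_target(window, target_ms=0.001))  # True: 1 µs target is always exceeded
```

With a 1µs target, even sub-millisecond queueing counts as a standing queue, so drops can start on a link that is nowhere near saturated.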

My router crashed in the early hours for no explicable reason, so my testing today was borked. Outside of testing or configuration changes it's my first ever hard crash of pfSense.

-

@RobbieTT said in 25.03 beta - Bufferbloat / FQ CoDel issues:

Some of your fq_codel settings are really demanding though

Those are new default settings, I think. I have seen something on redmine regarding it, but... Ignored it

@RobbieTT said in 25.03 beta - Bufferbloat / FQ CoDel issues:

My router crashed in the early hours for no explicable reason, so my testing today was borked

It just happens sometimes, any crash dumps available?

-

@w0w said in 25.03 beta - Bufferbloat / FQ CoDel issues:

@RobbieTT said in 25.03 beta - Bufferbloat / FQ CoDel issues:

Some of your fq_codel settings are really demanding though

Those are new default settings, I think. I have seen something on redmine regarding it, but... Ignored it

@RobbieTT said in 25.03 beta - Bufferbloat / FQ CoDel issues:

My router crashed in the early hours for no explicable reason, so my testing today was borked

It just happens sometimes, any crash dumps available?

Hi @w0w - I'm curious about this too. Where did you see that there might be new defaults on FQ CoDel parameters? Unless I missed it and that particular traffic shaping algorithm was changed / improved, 1us seems way too low. Thanks in advance.

-

@tman222 said in 25.03 beta - Bufferbloat / FQ CoDel issues:

Where did you see that there might be new defaults on FQ CoDel parameters?

https://redmine.pfsense.org/issues/16037

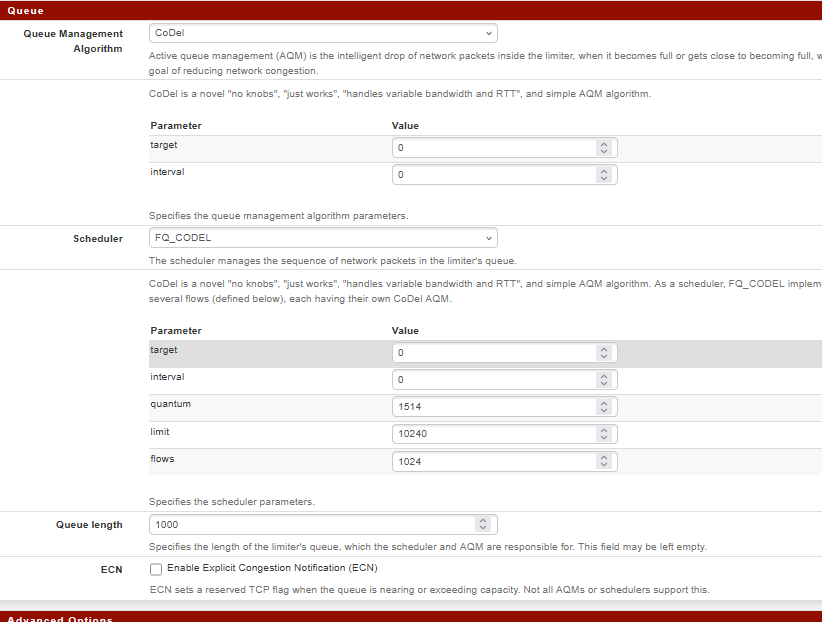

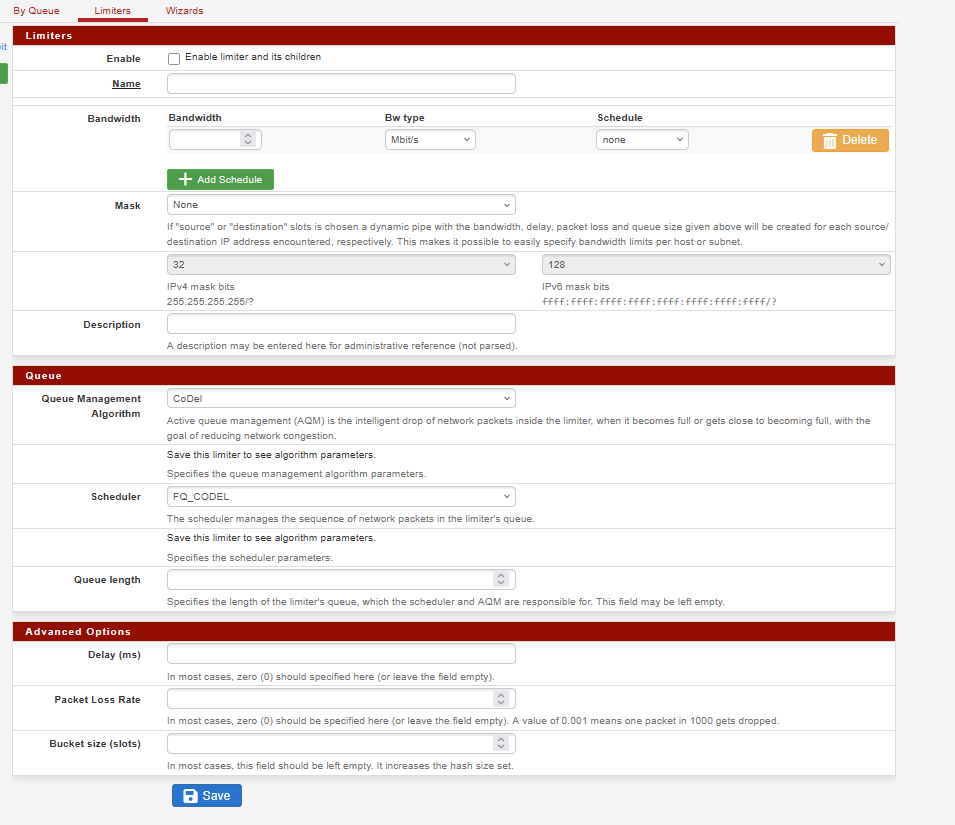

And this is what I see when I select an already created limiter — but you also don’t see any of those parameters when creating one...

And when you try to create the new one

I don't really think those are new defaults, because all the fq-codel man pages I can find on the web reference the same 5ms value that @RobbieTT mentioned.

-

@w0w said in 25.03 beta - Bufferbloat / FQ CoDel issues:

It just happens sometimes, any crash dumps available?

No crash log or anything of note in the usual logs. It just stopped doing its stuff.

-

@w0w said in 25.03 beta - Bufferbloat / FQ CoDel issues:

@tman222 said in 25.03 beta - Bufferbloat / FQ CoDel issues:

Where did you see that there might be new defaults on FQ CoDel parameters?

And this is what I see when I select an already created limiter — but you also don’t see any of those parameters when creating one...

I don't really think those are new defaults, because all the fq-codel man pages I can find on the web reference the same 5ms value that @RobbieTT mentioned.

The defaults can be messed up and showing zero, according to the redmine. The pfSense manual still has the correct defaults listed.

You do see the parameters when creating a new one, but they do not appear until you set and save that page. If you look closely at your screenshot, below Scheduler: FQ_CODEL, you will see this note:

Save this limiter to see algorithm parameters.

Caution, coffee may be hot etc.

It catches many of us out when we haven't set a new one in ages. It's a weird UI human factor fail thing and I have no idea why pfSense makes it so complicated compared to other routers.

As Douglas Adams would have it: "It's a black panel with a black button that lights up black when you press it..."*

*Hotblack's ship, when he was spending a year dead, for tax reasons.

-

@RobbieTT said in 25.03 beta - Bufferbloat / FQ CoDel issues:

Caution, coffee may be hot etc.

It catches many of us out when we haven't set a new one in ages.

Absolutely. Of course, that doesn’t change the fact that no one expects the default parameters to have values different from those stated in the documentation — or at the very least, everyone is used to trusting that those parameters actually exist and are being applied. I just didn’t check them myself, of course.

-

@w0w

No it doesn't and until your link to the redmine I had no idea it was a thing. It doesn't look like Netgate has addressed the issue, presumably because it is both intermittent and potentially unnoticed when new limiters are set.

-

@RobbieTT said in 25.03 beta - Bufferbloat / FQ CoDel issues:

Working fast.com harder doesn't really change my results. Presumably because the download and upload sessions are sequential:

They are.

The reason is: a massive upload will not only saturate the upload pipe, but also use "a lot of" the download pipe.

After all, every TCP packet (about 1500 bytes in size) has to be acknowledged by a downstream "ACK", which will have the size of a minimal TCP ACK packet, or 46 bytes.
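The ratio claimed here can be checked with a quick calculation. This is only a sketch under the post's own assumptions (one 46-byte ACK per 1500-byte packet); the uplink figure is borrowed from the networkQuality result earlier in the thread, purely for illustration.

```python
DATA_PACKET_BYTES = 1500  # size of a full data packet, as stated in the post
ACK_BYTES = 46            # size of a minimal ACK, as stated in the post
UPLINK_MBPS = 90.237      # uplink capacity from the networkQuality run above

# Assumption from the post: exactly one ACK per data packet.
ack_ratio = ACK_BYTES / DATA_PACKET_BYTES
ack_downstream_mbps = UPLINK_MBPS * ack_ratio

print(f"ACK bytes per data byte: {ack_ratio:.1%}")               # 3.1%
print(f"ACK traffic for a full upload: {ack_downstream_mbps:.2f} Mbps")  # 2.77 Mbps
```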

This means you would lose about 3%.

-

@Gertjan said in 25.03 beta - Bufferbloat / FQ CoDel issues:

@RobbieTT said in 25.03 beta - Bufferbloat / FQ CoDel issues:

Working fast.com harder doesn't really change my results. Presumably because the download and upload sessions are sequential:

They are.

The question was about the difference between a test format that runs upload and download stress tests in parallel and one that runs them sequentially. It had nothing to do with packet sizes, TCP or overheads.

Your description of how every packet is ack'ed or the actual size of packets is not that relevant anymore; time has moved on in the age of IPv6 TCP, IPv4 UDP or even QUIC. The majority of my traffic is IPv6, including for the testing above, with many 'continuing' packets per Ack.
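To put rough numbers on the "many packets per Ack" point, here is a sketch for TCP over IPv6. It assumes a 1500-byte MTU and a bare ACK with no TCP options (40-byte IPv6 header plus 20-byte TCP header), and it ignores link-layer framing. The segments-per-ACK values are hypothetical, not measured on this link.

```python
MTU_BYTES = 1500
IPV6_HEADER_BYTES = 40  # fixed IPv6 header
TCP_HEADER_BYTES = 20   # minimal TCP header, no options (assumption)
ACK_BYTES = IPV6_HEADER_BYTES + TCP_HEADER_BYTES


def ack_overhead(segments_per_ack: int) -> float:
    """ACK bytes sent in the reverse direction per byte of data packets."""
    return ACK_BYTES / (segments_per_ack * MTU_BYTES)


# Hypothetical acknowledgement ratios.
for n in (1, 2, 10):
    print(f"{n:>2} segments per ACK -> {ack_overhead(n):.2%}")
```

The reverse-direction cost shrinks in proportion to how many segments each ACK covers, so a fixed per-packet percentage only holds when every packet is acknowledged individually.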

-

Humm.

"Packet size" determines speed. That is: the bigger and more numerous the packets, the faster the pipe fills up. IPv6, IPv4 or something else: that is close to irrelevant, imho.

-

No, speed is latency.

The 'pipe' is bandwidth.

The 'pipe' can be 'filled' with data, overheads, acks, padding and, unfortunately, the additional dead space of latency and buffering. A 'pipe' can be saturated well before it hits its bandwidth limits. This can be managed somewhat by techniques such as FQ_CoDel to shape throughput into the 'goodput' of the data we actually want to move.

But this thread is not about that, other than in exercising some existing methods of balancing them. It is about a shift in pfSense performance with the new pppoe backend that is in beta and the apparent impact on FQ_CoDel over multiple (active pppoe) cores.

The forum is full of threads where you have inserted yourself without understanding the topic, before you derail it by stating stuff that is both not relevant and often incorrect.

If you have nothing to offer on the subject, or no relevant question to ask, then try not to post. If you want to debate stuff of your choosing then start a thread on that topic in the correct place.

Hope that helps matters.

-

@RobbieTT said in 25.03 beta - Bufferbloat / FQ CoDel issues:

methods of balancing them. It is about a shift in pfSense performance with the new pppoe backend that is in beta and the apparent impact on FQ_CoDel over multiple (active pppoe) cores

So it's only on the new backend?

-

Seems so or possibly an interaction with if_pppoe and something else within the new beta.

Regressing to 24.11 again and symptoms vanish. Reverting to the old backend on the beta seems fine too (albeit with the old pppoe issues).

I had to work back again and re-test as I had the test diff patch applied with the revised beta, which cast some doubt on my results for a while. Fat-fingering in a config error when testing is something I try to avoid but you have to admit your mistakes.

I'm waiting for the next beta drop really, to see if the changes also impact the issues I see. Opening up all the cores may just be peeling back something that was already latent and just masked by the old backend process.

I did do a couple of tests that suggest the upload fq_codel settings may need adjusting against a different workload for if_pppoe, but it is too early to be sure.

I'm also being nudged to try Kea again, as apparently it has matured a bit since its launch.