New PPPoE module (if_pppoe) causes high CPU usage and lag

-

Current setup :

pfSense CE 2.8.0 (UFS install) virtualized on Proxmox.

No device passthrough used.

Host is an i5-8500T with 96 GB of RAM, NVMe disks and a dual X710 10 Gbps SFP card.

VM has 4 cores, 8 GB of RAM and 3 virtio 10 Gbps interfaces.

MTU is set to 9000 on the LAN interface and left at the default for the WAN.

My ISP is Bell Canada (3 Gbps) with a modem bypass using an XGS-PON SFP+ from ECI.

Now, the VM CPU usage used to be between 2% and 5% before I tried to enable if_pppoe, and now it stays steady at 20% without any major network load. It used to go up to 20-30% under more intense network activity, but now it goes up to 70-90%.

I know this isn't scientific, but browsing is lagging and accessing the pfSense web UI is also pretty laggy at times (something I never had before). So I guess I must have something set wrong, but I can't figure out what. Looking at the regular logs, I don't see anything different or obvious.

This returns nothing under a speedtest / load test:

dtrace -n 'fbt::if_sendq_enqueue:return / arg1 != 0 / { stack(); printf("=> %d", arg1); }'

I do have the following tuning set:

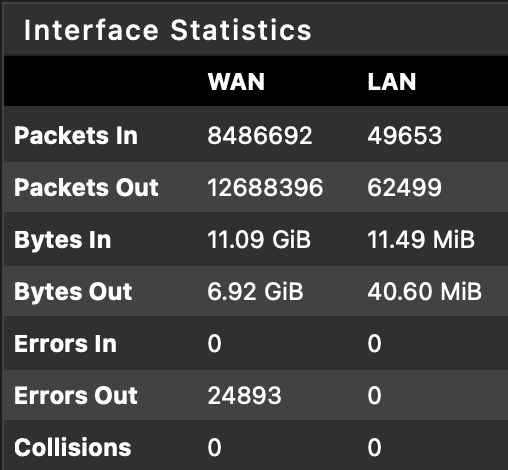

net.link.ifqmaxlen="2048"
net.isr.defaultqlimit="2048"
net.isr.dispatch="deferred"
net.isr.maxthreads="-1"
net.isr.bindthreads="1"
legal.intel_ipw.license_ack="1"
legal.intel_iwi.license_ack="1"
machdep.hyperthreading_allowed="0"

Running Speedtest from the VM itself, I can see the error count increase drastically in the interface statistics:
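
For anyone who wants to put numbers on that error count, here is a minimal sketch (not something posted in the thread). It assumes the usual FreeBSD `netstat -ibn` layout, where every row ends with the eight columns Ipkts, Ierrs, Idrop, Ibytes, Opkts, Oerrs, Obytes and Coll, and it assumes the WAN interface is named `pppoe0`, which may differ on your install. The function names are made up for illustration.

```python
import subprocess

# The eight counter columns that end each row of FreeBSD `netstat -ibn`
# output (assumed layout; adding -d would append a Drop column).
COUNTER_COLUMNS = ("ipkts", "ierrs", "idrop", "ibytes",
                   "opkts", "oerrs", "obytes", "coll")


def parse_counters(netstat_output, ifname):
    """Return the link-level counters for ifname, or None if not found."""
    for line in netstat_output.splitlines():
        fields = line.split()
        if len(fields) < 1 + len(COUNTER_COLUMNS) or fields[0] != ifname:
            continue
        tail = fields[-len(COUNTER_COLUMNS):]
        # Per-address rows print "-" for some counters; skip those rows
        # and keep the first fully numeric one (the <Link#N> row).
        if not all(value.isdigit() for value in tail):
            continue
        return dict(zip(COUNTER_COLUMNS, map(int, tail)))
    return None


def counter_delta(before, after):
    """Difference between two counter snapshots, key by key."""
    return {name: after[name] - before[name] for name in COUNTER_COLUMNS}


def sample(ifname="pppoe0"):
    """Take one snapshot by running netstat (FreeBSD/pfSense shell only)."""
    result = subprocess.run(["netstat", "-ibn"], capture_output=True,
                            text=True, check=True)
    return parse_counters(result.stdout, ifname)
```

Call `sample()` once before and once after a speed test and pass both results to `counter_delta`. A large `oerrs` delta relative to `opkts` would match the WAN out errors described in this thread.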

-

I found my issue !

I used to have an old VIP attached to the WAN interface.

It didn't cause any issues with MPD but ended up creating problems with if_pppoe.

VIP removed, all good now :)

-

Interesting. Does that mean the new PPPoE driver is not HA compatible? Was your VIP CARP or ALIAS?

-

@mr_nets Alias

-

Using an ALTQ traffic shaper also causes a large number of WAN out errors - the workaround is to change to limiters.

-

I’m experiencing the same issue. However, I use those VIPs (aliases) and can't remove them, so I’m stuck with MPD for now.

Is there any fix planned?

-

Something is definitely going on. Not sure whether it's really related to your issue.

https://redmine.pfsense.org/projects/pfsense/search?utf8=%E2%9C%93&q=Vip

-

@w0w My issue also appears to be related to "Problems after enabling if_pppoe". I'm experiencing the exact same behavior described there.

Let’s hope we won’t have to wait a year for https://redmine.pfsense.org/issues/16235 to make its way into CE...

-

@cust

You don't need to wait for anything.

You can apply this patch via the System Patches package; just use commit ID 62b1bc8b4b2606d3b20a48a853ef373ff1d71e26