CARP Setup Constant Listen Queue Log Entries And Traffic Dropping

-

I have a CARP setup on the latest version of pfSense plus with Netgate 1541 firewalls in production use. Things have been working flawlessly for literally years, through a ton of configuration changes.

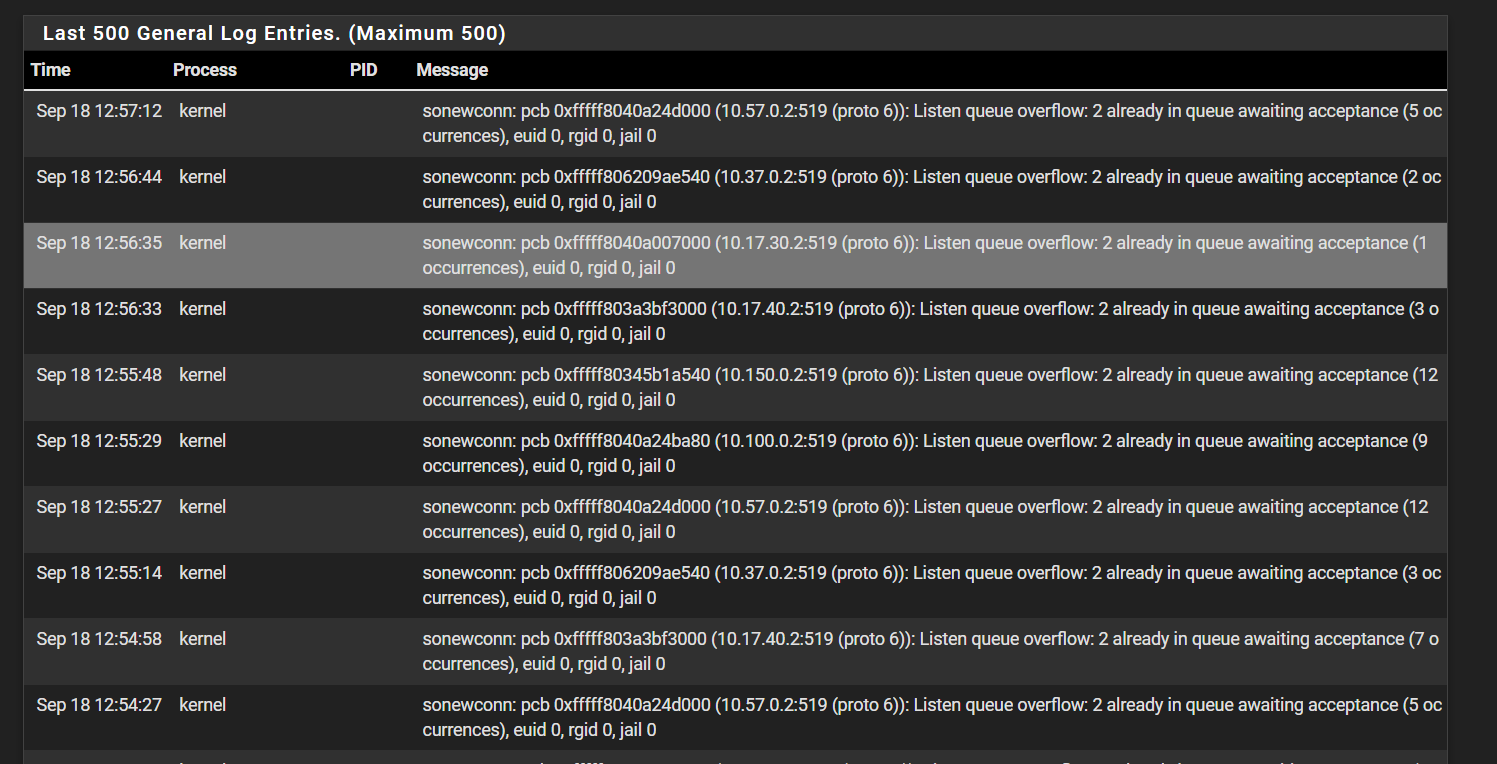

Today, I had to configure a few more phase 2 entries on a VPN (we have many and this is a common thing I do frequently), after doing so and then changing a few firewall rules, my logs started getting flooded with the below image of Listen queue issues.

Traffic between VLANs stopped passing (at random), certain external traffic stopped working, etc...

I failed over to the backup CARP firewall and things are working as they should now. But even after a reboot, I am still seeing constant log entries for this.

Any clue what would cause something like this?

-

As another update, I realized now that this primary firewall did NOT reboot when I asked it too, it just hung and never proceeded.

I have consoled in and tried rebooting there and it's stuck on Stopping package WireGuard and has been for several minutes now, the webGUI remains responsive.

If it goes on too long I will pull the plug and go from there, maybe a reboot will resolve this, but this is some of the weirdest behavior I've seen with pfSense.

I've been managing them for a living for about a decade now, a lot of them in production, and very familiar with more advanced configurations like CARP setups, and basically have never seen any major issues, so this is bizarre indeed.

If needed I can contact Netgate support directly, but hoping we can all maybe try to investigate here since I prefer forums for that and so far the secondary firewall is working perfectly.

-

I'm wondering if an SSD is starting to fail on the primary and that was the issue. It is configured in a mirror, but after reboot, ada0 started having to resynchronize, in theory it shouldn't cause an issue to have just 1 SSD have issues, but still something notable.

-

Were you able to resolve this?

What do you have running on port 519?

-

@stephenw10 that's what I am wondering as well, nothing should be listening on that port that I am aware of.

So far, after the reboot (which stopping WireGuard got hung on requiring a force power off) we are back on the primary firewall and zero issues, nothing in the logs related to this error either.

I'm honestly lost as to what would have caused this issue. It's also odd that again the secondary didn't have the issue and it's configuration is 100% identical to this one other than your normal interface settings being different and WireGuard since it's manually configured.

I suspect WireGuard was the issue, since it wouldn't stop, but that would be odd given the errors I was seeing and the fact that WireGuard is on default port.

-

Try running

sockstat -l4and see what (if) anything is listening there now. -

@stephenw10 dhcpd is listening on that port, which makes sense why I was seeing it on so many of our IPs at the same time.

Forgot to check sockstat like an idiot too lol.

So that's DHCP failover, which is odd, not sure what would be triggering flooding of that port.

-

Oh of course!

In which case it implies dhcpd/Kea stopped listening on the port. I would have expected something to be logged for that.

-

@stephenw10 Yeah good point, this is odd indeed.

I don't have service watchdog setup on this unit yet, but you know what, I saw a few dhcpd crashes on the same pfSense version at another site that service watchdog caught and restarted.

Weird behavior, but setting up the watchdog should fix it.

I'm wondering if there is a new bug with the dhcp daemon causing this?

I am using ISC not KEA to be clear.

Not sure I have enough log retention to go back and see if there was anything in the DHCP service entries. Though if the daemon crashed wouldn't I expect to see that in the System Logs and not DHCP specific ones?

-

I'm not aware of any new issues in isc-dhcpd.

It depends how it failed. If it was unable to service requests but was still running it might log an error. If it was just so busy it stopped responding you might see that in the logs. Or, yes, if it just crashed out you might see that in the main system log.

-

@stephenw10 Well the good news is I haven't been able to reproduce this at all.

But also wish I knew what the actual cause was lol. This was enough to check off my "incident report" but would be nice to dig deeper, just not sure where to go from here with the logs I have so I guess that's that.

I've made some changes similar to what I did when this happened (aliases, rules, IPsec tunnels, etc...) and nothing went wrong.