What is the biggest attack, in Gbps, that you have stopped?

-

Dude - it crashes. They know. Have you tested 2.2.2?

-

Not yet.

-

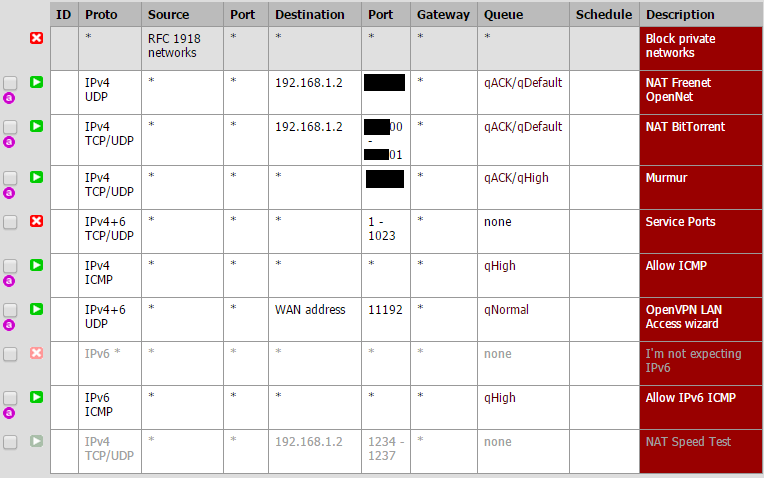

I would assume that an application DDoS would be impossible if the application only needed to process the packet header before knowing a packet's legitimacy. There are quite a few superfluous firewall rules hidden behind pfSense's pretty GUI.

Harvey66, can you look at your queues and see … well, anything interesting? (Nice server btw!)

Supermule, are you spoofing IPs to achieve this? Perhaps confusing the firewall's states? Meh, that'd be too easy. Malformed packets? IPv6? Packets with knives duct-taped to them?

I still hope this is a case of misconfiguration, but I am doubting it.

-

Yes, spoofed IPs and different packet sizes as well.

Both TCP and UDP if needed. Also IPv6 if needed to test…

-

I'm not home to get my rules, but I have only a few WAN rules for NAT and a bunch of floating rules for traffic shaping. About the same number of floating rules as what is created by default when you run through the Traffic Shaping Wizard.

I did not check my queues after the test for any dropped packets. During most of the first few tests, pfSense was unresponsive, so there was no way for me to get information as it was happening. During the bandwidth DDoS, pfSense was perfectly responsive, even though the CPU was at 100%.

-

Floating actually has fewer rules than stock QoS. Some of the games I do not plan to ever have and some rules overlapped in ports for correctness reasons, but I didn't care to have duplicates and some also had separate rules for UDP and TCP that could be combined.

-

What proxy state do you use for the rules?

Even if it has the "none" setting, it crashes…

-

I use the default, which seems to be "Keep State". I won't use SYN Proxy because it breaks the RWIN, limiting it to 64KB.

-

Why is that an issue?

-

RWIN is how much in-flight data you can have, and at 100Mb/s, 64KB is not much data. At 100Mb/s, the max latency you can have before your bandwidth is reduced is about 5ms. At 10ms, your maximum bandwidth is about 50Mb/s. A system 30ms away from me would at max get roughly 16.6Mb/s from me if they initiated the connection.

Remember, SYN proxy only affects incoming connections, so most users, who make outgoing connections, won't see this issue. But if you're running any TCP services that listen, they will be affected.
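To make the arithmetic above concrete, here is a minimal Python sketch. It assumes the simplest model, where a sender can have at most one full window in flight per round trip, and it ignores packet loss, header overhead, and congestion control. The function name `max_throughput_mbps` is just something I made up for illustration.

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on TCP throughput: one full window per round trip."""
    bits_per_round_trip = window_bytes * 8
    rtt_seconds = rtt_ms / 1000
    return bits_per_round_trip / rtt_seconds / 1_000_000


# Largest receive window that fits in the 16-bit field without scaling.
UNSCALED_WINDOW = 65_535

if __name__ == "__main__":
    for rtt in (5, 10, 30):
        limit = max_throughput_mbps(UNSCALED_WINDOW, rtt)
        print(f"{rtt:>2} ms RTT -> at most {limit:.1f} Mb/s")
```

The results land close to the rounded figures in the post: a bit over 100Mb/s at 5ms, a bit over 50Mb/s at 10ms, and somewhere in the high teens at 30ms.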

-

Doesn't the client scale the RWIN value by itself, based on the latency, to keep maximum throughput on the connection?

Even when SYN Proxy is used as the state type in the FW?

-

The old RWin only supported up to 64KB; the new extended RWin, which supports up to 1GB, is configured during the TCP handshake. Since SYN proxy doesn't allow the receiver to participate in the handshake, SYN proxy assumes the 64KB limit because it can't know whether the target will actually support the newer RWin.

Or something along those lines. The main issue is that the increase from 64KB to 1GB was a band-aid fix. Since the field is only 16 bits, they use a multiplier negotiated during the handshake, but only newer TCP stacks understand the multiplier.
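For anyone who wants to see the multiplier in numbers, here is a rough Python sketch of how the 16-bit window field and the shift count combine. The function name `effective_window` is hypothetical; the cap of 14 on the shift count is from RFC 1323, which is why the largest scaled window is about 1GB.

```python
MAX_SHIFT = 14  # RFC 1323 limits the window scale shift count to 14


def effective_window(window_field: int, shift: int) -> int:
    """Receive window in bytes: the 16-bit header field shifted left."""
    if not 0 <= window_field <= 0xFFFF:
        raise ValueError("window field must fit in 16 bits")
    if not 0 <= shift <= MAX_SHIFT:
        raise ValueError("shift count must be between 0 and 14")
    return window_field << shift


if __name__ == "__main__":
    # No scaling negotiated, which is the case the SYN proxy discussion is about.
    print(effective_window(0xFFFF, 0))
    # Largest window that scaling allows.
    print(effective_window(0xFFFF, MAX_SHIFT))
```

With a shift of 0 you are stuck at the classic 65,535 bytes, which is the situation described above when the proxy completes the handshake on the server's behalf.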

http://tools.ietf.org/html/rfc1323

The scale factor is carried in a new TCP option, Window Scale. This option is sent only in a SYN segment (a segment with the SYN bit on), hence the window scale is fixed in each direction when a connection is opened.

-

So if one side is using delayed ACKs or doesn't send them at all, then it fills the stack until it's dropped by RTT?

And thereby fills the pipe quickly and grinds it to a halt if the PPS is big enough?

-

If the other side does not send ACKs in a timely fashion, the un-ACKed data gets resent. After n seconds, the TCP connection times out if no ACKs are received. But yes, the sender should not have more outstanding data in flight than the agreed-upon amount. Technically the sender could send more than the RWin, but it would be a bad actor.

-

Even if it has the "none" setting, it crashes…

Not true, though you have to actually correctly put in rules so no states are created (both in and out directions).

There are a variety of tuning options new to 2.2.1 under System>Advanced, Firewall/NAT, to granularly control timers, which greatly helps with DDoS resiliency. TCP first in particular matters for SYN floods; turning that down to 3-5 seconds or so is probably a good idea if you don't care about people with really poor connectivity.
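A back-of-the-envelope Python sketch of why that timer matters. It assumes each spoofed SYN creates one state entry that lives for the full "TCP first" timeout, and it ignores adaptive timeouts and anything else the firewall does under load. The flood rate and table limit below are made-up numbers for illustration, not measurements from this thread, and 120 seconds is what I understand the stock pf value for this timer to be, so treat that as an assumption too.

```python
def steady_state_entries(syn_per_sec: float, first_timeout_s: float) -> float:
    """Little's law: entries held = arrival rate x lifetime of each entry."""
    return syn_per_sec * first_timeout_s


def fits(syn_per_sec: float, first_timeout_s: float, table_limit: int) -> bool:
    """True if the half-open states alone stay within the state table limit."""
    return steady_state_entries(syn_per_sec, first_timeout_s) <= table_limit


if __name__ == "__main__":
    flood_rate = 50_000        # hypothetical spoofed SYNs per second
    table_limit = 1_000_000    # hypothetical state table size
    for timeout in (120, 30, 5, 3):
        held = steady_state_entries(flood_rate, timeout)
        verdict = "fits" if fits(flood_rate, timeout, table_limit) else "overflows"
        print(f"timeout {timeout:>3}s -> {held:,.0f} states ({verdict})")
```

The point is only that the number of half-open states scales linearly with the timeout, so cutting the timer cuts the table pressure by the same factor.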

-

I do not care about those people.. :p

I find life is so much simpler once we stop caring /joke

I'll install 2.2.2 about a week after it goes live.

-

Nice thread but also some overkill statements from people :D

- I was one of the first to locate this issue. Since then I've gained a lot more experience, and today I have an almost bulletproof setup against SYN floods.

Too much custom work to make a how-to guide at this moment, but looking forward to see the new corrections in 2.2.2

Will run some tests in the upcoming weeks.

-

Too much custom work to make a how-to guide at this moment, but looking forward to see the new corrections in 2.2.2

It would be helpful if you could point out a few of the important clues … perhaps the gifted people here could make their own full-blown how-to out of the info you provide.

-

Thanks for checking back in, lowprofile. Would definitely appreciate if you could just share some brief tips of your findings with people here. Enough others are interested that I think they'll run with it in doing more testing and putting together recommendations for specific scenarios. I'd like to put out a guide myself, just going to be a bit until I have enough time for that.

Too much custom work to make a how-to guide at this moment, but looking forward to see the new corrections in 2.2.2

Those new config options actually made it into 2.2.1; there are no changes in that regard from 2.2.1 to 2.2.2. There weren't any "corrections" technically, I guess, as nothing changed by default. We just exposed all those timer values for configuration since they're greatly helpful in some circumstances.

-

During part of the test, the incoming bandwidth was around 40Mb/s, and I was still getting packetloss to my Admin interface. The bandwidth DDOS was the only part of the DDOS where PFSense was responding correctly, the other parts of the DDOS that did not consume 100% of the bandwidth left it unstable.

You're using the traffic shaper, that's almost certainly what caused that.

For those messing with this and doing traffic shaping on the same box, all bets are off. ALTQ is not very fast at the scale of abuse we're talking about here, and queuing in general really complicates things. If you're looking to handle as big a DDoS as possible, you don't want to be running traffic shaping.