Iperf testing, same subnet, inconsistent speeds.

-

@andyrh said in Iperf testing, same subnet, inconsistent speeds.:

Did I miss something? The original post shows about 30k retries. That is a dirty connection. iperf has done its job and pointed to the problem.

For reference I have trouble getting a copy on all flash NetApps to run faster than about 2 to 3 Gb/s when doing a file copy with large files. These systems are running clean LACP 2x10Gb. In aggregate they easily exceed 10Gb, but when reading/writing to a single file system they are limited to how the file table works, block allocation is single threaded.

Assuming you are all flash, it is still consumer level HW not backed by plenty of cache. Windows and Linux are not optimized in a way to make file transfers super fast. Just a guess, but I doubt Synology NAS systems are actually highly optimized Linux systems. Meaning you are limited by other things in the OS and file system management.

Watch for the write cliff with SSDs. They all run at blazing speed then hit a cliff and performance falls off dramatically.

Networking and pfSense are a hobby, storage has fed the family for 20 years.

Exactly, that is very, very likely caused by the SFP+ -> RJ45 transceiver.

For the record: One of these NASes with 4 spinning drives in RAID 5/6 will do 112 MB/s (full GigE) easily in any somewhat sequential workload, even when copying thousands of files, as long as they are 1 MB+ in size and the drives do not get bogged down in file table updates.
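As a rough check on where a figure like 112 MB/s comes from, the arithmetic can be sketched as below. This is only a sketch under stated assumptions: untagged Ethernet frames, IPv4, a 1500-byte MTU, and TCP timestamps enabled (which gives the MSS of 1448 that iperf3 reports elsewhere in this thread). The constant and function names are made up for illustration.

```python
# Back-of-the-envelope TCP goodput on gigabit Ethernet.
# Assumptions (not confirmed by the thread): untagged frames, IPv4,
# MTU 1500, TCP timestamp option in use (12 bytes per segment).
LINE_RATE_BITS_PER_S = 1_000_000_000
MTU = 1500
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12  # preamble+SFD, header, FCS, inter-frame gap
IPV4_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMP_OPTION = 12


def tcp_goodput_bits_per_s(line_rate=LINE_RATE_BITS_PER_S):
    """Return the TCP payload rate in bits/s for full-size frames."""
    payload = MTU - IPV4_HEADER - TCP_HEADER - TCP_TIMESTAMP_OPTION  # 1448
    on_wire = MTU + ETHERNET_OVERHEAD  # 1538 bytes per frame on the wire
    return line_rate * payload / on_wire


goodput = tcp_goodput_bits_per_s()
print(f"{goodput / 1e6:.0f} Mbit/s")        # 941 Mbit/s
print(f"{goodput / 8 / 1e6:.1f} MB/s")      # 117.7 MB/s (decimal megabytes)
print(f"{goodput / 8 / 2**20:.1f} MiB/s")   # 112.2 MiB/s (binary megabytes)
```

Under those assumptions, about 941 Mbit/s of payload is the practical ceiling, which is roughly 112 when counted in binary megabytes per second.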

-

The NAS and PC are connected to a dumb switch. The SFP connection doesn't come into play when the PC is talking to the NAS.

-

Are you sure? Why do we have only the ix1 info then? That could be connected to the switch, no?

-

@stephenw10 said in Iperf testing, same subnet, inconsistent speeds.:

Are you sure?

No, not really.. But it seems more logical.. And if the SFP was problematic, he would have seen that issue when testing between PC and NAS.

Where is the nas connected if the pc is directly connected to the pfsense.. He makes no mention of bridge, etc. And that the pc and nas are on the same network.

The port on his pfsense is the uplink from the switch..

And when stated that pfsense is not part of the conversation between pc and nas he agreed, etc. So to me the pc and nas are connected to the switch, like any normal setup.

Look at his tests between pc and nas - his wire speed is not the issue for his slow file copies.

-

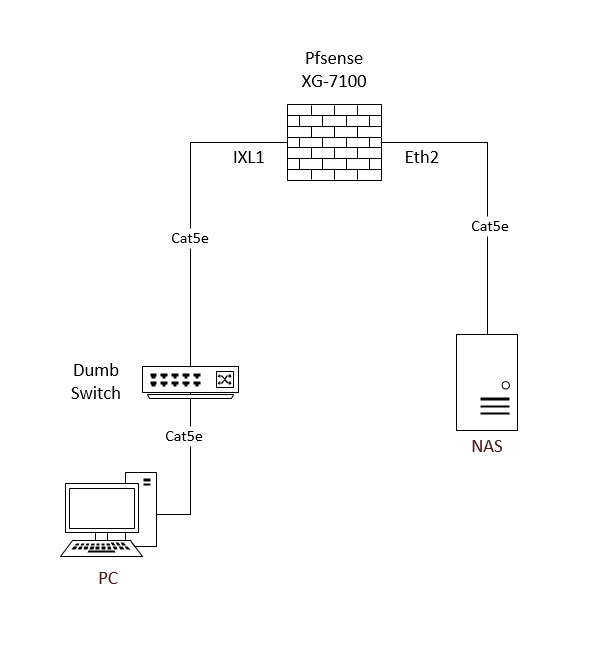

I should probably clarify, My setup is not exactly standard.

My NAS is connected to the built in marvel switch on my XG-7100. My PC is connected to a 5 port dumb switch, which is then connected to the SFP+ port on pfsense.

Here's a drawing so we don't get confused.

-

Just to add another data point: I tried to scp a file of random junk, both 500MB and 1GB, to both the Synology flash disk and the RAID array and got the same speeds:

1GB scp to RAID array:

500MB to /tmp :

The original iperf3 test I did from synology I actually copied the results from the server side, so it omitted the retries. Here is the PC to NAS and NAS to PC iperf tests again:

PC to NAS

    NAS# iperf3 -V -s -p 4444
    PC# iperf3 -V -c 10.10.1.3 -p 4444

Output from PC:

    iperf 3.7
    Linux PC 5.11.0-41-generic #45~20.04.1-Ubuntu SMP Wed Nov 10 10:20:10 UTC 2021 x86_64
    Control connection MSS 1448
    Time: Tue, 14 Dec 2021 18:45:40 GMT
    Connecting to host 10.10.1.3, port 4444
          Cookie: v3oma5g64ia6jxp36hk4grhdfn2sb3j6xval
          TCP MSS: 1448 (default)
    [  5] local 10.10.0.2 port 35896 connected to 10.10.1.3 port 4444
    Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
    [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
    [  5]   0.00-1.00   sec   114 MBytes   957 Mbits/sec    0    355 KBytes
    [  5]   1.00-2.00   sec   112 MBytes   938 Mbits/sec    0    355 KBytes
    [  5]   2.00-3.00   sec   113 MBytes   946 Mbits/sec    0    373 KBytes
    [  5]   3.00-4.00   sec   112 MBytes   939 Mbits/sec    0    373 KBytes
    [  5]   4.00-5.00   sec   112 MBytes   939 Mbits/sec    0    373 KBytes
    [  5]   5.00-6.00   sec   112 MBytes   942 Mbits/sec    0    393 KBytes
    [  5]   6.00-7.00   sec   113 MBytes   947 Mbits/sec    0    393 KBytes
    [  5]   7.00-8.00   sec   112 MBytes   940 Mbits/sec    0    410 KBytes
    [  5]   8.00-9.00   sec   112 MBytes   941 Mbits/sec    0    410 KBytes
    [  5]   9.00-10.00  sec   112 MBytes   941 Mbits/sec    0    410 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    Test Complete. Summary Results:
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.00  sec  1.10 GBytes   943 Mbits/sec    0             sender
    [  5]   0.00-10.00  sec  1.10 GBytes   941 Mbits/sec                  receiver
    CPU Utilization: local/sender 1.5% (0.0%u/1.5%s), remote/receiver 18.7% (0.8%u/17.8%s)
    snd_tcp_congestion cubic
    rcv_tcp_congestion cubic

    iperf Done.

NAS to PC

    PC# iperf3 -V -s -p 4444
    NAS# iperf3 -V -c 10.10.0.2 -p 4444

Output from NAS:

    iperf 3.6
    Linux NAS 4.4.180+ #42218 SMP Mon Oct 18 19:16:01 CST 2021 aarch64
    Control connection MSS 1448
    Time: Tue, 14 Dec 2021 18:46:41 GMT
    Connecting to host 10.10.0.2, port 4444
          Cookie: 5cn3v22hqr5wpyglpotmt2g63zf7kfxyntov
          TCP MSS: 1448 (default)
    [  5] local 10.10.1.3 port 41532 connected to 10.10.0.2 port 4444
    Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
    [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
    [  5]   0.00-1.00   sec   114 MBytes   957 Mbits/sec    0    375 KBytes
    [  5]   1.00-2.00   sec   111 MBytes   935 Mbits/sec    0    375 KBytes
    [  5]   2.00-3.00   sec   112 MBytes   941 Mbits/sec    0    375 KBytes
    [  5]   3.00-4.00   sec   112 MBytes   941 Mbits/sec    0    375 KBytes
    [  5]   4.00-5.00   sec   111 MBytes   934 Mbits/sec    0    375 KBytes
    [  5]   5.00-6.00   sec   112 MBytes   943 Mbits/sec    0    375 KBytes
    [  5]   6.00-7.00   sec   111 MBytes   935 Mbits/sec    0    375 KBytes
    [  5]   7.00-8.00   sec   112 MBytes   941 Mbits/sec    0    375 KBytes
    [  5]   8.00-9.00   sec   112 MBytes   941 Mbits/sec    0    375 KBytes
    [  5]   9.00-10.00  sec   112 MBytes   940 Mbits/sec   11    314 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    Test Complete. Summary Results:
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.00  sec  1.10 GBytes   941 Mbits/sec   11             sender
    [  5]   0.00-10.03  sec  1.09 GBytes   936 Mbits/sec                  receiver
    CPU Utilization: local/sender 7.1% (0.0%u/7.1%s), remote/receiver 16.2% (2.0%u/14.3%s)
    snd_tcp_congestion cubic
    rcv_tcp_congestion cubic

    iperf Done.

NAS to PC did have a few retries, nothing more than 20 per interval. Done a second time I get only 10-20 retries over all the intervals.

I am at a loss for what the bottleneck is here. I get the same speeds to the Synology onboard flash as to the RAID array, but it's not full gigabit, yet iperf shows the network is not the problem?

It seems if I use any actual application that transfers data (ssh, rsync, smb) then I don't see full gigabit...
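One way to check whether storage, rather than the network, limits these transfers is to time sequential writes locally on the receiving device. The following is a minimal sketch, assuming Python 3 is available there (not confirmed for the Synology). The function name `measure_write_throughput`, the 8 MiB chunk size, and the `/tmp` target are illustrative choices, not part of any tool used in this thread.

```python
import os
import tempfile
import time


def measure_write_throughput(directory, total_mib=1024, chunk_mib=8):
    """Write about total_mib MiB into a temp file in `directory`.

    Each chunk is flushed and fsync'ed so the timing reflects the device
    rather than the page cache. Returns one MiB/s sample per chunk.
    """
    chunk = os.urandom(chunk_mib * 1024 * 1024)  # random data, like the scp test file
    samples = []
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as handle:
            written = 0
            while written < total_mib:
                start = time.perf_counter()
                handle.write(chunk)
                handle.flush()
                os.fsync(handle.fileno())
                elapsed = max(time.perf_counter() - start, 1e-9)
                samples.append(chunk_mib / elapsed)
                written += chunk_mib
    finally:
        os.remove(path)  # always clean up the test file
    return samples


if __name__ == "__main__":
    # Hypothetical target directory; point it at the volume under test.
    rates = measure_write_throughput("/tmp", total_mib=256)
    print(f"first chunk: {rates[0]:.0f} MiB/s, last chunk: {rates[-1]:.0f} MiB/s")
    print(f"slowest chunk: {min(rates):.0f} MiB/s")
```

If the per-chunk rate starts high and then settles well below what iperf3 reports for the link, storage is a plausible limit. If it stays comfortably above, the limit is more likely elsewhere, for example in the CPU cost of the transfer protocol.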

-

@erasedhammer said in Iperf testing, same subnet, inconsistent speeds.:


I am at a loss for what the bottleneck is here. I get the same speeds to the Synology onboard flash as to the RAID array, but it's not full gigabit, yet iperf shows the network is not the problem?

It seems if I use any actual application that transfers data (ssh, rsync, smb) then I don't see full gigabit...

Please read my earlier replies. Your issue is the SFP+ transceiver.

-

2 more questions:

- Are copper SFP+ supported now?

- As suggested, there is a chance the SFP+ is at fault. Can you move from IX1 to a switch port for testing? If there is a free port it is not too hard to add/remove VLANs from the ports.

-

@andyrh said in Iperf testing, same subnet, inconsistent speeds.:

Are copper SFP+ supported now?

There's no reason why not in an ixl port, using the x710 expansion card. That's what the diagram shows but the output further back is from ix1 which does not support it.

I believe we have seen one or two modules that worked by chance but I would not expect it to. If that's what you have there I would definitely look at moving to some other connection type.

Steve

-

Is the netgate appliance the one that does not support RJ45 SFP+ modules or the Intel network adapter they used?

I'll admit I have had plenty of troubles with RJ45 SFP+ modules in the past, most of the time running pure fiber then using a proper media converter solved my issues historically. Unfortunately I don't have any spare ports on pfsense right now, so I'll try out a media converter.

-

The on-board SFP+ ports in the 7100 (ix0 and ix1) do not support RJ-45 modules.

https://docs.netgate.com/pfsense/en/latest/solutions/xg-7100-1u/io-ports.html#sfp-ethernet-ports

The SoC cannot read the module data. If it works it's by chance only and should not be relied upon.

Steve

-

Sounds good, I have some more fiber cables on order. I will be switching to SFP+ port -> LC SFP+ module -> OM3 fiber -> media converter -> RJ45. The fiber SFP+ modules I have are actually on the supported list, so it should be a painless switch.

Since I will need the SFP+ port, a media converter sounds like my only option.

Will report back with results in a few days, hopefully with good news.

-

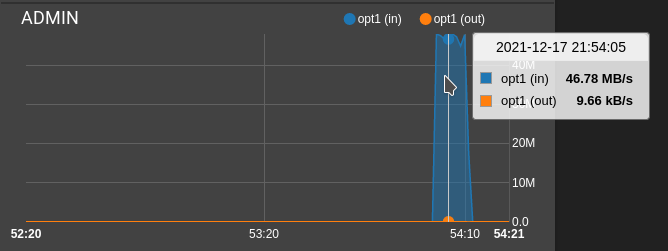

Just got the media converter and new fiber in. No change to transfer speeds. Still sitting right at 50MB/s

    ix1: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
        description: Admin
        options=e138bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,WOL_UCAST,WOL_MCAST,WOL_MAGIC,VLAN_HWFILTER,RXCSUM_IPV6,TXCSUM_IPV6>
        capabilities=f53fbb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_UCAST,WOL_MCAST,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO,NETMAP,RXCSUM_IPV6,TXCSUM_IPV6>
        ether 00:08:a2:0f:13:b1
        inet6 fe80::208:a2ff:fe0f:13b1%ix1 prefixlen 64 scopeid 0x2
        inet 10.10.0.1 netmask 0xfffffff0 broadcast 10.10.0.15
        media: Ethernet autoselect (1000baseSX <full-duplex,rxpause,txpause>)
        status: active
        supported media:
            media autoselect
            media 1000baseSX
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
        plugged: SFP/SFP+/SFP28 1000BASE-SX (LC)
        vendor: INTEL PN: SFP-GE-SX SN: INGE1K70662 DATE: 2020-07-18
        module temperature: 31.35 C Voltage: 3.31 Volts
        RX: 0.40 mW (-3.97 dBm) TX: 0.23 mW (-6.33 dBm)

        SFF8472 DUMP (0xA0 0..127 range):
        03 04 07 00 00 00 01 20 40 0C 00 03 0D 00 00 00
        37 1B 00 00 49 4E 54 45 4C 20 20 20 20 20 20 20
        20 20 20 20 00 00 00 00 53 46 50 2D 47 45 2D 53
        58 20 20 20 20 20 20 20 41 20 20 20 03 52 00 09
        00 1A 14 14 49 4E 47 45 31 4B 37 30 36 36 32 20
        20 20 20 20 32 30 30 37 31 38 20 20 68 B0 01 11
        00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
        00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00

Transfer 1GB file:

What is the throughput of the Marvell switch? If all ports are populated, does it lose throughput?
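As a side check on the addressing, the ifconfig output for ix1 (10.10.0.1, netmask 0xfffffff0) can be compared against the PC and NAS addresses seen in the iperf3 runs. This is a sketch only: it uses the values posted in this thread, and the helper name `network_from_hex_mask` is made up for illustration. It says nothing about what netmask the PC or NAS themselves are configured with.

```python
import ipaddress

# Values copied from the ifconfig and iperf3 output posted in this thread.
IX1_ADDRESS = "10.10.0.1"
IX1_NETMASK_HEX = 0xFFFFFFF0
HOSTS = {"PC": "10.10.0.2", "NAS": "10.10.1.3"}


def network_from_hex_mask(address, mask):
    """Build an IPv4Network from an address and a FreeBSD-style hex netmask."""
    dotted_mask = str(ipaddress.IPv4Address(mask))  # 0xfffffff0 -> 255.255.255.240
    return ipaddress.IPv4Network(f"{address}/{dotted_mask}", strict=False)


ix1_network = network_from_hex_mask(IX1_ADDRESS, IX1_NETMASK_HEX)
print(f"ix1 network: {ix1_network}")  # ix1 network: 10.10.0.0/28

for name, address in HOSTS.items():
    inside = ipaddress.IPv4Address(address) in ix1_network
    print(f"{name} {address} inside ix1 network: {inside}")
```

With these values the PC address falls inside the ix1 network and the NAS address does not, which would be consistent with PC-to-NAS traffic passing through pfSense rather than staying on one layer 2 segment.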

The RJ45 SFP+ transceiver was not the issue.

-

Hmm. Are you still seeing it in one direction only?

And only on the link to ix1?

The Marvell switch should not be any sort of restriction, it can pass the 5Gbps combined internal ports easily.

Can you try reassigning the port to ix0?

Steve

-

I have been doing some SCP testing using a 1GB file to other devices off the Marvell switch, and it appears inconsistent. I get full speed transfers (111MB/s-123MB/s) to two Intel NUCs and a custom ITX build (ports 2, 5, and 8).

Testing scp to my Synology and an x86 SBC (Up board) both result in 20MB/s-50MB/s.

I'll admit the x86 SBC probably isn't the best indicator of file transfer speed (Atom x5-Z8350, 32GB eMMC, Realtek 8111G on a PCIe Gen2 x1 link to the CPU), and the eMMC storage appears to be hitting its write speed limit for sustained transfers (it maintains about 60MB/s for 2 seconds, then drops to 20MB/s).

There is a local network upstream of this pfSense device, connected to the ix0 interface. Testing scp to any of those devices also nets me around 111MB/s.

This leads me to believe there is a problem with the actual ports to both the Synology and my x86 SBC. Or potentially those two devices have something in common at the OS or network adapter level that compromises file transfer speeds, but not iperf testing?

At this point I have to say, ix0/ix1 and their transceivers are not the issue.

Here is some information about the ports on the Marvell switch.

Ports 1, 3, and 4 are the problem. Synology is connected (now in active-passive mode) to ports 3 and 4. The x86 SBC is connected to port 1.

    etherswitch0: VLAN mode: DOT1Q
    port1:
        pvid: 101
        state=8<FORWARDING>
        flags=0<>
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
    port2:
        pvid: 101
        state=8<FORWARDING>
        flags=0<>
        media: Ethernet autoselect (1000baseT <full-duplex,master>)
        status: active
    port3:
        pvid: 101
        state=8<FORWARDING>
        flags=0<>
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
    port4:
        pvid: 101
        state=8<FORWARDING>
        flags=0<>
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
    port5:
        pvid: 1018
        state=8<FORWARDING>
        flags=0<>
        media: Ethernet autoselect (1000baseT <full-duplex,master>)
        status: active
    port6:
        pvid: 900
        state=8<FORWARDING>
        flags=0<>
        media: Ethernet autoselect (none)
        status: no carrier
    port7:
        pvid: 103
        state=8<FORWARDING>
        flags=0<>
        media: Ethernet autoselect (100baseTX <full-duplex>)
        status: active
    port8:
        pvid: 103
        state=8<FORWARDING>
        flags=0<>
        media: Ethernet autoselect (1000baseT <full-duplex,master>)
        status: active
    port9:
        pvid: 1
        state=8<FORWARDING>
        flags=1<CPUPORT>
        media: Ethernet 2500Base-KX <full-duplex>
        status: active
    port10:
        pvid: 1
        state=8<FORWARDING>
        flags=1<CPUPORT>
        media: Ethernet 2500Base-KX <full-duplex>
        status: active

Despite the scp showing low speeds, iperf3 to and from the x86 SBC is practically full speed.

From x86 SBC to PC

    iperf 3.7
    Linux host 5.10.0-9-amd64 #1 SMP Debian 5.10.70-1 (2021-09-30) x86_64
    Control connection MSS 1448
    Time: Sat, 18 Dec 2021 14:38:38 GMT
    Connecting to host 10.10.0.2, port 4444
          Cookie: s55tkrqkae6ayrmxixoyiig3lboy43xsume4
          TCP MSS: 1448 (default)
    [  5] local 10.10.1.4 port 52440 connected to 10.10.0.2 port 4444
    Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
    [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
    [  5]   0.00-1.00   sec  68.8 MBytes   577 Mbits/sec    0    392 KBytes
    [  5]   1.00-2.00   sec  97.1 MBytes   814 Mbits/sec    0    602 KBytes
    [  5]   2.00-3.00   sec   111 MBytes   934 Mbits/sec    0    602 KBytes
    [  5]   3.00-4.00   sec   112 MBytes   942 Mbits/sec    0    602 KBytes
    [  5]   4.00-5.00   sec   111 MBytes   935 Mbits/sec    0    602 KBytes
    [  5]   5.00-6.00   sec   106 MBytes   891 Mbits/sec    0    602 KBytes
    [  5]   6.00-7.00   sec   111 MBytes   933 Mbits/sec    0    602 KBytes
    [  5]   7.00-8.00   sec   112 MBytes   944 Mbits/sec    0    602 KBytes
    [  5]   8.00-9.00   sec   112 MBytes   944 Mbits/sec    0    602 KBytes
    [  5]   9.00-10.00  sec   109 MBytes   911 Mbits/sec    0    636 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    Test Complete. Summary Results:
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.00  sec  1.03 GBytes   882 Mbits/sec    0             sender
    [  5]   0.00-10.01  sec  1.02 GBytes   879 Mbits/sec                  receiver
    CPU Utilization: local/sender 21.8% (0.6%u/21.2%s), remote/receiver 18.3% (2.5%u/15.8%s)
    snd_tcp_congestion cubic
    rcv_tcp_congestion cubic

    iperf Done.

From PC to x86 SBC

    iperf 3.7
    Linux host 5.11.0-43-generic #47~20.04.2-Ubuntu SMP Mon Dec 13 11:06:56 UTC 2021 x86_64
    Control connection MSS 1448
    Time: Sat, 18 Dec 2021 14:39:23 GMT
    Connecting to host 10.10.1.4, port 4444
          Cookie: hky2jxyxjobncjqjsqkkutvqxpadhkkhxm2g
          TCP MSS: 1448 (default)
    [  5] local 10.10.0.2 port 35838 connected to 10.10.1.4 port 4444
    Starting Test: protocol: TCP, 1 streams, 131072 byte blocks, omitting 0 seconds, 10 second test, tos 0
    [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
    [  5]   0.00-1.00   sec   106 MBytes   891 Mbits/sec    0    960 KBytes
    [  5]   1.00-2.00   sec   100 MBytes   839 Mbits/sec    0    960 KBytes
    [  5]   2.00-3.00   sec   101 MBytes   849 Mbits/sec    0    960 KBytes
    [  5]   3.00-4.00   sec   100 MBytes   839 Mbits/sec    0    960 KBytes
    [  5]   4.00-5.00   sec   108 MBytes   902 Mbits/sec    0   1007 KBytes
    [  5]   5.00-6.00   sec   106 MBytes   891 Mbits/sec    0   1.25 MBytes
    [  5]   6.00-7.00   sec   101 MBytes   849 Mbits/sec    0   1.25 MBytes
    [  5]   7.00-8.00   sec   100 MBytes   839 Mbits/sec    0   1.25 MBytes
    [  5]   8.00-9.00   sec   100 MBytes   839 Mbits/sec    0   1.25 MBytes
    [  5]   9.00-10.00  sec   100 MBytes   839 Mbits/sec    0   1.25 MBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    Test Complete. Summary Results:
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.00  sec  1022 MBytes   858 Mbits/sec    0             sender
    [  5]   0.00-10.00  sec  1016 MBytes   852 Mbits/sec                  receiver
    CPU Utilization: local/sender 1.3% (0.0%u/1.3%s), remote/receiver 49.5% (6.7%u/42.8%s)
    snd_tcp_congestion cubic
    rcv_tcp_congestion cubic

    iperf Done.

I think this is a problem with these two devices (Synology and x86 SBC). I am pretty sure the Synology uses a Realtek NIC, maybe that could be the issue?

-

Realtek NIC under Linux is probably fine.

The fact iperf gets full speed and SCP transfers do not implies the limitation is not the network. It's the storage speed or the CPU's ability to sustain the SCP encryption rate.

I'd be very surprised if the switch ports behaved differently, but try swapping them; it should be easy enough.

I do note that ports 2,5 and 8 have some flow control active and the others do not.
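Which ports carry extra media options can be pulled out of the switch output mechanically. The following is a small sketch that parses a trimmed copy of the port listing posted earlier in the thread; the function name `media_options` and the trimmed sample are illustrative only. It reports what the output says (here the extra option is `master`) and does not determine what that option means for throughput.

```python
import re

# Trimmed copy of the etherswitch port listing posted earlier in this thread
# (only the port names and media lines are kept).
SAMPLE = """\
port1:
    media: Ethernet autoselect (1000baseT <full-duplex>)
port2:
    media: Ethernet autoselect (1000baseT <full-duplex,master>)
port3:
    media: Ethernet autoselect (1000baseT <full-duplex>)
port4:
    media: Ethernet autoselect (1000baseT <full-duplex>)
port5:
    media: Ethernet autoselect (1000baseT <full-duplex,master>)
port6:
    media: Ethernet autoselect (none)
port7:
    media: Ethernet autoselect (100baseTX <full-duplex>)
port8:
    media: Ethernet autoselect (1000baseT <full-duplex,master>)
port9:
    media: Ethernet 2500Base-KX <full-duplex>
"""

PORT_RE = re.compile(r"^(port\d+):")
OPTIONS_RE = re.compile(r"<([^>]*)>")


def media_options(text):
    """Map each port name to the list of media options shown in <...>."""
    result = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        port_match = PORT_RE.match(line)
        if port_match:
            current = port_match.group(1)
            result[current] = []
        elif current and line.startswith("media:"):
            options = OPTIONS_RE.search(line)
            result[current] = options.group(1).split(",") if options else []
    return result


options_by_port = media_options(SAMPLE)
with_master = [port for port, opts in options_by_port.items() if "master" in opts]
print(with_master)  # ['port2', 'port5', 'port8']
```

In the posted output, the ports listed with the extra option are the same three ports that showed full-speed scp transfers, which makes swapping ports a cheap test.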

Steve

-

The speed limitation also applies to native rsync and SMB3.

Is there a more verbose switch command for Marvell that I can run?

I have not personally configured any flow control.

-

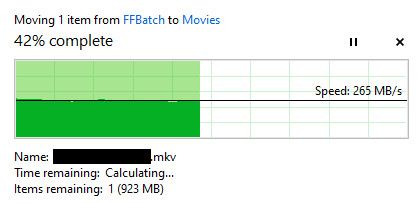

@erasedhammer Why don't you just take pfSense out of the equation if you suspect it is causing your 50MBps limit in file transfers?

I don't see how that would be the case when you're showing network speeds pretty close to wire, and for sure higher than 50MBps.

Connect your PC and NAS to the dumb switch - do you see full speed file transfers then?

edit: I am in the middle of moving some files around from my PC to NAS, and while I do not have your specific NAS (I have a Synology DS918+), I do not have any issues with disks or CPU causing slowdowns. I far exceed 50MBps, even while streaming movies off the NAS to currently 4 different viewers.

This was like a 1.8GB file, via a 2.5GbE connection.

-

This equipment is separated by two floors and some of these devices are essential to the network. Taking things out of service for more than a couple minutes is not possible right now.

I'm just trying to troubleshoot via the least invasive way. Next month I am doing a major migration and will have the required downtime to properly test this.

-

@erasedhammer said in Iperf testing, same subnet, inconsistent speeds.:

Is there a more verbose switch command for marvel that I can run?

You can run:

    etherswitchcfg -v

but that's the same info the GUI displays.

I would be very surprised if this was an issue with the switch. There's always a first time, but as far as I know we have never seen an issue like that.

The flow control is negotiated when the link is established, so some of those devices are capable of or configured to use it.

Steve