Netgate 6100 Max ZFS Install Location

-

On that system you want to use nvd0, as that is the add-on SSD. The eMMC drive is the smaller built-in SSD.

-

@jimp Great, thanks for the help!

-

@jimp Confirmed, that worked!

-

@jimp said in Netgate 6100 Max ZFS Install Location:

On that system you want to use nvd0, as that is the add-on SSD. The eMMC drive is the smaller built-in SSD.

Hm, I think I messed up with my MAX and reinstalled on the smaller SSD.

What would be the best approach for me now? It seems like it is still UFS instead of ZFS despite following the steps mentioned in https://forum.netgate.com/topic/168038/proper-steps-for-zfs-pave-re-install-on-6100

-

@eirikrcoquere You might want to wait for an official answer, but I imagine you'll have to reformat the smaller drive and then reinstall to nvd0.

-

@leeroy said in Netgate 6100 Max ZFS Install Location:

@eirikrcoquere You might want to wait for an official answer, but I imagine you'll have to reformat the smaller drive and then reinstall to nvd0.

I went ahead and installed on nvd0. Now it boots to the installation on that drive and it is ZFS.

So far so good.

Now, what should I do with the smaller internal drive?

-

Just ignore it is the easiest thing to do.

You can boot back to it if you need to from the boot menu.

pfSense does not directly support multiple drives so you can't officially use it for anything.

Steve

-

@stephenw10 said in Netgate 6100 Max ZFS Install Location:

Just ignore it is the easiest thing to do.

You can boot back to it if you need to from the boot menu.

pfSense does not directly support multiple drives so you can't officially use it for anything.

Steve

Superb! Thanks! Oh and by the way, this thing is a BEAST. Just, wow!

-

@stephenw10 said in Netgate 6100 Max ZFS Install Location:

Just ignore it is the easiest thing to do.

You can boot back to it if you need to from the boot menu.

pfSense does not directly support multiple drives so you can't officially use it for anything.

Steve

Revisiting this.

I've been playing around (a lot) and decided to reinstall with 22.01. No backup. For some reason I now have 22.01 installed on both the small and the large drive. It boots to the small drive by default and I can't get it to boot from the large drive instead. I tried resetting the boot order and changing the boot order. I messed up.

What would be the best approach? It might be a good idea to completely wipe everything and install on the large drive?

Would it be best to pick a manual installation, delete all partitions, create partitions (which?), install?

-

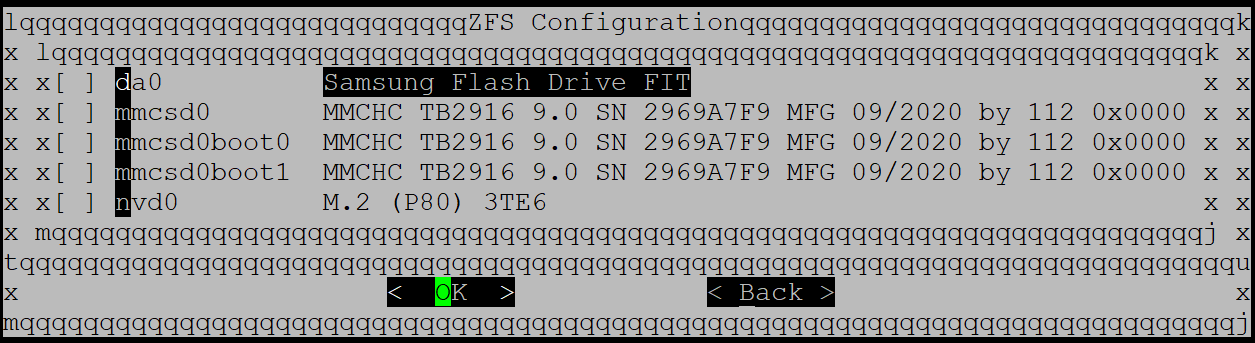

This is what I have now.

I am able to reinstall on nvd0 but it boots to mmcsd0.

The boot order menu gives me mmcsd0boot0 and mmcsd0boot1.

    Geom name: nvd0
    Providers:
    1. Name: nvd0
       Mediasize: 120034123776 (112G)
       Sectorsize: 512
       Mode: r0w0e0
       descr: M.2 (P80) 3TE6
       lunid: 0100000000000000000000a84904e20a
       ident: YCA12110210320010
       rotationrate: 0
       fwsectors: 0
       fwheads: 0

    Geom name: mmcsd0
    Providers:
    1. Name: mmcsd0
       Mediasize: 15678308352 (15G)
       Sectorsize: 512
       Stripesize: 512
       Stripeoffset: 0
       Mode: r2w2e3
       descr: MMCHC TB2916 9.0 SN 5A9A3ADD MFG 08/2021 by 112 0x0000
       ident: 5A9A3ADD
       rotationrate: 0
       fwsectors: 0
       fwheads: 0

    Geom name: mmcsd0boot0
    Providers:
    1. Name: mmcsd0boot0
       Mediasize: 4194304 (4.0M)
       Sectorsize: 512
       Stripesize: 512
       Stripeoffset: 0
       Mode: r0w0e0
       descr: MMCHC TB2916 9.0 SN 5A9A3ADD MFG 08/2021 by 112 0x0000
       ident: 5A9A3ADD
       rotationrate: 0
       fwsectors: 0
       fwheads: 0

    Geom name: mmcsd0boot1
    Providers:
    1. Name: mmcsd0boot1
       Mediasize: 4194304 (4.0M)
       Sectorsize: 512
       Stripesize: 512
       Stripeoffset: 0
       Mode: r0w0e0
       descr: MMCHC TB2916 9.0 SN 5A9A3ADD MFG 08/2021 by 112 0x0000
       ident: 5A9A3ADD
       rotationrate: 0
       fwsectors: 0
       fwheads: 0

geom label status tells me this:

    Name            Status  Components
    gpt/efiboot0    N/A     nvd0p1
    msdosfs/EFISYS  N/A     nvd0p1
    gpt/gptboot0    N/A     nvd0p2
    gpt/swap0       N/A     nvd0p3
    gpt/zfs0        N/A     nvd0p4

Which is weird, because pfSense boots to an old config which I believe is on mmcsd0, and keeps on doing so whenever I reinstall on nvd0. Also, the dashboard of pfSense shows the smaller size of the mmcsd0.

df -h

    Filesystem            Size    Used   Avail Capacity  Mounted on
    pfSense/ROOT/default   10G    899M    9.4G     9%    /
    devfs                 1.0K    1.0K      0B   100%    /dev
    pfSense/var           9.4G    3.2M    9.4G     0%    /var
    pfSense/tmp           9.4G    196K    9.4G     0%    /tmp
    pfSense               9.4G     96K    9.4G     0%    /pfSense
    pfSense/cf            9.4G     96K    9.4G     0%    /cf
    pfSense/home          9.4G     96K    9.4G     0%    /home
    pfSense/var/db        9.4G    4.6M    9.4G     0%    /var/db
    pfSense/var/log       9.4G    1.0M    9.4G     0%    /var/log
    pfSense/var/empty     9.4G     96K    9.4G     0%    /var/empty
    pfSense/var/cache     9.6G    217M    9.4G     2%    /var/cache
    pfSense/reservation    11G     96K     11G     0%    /pfSense/reservation
    pfSense/var/tmp       9.4G    104K    9.4G     0%    /var/tmp
    pfSense/cf/conf       9.4G    508K    9.4G     0%    /cf/conf
    tmpfs                 4.0M    124K    3.9M     3%    /var/run
    devfs                 1.0K    1.0K      0B   100%    /var/dhcpd/dev

/etc/fstab

    # Device        Mountpoint  FStype  Options  Dump  Pass#
    /dev/mmcsd0p3   none        swap    sw       0     0

-

If you installed to both as ZFS and didn't change the default zpool name, you can end up with conflicting ZFS pools. In that situation it mounts the eMMC pool, resulting in the confusing behaviour you see.
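One way to confirm which disk the mounted pool really lives on is to look at the device rows in `zpool status` on the running system. The sketch below is only an illustration: `pool_devices` and `sample_status` are made-up names, the sample text is hand-written rather than captured from a 6100, and the pool name `pfSense` is taken from the df output earlier in the thread.

```shell
#!/bin/sh
# Hypothetical helper: print the device rows of one pool's config table.
# Reads `zpool status` text on stdin; $1 is the pool name.
pool_devices() {
    awk -v pool="$1" '
        $1 == "NAME" && $2 == "STATE" { in_cfg = 1; next }  # header row starts the table
        in_cfg && NF == 0 { in_cfg = 0 }                     # blank line ends the table
        in_cfg && $1 != pool { print $1 }                    # skip the row for the pool itself
    '
}

# Illustrative sample only (assumed layout of a single-disk pool on the eMMC).
sample_status='  pool: pfSense
 state: ONLINE
config:

        NAME        STATE     READ WRITE CKSUM
        pfSense     ONLINE       0     0     0
          mmcsd0p4  ONLINE       0     0     0

errors: No known data errors'

printf '%s\n' "$sample_status" | pool_devices pfSense
```

On the firewall itself the input would come from `zpool status pfSense | pool_devices pfSense`. A device name starting with mmcsd0 would mean the eMMC pool won, even though the installer wrote to nvd0. For mirrored pools the helper would also print the vdev rows (for example mirror-0), so treat it as a quick check rather than a full parser.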

The easiest thing to do if that happens is to reinstall to the eMMC as UFS then make sure the NVMe drive is above it in the boot order.

You can remove the zpool from the eMMC when installing to NVMe by dropping to the shell in the installer and running:

    zpool labelclear -f /dev/mmcsd0p3

Then type exit to continue/complete the install.

Steve

-

@stephenw10 said in Netgate 6100 Max ZFS Install Location:

The easiest thing to do if that happens is to reinstall to the eMMC as UFS then make sure the NVMe drive is above it in the boot order.

This was the solution. Thank you!

-

@stephenw10 New user here, 6100 non-Max with custom SSD upgrade. I ran into this issue because the 6100 now comes with ZFS preinstalled and I did another ZFS installation on the SSD, but it is booting from the eMMC and mounting it. This makes it impossible for the "zpool labelclear" command to succeed. I booted up with the installer, went to the shell and tried the command, but I got an error. Any ideas?

    # zpool labelclear -f /dev/mmcsd0p3
    failed to read label from /dev/mmcsd0p3
    # zpool list
    no pools available
    # ls -al /dev/mmcsd0p3
    crw-r----- 1 root operator 0x5f Aug 31 13:52 /dev/mmcsd0p3
    #

-

-

@jimp Thanks. I did try a few things there but none helped. In the end I followed the other suggestion from @stephenw10, which was to reinstall to the eMMC as UFS and then make sure the NVMe drive is above it in the boot order. I'd rather have a functional installation on the eMMC that I can always use in case of SSD failure (provided I have a good config backup, of course). But it would be better if the installer could detect and deal with this issue, as it is likely to happen more often now that pfSense is installed on ZFS by default. I wasn't sure if I should rename the default ZFS pool; this sort of change can sometimes lead to upgrade issues. Is it safe to name it whatever the installer wants?

-

@cdturri said in Netgate 6100 Max ZFS Install Location:

@jimp Thanks. I did try a few things there but none helped. In the end I followed the other suggestion from @stephenw10, which was to reinstall to the eMMC as UFS and then make sure the NVMe drive is above it in the boot order. I'd rather have a functional installation on the eMMC that I can always use in case of SSD failure (provided I have a good config backup, of course). But it would be better if the installer could detect and deal with this issue, as it is likely to happen more often now that pfSense is installed on ZFS by default. I wasn't sure if I should rename the default ZFS pool; this sort of change can sometimes lead to upgrade issues. Is it safe to name it whatever the installer wants?

Having the second install is more likely to be a source of problems than a helpful backup. Read the section of the doc I posted a bit deeper; it explains why. Also, you're passing the wrong parameter to zpool labelclear, and the example in the doc has the correct syntax.

-

Hmm, interesting I'm sure I tested that at the time...

I've never seen a problem using UFS and ZFS installs together, though it would obviously be possible to boot the alternative install, perhaps without intending to.

Steve

-

@jimp @stephenw10 In this case it was two ZFS installs, one on the eMMC and the other on the SSD. If having two installs is bad practice, then why not have the installer prevent this by wiping the other install at the same time? Anyway, I am sorted now: new ZFS install on the SSD, and the Netgate boots to the correct drive.

-

Just for reference the actual required command there for a default ZFS install to eMMC on a 6100 is:

    zpool labelclear -f /dev/mmcsd0p4

Neither p3 nor the base device works.

    # zpool labelclear -f /dev/mmcsd0p3
    failed to clear label for /dev/mmcsd0p3
    # zpool labelclear -f /dev/mmcsd0
    failed to clear label for /dev/mmcsd0
    # zpool labelclear -f /dev/mmcsd0p4
    #

Steve
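The /etc/fstab posted earlier lists /dev/mmcsd0p3 as swap, which fits with p3 failing and p4 holding the pool. Rather than guessing the partition number, the partition typed freebsd-zfs can be picked out of `gpart show -p` output. This is a rough sketch under assumptions: `find_zfs_part` and `sample_gpart` are made-up names, and the partition table below is an illustrative layout, not one captured from a real 6100.

```shell
#!/bin/sh
# Hypothetical helper: print the provider name of every freebsd-zfs partition.
# Reads `gpart show -p` text on stdin (columns: start, size, provider, type, human size).
find_zfs_part() {
    awk '$4 == "freebsd-zfs" { print $3 }'
}

# Illustrative sample only (assumed default ZFS layout on the eMMC).
sample_gpart='=>      40  30621616    mmcsd0  GPT  (15G)
        40    409600  mmcsd0p1  efi  (200M)
    409640      1024  mmcsd0p2  freebsd-boot  (512K)
    410664       984            - free -  (492K)
    411648   2097152  mmcsd0p3  freebsd-swap  (1.0G)
   2508800  28112856  mmcsd0p4  freebsd-zfs  (13G)'

printf '%s\n' "$sample_gpart" | find_zfs_part
```

In the installer shell the input would be `gpart show -p mmcsd0 | find_zfs_part`, and the name it prints is the one to pass to `zpool labelclear -f /dev/...`. Check the result by eye before clearing anything, since labelclear on the wrong partition would wipe the label of a pool you wanted to keep.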