[solved] pfSense (2.6.0 & 22.01) is very slow on Hyper-V

-

@m0nji I tried this with 2.5.2. I don't see anything regarding rsc in the logs or OS Boot log.

Also, where do I put those commands? If I try with PuTTY, it gives me permission denied. In the WebUI there is no output either. So I guess it's not noob-friendly enough.

    Enter an option: 8

    [2.5.2-RELEASE][admin@pfSense.home.arpa]/root: # grep -i "rsc" /var/log/messages
    #: Command not found.
    [2.5.2-RELEASE][admin@pfSense.home.arpa]/root: grep -i "rsc" /var/log/system.log
    [2.5.2-RELEASE][admin@pfSense.home.arpa]/root: ~ # grep -i "rsc" /var/log/messages
    /root: Permission denied.
    [2.5.2-RELEASE][admin@pfSense.home.arpa]/root:

-

@bob-dig I think your output is correct. You should not see anything RSC-related on pfSense 2.5.x.

This is what @stephenw10 said:

"RSC support wasn't added to hn(4) until 12.3 so I would expect to see no sysctls there."

-

This post is deleted!

-

@bob-dig you missed a "."

hn.0 is probably the WAN interface. You should also try hn.1, hn.2 and hn.3.

EDIT: You already fixed your post ;) Try it also for hn1, hn2 and hn3. It depends on which hn adapter you use.

EDIT2: If I understood it correctly, no hn adapter should give you a value >0 if RSC is disabled. If it reports a value >0, then the "RSC disabled" setting does not really work.
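One way to run that check across every hn adapter and queue at once, as a rough sketch: filter the `sysctl dev.hn` output for `rsc_pkts` counters above zero. It assumes the counter names look like `dev.hn.N.rx.M.rsc_pkts`, as they do in the 2.6.0 output posted in this thread. `rsc_active` is a made-up helper name, and it is written for /bin/sh, not the default tcsh prompt.

```shell
#!/bin/sh
# rsc_active: read "name: value" sysctl lines on stdin and print only the
# rsc_pkts counters whose value is greater than zero.
# Hypothetical helper; the counter naming is assumed from the 2.6.0 output
# posted in this thread.
rsc_active() {
    awk -F': ' '$1 ~ /\.rsc_pkts$/ && $2 + 0 > 0 { print $1 " = " $2 }'
}

# On the firewall itself (as root), feed it the live counters:
#   sysctl dev.hn | rsc_active
```

If this prints nothing while traffic is flowing, no hn queue has counted RSC packets. Any line it prints is a queue that is still receiving them.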

-

2.6:

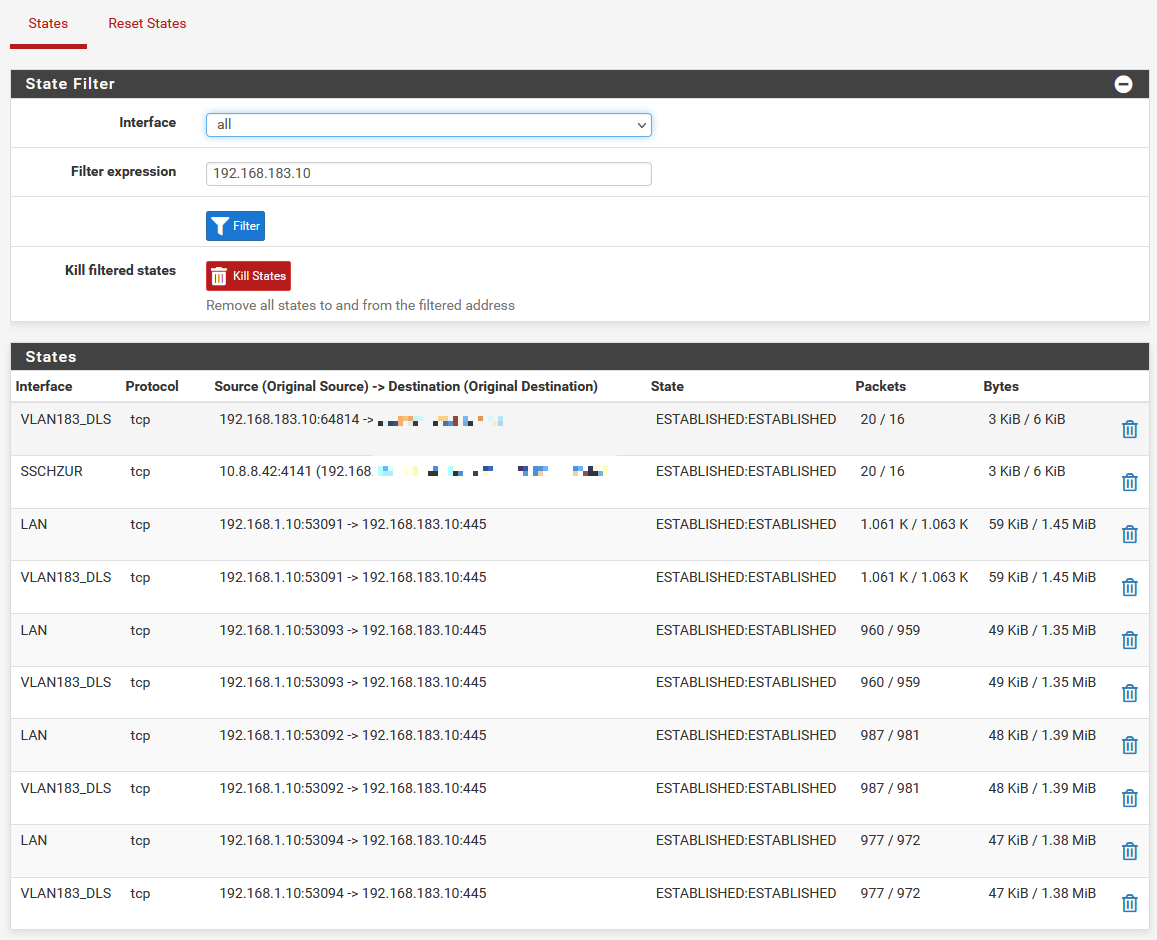

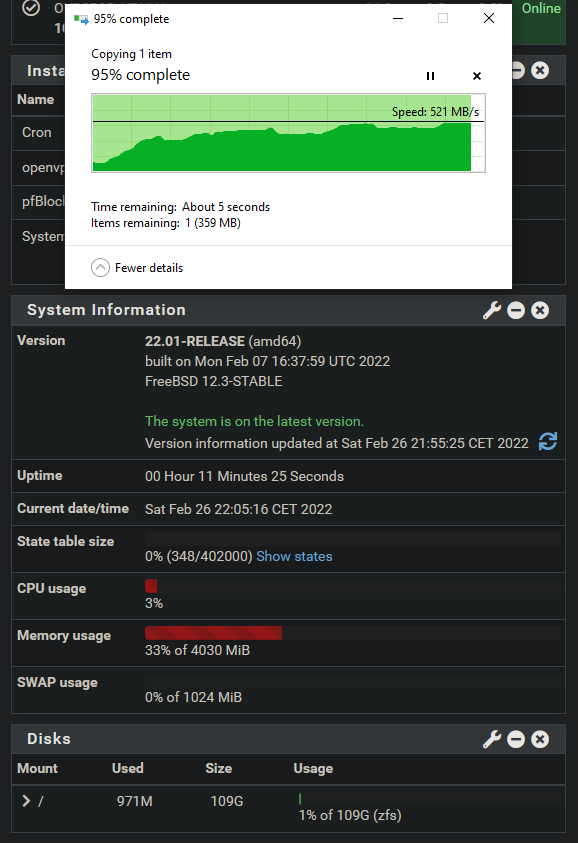

    SoftwareRscEnabled RscOffloadEnabled
    ------------------ -----------------
    False              False
    False              False
    False              False

    grep -i "rsc" /var/log/system.log
    Feb 25 13:18:24 pfSense kernel: hn0: hwcaps rsc: ip4 1 ip6 1
    Feb 25 13:18:24 pfSense kernel: hn0: offload rsc: ip4 2, ip6 2
    Feb 25 13:18:24 pfSense kernel: hn1: hwcaps rsc: ip4 1 ip6 1
    Feb 25 13:18:24 pfSense kernel: hn1: offload rsc: ip4 2, ip6 2
    Feb 25 13:18:24 pfSense kernel: hn2: hwcaps rsc: ip4 1 ip6 1
    Feb 25 13:18:24 pfSense kernel: hn2: offload rsc: ip4 2, ip6 2

While "copying":

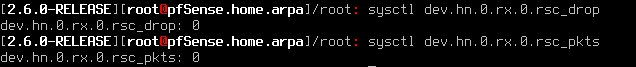

    sysctl dev.hn.1.rx.0.rsc_drop
    sysctl dev.hn.1.rx.0.rsc_pkts
    sysctl dev.hn.2.rx.0.rsc_drop
    sysctl dev.hn.2.rx.0.rsc_pkts

    [2.6.0-RELEASE][root@pfSense.home.arpa]/root: sysctl dev.hn.1.rx.0.rsc_drop
    dev.hn.1.rx.0.rsc_drop: 0
    [2.6.0-RELEASE][root@pfSense.home.arpa]/root: sysctl dev.hn.1.rx.0.rsc_pkts
    dev.hn.1.rx.0.rsc_pkts: 0
    [2.6.0-RELEASE][root@pfSense.home.arpa]/root: sysctl dev.hn.2.rx.0.rsc_drop
    dev.hn.2.rx.0.rsc_drop: 0
    [2.6.0-RELEASE][root@pfSense.home.arpa]/root: sysctl dev.hn.2.rx.0.rsc_pkts
    dev.hn.2.rx.0.rsc_pkts: 107

The last number constantly rises.

Everything stays the same for me if I re-enable rsc in Windows, no difference at all.

    SoftwareRscEnabled RscOffloadEnabled
    ------------------ -----------------
    True               False
    True               False
    True               False

-

Hmm, so just to be clear you are seeing the RSC packets counter increment whether or not you have disabled RSC on the vswitch that interface is connected to?

That seems like a different result to those for whom disabling RSC solved the issue. And seems to support my conjecture...

Unclear what we can do about it though if that is the case. Yet.

Steve

-

@stephenw10 said in After Upgrade inter (V)LAN communication is very slow (on Hyper-V):

"Hmm, so just to be clear you are seeing the RSC packets counter increment whether or not you have disabled RSC on the vswitch that interface is connected to?"

True.

"Unclear what we can do about it though if that is the case. Yet."

I hope you guys figure it out.

-

Ok, so we need more data points here to be sure it's actually what's happening.

But assuming that's true it appears:

- There's an issue with the RSC code added in FreeBSD.
- In some situations the vswitches in Hyper-V do not respect the disable RSC setting.

Steve

-

Ok, so it looks like our European friends in fact already hit this because they are actually building on 13-stable and came to the same conclusions. I have opened a bug report: https://redmine.pfsense.org/issues/12873

Steve

-

What do you guys see for these sysctls?

    dev.hn.0.hwassist: 607<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP6_UDP,CSUM_IP6_TCP>
    dev.hn.0.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.0.ndis_version: 6.30
    dev.hn.0.nvs_version: 393217

Please report whether or not you're hitting the issue with the values shown.

-

@stephenw10 Hittin it hard

    sysctl dev.hn.0.hwassist
    sysctl dev.hn.0.caps
    sysctl dev.hn.0.ndis_version
    sysctl dev.hn.0.nvs_version

2.5.2

    dev.hn.0.hwassist: 1617<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP_TSO,CSUM_IP6_UDP,CSUM_IP6_TCP,CSUM_IP6_TSO>
    dev.hn.0.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.0.ndis_version: 6.30
    dev.hn.0.nvs_version: 327680

2.6.0

    dev.hn.0.hwassist: 607<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP6_UDP,CSUM_IP6_TCP>
    dev.hn.0.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.0.ndis_version: 6.30
    dev.hn.0.nvs_version: 393217

Although this time I haven't deactivated RSC in Windows Host.
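The hwassist values are hexadecimal bit masks, so the difference between the two releases can be read off with an XOR. A small sketch for /bin/sh (`mask_diff` is a hypothetical helper, not something that ships with pfSense):

```shell
#!/bin/sh
# mask_diff: print, in hex, the bits that differ between two hex masks.
# Arguments are given without the 0x prefix, the way sysctl prints them.
mask_diff() {
    printf '%x\n' "$(( 0x$1 ^ 0x$2 ))"
}

# Example with the hwassist values from this post (2.5.2 vs 2.6.0):
#   mask_diff 1617 607
```

With the values from this post, `mask_diff 1617 607` gives `1010`. That is two bits, which lines up with the two flags that appear only in the 2.5.2 list (CSUM_IP_TSO and CSUM_IP6_TSO).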

-

RSC disabled on Hyper-V Host

    PS C:\Users\m0nji\Downloads\iperf-3.1.3-win64\iperf-3.1.3-win64> .\iperf3.exe -c 192.168.187.11 -R
    Connecting to host 192.168.187.11, port 5201
    Reverse mode, remote host 192.168.187.11 is sending
    [  4] local 192.168.189.10 port 49995 connected to 192.168.187.11 port 5201
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-1.00   sec  55.6 KBytes   454 Kbits/sec
    [  4]   1.00-2.01   sec  21.4 KBytes   174 Kbits/sec
    [  4]   2.01-3.00   sec  21.4 KBytes   176 Kbits/sec
    [  4]   3.00-4.00   sec  21.4 KBytes   175 Kbits/sec
    [  4]   4.00-5.00   sec  17.1 KBytes   140 Kbits/sec
    [  4]   5.00-6.00   sec  21.4 KBytes   175 Kbits/sec
    [  4]   6.00-7.00   sec  21.4 KBytes   175 Kbits/sec
    [  4]   7.00-8.01   sec  15.7 KBytes   128 Kbits/sec
    [  4]   8.01-9.00   sec  20.0 KBytes   165 Kbits/sec
    [  4]   9.00-10.00  sec  21.4 KBytes   175 Kbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  4]   0.00-10.00  sec   384 KBytes   314 Kbits/sec   sender
    [  4]   0.00-10.00  sec   237 KBytes   194 Kbits/sec   receiver

    iperf Done.

pfSense 2.6.0 (hitting the issue)

    dev.hn.0.hwassist: 607<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP6_UDP,CSUM_IP6_TCP>
    dev.hn.0.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.0.ndis_version: 6.30
    dev.hn.0.nvs_version: 393217
    dev.hn.1.hwassist: 607<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP6_UDP,CSUM_IP6_TCP>
    dev.hn.1.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.1.ndis_version: 6.30
    dev.hn.1.nvs_version: 393217
    dev.hn.2.hwassist: 607<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP6_UDP,CSUM_IP6_TCP>
    dev.hn.2.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.2.ndis_version: 6.30
    dev.hn.2.nvs_version: 393217

For comparison, FreeBSD 12.3 (hitting the issue)

    dev.hn.0.hwassist: 17<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP_TSO>
    dev.hn.0.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.0.ndis_version: 6.30
    dev.hn.0.nvs_version: 393217
    dev.hn.1.hwassist: 17<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP_TSO>
    dev.hn.1.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.1.ndis_version: 6.30
    dev.hn.1.nvs_version: 393217
    dev.hn.2.hwassist: 17<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP_TSO>
    dev.hn.2.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.2.ndis_version: 6.30
    dev.hn.2.nvs_version: 393217

FreeBSD 13.0 (not hitting the issue but obviously not STABLE version)

    dev.hn.0.hwassist: 17<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP_TSO>
    dev.hn.0.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.0.ndis_version: 6.30
    dev.hn.0.nvs_version: 327680
    dev.hn.1.hwassist: 17<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP_TSO>
    dev.hn.1.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.1.ndis_version: 6.30
    dev.hn.1.nvs_version: 327680
    dev.hn.2.hwassist: 17<CSUM_IP,CSUM_IP_UDP,CSUM_IP_TCP,CSUM_IP_TSO>
    dev.hn.2.caps: 7ff<VLAN,MTU,IPCS,TCP4CS,TCP6CS,UDP4CS,UDP6CS,TSO4,TSO6,HASHVAL,UDPHASH>
    dev.hn.2.ndis_version: 6.30
    dev.hn.2.nvs_version: 327680

-

I figured out an interim solution for me. I created two external Switches, one for pfSense and one for all the other VMs. With that it does work, no slow speed anymore. Drawback is, it is using one more port and everything goes through a physical Switch.

-

So that's no routing between VMs in the same host? That seems like it's what should trigger this.

-

@stephenw10 I think the key point is that pfSense is not using the same vSwitch as the others. This is an atypical setup and no one in their right mind would do it like this, but I did. And it does work here. I think I simulated "having two (VM) hosts", where it is natural that the VMs can't use the same vSwitch.

-

It feels like coming home, finally.

I still hope there will be a real fix in the future for those who are affected.

-

    Set-VMSwitch -Name "*" -EnableSoftwareRsc $false
    Get-VMNetworkAdapter -VMName "vmname" | Where-Object {$_.MacAddress -eq "yourmacaddress"} | Set-VMNetworkAdapter -RscEnabled $false

Seems to have worked for restoring both my wan upload and inter vlan throughput. Is there any official guidance on this?

-

@dd Did you downgrade without a reinstall? The one time I forgot to take a snapshot and now I'm left with dialup speeds on my 10G server.

-

@i386dx said in After Upgrade inter (V)LAN communication is very slow (on Hyper-V):

Damn, this did the trick right now:

    Get-VMNetworkAdapter -VMName "vmname" | Where-Object {$_.MacAddress -eq "yourmacaddress"} | Set-VMNetworkAdapter -RscEnabled $false

We were just concentrating on disabling RSC on the vSwitch, but there is also a setting for the VMs.

So the workaround should be:

- disable RSC support on the vSwitch

      Set-VMSwitch -Name "vSwitchName" -EnableSoftwareRsc $false

- disable RSC support on the VM

      Get-VMNetworkAdapter -VMName "VMname" | Set-VMNetworkAdapter -RscEnabled $false

One important side note: right now, you have to enable and then disable RSC on the VM after every VM reboot, even when the value is still $false!

At least on my host...

-

@m0nji said in After Upgrade inter (V)LAN communication is very slow (on Hyper-V):

"One important side note: right now, you have to enable & disable RSC on the VM after every VM reboot even when the value is still $false! At least on my host..."

Yes, same behaviour on my host too (Windows Server 2022, gen2 VM, I350-T2 adapter, SR-IOV unavailable, VMQ disabled).

In my case I could even leave SoftwareRscEnabled set to True on the vswitches and just flip -RscEnabled on and off on the VM network adapter(s) to restore normal bandwidth.

Unfortunately I'm not aware of any way to enforce NVS version 5 (327680) on the hn driver.
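For reference, the nvs_version numbers in this thread read more easily in hex: 327680 is 0x50000 and 393217 is 0x60001. A minimal sketch that splits the value into its upper and lower 16 bits, assuming the usual major/minor packing (that interpretation is an assumption here, and `nvs_decode` is a made-up helper name for /bin/sh):

```shell
#!/bin/sh
# nvs_decode: split a decimal nvs_version value into its upper and lower
# 16 bits and print them as "major.minor".
# Assumption: major version in the high 16 bits, minor in the low 16 bits.
nvs_decode() {
    printf '%d.%d\n' "$(( $1 >> 16 ))" "$(( $1 & 0xffff ))"
}

# On the firewall:
#   nvs_decode "$(sysctl -n dev.hn.0.nvs_version)"
```

`nvs_decode 327680` prints `5.0` and `nvs_decode 393217` prints `6.1`. That fits the pattern in the posted outputs: the installs hitting the issue report 393217, and the ones that are fine report 327680.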