QoS / Traffic Shaping / Limiters / FQ_CODEL on 22.05

-

@thenarc Well, I ran some tests with an old Trendnet router I had around and the results were the same. It has some rudimentary bandwidth limiting too, and I'm finding that whether I use it or pfSense, I can limit my 400Mbps downstream all the way to 50Mbps or less and still get catastrophic latency spikes (up to 1s or more) if I run a multi-stream download test (again, I'm just using the fast.com test with the parallel connections maxed out at 30). I'm not really certain how to diagnose this further. Could it be my cable modem, for example (it's an SB6180, which is not a Puma6 modem), or just a crappy ISP configuration? In either case, I'm satisfied based on this testing that it's not pfSense, but I still have no solution :)

-

@emikaadeo Thanks for posting this. I don't know what's wrong, but I've been at this all day today and gotten nowhere.

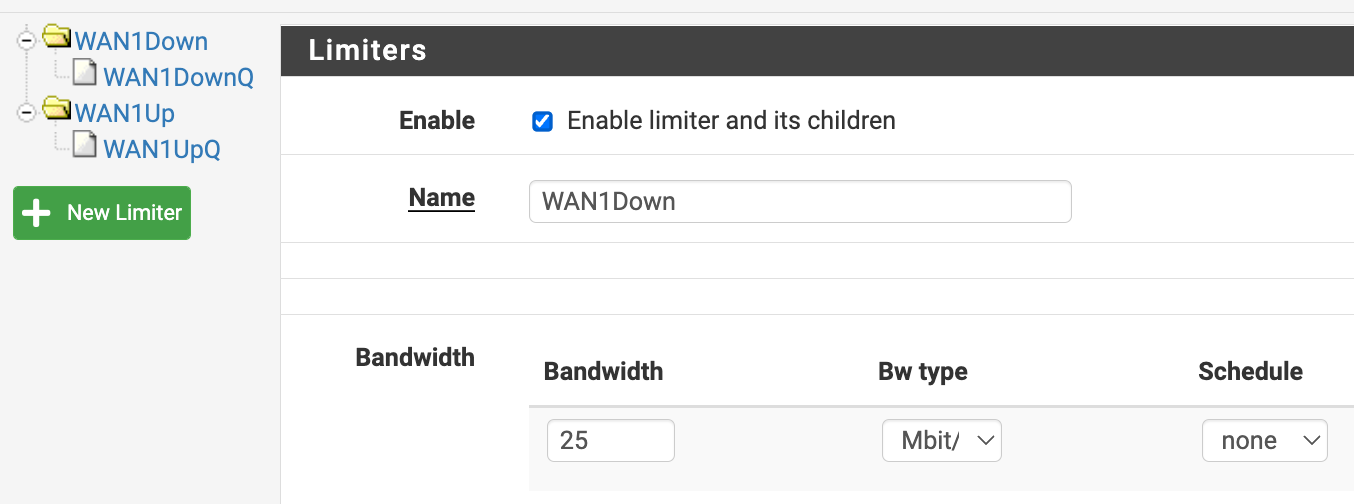

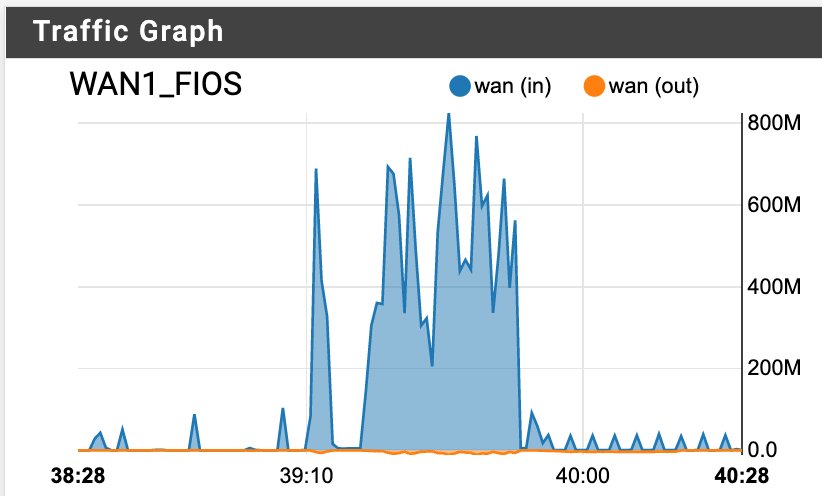

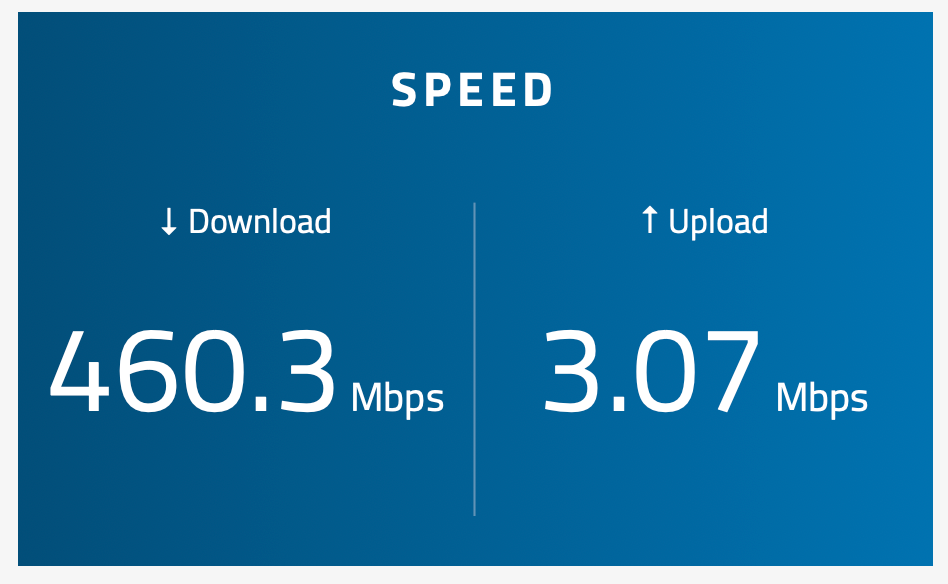

Can someone explain how the below is even possible?

As a test I set my bandwidth to 25Mbit/s to see if the limiter was even working at all...

```
# cat /tmp/rules.limiter
pipe 1 config bw 25Mb droptail
sched 1 config pipe 1 type fq_codel target 5ms interval 100ms quantum 300 limit 10240 flows 1024 ecn
queue 1 config pipe 1 droptail
pipe 2 config bw 25Mb droptail
sched 2 config pipe 2 type fq_codel target 5ms interval 100ms quantum 300 limit 10240 flows 1024 ecn
queue 2 config pipe 2 droptail
```

And yet...

-

Just read through about 9 other threads reporting various breakage with ipfw limiters on 2.6 / 22.0x

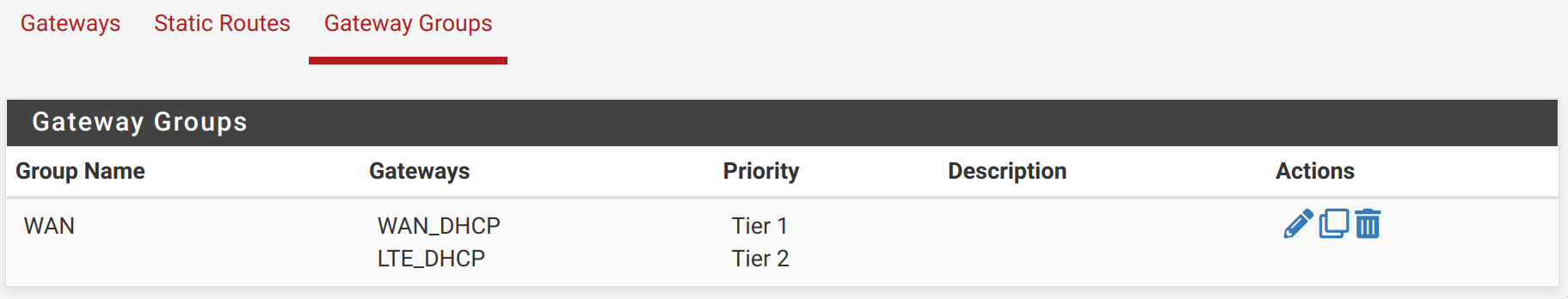

Before I lose another day, @jimp or @stephenw10 is it the case that limiters are bugged on the latest builds of pfSense? Specifically for multi-wan setups with gateway groups? It would be nice to know, otherwise if the answer is "no, everything works fine" then I will keep trying or maybe even buy TAC to figure this out because it is driving me nuts.

-

@luckman212 Make sure you have IPv6 disabled on your machine, otherwise the test will not work correctly.

-

@luckman212 said in QoS / Traffic Shaping / Limiters / FQ_CODEL on 22.0x:

is it the case that limiters are bugged on the latest builds of pfSense? Specifically for multi-wan setups with gateway groups?

They seem to work fine on my multi-wan setup with gateway groups.

Before I lose another day

Maybe throw in a quick downgrade to 2.4.5-p1 just to be sure?

-

@bob-dig said:

@luckman212 Make sure you have IPv6 disabled on your machine, otherwise the test will not work correctly.

Yes, at this time I don't have IPv6 enabled at all.

@thiasaef said:

Maybe throw in a quick downgrade to 2.4.5-p1 just to be sure?

I can try that but it's a fair bit of work since my config has changed a lot since 2.5.x/22.x was released, and the configs are not backwards-compatible. So before doing that I'd like to know if I'm barking up the wrong tree here. Since you say it works for you, would you mind sharing how you've got it configured?

-

I'm not sure how helpful this will be, but I've got two separate locations, both on 1Gbit/s FiOS circuits, running pfSense 22.01 with limiters + FQ-Codel configured. No issues at either site. The instructions I followed for the limiter setup are the ones originally posted in the large FQ-Codel thread:

https://forum.netgate.com/topic/112527/playing-with-fq_codel-in-2-4/814

The main difference I suppose is that I've only got the one FiOS connection at either location (i.e. no multi-wan or gateway groups configured).

Hope this helps.

-

@luckman212 said in QoS / Traffic Shaping / Limiters / FQ_CODEL on 22.0x:

would you mind sharing how you've got it configured?

Settings:

- Firewall > Traffic Shaper > Limiters:
  - WAN1Down/WAN1DownQ
    - bandwidth: 265Mbps
    - Queue mgmt algo: Tail Drop
    - Scheduler: FQ_CODEL (5/100/1514/10240/8192)
    - Queue length: empty
    - ECN: not checked
- Firewall > Rules > Floating:
  - Action: Match
  - Quick: unchecked
  - Interface: WAN1
  - Direction: out
  - Family: IPv4
  - Protocol: any
  - Source: WAN1 address
  - Dest: Any
  - Gateway: WAN1
  - In/Out Pipe: WAN1UpQ / WAN1DownQ

but it also works when I apply your exact settings (except for the different bandwidth).
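When comparing GUI settings like these with what is actually loaded (e.g. via `cat /tmp/rules.limiter`, as quoted earlier in the thread), it can help to spell out the lines you would expect to see. The sketch below is a hypothetical helper, not pfSense code: the line format is copied from the `/tmp/rules.limiter` output quoted above, and reading the GUI summary 5/100/1514/10240/8192 as target/interval/quantum/limit/flows is an assumption based on the order of the FQ_CODEL fields.

```python
from dataclasses import dataclass


@dataclass
class FqCodelParams:
    # Illustrative defaults; field order assumed to match the GUI's
    # target/interval/quantum/limit/flows summary (e.g. 5/100/1514/10240/8192).
    target_ms: int = 5
    interval_ms: int = 100
    quantum: int = 1514
    limit: int = 10240
    flows: int = 1024
    ecn: bool = False


def limiter_rules(pipe: int, bw_mbit: int, fq: FqCodelParams) -> list[str]:
    """Build pipe/sched/queue lines in the format seen in /tmp/rules.limiter.

    Assumes Tail Drop on both the limiter and its child queue, and one
    queue per limiter sharing the limiter's number (as in the thread's output).
    """
    sched = (
        f"sched {pipe} config pipe {pipe} type fq_codel "
        f"target {fq.target_ms}ms interval {fq.interval_ms}ms "
        f"quantum {fq.quantum} limit {fq.limit} flows {fq.flows}"
    )
    if fq.ecn:
        sched += " ecn"
    return [
        f"pipe {pipe} config bw {bw_mbit}Mb droptail",
        sched,
        f"queue {pipe} config pipe {pipe} droptail",
    ]


if __name__ == "__main__":
    # WAN1Down as listed above: 265Mbps, Tail Drop, FQ_CODEL 5/100/1514/10240/8192, ECN off
    for line in limiter_rules(1, 265, FqCodelParams(flows=8192)):
        print(line)
```

If the expected lines for your own settings differ from what the firewall reports, that would suggest the GUI change was not applied as intended.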

@luckman212 said in QoS / Traffic Shaping / Limiters / FQ_CODEL on 22.0x:

triggering failover to my 4G LTE backup connection which does not have any shaper applied

As a side note, I also have a shaper on my 4G LTE backup that works wonders in terms of latency under load.

- Firewall > Traffic Shaper > Limiters:

-

@luckman212 I set it up exactly as you already posted in your first post.

-

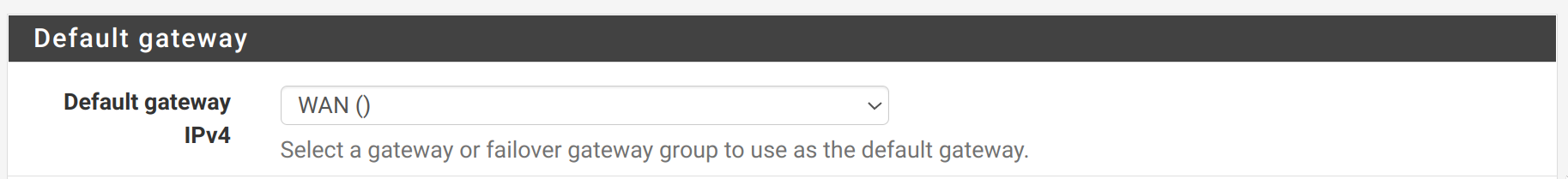

@thiasaef What version of pfSense are you running there? Do you use gateway groups? What's your System > Routing > default gw IPv4 set to?

-

What version of pfSense are you running there?

2.6.0-RELEASE (amd64)

Do you use gateway groups?

What's your System > Routing > default gw IPv4 set to?

-

@luckman212 said in QoS / Traffic Shaping / Limiters / FQ_CODEL on 22.0x:

Just read through about 9 other threads reporting various breakage with ipfw limiters on 2.6 / 22.0x

Before I lose another day, @jimp or @stephenw10 is it the case that limiters are bugged on the latest builds of pfSense? Specifically for multi-wan setups with gateway groups? It would be nice to know, otherwise if the answer is "no, everything works fine" then I will keep trying or maybe even buy TAC to figure this out because it is driving me nuts.

There is a known issue with limiters if you also have Captive Portal enabled, but that's the only problem I'm aware of at the moment:

https://redmine.pfsense.org/issues/12954

It's working fine for me on multi-WAN on my edge at home with this setup:

https://docs.netgate.com/pfsense/en/latest/recipes/codel-limiters.html

-

@jimp Are you running 22.05 snaps on that system? Any possible chance you'd share a sanitized config.xml with me?

-

22.05 snapshot, yes, but I haven't updated that system in a couple weeks, it's on a snapshot from the 14th.

No need to share config, it's exactly as described on the docs page I linked. I wrote that based on the config I have been using successfully for months. The only difference may be the queue lengths, since I have two fast WANs (1Gbit/s and 300Mbit/s), though I don't use limiters on my 1Gbit/s WAN since it's not necessary. I have to use the codel setup on my 300/30 WAN or the performance is crap under load.

A couple common mistakes people make:

- Do not over-match with the floating rules. Outbound floating rules happen after NAT so the source can only be the IP address(es) on that interface, or perhaps routed IP address blocks if you have any. Don't use a source of 'any', private addresses, or the address of other WANs. For most people the best source to use is the interface address.

- Don't re-use limiters for multiple interfaces/purposes. You should have one upload limiter+queue and one download limiter+queue for each WAN.

- Some people might need or want to exclude ICMP traffic from being put in limiters. It can mess with traceroute results and maybe give a false sense of latency that doesn't really exist. That said, any traffic not put through the limiter will potentially mess with how accurate the limiter can be when it comes to knowing how full a circuit is.

- Use large enough queue lengths on the limiter to hold any potential backlog. On my 300/30 WAN I'm using a queue length of 3000 on the limiter (parent) and I've left the default on the queues. Might be overkill, but it works for me.
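The first two mistakes in this list can be checked mechanically. Below is a minimal sketch that lints a hand-written list of floating rules; it does not read pfSense's config.xml, and the `FloatingRule` fields, the `lint` function and address labels such as "WAN1 address" are hypothetical stand-ins for what you would see in the GUI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FloatingRule:
    interface: str  # e.g. "WAN1"
    direction: str  # "in" or "out"
    source: str     # e.g. "WAN1 address"
    in_pipe: str    # queue selected as the rule's "In" pipe
    out_pipe: str   # queue selected as the rule's "Out" pipe


def lint(rules: list[FloatingRule], own_sources: dict[str, set[str]]) -> list[str]:
    """Return human-readable problems; an empty list means none were found.

    own_sources maps each interface to the sources allowed on it: its own
    address, plus any routed blocks you may have.
    """
    problems: list[str] = []
    queue_owner: dict[str, str] = {}
    for i, r in enumerate(rules):
        # Outbound floating rules match after NAT, so the source should be
        # an address that belongs to this interface, not 'any' or a LAN subnet.
        if r.direction == "out" and r.source not in own_sources.get(r.interface, set()):
            problems.append(f"rule {i}: source {r.source!r} is not an address on {r.interface}")
        # Each limiter queue should serve exactly one WAN.
        for q in (r.in_pipe, r.out_pipe):
            owner = queue_owner.setdefault(q, r.interface)
            if owner != r.interface:
                problems.append(f"rule {i}: queue {q!r} is already used on {owner}")
    return problems


if __name__ == "__main__":
    rules = [
        FloatingRule("WAN1", "out", "WAN1 address", "WAN1UpQ", "WAN1DownQ"),
        FloatingRule("WAN2", "out", "any", "WAN1UpQ", "WAN2DownQ"),  # two deliberate mistakes
    ]
    sources = {"WAN1": {"WAN1 address"}, "WAN2": {"WAN2 address"}}
    for problem in lint(rules, sources):
        print(problem)
```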

-

@jimp Thanks for the common mistakes bullet points; in particular I don't recall having seen the limiter queue length guidance before so that's especially useful. Quick question on the floating rules: for a basic single-WAN setup is there still a compelling reason to match on WAN out and WAN in as opposed to LAN in and WAN in? I certainly understand that with multi-WAN you'd lose the granularity required to assign one limiter per WAN by matching on LAN in. But with single-WAN - and especially if ovpn client tunnels are in use - it has seemed more straightforward to me to match on LAN in. Probably a dumb question, but hoping to understand whether doing so may be problematic in a way I don't understand. Thanks again.

-

If you only have one WAN and one LAN and no VPNs then matching in on LAN may be OK. One of the main reasons to do it on WAN outbound is because there is no chance you are catching local traffic in the limiter (to/from the firewall, to/from other LANs, VPNs, other unrelated WANs, etc) -- there is a ton of room for error there so for most people it's much easier to take care of it outbound on WAN instead.

Sure, you can set up a lot more rules to pass traffic to the other destinations without the limiter, but you end up adding so much extra complexity that it's just not worth the effort to avoid floating rules when they're a much cleaner solution.

-

Ok @jimp thanks for the advice. I'll probably have time this weekend to pave my box and try with stock 2.4.5, 2.5 / 2.6 and 22.01 to see if this is a config problem or some edge case (I am known for those...)

If I can't sort it by then I'll probably just plunk down for TAC so I can work on it with you guys.

-

@jimp Today I did 2 things:

- updated to 22.05.a.20220331.1603 (no change)

- factory reset my box, all defaults. Then ONLY set up the limiters and floating rule in accordance with the official guide and re-tested. Sadly I got the same results (wildly fluctuating speeds, failed speedtests, C or D grade on bufferbloat tests)

Without the limiters enabled, I get a perfect 880/940 result on various speedtests, and everything basically works well—except when my upload gets saturated. Then latency spikes >200ms and we start having problems with VoIP, Zoom, Teams etc.

I'm at the end of my rope... my "WAF" score is very low right now and I need to fix this. I'm totally willing to buy TAC to continue troubleshooting, but do you think that will be helpful? I can't imagine this is a config issue at this point, given the factory reset... could this possibly be a hardware problem?? (using a 6100)

-

What limits are you setting for your circuit? What happens if you set them a lot lower? For example, if you have a 1G/1G line what happens if you set them at 500/500? 300/300?

I wouldn't expect results like you are seeing unless the limits are higher than what the circuit is actually capable of pushing, so it isn't doing much to help because it doesn't realize the circuit is loaded.

It's also possible the queue lengths are way too low for the speed.
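For a rough sense of scale on "too low for the speed": a full queue of N packets holds N × packet size × 8 bits, which takes that many bits divided by the limiter rate to drain. The sketch below assumes every packet is a full 1500-byte frame (real traffic mixes in smaller ones, so for a given packet count these are upper bounds on drain time); `drain_ms` and `packets_to_hold` are hypothetical helpers, not pfSense settings, and the 100 ms burst allowance is an arbitrary example.

```python
import math


def drain_ms(packets: int, rate_mbit: float, packet_bytes: int = 1500) -> float:
    """Milliseconds needed to transmit a completely full queue at the limiter rate."""
    bits = packets * packet_bytes * 8
    return bits / (rate_mbit * 1_000_000) * 1000


def packets_to_hold(ms: float, rate_mbit: float, packet_bytes: int = 1500) -> int:
    """Smallest queue length (in packets) that can absorb `ms` worth of traffic at the rate."""
    bits = rate_mbit * 1_000_000 * ms / 1000
    return math.ceil(bits / (packet_bytes * 8))


if __name__ == "__main__":
    # jimp's 3000-packet queue on a 300 Mbit/s limiter vs. the same length at 1 Gbit/s
    print(f"3000 pkts @  300 Mbit/s: {drain_ms(3000, 300):.0f} ms")
    print(f"3000 pkts @ 1000 Mbit/s: {drain_ms(3000, 1000):.0f} ms")
    print(f"5000 pkts @ 1000 Mbit/s: {drain_ms(5000, 1000):.0f} ms")
    # Queue length needed to buffer an assumed 100 ms burst at 1 Gbit/s
    print(f"100 ms @ 1000 Mbit/s: {packets_to_hold(100, 1000)} pkts")
```

By this arithmetic, 3000 packets hold roughly 120 ms of traffic at 300 Mbit/s but only about 36 ms at 1 Gbit/s, which is one way to read the point that a length suited to one speed can be too short for a faster circuit.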

-

It's a 1G FiOS circuit; real world, I consistently get 880 down and 939 up. Latency to 8.8.8.8 is 4ms.

```
[22.05-DEVELOPMENT][root@r1.lan]/root: ping 8.8.8.8
PING 8.8.8.8 (8.8.8.8): 56 data bytes
64 bytes from 8.8.8.8: icmp_seq=0 ttl=118 time=4.097 ms
64 bytes from 8.8.8.8: icmp_seq=1 ttl=118 time=4.315 ms
64 bytes from 8.8.8.8: icmp_seq=2 ttl=118 time=4.118 ms
64 bytes from 8.8.8.8: icmp_seq=3 ttl=118 time=4.004 ms
^C
--- 8.8.8.8 ping statistics ---
4 packets transmitted, 4 packets received, 0.0% packet loss
round-trip min/avg/max/stddev = 4.004/4.133/4.315/0.113 ms
```

I played around with the queue length. Tried leaving it empty/default, as well as 3000 and then 5000. Didn't try higher than that.

I also had the same thought as you- let's just see if the limiter is even working at all, so I tried setting it much lower e.g. 50Mbit or 100Mbit, and that didn't work (as seen in my screenshots from the post above).
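The idle baseline above is the figure to compare against when judging bufferbloat: the same ping run while a speed test saturates the link shows how much delay the queues add. The sketch below uses hypothetical helpers (not part of pfSense) to recompute the ping summary from the four RTTs quoted above; the population standard deviation matches the 0.113 ms in that output. `added_latency_ms` expects loaded samples you would capture yourself during a saturating test; none are included here.

```python
import statistics


def ping_summary(rtts_ms: list[float]) -> tuple[float, float, float, float]:
    """Return (min, avg, max, stddev) in the order ping prints them."""
    return (
        min(rtts_ms),
        statistics.fmean(rtts_ms),
        max(rtts_ms),
        statistics.pstdev(rtts_ms),  # population stddev
    )


def added_latency_ms(idle_ms: list[float], loaded_ms: list[float]) -> float:
    """Median RTT under load minus median RTT at idle."""
    return statistics.median(loaded_ms) - statistics.median(idle_ms)


if __name__ == "__main__":
    idle = [4.097, 4.315, 4.118, 4.004]  # icmp_seq 0..3 from the output above
    mn, avg, mx, sd = ping_summary(idle)
    print(f"round-trip min/avg/max/stddev = {mn:.3f}/{avg:.3f}/{mx:.3f}/{sd:.3f} ms")
```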