Performance issue on virtualised pfSense

-

@stephenw10, there are no loops; traffic is 50-100 Mb/s in both directions, as I mentioned.

I'm just curious: is it normal to see 40-70% utilisation of one core for the system process [kernel{vtnet1 rxq 0}]? How much traffic can I pass through pfSense on my 10Gb NIC without performance degradation? Could it have something to do with the vNIC queue size or the host's KVM/Linux settings?

-

Hmm, I mean I'd expect a single core to pass a lot more than that, but it will obviously pass a lot more with multiple cores and multiqueue NICs.
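For a router, CPU load tracks packets per second more closely than Mb/s, because most of the work (pf rule and state lookups, queue servicing) is done per packet. The sketch below converts the 50-100 Mb/s quoted above into packet rates, using average packet sizes derived from the host `ethtool -S enp130s0f0` counters posted later in the thread. Those counters cover all traffic on the host port since they were last reset, so treat the result as a rough estimate rather than a pfSense-specific measurement.

```python
def packets_per_second(mbps: float, avg_packet_bytes: float) -> float:
    """Packets per second needed to carry `mbps` megabits per second."""
    return mbps * 1_000_000 / (avg_packet_bytes * 8)


# Average packet sizes from the host's `ethtool -S enp130s0f0` counters in this
# thread (all traffic on that port, not only pfSense's, since the last reset).
RX_AVG_BYTES = 221848242143190 / 452802863050  # rx_bytes / rx_packets
TX_AVG_BYTES = 117663001808396 / 394346355386  # tx_bytes / tx_packets

for mbps in (50, 100):
    rx_pps = packets_per_second(mbps, RX_AVG_BYTES)
    tx_pps = packets_per_second(mbps, TX_AVG_BYTES)
    print(f"{mbps} Mb/s ~ {rx_pps:,.0f} pps (rx mix) / {tx_pps:,.0f} pps (tx mix)")
```

With average sizes of roughly 490 bytes (rx) and 298 bytes (tx), 100 Mb/s works out to a few tens of thousands of packets per second per direction.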

Do you have multiqueue disabled in the sysctls?

    [2.7.0-DEVELOPMENT][admin@cedev.stevew.lan]/root: sysctl hw.vtnet
    hw.vtnet.rx_process_limit: 512
    hw.vtnet.mq_max_pairs: 8
    hw.vtnet.mq_disable: 0
    hw.vtnet.lro_disable: 0
    hw.vtnet.tso_disable: 0
    hw.vtnet.csum_disable: 0

Or is it disabled in KVM?

Do those NICs show as multiqueue in the boot logs?

What changed between the 10% and 50% use situations?
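A small Python sketch for reading `sysctl hw.vtnet` output like the listing above and pulling out the multiqueue tunables. The `SAMPLE` text is copied from that listing; the function name `parse_sysctl` is illustrative. Note, as explained later in the thread, that pfSense disables vtnet multiqueue at build time for ALTQ, so `hw.vtnet.mq_disable: 0` on its own does not show that multiple queues are actually in use.

```python
SAMPLE = """\
[2.7.0-DEVELOPMENT][admin@cedev.stevew.lan]/root: sysctl hw.vtnet
hw.vtnet.rx_process_limit: 512
hw.vtnet.mq_max_pairs: 8
hw.vtnet.mq_disable: 0
hw.vtnet.lro_disable: 0
hw.vtnet.tso_disable: 0
hw.vtnet.csum_disable: 0
"""


def parse_sysctl(output: str, prefix: str = "hw.vtnet.") -> dict:
    """Parse 'name: value' lines whose name starts with `prefix`.

    Lines that do not match (such as the shell prompt) are skipped.
    """
    values = {}
    for line in output.splitlines():
        name, sep, value = line.partition(": ")
        if sep and name.startswith(prefix):
            values[name] = value.strip()
    return values


tunables = parse_sysctl(SAMPLE)
# Only reflects the loader tunable; a compile-time change can still force one queue.
mq_disabled = tunables.get("hw.vtnet.mq_disable") == "1"
print("mq_disable tunable set:", mq_disabled)
print("mq_max_pairs:", tunables.get("hw.vtnet.mq_max_pairs"))
```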

-

@stephenw10, nothing changed from the pfSense perspective when we noticed the performance degradation.

Regarding multiqueue capability, on the pfSense guest:

    /root: sysctl hw.vtnet
    hw.vtnet.rx_process_limit: 512
    hw.vtnet.mq_max_pairs: 8
    hw.vtnet.mq_disable: 0
    hw.vtnet.lro_disable: 0
    hw.vtnet.tso_disable: 0
    hw.vtnet.csum_disable: 0

On the Linux host:

    # ethtool -S enp130s0f0
    NIC statistics:
      rx_noskb_drops: 0
      rx_nodesc_trunc: 0
      tx_bytes: 117663001808396
      tx_good_bytes: 117663001808396
      tx_bad_bytes: 0
      tx_packets: 394346355386
      tx_bad: 0
      tx_pause: 0
      tx_control: 0
      tx_unicast: 393533426208
      tx_multicast: 778204848
      tx_broadcast: 34724330
      tx_lt64: 0
      tx_64: 133721490546
      tx_65_to_127: 77339988519
      tx_128_to_255: 62782083547
      tx_256_to_511: 62501260602
      tx_512_to_1023: 18919528903
      tx_1024_to_15xx: 21005284882
      tx_15xx_to_jumbo: 18076718387
      tx_gtjumbo: 0
      tx_collision: 0
      tx_single_collision: 0
      tx_multiple_collision: 0
      tx_excessive_collision: 0
      tx_deferred: 0
      tx_late_collision: 0
      tx_excessive_deferred: 0
      tx_non_tcpudp: 0
      tx_mac_src_error: 0
      tx_ip_src_error: 0
      rx_bytes: 221848242143190
      rx_good_bytes: 221848242143190
      rx_bad_bytes: 0
      rx_packets: 452802863050
      rx_good: 452802863050
      rx_bad: 0
      rx_pause: 0
      rx_control: 0
      rx_unicast: 452695437804
      rx_multicast: 49781922
      rx_broadcast: 57643324
      rx_lt64: 0
      rx_64: 325328389
      rx_65_to_127: 130124419365
      rx_128_to_255: 98720637028
      rx_256_to_511: 95056512114
      rx_512_to_1023: 32474881787
      rx_1024_to_15xx: 96101084367
      rx_15xx_to_jumbo: 0
      rx_gtjumbo: 0
      rx_bad_gtjumbo: 0
      rx_overflow: 0
      rx_false_carrier: 0
      rx_symbol_error: 0
      rx_align_error: 0
      rx_length_error: 0
      rx_internal_error: 0
      rx_nodesc_drop_cnt: 0
      tx_merge_events: 290566742
      tx_tso_bursts: 0
      tx_tso_long_headers: 0
      tx_tso_packets: 0
      tx_tso_fallbacks: 0
      tx_pushes: 3305249032
      tx_pio_packets: 0
      tx_cb_packets: 1701382
      rx_reset: 0
      rx_tobe_disc: 0
      rx_ip_hdr_chksum_err: 0
      rx_tcp_udp_chksum_err: 11869
      rx_inner_ip_hdr_chksum_err: 0
      rx_inner_tcp_udp_chksum_err: 0
      rx_outer_ip_hdr_chksum_err: 0
      rx_outer_tcp_udp_chksum_err: 0
      rx_eth_crc_err: 0
      rx_mcast_mismatch: 26
      rx_frm_trunc: 0
      rx_merge_events: 0
      rx_merge_packets: 0
      tx-0.tx_packets: 394346403017
      tx-1.tx_packets: 2
      tx-2.tx_packets: 0
      tx-3.tx_packets: 0
      tx-4.tx_packets: 0
      tx-5.tx_packets: 0
      tx-6.tx_packets: 0
      tx-7.tx_packets: 0
      tx-8.tx_packets: 1
      tx-9.tx_packets: 0
      tx-10.tx_packets: 2
      tx-11.tx_packets: 3
      rx-0.rx_packets: 35352224928
      rx-1.rx_packets: 35097722216
      rx-2.rx_packets: 34532357156
      rx-3.rx_packets: 50495387611
      rx-4.rx_packets: 36002566213
      rx-5.rx_packets: 34079572593
      rx-6.rx_packets: 38382266621
      rx-7.rx_packets: 38362390639
      rx-8.rx_packets: 33880795280
      rx-9.rx_packets: 48521808392
      rx-10.rx_packets: 34180770870
      rx-11.rx_packets: 33915051801

-

Hmm, actually, the ALTQ changes in pfSense prevent multiqueue on vtnet (as I found after some playing with settings!).

That load is where the actual pf load shows up. Has anything changed there? More packages? Longer rule lists?

Steve
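The per-queue counters in the host `ethtool -S` output above can be summarised to show how evenly traffic is spread across queues. This sketch uses only the numbers posted in the thread; `busiest_queue` is an illustrative helper name. On these counters the host port receives on all 12 queues fairly evenly but transmits almost everything on `tx-0`. The thread does not establish why, so read this as an observation rather than a diagnosis.

```python
# Per-queue packet counters from the host's `ethtool -S enp130s0f0` output.
TX_QUEUES = [394346403017, 2, 0, 0, 0, 0, 0, 0, 1, 0, 2, 3]  # tx-0 .. tx-11
RX_QUEUES = [35352224928, 35097722216, 34532357156, 50495387611,
             36002566213, 34079572593, 38382266621, 38362390639,
             33880795280, 48521808392, 34180770870, 33915051801]  # rx-0 .. rx-11


def busiest_queue(counts):
    """Return (queue index, share of all packets) for the busiest queue."""
    total = sum(counts)
    index = max(range(len(counts)), key=lambda i: counts[i])
    return index, counts[index] / total


for name, counts in (("tx", TX_QUEUES), ("rx", RX_QUEUES)):
    index, share = busiest_queue(counts)
    even = 1 / len(counts)  # share each queue would get with a perfect spread
    print(f"{name}: busiest is {name}-{index} with {share:.1%} "
          f"(an even spread would be {even:.1%} per queue)")
```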

-

@stephenw10 said in Performance issue on virtualised pfSense:

That load is where the actual pf load shows up. Has anything changed there? More packages? Longer rule lists?

We don't use shaping on pfSense.

-

That doesn't matter; multiqueue is disabled for vtnet(4) in the pfSense build so that ALTQ can run on it, whether or not ALTQ is actually used.

-

@stephenw10 Yeah, multiqueue doesn't work for vtnet on pfSense. I requested it on Redmine, as I noticed they added a toggle for the Hyper-V network driver, but the response was that because it's a compile-time-only flag, they won't be able to add a toggle.

-

@chrcoluk, @stephenw10, could you please explain how multiqueue capability affects performance? Are there ways to mitigate it, for example adding more CPU/RAM, or is it just a limit of a virtualised appliance's routing capability?

-

The NICs can only have one Rx and one Tx queue, which means they are only serviced by one CPU core. So to go faster you need that CPU core to run faster; adding more CPU cores doesn't help.

That's particularly true here, where you are running as a 'router on a stick' and so only have one NIC/queue pair doing all the work. Nothing has changed in that respect, though. There's nothing that should have suddenly increased the load for the same throughput.

Steve
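An idealised model of the point above: if each queue pair is serviced by one core, only `min(queues, cores)` cores can forward packets in parallel, so with a single queue pair extra cores add nothing. `PER_CORE_PPS` is a made-up placeholder, not a figure from this thread, and the model assumes perfectly balanced queues and linear scaling, which real systems will not quite reach.

```python
def ceiling_pps(per_core_pps: float, nic_queues: int, cpu_cores: int) -> float:
    """Idealised upper bound on forwarding rate.

    Each Rx/Tx queue pair is serviced by one core, so at most
    min(nic_queues, cpu_cores) cores can process packets in parallel.
    """
    return per_core_pps * min(nic_queues, cpu_cores)


PER_CORE_PPS = 500_000  # hypothetical per-core rate, NOT measured in this thread

for cores in (2, 4, 8):
    single = ceiling_pps(PER_CORE_PPS, 1, cores)  # e.g. vtnet with multiqueue off
    multi = ceiling_pps(PER_CORE_PPS, 8, cores)   # e.g. an 8-queue NIC
    print(f"{cores} cores: 1 queue -> {single:,.0f} pps, 8 queues -> {multi:,.0f} pps")
```

With one queue the ceiling stays flat however many cores you add; only a faster core raises it.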

-

@stephenw10, OK, thanks for the explanation.

If we used smart NICs like the Intel X710, do you think the network could perform better under the same conditions? Or is it just a limit of having one dedicated CPU core to process network traffic at a ~100 Mb/s rate? Can a smart NIC process network traffic in hardware instead of software? Or is it better to set up a pfSense cluster on separate servers?

-

Any multiqueue NIC can spread the load across multiple CPU cores for most traffic types. That includes other NIC types in KVM, such as vmxnet.

Other hardware offloading is not usually of much use in pfSense, or any router, where the router is not the end point for TCP connections. So 'TCP off-loading' is not supported.

Steve
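Multiqueue NICs commonly spread receive traffic with Receive Side Scaling (RSS): a Toeplitz hash over the flow's addresses and ports picks the queue, so every packet of one flow lands on the same queue and core. The sketch below implements the Toeplitz hash for IPv4 TCP/UDP flows. The key is the widely published Microsoft sample key (real drivers may use a different or random key), the flows use documentation address ranges, and taking the hash modulo the queue count is a simplification of the indirection table real NICs use. One consequence: a single flow is still handled by a single core, even on a multiqueue NIC.

```python
import ipaddress
import struct

# Widely published sample RSS key; real drivers may use another or a random one.
RSS_KEY = bytes([
    0x6d, 0x5a, 0x56, 0xda, 0x25, 0x5b, 0x0e, 0xc2,
    0x41, 0x67, 0x25, 0x3d, 0x43, 0xa3, 0x8f, 0xb0,
    0xd0, 0xca, 0x2b, 0xcb, 0xae, 0x7b, 0x30, 0xb4,
    0x77, 0xcb, 0x2d, 0xa3, 0x80, 0x30, 0xf2, 0x0c,
    0x6a, 0x42, 0xb7, 0x3b, 0xbe, 0xac, 0x01, 0xfa,
])


def toeplitz_hash(data: bytes, key: bytes = RSS_KEY) -> int:
    """For every set input bit i (MSB first), XOR in key bits i..i+31."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    if key_bits < len(data) * 8 + 32:
        raise ValueError("key too short for input")
    result = 0
    for i in range(len(data) * 8):
        if data[i // 8] & (0x80 >> (i % 8)):
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def flow_to_queue(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                  num_queues: int) -> int:
    """Map an IPv4 TCP/UDP flow (src IP, dst IP, src port, dst port) to a queue."""
    data = (ipaddress.IPv4Address(src_ip).packed
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!HH", src_port, dst_port))
    # Simplification: real NICs index an indirection table with the low hash bits.
    return toeplitz_hash(data) % num_queues


# Hypothetical flows differing only in source port, spread over 4 queues.
for port in range(40000, 40008):
    q = flow_to_queue("192.0.2.10", "198.51.100.5", port, 443, num_queues=4)
    print(f"192.0.2.10:{port} -> 198.51.100.5:443 lands on queue {q}")
```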

-

@stephenw10, how can I know whether my Ethernet card will work under the vmxnet driver in KVM with multiqueue capability? Actually, I was only expecting performance degradation at speeds above ~1 Gb/s. Do you think running pfSense on a bare-metal server could give me performance close to 10 Gb/s firewalling capacity?

Let's say I have the same hardware I'm hosting pfSense on now: will it help if I set it up natively, without KVM? Will I have multiqueue capability for my 10Gb NICs?

-

It doesn't matter what the hardware is as long as the hypervisor supports it. Unless you are using PCI passthrough, the hypervisor presents the NIC to the VM as whatever type you've configured. I'm using Proxmox here, which is KVM, and vmxnet is one of the NIC types it can present.

-

@stephenw10, is there any chance of changing the virtio network driver to vmxnet in virsh and getting a multiqueue NIC? Changing such settings is a big deal in our environment, which is why I'm asking. If it were in my lab I would easily test it, but if I do it now, I may lose the virtual appliance and access to it. Is it even worth trying to change virtio to vmxnet?

-

@shshs said in Performance issue on virtualised pfSense:

Is it even worth trying to change virtio to vmxnet?

Only if you're not seeing the throughput you need IMO. High CPU use on one core is not a problem until it hits 100% and you need more.

Steve
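Following the rule of thumb above, one way to track headroom is to extrapolate the single-core ceiling from the observed utilisation. This assumes CPU cost rises linearly with traffic and the packet mix stays constant, which is only approximately true (utilisation readings at low load can overstate the per-packet cost). The inputs are the 40-70% and 50-100 Mb/s ranges quoted earlier in the thread, paired as worst and best cases because the thread does not say which reading went with which load; `saturation_mbps` is an illustrative name.

```python
def saturation_mbps(current_mbps: float, core_util_percent: float) -> float:
    """Throughput at which one core would reach 100%, by linear extrapolation.

    Assumes CPU cost scales linearly with traffic and the packet mix stays
    the same; real systems are rarely that tidy.
    """
    if not 0 < core_util_percent <= 100:
        raise ValueError("core utilisation must be in (0, 100]")
    return current_mbps * 100 / core_util_percent


# Ranges quoted in the thread: 40-70% of one core at 50-100 Mb/s.
worst = saturation_mbps(50, 70)   # low traffic, high CPU
best = saturation_mbps(100, 40)   # high traffic, low CPU
print(f"Estimated single-core ceiling: {worst:.0f} to {best:.0f} Mb/s")
```

Rechecking the estimate as traffic grows is more useful than a single reading, since it only needs acting on as the core approaches 100%.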

-

@stephenw10, thanks a lot! How can I verify whether a NIC is multiqueue, other than checking for the vmxnet driver?

-

Usually by checking the man page like: vmx(4)

Most drivers don't require anything; vmx has specific instructions. Most drivers support multiple hardware chips, so the number of queues available will depend on the chip.

Drivers that do support multiqueue will be limited by the number of CPU cores or the number of queues the hardware supports, whichever is smaller. So at boot you may see:

    ix3: <Intel(R) X553 L (1GbE)> mem 0x80800000-0x809fffff,0x80c00000-0x80c03fff at device 0.1 on pci9
    ix3: Using 2048 TX descriptors and 2048 RX descriptors
    ix3: Using 2 RX queues 2 TX queues
    ix3: Using MSI-X interrupts with 3 vectors
    ix3: allocated for 2 queues
    ix3: allocated for 2 rx queues

That's on a 4100, but on a 6100 the same NIC shows:

    ix3: <Intel(R) X553 L (1GbE)> mem 0x80800000-0x809fffff,0x80c00000-0x80c03fff at device 0.1 on pci10
    ix3: Using 2048 TX descriptors and 2048 RX descriptors
    ix3: Using 4 RX queues 4 TX queues
    ix3: Using MSI-X interrupts with 5 vectors
    ix3: allocated for 4 queues
    ix3: allocated for 4 rx queues

Steve
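To check queue counts without reading the whole boot log, a small parser can pull out the `Using N RX queues N TX queues` lines. The regex matches the wording in the ix3 boot logs above; other drivers may print different messages, so an empty result does not prove a NIC is single-queue. On FreeBSD-based systems the boot messages are typically kept in `/var/run/dmesg.boot`. The names `QUEUE_LINE` and `queue_counts` are illustrative.

```python
import re

# Matches lines like "ix3: Using 2 RX queues 2 TX queues" as in the logs above;
# other drivers may word their queue messages differently.
QUEUE_LINE = re.compile(
    r"(?P<ifname>\w+): Using (?P<rx>\d+) RX queues (?P<tx>\d+) TX queues")


def queue_counts(boot_log: str) -> dict:
    """Return {interface: (rx_queues, tx_queues)} found in a boot log."""
    return {m["ifname"]: (int(m["rx"]), int(m["tx"]))
            for m in QUEUE_LINE.finditer(boot_log)}


LOG_4100 = """ix3: Using 2048 TX descriptors and 2048 RX descriptors
ix3: Using 2 RX queues 2 TX queues
ix3: Using MSI-X interrupts with 3 vectors"""

LOG_6100 = """ix3: Using 2048 TX descriptors and 2048 RX descriptors
ix3: Using 4 RX queues 4 TX queues
ix3: Using MSI-X interrupts with 5 vectors"""

print("4100:", queue_counts(LOG_4100))
print("6100:", queue_counts(LOG_6100))
```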

-

@stephenw10, we don't have a vmxnet driver in KVM; where did you get it?

-

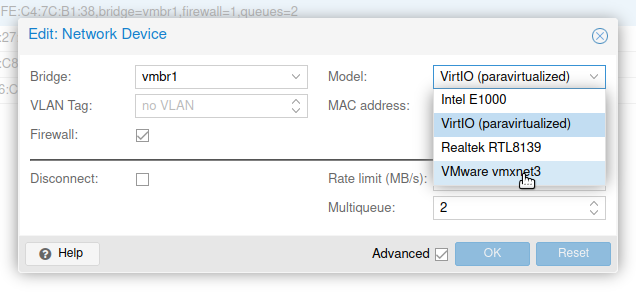

It's available by default in Proxmox:

And since that is built on KVM, I would assume it can be used there too. I have no idea how to add it, though.

Steve
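For libvirt users (the `virsh` case asked about above), the NIC type is the `<model type='...'/>` element of each `<interface>` in the domain XML. The sketch below rewrites that element with Python's standard `xml.etree.ElementTree`; the sample XML, the domain name `pfsense` and the bridge `br0` are hypothetical. It assumes the host's QEMU build provides a `vmxnet3` device (`qemu-system-x86_64 -device help` lists the available models), and it changes every interface, which you may not want. A possible workflow is `virsh dumpxml <domain>` to a file, run the edit, review the diff, then `virsh define <file>` and restart the VM, ideally on a lab copy first. pfSense should then see vmx(4) interfaces instead of vtnet(4), so expect to reassign interfaces; whether vmxnet3 under KVM actually gives pfSense multiple queues is still an open question in this thread.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed libvirt domain XML as `virsh dumpxml` might print it.
SAMPLE_DOMAIN = """<domain type='kvm'>
  <name>pfsense</name>
  <devices>
    <interface type='bridge'>
      <source bridge='br0'/>
      <model type='virtio'/>
    </interface>
  </devices>
</domain>"""


def set_nic_model(domain_xml: str, new_model: str = "vmxnet3") -> str:
    """Return the domain XML with every <interface> using <model type=new_model/>.

    Other interface settings are left untouched; review any virtio-specific
    options (for example <driver queues=...>) by hand afterwards.
    """
    root = ET.fromstring(domain_xml)
    for iface in root.iter("interface"):
        model = iface.find("model")
        if model is None:  # no explicit model element: add one
            model = ET.SubElement(iface, "model")
        model.set("type", new_model)
    return ET.tostring(root, encoding="unicode")


print(set_nic_model(SAMPLE_DOMAIN))
```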

-

@stephenw10 Yeah, I forgot Proxmox supports 'vmxnet'. I assume it's not as optimised as it is in ESXi, but I do wonder whether a multiqueue 'vmxnet' on Proxmox is more capable than a single-queue 'vtnet'. It will be interesting to find out.